bakllava

Maintainer: lucataco

38

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | No paper link provided |

Model overview

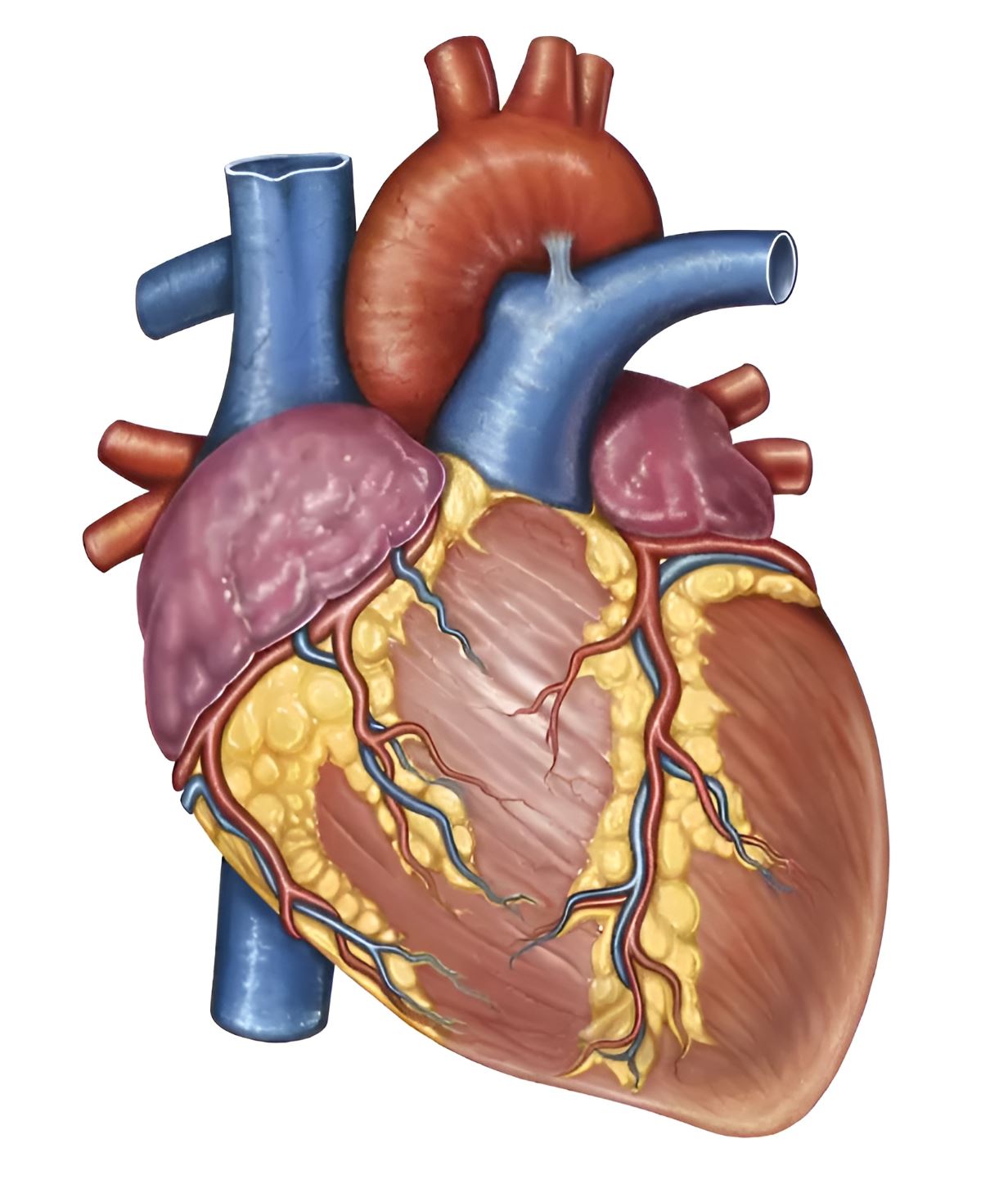

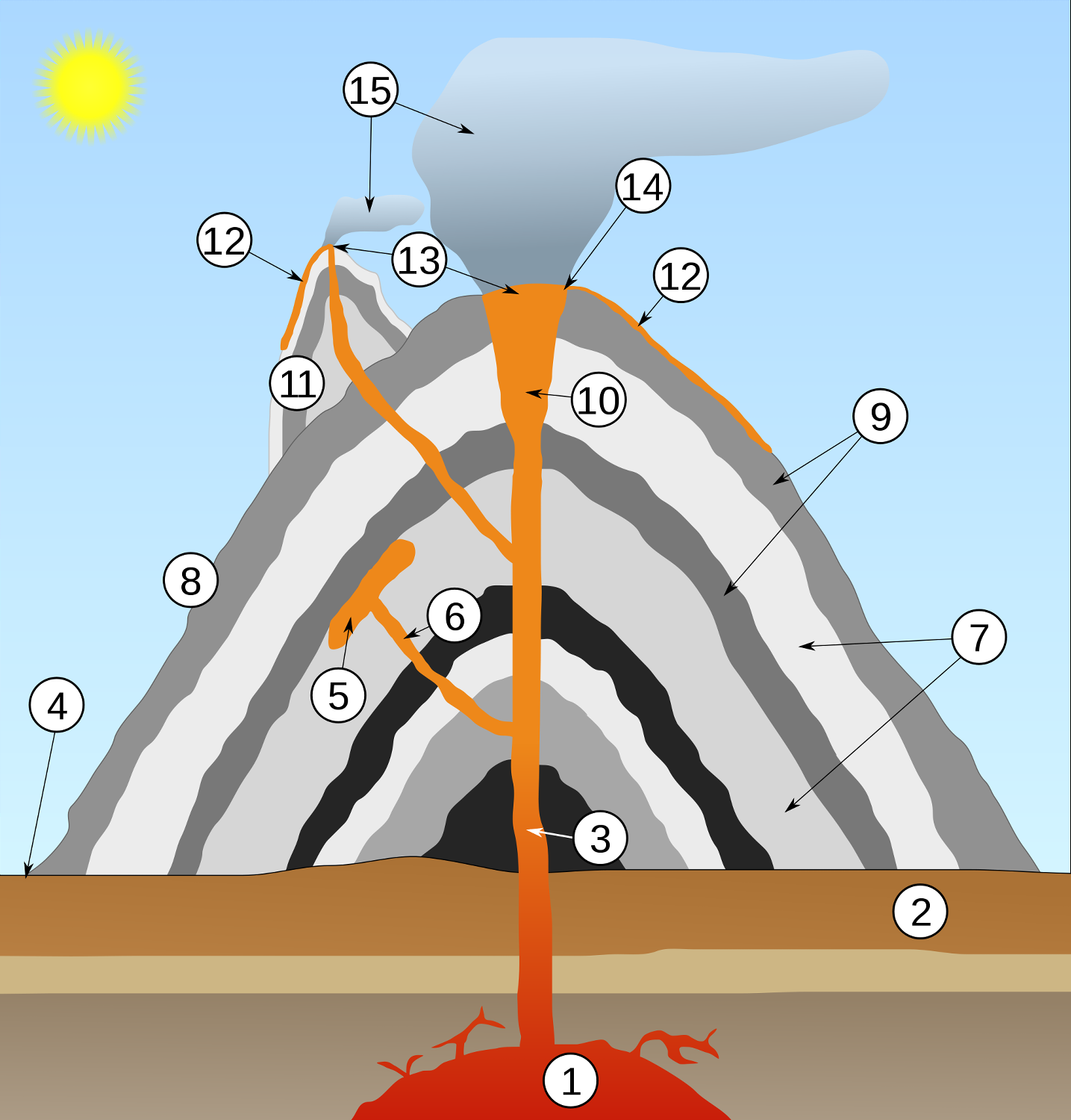

BakLLaVA-1 is a vision-language model developed by the SkunkworksAI team. It pairs a Mistral 7B base language model with the LLaVA 1.5 architecture, so it handles language understanding and generation as well as visual tasks such as image captioning and visual question answering.

The model is similar to other vision-language models such as DeepSeek-VL and LLaVA v1.6 (Mistral-7B), which also combine language and vision capabilities in a single model.

Model inputs and outputs

BakLLaVA-1 takes two main inputs: an image and a prompt. The image can be in various formats, and the prompt is a natural language instruction or question about the image. The model then generates a textual output, which could be a description, analysis, or answer related to the input image and prompt.

Inputs

- Image: An input image in various formats

- Prompt: A natural language instruction or question about the input image

Outputs

- Text: A generated textual response describing, analyzing, or answering the prompt in relation to the input image
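The input/output contract above can be sketched as a request to Replicate's HTTP predictions endpoint. This is a minimal sketch rather than the maintainer's own client code: the input keys `image` and `prompt` are assumed from the Inputs list, and the image URL, version id, and API token are placeholders to be replaced with values from the model page. The request is only built, not sent.

```python
import json
import urllib.request

# Replicate's HTTP endpoint for creating predictions.
REPLICATE_PREDICTIONS_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(image_url, prompt, version, api_token):
    """Build (but do not send) a POST request asking the model about an image.

    The input keys "image" and "prompt" are assumptions taken from the Inputs
    list; confirm them against the model's API spec before relying on them.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    payload = {
        "version": version,  # model version id copied from the model page
        "input": {"image": image_url, "prompt": prompt},
    }
    return urllib.request.Request(
        REPLICATE_PREDICTIONS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_prediction_request(
        image_url="https://example.com/cat.jpg",  # hypothetical image URL
        prompt="What is the animal in this picture doing?",
        version="<version-id>",  # placeholder, not a real version id
        api_token="<your-api-token>",  # placeholder
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Passing the returned object to `urllib.request.urlopen` would submit the prediction; the textual answer then appears in the prediction's output once it completes.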

Capabilities

BakLLaVA-1 is capable of a wide range of vision and language tasks, including image captioning, visual question answering, and multi-modal reasoning. It can generate detailed descriptions of images, answer questions about the contents of an image, and even perform analysis and inference based on the visual and textual inputs.

What can I use it for?

BakLLaVA-1 can be useful for a variety of applications, such as:

- Automated image captioning and description generation for social media, e-commerce, or accessibility

- Visual question answering for educational or assistive technology applications

- Multimodal content creation and generation for marketing, journalism, or creative industries

- Adding image understanding to existing computer vision and natural language processing pipelines

Things to try

One interesting aspect of BakLLaVA-1 is its ability to perform cross-modal reasoning, where it interprets an image in light of the prompt. For example, you could provide an image of a particular object and ask the model to describe it in detail, or show it a scene and ask a question whose answer has to be inferred from what is visible. Because the model outputs text only, it can describe and reason about images but cannot generate them.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

llava-phi-3-mini

3

llava-phi-3-mini is a LLaVA model fine-tuned from microsoft/Phi-3-mini-4k-instruct by XTuner. It is a lightweight, state-of-the-art open model trained with the Phi-3 datasets, similar to phi-3-mini-128k-instruct and llava-phi-3-mini-gguf. The model uses the CLIP-ViT-Large-patch14-336 visual encoder and MLP projector, with a resolution of 336.

Model inputs and outputs

llava-phi-3-mini takes an image and a prompt as inputs, and generates a text output in response. The model is capable of performing a variety of multimodal tasks, such as image captioning, visual question answering, and visual reasoning.

Inputs

- Image: The input image, provided as a URL or file path.

- Prompt: The text prompt that describes the task or query the user wants the model to perform.

Outputs

- Text: The model's generated response to the input prompt, based on the provided image.

Capabilities

llava-phi-3-mini is a powerful multimodal model that can perform a wide range of tasks, such as image captioning, visual question answering, and visual reasoning. The model has been fine-tuned on a variety of datasets, including ShareGPT4V-PT and InternVL-SFT, which have improved its performance on tasks like MMMU Val, SEED-IMG, AI2D Test, ScienceQA Test, HallusionBench aAcc, POPE, GQA, and TextVQA.

What can I use it for?

You can use llava-phi-3-mini for a variety of applications that require multimodal understanding and generation, such as image-based question answering, visual storytelling, or even image-to-text translation. The model's lightweight nature and strong performance make it a great choice for projects that require efficient and effective multimodal AI capabilities.

Things to try

With llava-phi-3-mini, you can explore a range of multimodal tasks, such as generating detailed captions for images, answering questions about the contents of an image, or even describing the relationships between objects in a scene. The model's versatility and performance make it a valuable tool for anyone working on projects that combine vision and language.

flux-dev-lora

1.4K

The flux-dev-lora model is a FLUX.1-Dev LoRA explorer created by replicate/lucataco. This model is an implementation of the black-forest-labs/FLUX.1-schnell model as a Cog model. The flux-dev-lora model shares similarities with other LoRA-based models like ssd-lora-inference, fad_v0_lora, open-dalle-1.1-lora, and lora, all of which focus on leveraging LoRA technology for improved inference performance.

Model inputs and outputs

The flux-dev-lora model takes in several inputs, including a prompt, seed, LoRA weights, LoRA scale, number of outputs, aspect ratio, output format, guidance scale, output quality, number of inference steps, and an option to disable the safety checker. These inputs allow for customized image generation based on the user's preferences.

Inputs

- Prompt: The text prompt that describes the desired image to be generated.

- Seed: The random seed to use for reproducible generation.

- Hf Lora: The Hugging Face path or URL to the LoRA weights.

- Lora Scale: The scale to apply to the LoRA weights.

- Num Outputs: The number of images to generate.

- Aspect Ratio: The aspect ratio for the generated image.

- Output Format: The format of the output images.

- Guidance Scale: The guidance scale for the diffusion process.

- Output Quality: The quality of the output images, from 0 to 100.

- Num Inference Steps: The number of inference steps to perform.

- Disable Safety Checker: An option to disable the safety checker for the generated images.

Outputs

- A set of generated images in the specified format (e.g., WebP).

Capabilities

The flux-dev-lora model is capable of generating images from text prompts using a FLUX.1-based architecture and LoRA technology. This allows for efficient and customizable image generation, with the ability to control various parameters like the number of outputs, aspect ratio, and quality.

What can I use it for?

The flux-dev-lora model can be useful for a variety of applications, such as generating concept art, product visualizations, or even personalized content for marketing or social media. The ability to fine-tune the model with LoRA weights can also enable specialized use cases, like improving the model's performance on specific domains or styles.

Things to try

Some interesting things to try with the flux-dev-lora model include experimenting with different LoRA weights to see how they affect the generated images, testing the model's performance on a variety of prompts, and exploring the use of the safety checker toggle to generate potentially more creative or unusual content.
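The flux-dev-lora inputs described in this entry can be collected into a single input dictionary before a request is made. The sketch below is illustrative only: the snake_case key names (`hf_lora`, `lora_scale`, and so on) are assumed from the displayed input labels, the default values are placeholders rather than the model's real defaults, and `build_flux_lora_input` is a hypothetical helper. The only rule taken from the text is that output quality runs from 0 to 100.

```python
def build_flux_lora_input(
    prompt,
    hf_lora,
    *,
    lora_scale=1.0,        # placeholder default, not the model's documented one
    num_outputs=1,         # placeholder default
    output_format="webp",  # placeholder default
    output_quality=80,     # placeholder default
    seed=None,
):
    """Assemble an input dict for flux-dev-lora (key names are assumptions)."""
    if not 0 <= output_quality <= 100:
        # The input description gives the range 0 to 100.
        raise ValueError("output_quality must be between 0 and 100")
    if num_outputs < 1:
        raise ValueError("num_outputs must be at least 1")
    model_input = {
        "prompt": prompt,
        "hf_lora": hf_lora,
        "lora_scale": lora_scale,
        "num_outputs": num_outputs,
        "output_format": output_format,
        "output_quality": output_quality,
    }
    if seed is not None:
        # Only send a seed when reproducible generation is wanted.
        model_input["seed"] = seed
    return model_input


if __name__ == "__main__":
    example = build_flux_lora_input(
        "a watercolor painting of a lighthouse",
        "<huggingface-user>/<lora-repo>",  # placeholder LoRA path
        seed=42,
    )
    print(example)
```

Fixing the seed while varying only `lora_scale` is one way to compare how strongly a given LoRA affects the same prompt.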

vicuna-7b-v1.3

28

The vicuna-7b-v1.3 is a large language model developed by LMSYS through fine-tuning the LLaMA model on user-shared conversations collected from ShareGPT. It is designed as a chatbot assistant, capable of engaging in natural language conversations. This model is related to other Vicuna and LLaMA-based models such as vicuna-13b-v1.3, upstage-llama-2-70b-instruct-v2, llava-v1.6-vicuna-7b, and llama-2-7b-chat.

Model inputs and outputs

The vicuna-7b-v1.3 model takes a text prompt as input and generates relevant text as output. The prompt can be an instruction, a question, or any other natural language input. The model's outputs are continuations of the input text, generated based on the model's understanding of the context.

Inputs

- Prompt: The text prompt that the model uses to generate a response.

- Temperature: A parameter that controls the model's creativity and diversity of outputs. Lower temperatures result in more conservative and focused outputs, while higher temperatures lead to more exploratory and varied responses.

- Max new tokens: The maximum number of new tokens the model will generate in response to the input prompt.

Outputs

- Generated text: The model's response to the input prompt, which can be of variable length depending on the prompt and parameters.

Capabilities

The vicuna-7b-v1.3 model is capable of engaging in open-ended conversations, answering questions, providing explanations, and generating creative text across a wide range of topics. It can be used for tasks such as language modeling, text generation, and chatbot development.

What can I use it for?

The primary use of the vicuna-7b-v1.3 model is for research on large language models and chatbots. Researchers and hobbyists in natural language processing, machine learning, and artificial intelligence can use this model to explore various applications, such as conversational AI, task-oriented dialogue systems, and language generation.

Things to try

With the vicuna-7b-v1.3 model, you can experiment with different prompts to see how the model responds. Try asking it questions, providing it with instructions, or giving it open-ended prompts to see the range of its capabilities. You can also adjust the temperature and max new tokens parameters to observe how they affect the model's output.
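The effect of the temperature parameter can be illustrated with the standard temperature-scaled softmax, in which token scores are divided by the temperature before being turned into probabilities. This is a generic sketch with made-up scores, not the sampler used by the hosted vicuna-7b-v1.3 deployment, whose exact implementation is not described here.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw token scores into probabilities at a given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
    for temperature in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, temperature)
        print(temperature, [round(p, 3) for p in probs])
```

At lower temperatures the highest-scoring token takes a larger share of the probability mass, which is why outputs look more focused; at higher temperatures the distribution flattens and sampling becomes more varied.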

vicuna-13b-v1.3

35

The vicuna-13b-v1.3 is a language model developed by the lmsys team. It is based on the Llama model from Meta, with additional training to instill more capable and ethical conversational abilities. The vicuna-13b-v1.3 model is similar to other Vicuna-based models and the Llama 2 Chat models in that they all leverage the strong language understanding and generation capabilities of Llama while fine-tuning for more natural, engaging, and trustworthy conversation.

Model inputs and outputs

The vicuna-13b-v1.3 model takes a single input - a text prompt - and generates a text response. The prompt can be any natural language instruction or query, and the model will attempt to provide a relevant and coherent answer. The output is an open-ended text response, which can range from a short phrase to multiple paragraphs depending on the complexity of the input.

Inputs

- Prompt: The natural language instruction or query to be processed by the model

Outputs

- Response: The model's generated text response to the input prompt

Capabilities

The vicuna-13b-v1.3 model is capable of engaging in open-ended dialogue, answering questions, providing explanations, and generating creative content across a wide range of topics. It has been trained to be helpful, honest, and harmless, making it suitable for various applications such as customer service, education, research assistance, and creative writing.

What can I use it for?

The vicuna-13b-v1.3 model can be used for a variety of applications, including:

- Conversational AI: The model can be integrated into chatbots or virtual assistants to provide natural language interaction and task completion.

- Content Generation: The model can be used to generate text for articles, stories, scripts, and other creative writing projects.

- Question Answering: The model can be used to answer questions on a wide range of topics, making it useful for research, education, and customer support.

- Summarization: The model can be used to summarize long-form text, making it useful for quickly digesting and understanding complex information.

Things to try

Some interesting things to try with the vicuna-13b-v1.3 model include:

- Engaging the model in open-ended dialogue to see the depth and nuance of its conversational abilities.

- Providing the model with creative writing prompts and observing the unique and imaginative responses it generates.

- Asking the model to explain complex topics, such as scientific or historical concepts, and evaluating the clarity and accuracy of its explanations.

- Pushing the model's boundaries by asking it to tackle ethical dilemmas or hypothetical scenarios, and observing its responses.
