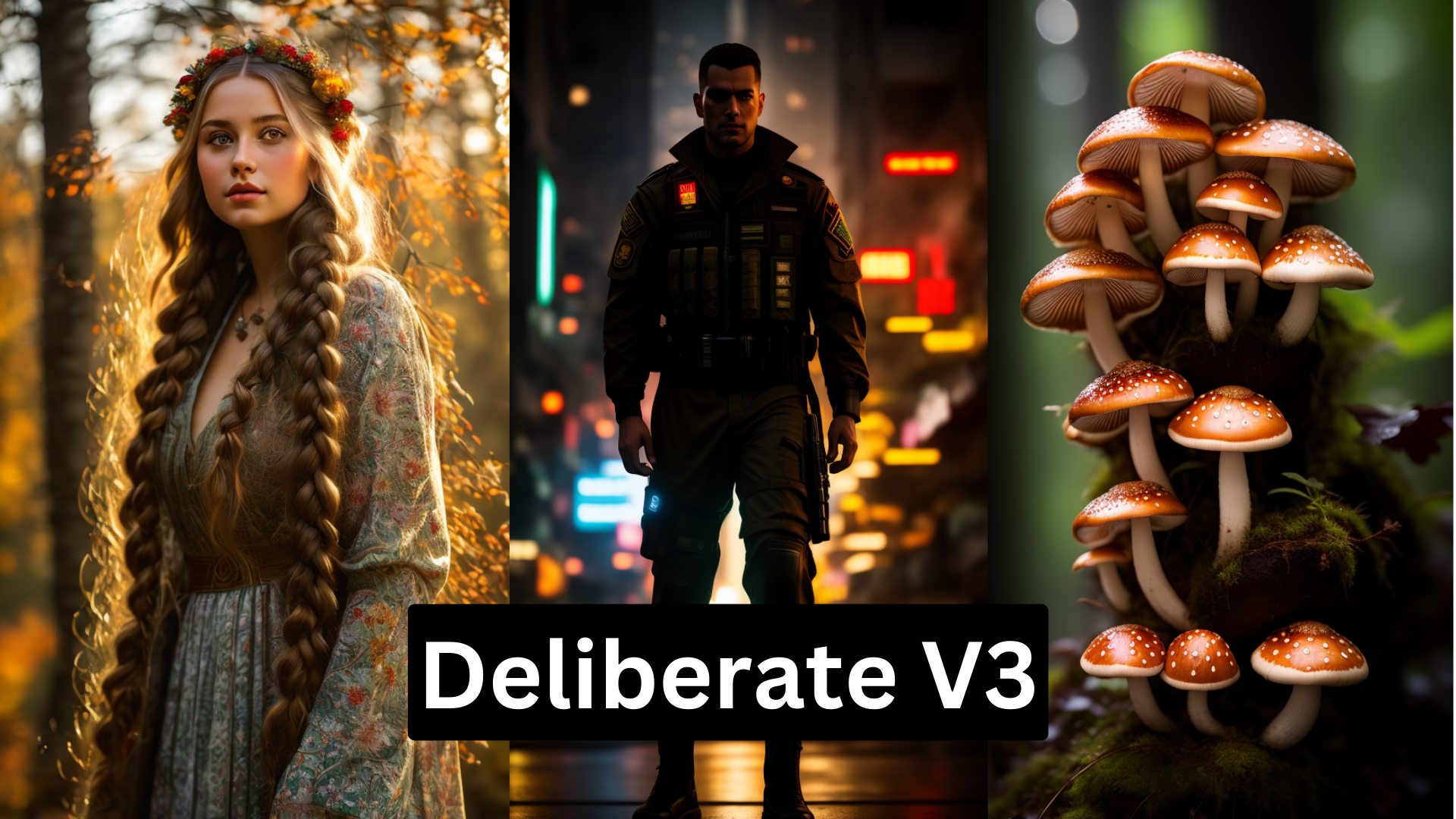

deliberate-v3

Maintainer: pagebrain


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| Github link | View on Github |
| Paper link | View on Arxiv |


Model overview

deliberate-v3 is a text-to-image model maintained by pagebrain. It shares its feature set with other models in pagebrain's lineup, such as dreamshaper-v8, epicphotogasm-v1, epicrealism-v4, realistic-vision-v5-1, and epicrealism-v5. Each of these models runs on a T4 GPU, supports negative embeddings, img2img, and inpainting, includes a safety checker, uses the KarrasDPM scheduler, and ships as a pruned fp16 safetensor.

Model inputs and outputs

The deliberate-v3 model accepts a variety of inputs, including a prompt, an optional input image for img2img or inpainting, and additional parameters like seed, width, height, guidance scale, and more. The model then generates one or more output images based on the provided inputs.

Inputs

- Prompt: The text prompt that describes the desired image.

- Image: An optional input image for img2img or inpainting mode.

- Mask: An optional input mask for the inpainting mode, where black areas will be preserved and white areas will be inpainted.

- Seed: The random seed to use for generating the output image(s).

- Width and Height: The desired width and height of the output image(s).

- Negative Prompt: Specific things to avoid in the output image.

- Prompt Strength: The strength of the prompt when using an init image.

- Num Inference Steps: The number of denoising steps to perform.

- Guidance Scale: The scale for classifier-free guidance.

- Safety Checker: Whether to enable the safety checker to filter out potentially unsafe content.
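The inputs above can be collected into a single request dictionary. The following is a minimal sketch, not the model's published schema: the snake_case key names, the default values, and the helper names `build_input` and `mode_of` are assumptions made for illustration, so check the API spec before relying on them.

```python
from typing import Any, Dict, Optional


def build_input(
    prompt: str,
    negative_prompt: str = "",
    image: Optional[str] = None,
    mask: Optional[str] = None,
    seed: Optional[int] = None,
    width: int = 512,
    height: int = 512,
    prompt_strength: float = 0.8,
    num_inference_steps: int = 30,
    guidance_scale: float = 7.5,
    safety_checker: bool = True,
) -> Dict[str, Any]:
    """Assemble an input dictionary. Key names and defaults are assumptions."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if mask is not None and image is None:
        raise ValueError("a mask only makes sense together with an input image")

    payload: Dict[str, Any] = {
        "prompt": prompt,
        "width": width,
        "height": height,
        "num_inference_steps": num_inference_steps,
        "guidance_scale": guidance_scale,
        "safety_checker": safety_checker,
    }
    # Optional inputs are omitted when unset so the service can apply its own defaults.
    if negative_prompt:
        payload["negative_prompt"] = negative_prompt
    if seed is not None:
        payload["seed"] = seed
    if image is not None:
        payload["image"] = image
        payload["prompt_strength"] = prompt_strength
    if mask is not None:
        payload["mask"] = mask
    return payload


def mode_of(payload: Dict[str, Any]) -> str:
    """Infer the generation mode from which optional inputs are present."""
    if "mask" in payload:
        return "inpainting"
    if "image" in payload:
        return "img2img"
    return "text-to-image"


# Hypothetical call with the Replicate Python client (not executed here);
# the model reference is a placeholder you would fill in yourself:
#   import replicate
#   output = replicate.run("<owner>/<model>:<version>",
#                          input=build_input("a lighthouse at dusk"))
```

Leaving unset optional inputs out of the dictionary, rather than sending empty values, avoids overriding whatever defaults the hosted model defines.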

Outputs

- Image(s): One or more generated images based on the provided inputs.

Capabilities

The deliberate-v3 model is capable of generating high-quality, realistic images based on text prompts. It can also perform img2img and inpainting tasks, allowing users to refine or modify existing images. The model's safety checker helps ensure the generated content is appropriate and does not contain harmful or explicit material.
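The mask convention for inpainting (black areas preserved, white areas inpainted) can be illustrated with a toy compositing step. This sketch only demonstrates the convention on small grayscale grids; it is not how the model inpaints internally, and the `composite` helper and its `threshold` parameter are hypothetical.

```python
from typing import List

Grid = List[List[int]]  # grayscale values, 0 (black) to 255 (white)


def composite(original: Grid, generated: Grid, mask: Grid, threshold: int = 128) -> Grid:
    """Keep original pixels under black mask areas, take generated pixels under white."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("all grids must have the same number of rows")
    result: Grid = []
    for o_row, g_row, m_row in zip(original, generated, mask):
        if not (len(o_row) == len(g_row) == len(m_row)):
            raise ValueError("all grids must have the same number of columns")
        # White (>= threshold) means "inpaint here"; black means "preserve".
        result.append([g if m >= threshold else o for o, g, m in zip(o_row, g_row, m_row)])
    return result


original = [[10, 10], [10, 10]]
generated = [[200, 200], [200, 200]]
mask = [[0, 255], [255, 0]]
print(composite(original, generated, mask))
```

Only the two positions marked white in `mask` take values from `generated`; the rest keep the original pixels.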

What can I use it for?

The deliberate-v3 model can be used for a variety of creative and practical applications. For example, you could use it to generate concept art, product visualizations, landscapes, portraits, and more. The img2img and inpainting capabilities also make it useful for photo editing and manipulation tasks. Additionally, the model's safety features make it suitable for use in commercial or professional settings where content filtering is important.

Things to try

Some interesting things to try with the deliberate-v3 model include experimenting with different prompts and negative prompts to see how they affect the generated output, using the img2img and inpainting features to enhance or modify existing images, and combining the model with other tools or techniques for more complex projects. As with any AI model, it's important to carefully review the generated content and ensure it aligns with your intended use case.
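One way to structure the prompt and negative-prompt experiments is to hold the seed fixed and vary only the text, so that differences between outputs are easier to attribute to the wording. The sketch below only builds the list of inputs; the key names and the `prompt_grid` helper are assumptions, and sending each entry to the model is left out.

```python
from itertools import product
from typing import Dict, List, Sequence, Union


def prompt_grid(
    prompts: Sequence[str],
    negative_prompts: Sequence[str],
    seed: int = 1234,
) -> List[Dict[str, Union[str, int]]]:
    """Build one input per (prompt, negative prompt) pair, all sharing one seed."""
    return [
        {"prompt": p, "negative_prompt": n, "seed": seed}
        for p, n in product(prompts, negative_prompts)
    ]


grid = prompt_grid(
    ["a portrait of an old sailor", "a foggy harbor at dawn"],
    ["", "blurry, low resolution"],
)
for entry in grid:
    print(entry)
```

Including an empty negative prompt in the sweep gives a baseline image to compare the others against.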

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

dreamshaper-v8


dreamshaper-v8 is a Stable Diffusion model developed by pagebrain that aims to produce high-quality, diverse, and flexible image generations. It leverages a variety of techniques like negative embeddings, inpainting, and safety checking to enhance the model's capabilities. This model can be compared to similar offerings like majicmix-realistic-v7 and dreamshaper-xl-turbo, which also target general-purpose image generation tasks.

Model inputs and outputs

dreamshaper-v8 accepts a variety of inputs, including a text prompt, an optional input image for img2img or inpainting, a mask for inpainting, and various settings to control the output. The model can generate multiple output images based on the provided parameters.

Inputs

- Prompt: The text description of the desired image.
- Image: An optional input image for img2img or inpainting tasks.
- Mask: An optional mask for inpainting, where black areas will be preserved, and white areas will be inpainted.
- Seed: A random seed value to control the output.
- Width/Height: The desired size of the output image.
- Num Outputs: The number of images to generate.
- Guidance Scale: The scale for classifier-free guidance.
- Num Inference Steps: The number of denoising steps.
- Safety Checker: A flag to enable or disable the safety checker.
- Negative Prompt: Attributes to avoid in the output image.
- Prompt Strength: The strength of the prompt when using an input image.

Outputs

- The generated image(s) as a URI(s).

Capabilities

dreamshaper-v8 can perform a range of image generation tasks, including text-to-image, img2img, and inpainting. The model leverages various techniques like negative embeddings and safety checking to produce high-quality, diverse, and flexible outputs. It can be used for a variety of creative projects, from art generation to product visualization.

What can I use it for?

With its versatile capabilities, dreamshaper-v8 can be a valuable tool for a wide range of applications. Artists and designers can use it to generate unique and compelling artwork, while marketers and e-commerce businesses can leverage it for product visualization and advertising. The model's ability to perform inpainting can also be useful for tasks like photo editing and restoration.

Things to try

One interesting aspect of dreamshaper-v8 is its use of negative embeddings, which can help the model avoid generating certain undesirable elements in the output. Experimenting with different negative prompts can lead to unexpected and intriguing results. Additionally, the model's img2img capabilities allow for interesting transformations and manipulations of existing images, opening up creative possibilities.


dreamshaper-v7


The dreamshaper-v7 model is a powerful AI-powered image generation system developed by pagebrain. It is similar to other models created by pagebrain, such as dreamshaper-v8, deliberate-v3, epicphotogasm-v1, realistic-vision-v5-1, and cyberrealistic-v3-3. These models share common capabilities, such as using a T4 GPU, leveraging negative embeddings, supporting img2img and inpainting, incorporating a safety checker, and utilizing KarrasDPM and pruned fp16 safetensors.

Model inputs and outputs

The dreamshaper-v7 model accepts a variety of inputs, including a text prompt, an optional input image for img2img or inpainting, and various configuration options. The outputs are one or more generated images that match the provided prompt and input.

Inputs

- Prompt: The text prompt that describes the desired image.
- Image: An optional input image for img2img or inpainting mode.
- Mask: An optional mask for inpainting mode, where black areas will be preserved and white areas will be inpainted.
- Seed: An optional random seed value for reproducibility.
- Width and Height: The desired width and height of the output image.
- Scheduler: The denoising scheduler to use, with the default being K_EULER.
- Num Outputs: The number of images to generate, up to a maximum of 4.
- Guidance Scale: The scale for classifier-free guidance, which controls the balance between the prompt and the model's own "imagination".
- Safety Checker: A toggle to enable or disable the safety checker, which can filter out potentially unsafe content.
- Negative Prompt: Text describing things the model should avoid generating in the output.
- Prompt Strength: The strength of the prompt when using an input image, where 1.0 corresponds to fully replacing the input image.
- Num Inference Steps: The number of denoising steps to perform during the generation process.

Outputs

- One or more generated images that match the provided prompt and input.

Capabilities

The dreamshaper-v7 model is capable of generating high-quality, photorealistic images based on text prompts. It can also perform img2img and inpainting tasks, where an existing image is used as a starting point for generation or modification. The model's safety checker helps ensure the output is appropriate and avoids potentially harmful or explicit content.

What can I use it for?

The dreamshaper-v7 model can be used for a variety of creative and practical applications, such as:

- Generating concept art or illustrations for games, books, or other media
- Creating unique and personalized images for social media, marketing, or advertising
- Enhancing existing images through inpainting or img2img capabilities
- Exploring and visualizing creative ideas and concepts through text-to-image generation

As with any powerful AI tool, it's important to use the dreamshaper-v7 model responsibly and ethically, considering the potential implications and impacts of the generated content.

Things to try

One interesting aspect of the dreamshaper-v7 model is its ability to generate visually striking and imaginative images based on even the most abstract or unusual prompts. Try experimenting with prompts that combine seemingly unrelated concepts or elements, or that challenge the model to depict surreal or fantastical scenes. The model's integration of negative embeddings and safety features also allows for more nuanced and controlled generation, giving users the ability to refine and fine-tune the output to their specific needs.
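The Prompt Strength input listed for dreamshaper-v7 notes that 1.0 corresponds to fully replacing the input image. A rough sketch of why, assuming the backend follows the convention used by common Stable Diffusion img2img pipelines (this page does not confirm which implementation the model uses): the strength scales how many of the denoising steps are actually run on the noised input image. The helper name `img2img_steps` is hypothetical.

```python
def img2img_steps(num_inference_steps: int, prompt_strength: float) -> int:
    """Number of denoising steps applied to the input image (assumed convention)."""
    if not 0.0 <= prompt_strength <= 1.0:
        raise ValueError("prompt_strength must be between 0.0 and 1.0")
    if num_inference_steps < 0:
        raise ValueError("num_inference_steps must be non-negative")
    # Strength 1.0 runs every step (input fully replaced); 0.0 runs none.
    return min(int(num_inference_steps * prompt_strength), num_inference_steps)


for strength in (0.0, 0.25, 0.5, 1.0):
    print(strength, img2img_steps(40, strength))
```

Under this assumption, lower strengths keep more of the input image because fewer denoising steps are applied to it.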


cyberrealistic-v3-3


cyberrealistic-v3-3 is an AI model developed by pagebrain that aims to generate highly realistic and detailed images. It is similar to other models like dreamshaper-v8, realistic-vision-v5-1, deliberate-v3, epicrealism-v2, and epicrealism-v4 in its use of a T4 GPU, negative embeddings, img2img, inpainting, safety checker, KarrasDPM, and pruned fp16 safetensor.

Model inputs and outputs

cyberrealistic-v3-3 takes a variety of inputs, including a text prompt, an optional input image for img2img or inpainting, a seed for reproducibility, and various settings to control the output. The model can generate multiple images based on the provided inputs.

Inputs

- Prompt: The text prompt that describes the desired image.
- Image: An optional input image that can be used for img2img or inpainting.
- Seed: A random seed value to ensure reproducible results.
- Width and Height: The desired width and height of the output image.
- Num Outputs: The number of images to generate.
- Guidance Scale: The scale for classifier-free guidance, which affects the balance between the prompt and the model's learned priors.
- Num Inference Steps: The number of denoising steps to perform during image generation.
- Negative Prompt: Text that specifies things the model should avoid generating in the output.
- Prompt Strength: The strength of the input image's influence on the output when using img2img.
- Safety Checker: A toggle to enable or disable the model's safety checker.

Outputs

- Images: The generated images that match the provided prompt and other input settings.

Capabilities

cyberrealistic-v3-3 is capable of generating highly realistic and detailed images based on text prompts. It can also perform img2img and inpainting, allowing users to refine or edit existing images. The model's safety checker helps ensure the generated images are appropriate and do not contain harmful content.

What can I use it for?

cyberrealistic-v3-3 can be used for a variety of creative and practical applications, such as digital art, product visualization, architectural rendering, and scientific illustration. The model's ability to generate realistic images from text prompts can be particularly useful for creative professionals and hobbyists who want to bring their ideas to life.

Things to try

With cyberrealistic-v3-3, you can experiment with different prompts to see the range of images the model can generate. Try combining prompts with specific details or using the img2img or inpainting features to refine existing images. Adjust the various settings, such as guidance scale and number of inference steps, to see how they affect the output. Explore the negative prompt feature to see how you can guide the model away from generating unwanted content.
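To build intuition for what adjusting the guidance scale does, the standard classifier-free guidance combination can be written in a few lines. This is a toy sketch on plain lists of floats rather than real noise tensors, and the function name `apply_guidance` is hypothetical; it shows the general formula, not this model's internal code.

```python
from typing import List


def apply_guidance(
    noise_uncond: List[float],
    noise_cond: List[float],
    guidance_scale: float,
) -> List[float]:
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    if len(noise_uncond) != len(noise_cond):
        raise ValueError("both predictions must have the same length")
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


uncond = [0.0, 1.0]
cond = [1.0, 3.0]
for scale in (0.0, 1.0, 2.0):
    print(scale, apply_guidance(uncond, cond, scale))
```

A scale of 1.0 reproduces the prompt-conditioned prediction, a scale of 0.0 ignores the prompt, and larger values push the result further in the direction the prompt suggests.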


realistic-vision-v5-1


The realistic-vision-v5-1 model is a text-to-image AI model developed by the creator pagebrain. It is similar to other pagebrain models like dreamshaper-v8 and majicmix-realistic-v7 that use negative embeddings, img2img, inpainting, and a safety checker. The model is powered by a T4 GPU and utilizes KarrasDPM for its scheduler.

Model inputs and outputs

The realistic-vision-v5-1 model accepts a text prompt, an optional input image, and various parameters to control the generation process. It outputs one or more generated images that match the provided prompt.

Inputs

- Prompt: The text prompt describing the image you want to generate.
- Negative Prompt: Specify things you don't want to see in the output, such as "bad quality, low resolution".
- Image: An optional input image to use for img2img or inpainting mode.
- Mask: An optional mask image to specify areas of the input image to inpaint.
- Seed: A random seed to use for generating the image. Leave blank to randomize.
- Width/Height: The desired size of the output image.
- Num Outputs: The number of images to generate (up to 4).
- Guidance Scale: The strength of the guidance towards the text prompt.
- Num Inference Steps: The number of denoising steps to perform.
- Safety Checker: Toggle whether to enable the safety checker to filter out potentially unsafe content.

Outputs

- Generated Images: One or more images matching the provided prompt.

Capabilities

The realistic-vision-v5-1 model is capable of generating highly realistic and detailed images from text prompts. It can also perform img2img and inpainting tasks, allowing you to manipulate and refine existing images. The model's safety checker helps filter out potentially unsafe or inappropriate content.

What can I use it for?

The realistic-vision-v5-1 model can be used for a variety of creative and practical applications, such as:

- Generating realistic illustrations, portraits, and scenes for use in art, design, or marketing
- Enhancing and editing existing images through img2img and inpainting
- Prototyping and visualizing ideas or concepts described in text
- Exploring creative prompts and experimenting with different text-to-image approaches

Things to try

Some interesting things to try with the realistic-vision-v5-1 model include:

- Exploring the limits of its realism by generating highly detailed natural scenes or technical diagrams
- Combining the model with other tools like GFPGAN or Real-ESRGAN to enhance and refine the output images
- Experimenting with different negative prompts to see how the model handles requests to avoid certain elements or styles
- Iterating on prompts and adjusting parameters like guidance scale and number of inference steps to achieve specific visual effects
