disco-diffusion-style

Maintainer: cjwbw

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| Github link | No Github link provided |
| Paper link | No paper link provided |

Model overview

The disco-diffusion-style model is a Stable Diffusion model fine-tuned with DreamBooth to reproduce the distinctive visual style of Disco Diffusion. It is maintained on Replicate by cjwbw, who also publishes other Stable Diffusion models such as analog-diffusion, stable-diffusion-v2, and stable-diffusion-2-1-unclip.

Model inputs and outputs

The disco-diffusion-style model takes a text prompt as input and generates one or more images as output. The prompt can describe the desired image, and the model will attempt to create a corresponding image in the Disco Diffusion style.

Inputs

- Prompt: The text description of the desired image

- Seed: A random seed value to control the image generation process

- Width/Height: The dimensions of the output image, with a maximum size of 1024x768 or 768x1024

- Number of outputs: The number of images to generate

- Guidance scale: The scale for classifier-free guidance; higher values make the image follow the prompt more closely, while lower values give the model more freedom

- Number of inference steps: The number of denoising steps to take during the image generation process
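The inputs listed above map naturally onto a single request payload. The following is a minimal Python sketch, not the model's published schema: the snake_case field names, the default values, and the helper `build_input` are assumptions for illustration. It shows one way to enforce the documented 1024x768 / 768x1024 size limit before a request is sent.

```python
from typing import Optional

# Documented size limit: at most 1024x768 (landscape) or 768x1024 (portrait).
MAX_LONG_SIDE = 1024
MAX_SHORT_SIDE = 768


def build_input(
    prompt: str,
    width: int = 768,              # illustrative default, not the model's
    height: int = 768,             # illustrative default, not the model's
    num_outputs: int = 1,
    guidance_scale: float = 7.5,   # illustrative default, not the model's
    num_inference_steps: int = 50, # illustrative default, not the model's
    seed: Optional[int] = None,
) -> dict:
    """Hypothetical helper: build an input dict with assumed field names."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    # Compare against the limit regardless of orientation.
    long_side, short_side = max(width, height), min(width, height)
    if long_side > MAX_LONG_SIDE or short_side > MAX_SHORT_SIDE:
        raise ValueError(f"{width}x{height} exceeds the 1024x768 / 768x1024 limit")
    if num_outputs < 1:
        raise ValueError("num_outputs must be at least 1")
    payload = {
        "prompt": prompt,
        "width": width,
        "height": height,
        "num_outputs": num_outputs,
        "guidance_scale": guidance_scale,
        "num_inference_steps": num_inference_steps,
    }
    # Leave the seed out when not given, assuming the service then randomizes it
    # (as the herge-style listing documents); pass one to reproduce a result.
    if seed is not None:
        payload["seed"] = seed
    return payload
```

The resulting dict could be passed as the `input` argument of `replicate.run()` in the Replicate Python client, together with the model reference shown in the API spec; the exact field names should be checked there first.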

Outputs

- Image(s): One or more generated images in the Disco Diffusion style, returned as image URLs

Capabilities

The disco-diffusion-style model can generate a wide range of images in the distinctive Disco Diffusion visual style, from abstract and surreal compositions to fantastical and whimsical scenes. The model's ability to capture the unique aesthetic of Disco Diffusion makes it a powerful tool for artists, designers, and creative professionals looking to expand their visual repertoire.

What can I use it for?

The disco-diffusion-style model can be used for a variety of creative and artistic applications, such as:

- Generating promotional or marketing materials with an eye-catching, dreamlike quality

- Creating unique and visually striking artwork for personal or commercial use

- Exploring and experimenting with the Disco Diffusion style in a more accessible and user-friendly way

By leveraging the model's capabilities, users can tap into the Disco Diffusion aesthetic without the need for specialized knowledge or training in that particular style.

Things to try

One interesting aspect of the disco-diffusion-style model is its ability to capture the nuances and subtleties of the Disco Diffusion style. Users can experiment with different prompts and parameter settings to see how the model responds, potentially unlocking unexpected and captivating results. For example, users could try combining the Disco Diffusion style with other artistic influences or genre-specific themes to create unique and compelling hybrid images.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

stable-diffusion-v2

The stable-diffusion-v2 model is a test version of Stable Diffusion hosted on Replicate and maintained by cjwbw. It is built on the Diffusers library and generates high-quality, photorealistic images from text prompts. It is related to other Stable Diffusion models such as stable-diffusion, stable-diffusion-2-1-unclip, and stable-diffusion-v2-inpainting.

Model inputs and outputs

The stable-diffusion-v2 model takes the following inputs to generate output images.

Inputs

- Prompt: The text prompt that describes the desired image. This can be a detailed description or a simple phrase.

- Seed: A random seed value that can be used to make results reproducible.

- Width and Height: The desired dimensions of the output image.

- Init Image: An initial image that can be used as a starting point for the generation process.

- Guidance Scale: A value that controls the strength of the text-to-image guidance during generation.

- Negative Prompt: A text prompt that describes what the model should not include in the generated image.

- Prompt Strength: A value that controls how strongly the initial image influences the final output.

- Number of Inference Steps: The number of denoising steps to perform during generation.

Outputs

- Generated Images: One or more images that match the provided prompt and other input parameters.

Capabilities

The stable-diffusion-v2 model can generate a wide variety of photorealistic images from text prompts, including people, animals, landscapes, and abstract concepts. It can also be fine-tuned or combined with other models to achieve specific artistic or creative goals.

What can I use it for?

The stable-diffusion-v2 model can be used for applications such as:

- Content Creation: Generate images for articles, blog posts, social media, or other digital content.

- Concept Visualization: Quickly visualize ideas or concepts by generating images from text descriptions.

- Artistic Exploration: Use the model as a creative tool to explore new artistic styles and genres.

- Product Design: Generate product mockups or prototypes from textual descriptions.

Things to try

Experiment with a wide range of prompts and input parameters to see how they affect the generated images. Try different types of prompts, such as detailed descriptions, abstract concepts, or even poetry. You can also adjust settings such as the guidance scale and the number of inference steps to find the right balance for your desired output.
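The guidance scale and negative prompt both act through classifier-free guidance. As a sketch of the standard formulation (not taken from this listing), at each denoising step the network predicts the noise twice, once with the text condition $c$ and once with an empty condition $\varnothing$, and combines the two with guidance scale $s$:

```latex
\hat{\epsilon}_\theta(x_t, c)
  = \epsilon_\theta(x_t, \varnothing)
  + s \,\bigl(\epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing)\bigr)
```

With $s = 1$ this reduces to the conditional prediction $\epsilon_\theta(x_t, c)$; larger values push the result further toward the prompt. In common implementations such as the Hugging Face Diffusers Stable Diffusion pipeline, the negative prompt's embedding takes the place of the empty condition $\varnothing$, which steers generation away from what it describes.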

Updated Invalid Date

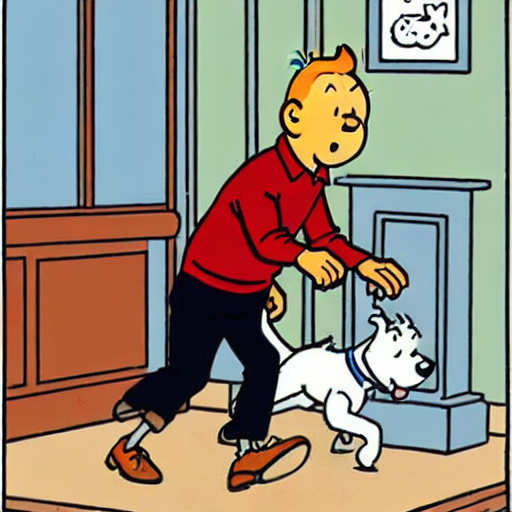

herge-style

The herge-style model is a Stable Diffusion model fine-tuned by cjwbw to generate images in the distinctive style of the Belgian cartoonist Hergé. It builds on the base Stable Diffusion model by incorporating the visual characteristics of Hergé's "ligne claire" (clear line) drawing technique. Similar models such as disco-diffusion-style and analog-diffusion show how Stable Diffusion can be adapted to diverse visual styles.

Model inputs and outputs

The herge-style model accepts a text prompt and generates one or more images that match it. The output is an array of image URLs, one per generated image.

Inputs

- Prompt: The text prompt that describes the desired image

- Seed: A random seed value to use for image generation (leave blank to randomize)

- Width: The width of the output image (maximum 1024x768 or 768x1024)

- Height: The height of the output image (maximum 1024x768 or 768x1024)

- Num Outputs: The number of images to generate

- Guidance Scale: The scale for classifier-free guidance (range 1 to 20)

- Num Inference Steps: The number of denoising steps (range 1 to 500)

Outputs

- Image URLs: An array of URLs pointing to the generated images

Capabilities

The herge-style model can generate images that capture the visual style of Hergé's Tintin comics: clean, minimal line art with flat colors, in the manner of the "ligne claire" technique. This lets the model create illustrations that evoke the adventurous spirit of Hergé's work.

What can I use it for?

You can use the herge-style model to create illustrations, book covers, or other visual content that pays homage to the classic Tintin comics. The style can be useful for projects in children's literature, graphic design, or film and animation, and you can iterate quickly on different prompts and ideas.

Things to try

The herge-style model captures the essence of Hergé's drawing style while still allowing some creative interpretation. By adjusting the prompt, you can explore variations on the classic Tintin aesthetic, such as placing the characters in different settings or scenarios. You can also experiment with combining herge-style with other Stable Diffusion-based models, such as disco-diffusion-style or analog-diffusion, to create hybrid styles or to integrate Hergé-inspired visuals with other creative elements.
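The documented herge-style ranges can be checked before a request is sent. This is a minimal sketch: the snake_case keys (`guidance_scale`, `num_inference_steps`) are assumed names rather than ones confirmed by the listing, and `out_of_range` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

# Documented herge-style ranges (inclusive). The keys are assumed names;
# check the model's API spec for the real parameter names.
HERGE_RANGES: Dict[str, Tuple[float, float]] = {
    "guidance_scale": (1, 20),
    "num_inference_steps": (1, 500),
}


def out_of_range(params: Dict[str, float]) -> List[str]:
    """Hypothetical helper: names of supplied parameters outside the ranges."""
    problems = []
    for name, (low, high) in HERGE_RANGES.items():
        # Parameters that are not supplied are skipped, not flagged.
        if name in params and not low <= params[name] <= high:
            problems.append(name)
    return problems
```

An empty list means every supplied value is within the documented bounds; rejecting out-of-range values locally avoids a failed remote prediction.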

analog-diffusion

analog-diffusion is a Stable Diffusion model trained by cjwbw on a diverse set of analog photographs. It is related to DreamBooth tools such as dreambooth and dreambooth-batch, which let you fine-tune Stable Diffusion on your own images; analog-diffusion, by contrast, comes already fine-tuned for a style inspired by analog photography.

Model inputs and outputs

analog-diffusion takes a text prompt and generates an image in response. The input includes parameters such as the seed, image size, and number of inference steps. The output is an array of image URLs.

Inputs

- Prompt: The text prompt describing the desired image

- Seed: A random number used to generate the image

- Width/Height: The size of the output image

- Number of Inference Steps: The number of denoising steps to take

- Guidance Scale: The scale for classifier-free guidance

Outputs

- Image URLs: An array of URLs pointing to the generated images

Capabilities

analog-diffusion can generate high-quality, analog-style images from text prompts. Because it was trained on a diverse set of analog photographs, it captures the characteristics of that aesthetic and can create portraits, landscapes, and other scenes with a dreamy, vintage look.

What can I use it for?

You can use analog-diffusion to create striking images for art, photography, advertising, and more. Its analog style is particularly useful for projects that aim to evoke a nostalgic or vintage feel; for example, you could create cover art for a retro-inspired album or generate images for a collage-based design.

Things to try

analog-diffusion captures the imperfections and characteristics of analog photography. Try experimenting with different prompts to see how the model renders effects such as lens flare and film grain. You can also try combining analog-diffusion with other Stable Diffusion models like anything-v4.0, dreamshaper, or pastel-mix to create more diverse imagery.

future-diffusion

future-diffusion is a text-to-image AI model fine-tuned by cjwbw on high-quality 3D images with a futuristic sci-fi theme. It is built on top of the stable-diffusion model, a latent text-to-image diffusion model capable of generating photorealistic images from any text input. future-diffusion inherits the capabilities of stable-diffusion while adding a specialized focus on futuristic, sci-fi-inspired imagery.

Model inputs and outputs

future-diffusion takes a text prompt as the primary input, along with optional parameters such as the image size, number of outputs, and sampling settings. The model then generates one or more images based on the prompt.

Inputs

- Prompt: The text prompt that describes the desired image

- Seed: A random seed value to control the image generation process

- Width/Height: The desired size of the output image

- Scheduler: The algorithm used to sample the image during the diffusion process

- Num Outputs: The number of images to generate

- Guidance Scale: The scale for classifier-free guidance, which controls the balance between the text prompt and the model's own biases

- Negative Prompt: Text describing what should not be included in the generated image

Outputs

- Image(s): One or more images generated from the provided prompt and other inputs

Capabilities

future-diffusion generates high-quality, photorealistic images with a distinct futuristic, sci-fi aesthetic. The model can create images of advanced technologies, alien landscapes, cyberpunk environments, and more, while keeping the scenes visually coherent and plausible.

What can I use it for?

future-diffusion could be useful for creative and visualization work such as concept art for science fiction films and games, illustrations for articles or books about futuristic technology, or world-building and character design. Its specialized focus makes it particularly well suited to projects that need a distinct sci-fi flavor.

Things to try

Experiment with different prompts to explore the model's capabilities, such as combining technical terms like "nanotech" or "quantum computing" with emotive descriptions like "breathtaking" or "awe-inspiring." You can also try detailed prompts that include specific elements, like "a sleek, flying car hovering above a sprawling, neon-lit metropolis."
