jojogan

Maintainer: mchong6


| Property | Value |
|---|---|
| Model Link | View on Replicate |
| API Spec | View on Replicate |
| GitHub Link | View on GitHub |
| Paper Link | View on arXiv |


Model overview

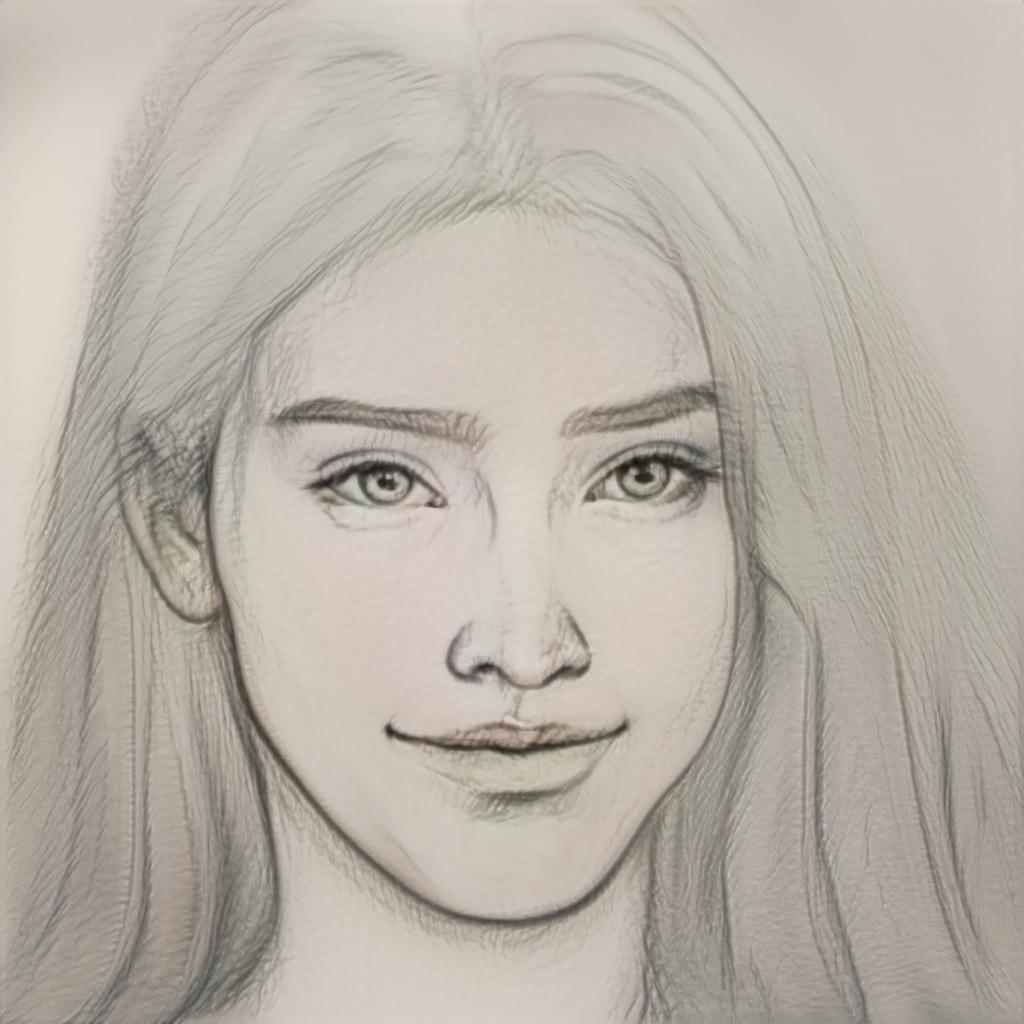

The jojogan model, created by maintainer mchong6, is a one-shot face stylization model that can apply a distinctive artistic style to any face image. Unlike other few-shot stylization methods, JoJoGAN aims to capture fine-grained stylistic details such as the shape of the eyes and the boldness of lines. It does this by approximating paired real data through GAN inversion and then finetuning a pretrained StyleGAN model on those pairs, which lets the learned style generalize to any face. The model is related to other face-focused models like gans-n-roses, GFPGAN, and StyleCariGAN, which also leverage StyleGAN for face-based tasks.
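The pseudo-pairing idea can be sketched with toy arrays. This is a minimal illustration under stated assumptions, not JoJoGAN's implementation: it assumes an 18-layer W+ latent of 512-d codes, treats the layers from index 7 onward as the ones mixed with random codes, and uses plain Gaussian noise where a real StyleGAN would pass noise through its mapping network. `make_training_latents` and its `alpha` weight are hypothetical names, and how this `alpha` relates to the model's Alpha input is not established here.

```python
import numpy as np

# Assumed W+ layout for a 1024x1024 StyleGAN2 generator: 18 layers of 512-d codes.
N_LATENT, LATENT_DIM = 18, 512


def make_training_latents(ref_latent, rng, n_samples=4, swap_layers=range(7, N_LATENT), alpha=0.0):
    """Hypothetical helper: latent codes for pseudo-paired finetuning data.

    ref_latent is the (N_LATENT, LATENT_DIM) code obtained by inverting the style
    reference. Layers listed in swap_layers are pulled toward random codes (alpha=0
    replaces them outright, alpha=1 leaves them unchanged); the other layers keep the
    reference's values, so every sample is paired with the same style image.
    """
    latents = np.repeat(ref_latent[None], n_samples, axis=0)  # (n, N_LATENT, D) copy
    # Stand-in for random codes; a real StyleGAN would map noise through its MLP first.
    random_w = rng.standard_normal((n_samples, 1, LATENT_DIM))
    random_w = np.repeat(random_w, N_LATENT, axis=1)  # same random code on every layer
    idx = list(swap_layers)
    latents[:, idx] = alpha * latents[:, idx] + (1.0 - alpha) * random_w[:, idx]
    return latents
```

Each returned latent would be rendered by the generator and paired with the single style reference as its training target.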

Model inputs and outputs

The jojogan model takes a face image as input and applies a unique artistic style to it, outputting the stylized face image. The model allows the user to choose from several pre-trained styles or provide their own style image(s) for one-shot stylization.

Inputs

- Input Face: Photo of a human face

- Pretrained: Identifier of a pre-trained style to apply

- Style Img 0-3: Face style image(s) to use for one-shot stylization

- Num Iter: Number of finetuning steps (unused if a pretrained style is used)

- Alpha: Strength of the finetuned style

- Preserve Color: Option to preserve the colors of the original image
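The inputs above can be gathered into a request payload before calling the model. The helper below is a hypothetical sketch: the snake_case key names, the default of 200 finetuning steps, and the [0, 1] range for Alpha are assumptions to check against the API spec, and treating a pretrained style as making the style images and Num Iter unnecessary is a reading of the input descriptions, not a confirmed rule.

```python
from typing import Optional, Sequence


def build_jojogan_input(
    input_face: str,
    pretrained: Optional[str] = None,
    style_imgs: Sequence[str] = (),
    num_iter: int = 200,
    alpha: float = 1.0,
    preserve_color: bool = False,
) -> dict:
    """Hypothetical helper: assemble an input dict for the jojogan model.

    Key names are assumed snake_case versions of the documented input labels.
    """
    if pretrained is None and not style_imgs:
        raise ValueError("choose a pretrained style or supply at least one style image")
    if len(style_imgs) > 4:
        raise ValueError("at most four style images (Style Img 0-3)")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha is assumed to lie in [0, 1]")

    payload = {"input_face": input_face, "alpha": alpha, "preserve_color": preserve_color}
    if pretrained is not None:
        # Num Iter is unused when a pretrained style is chosen, so it is not sent.
        payload["pretrained"] = pretrained
    else:
        # One-shot path: finetuning steps plus up to four numbered style images.
        payload["num_iter"] = num_iter
        for i, img in enumerate(style_imgs):
            payload[f"style_img_{i}"] = img
    return payload
```

After checking the real field names against the API spec, the dict could be passed to the Replicate Python client, e.g. `replicate.run("mchong6/jojogan:<version>", input=payload)`.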

Outputs

- Output: The face image with the applied artistic style

Capabilities

The jojogan model can apply an artistic style to any face image from a single reference (one-shot), preserving fine-grained stylistic details that other few-shot stylization methods often miss. It supports both pre-trained styles and custom styles learned from user-provided reference images.

What can I use it for?

The jojogan model could be used for a variety of creative applications, such as generating unique portraits, character designs, or even concepts for illustrated books or comics. Its ability to capture fine details in the style transfer makes it particularly well-suited for artistic and illustrative tasks. Companies in the creative industries, like animation studios or game developers, could potentially use this model to generate concept art or stylize existing character designs.

Things to try

One interesting thing to try with the jojogan model is combining multiple style images. When given several reference style images, the model can blend their artistic elements into a single, cohesive stylization, which could yield novel and imaginative face designs. Another avenue to explore is the model's sketch mode, which generates stylized face sketches and opens up possibilities for comic-book-inspired artwork or character designs.
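One way to see why several references get blended: a single generator is finetuned against all of them at once, so the training objective averages over the references and no single one can be matched exactly. The sketch below assumes, as a reading of the JoJoGAN paper rather than a confirmed detail, that the objective is an L1 distance between discriminator features of generated images and their style references. `feature_matching_loss` is a hypothetical name, and the arrays stand in for real discriminator activations.

```python
import numpy as np


def feature_matching_loss(fake_feats, real_feats):
    """Mean L1 distance between discriminator features, averaged over layers.

    Each list holds one array per discriminator layer. The leading axis is the batch:
    entry k of fake_feats comes from the image generated for the k-th style
    reference, and entry k of real_feats from that reference itself, so every
    reference contributes to the same loss value.
    """
    per_layer = [np.mean(np.abs(f - r)) for f, r in zip(fake_feats, real_feats)]
    return float(sum(per_layer) / len(per_layer))
```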

This summary was produced with help from an AI and may contain inaccuracies; check out the links to read the original source documents.

Related Models

gfpgan


gfpgan is a practical face restoration algorithm developed by the Tencent ARC team. It leverages the rich and diverse priors encapsulated in a pre-trained face GAN (such as StyleGAN2) to perform blind face restoration on old photos or AI-generated faces. This approach contrasts with similar models like Real-ESRGAN, which focuses on general image restoration, or PyTorch-AnimeGAN, which specializes in anime-style photo animation.

Model inputs and outputs

gfpgan takes an input image and rescales it by a specified factor, typically 2x. The model can handle a variety of face images, from low-quality old photos to high-quality AI-generated faces.

Inputs

- Img: The input image to be restored

- Scale: The factor by which to rescale the output image (default is 2)

- Version: The gfpgan model version to use (v1.3 for better quality, v1.4 for more details and better identity)

Outputs

- Output: The restored face image

Capabilities

gfpgan can effectively restore a wide range of face images, from old, low-quality photos to high-quality AI-generated faces. It is able to recover fine details, fix blemishes, and enhance the overall appearance of the face while preserving the original identity.

What can I use it for?

You can use gfpgan to restore old family photos, enhance AI-generated portraits, or breathe new life into low-quality images of faces. The model's capabilities make it a valuable tool for photographers, digital artists, and anyone looking to improve the quality of their facial images. Additionally, the maintainer tencentarc offers an online demo on Replicate, so you can try the model without setting up a local environment.

Things to try

Experiment with different input images, varying the scale and version parameters, to see how gfpgan can transform low-quality or damaged face images into high-quality, detailed portraits. You can also try combining gfpgan with other models like Real-ESRGAN to enhance the background and non-facial regions of the image.
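One common arrangement when pairing gfpgan with Real-ESRGAN is to upscale the whole image with the general-purpose model and then blend the restored face back in. The sketch below shows only that last compositing step, assuming an axis-aligned face crop and a precomputed soft mask; real pipelines also align and warp the face, which is omitted here. `paste_face` is a hypothetical helper, not part of either project's API.

```python
import numpy as np


def paste_face(background, restored_face, mask, top, left):
    """Blend a restored face crop back into an upscaled background.

    background: (H, W, 3) float array, e.g. the output of a general upscaler.
    restored_face: (h, w, 3) float array from the face restorer.
    mask: (h, w) soft mask in [0, 1]; 1 keeps the restored face, 0 keeps the background.
    top, left: position of the crop inside the background.
    """
    out = background.copy()  # leave the caller's array untouched
    h, w = mask.shape
    region = out[top:top + h, left:left + w]
    m = mask[..., None]  # broadcast the mask over the colour channels
    out[top:top + h, left:left + w] = m * restored_face + (1.0 - m) * region
    return out
```

A soft (feathered) mask avoids a visible seam where the restored face meets the upscaled background.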


gans-n-roses


The gans-n-roses model is a PyTorch implementation of a technique for converting images or videos of faces into diverse, high-quality anime-style art. Developed by researchers Min Jin Chong and David Forsyth, it builds on advances in Generative Adversarial Networks (GANs) and image-to-image translation. Unlike previous methods, gans-n-roses captures the complex and varied styles found in anime, producing a wide range of outputs from a single input face. This sets it apart from AI-powered anime art generators like AnimeGANv3, AnimeGANv2, and PyTorch-AnimeGAN, which tend to have a more limited stylistic range. Its maintainer, mchong6, also maintains jojogan, while the GFPGAN and Real-ESRGAN models for face restoration and image upscaling come from the Tencent ARC team.

Model inputs and outputs

The gans-n-roses model takes an image or short video of a face and generates a corresponding anime-style rendering. It learns a mapping from real face images to a diverse space of anime styles, so a single input can yield a wide variety of outputs.

Inputs

- Inpath: An image or short video file of a face

Outputs

- Output: An anime-style rendering of the input face image or video

Capabilities

The gans-n-roses model excels at capturing the rich and varied artistic styles found in anime, going beyond the more limited outputs of previous anime art generators. By leveraging a novel adversarial loss function, the model learns a diverse mapping from input faces to a wide range of anime renderings.

What can I use it for?

The gans-n-roses model could be useful for a variety of creative and entertainment applications, such as generating anime-style profile pictures, avatars, or promotional content. It could also be used to transform existing photos or videos into an anime-inspired aesthetic, opening up new artistic opportunities for filmmakers, animators, and content creators.

Things to try

One interesting aspect of the gans-n-roses model is its ability to perform video-to-video translation without ever being trained on video data. You can feed it short video clips of faces and it will generate the corresponding anime-style animations. Try different input videos to see the range of styles the model can produce.
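Because gans-n-roses was never trained on video, processing a clip presumably amounts to translating each frame independently. A minimal sketch, assuming a hypothetical `generator(frame, style)` callable and an assumed 8-dimensional style code, shows one design choice that matters for video: sampling the style code once and reusing it for every frame.

```python
import numpy as np


def stylize_video(frames, generator, style_dim=8, seed=0):
    """Translate each frame with one shared, randomly sampled style code.

    frames: iterable of image arrays.
    generator: hypothetical callable generator(frame, style) -> stylized frame.
    style_dim: assumed size of the style code.
    """
    rng = np.random.default_rng(seed)
    style = rng.standard_normal(style_dim)  # sampled once for the whole clip
    return [generator(frame, style) for frame in frames]
```

Drawing a fresh style code for every frame would instead make the rendering switch styles from frame to frame.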


blendgan


BlendGAN is a generative adversarial network (GAN) model developed by researchers at Y-tech, Kuaishou Technology. It is designed for arbitrary stylized face generation, allowing users to apply a diverse range of artistic styles to facial images. This sets it apart from models like StarGANv2, which is limited to a fixed set of styles. BlendGAN uses a self-supervised style encoder trained on a large-scale artistic face dataset called AAHQ, enabling it to capture a wide variety of artistic styles. It also introduces a weighted blending module that blends face and style representations implicitly, providing fine-grained control over the stylization effect.

Model inputs and outputs

BlendGAN takes two inputs: a source facial image and a style reference image. The model aligns and resizes both inputs to 1024x1024 resolution before processing. As output, it generates a new facial image that blends the source face with the artistic style of the reference image.

Inputs

- Style: A facial image that serves as the reference for the desired artistic style

- Source: The facial image to be stylized

Outputs

- Output: The stylized facial image, in which the source face has been blended with the artistic style of the reference image

Capabilities

BlendGAN excels at generating highly realistic and diverse stylized facial images. Unlike previous methods that require case-by-case training for each style, BlendGAN can fit arbitrary styles in a single model. This is achieved through its self-supervised style encoder and weighted blending module, which enable fine-grained control over the stylization process.

What can I use it for?

BlendGAN is well-suited for creative applications such as digital art, photo editing, and character design. It can transform portraits and headshots into artistic-looking images in a wide range of styles, from impressionistic to abstract. This can be particularly useful for enhancing social media content, designing book covers, or creating concept art for games and films.

Things to try

One interesting aspect of BlendGAN is its ability to blend the source face and style reference in a seamless and controllable way. By adjusting the parameters of the weighted blending module, users can explore different levels of stylization, from subtle enhancements to dramatic transformations. Experimenting with various source and style images can lead to unexpected and visually striking results.
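The weighted blending idea can be illustrated as a per-layer interpolation between a face latent code and a style latent code. This is a simplified sketch under assumptions: the per-layer weights are fixed numbers chosen by the caller, whereas BlendGAN's module may learn or derive them differently, and `weighted_blend` is a hypothetical name.

```python
import numpy as np


def weighted_blend(face_codes, style_codes, weights):
    """Per-layer blend of face and style latent codes.

    face_codes, style_codes: (n_layers, dim) arrays.
    weights: n_layers values in [0, 1]; 0 keeps the face code, 1 uses the style code.
    """
    w = np.clip(np.asarray(weights, dtype=float), 0.0, 1.0)[:, None]  # (n_layers, 1)
    return (1.0 - w) * face_codes + w * style_codes
```

Weights near 0 on every layer give subtle stylization, while weights near 1 on more layers push toward a dramatic transformation.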


real-esrgan


real-esrgan is a practical image restoration model developed by researchers at the Tencent ARC Lab and Shenzhen Institutes of Advanced Technology. It aims to tackle real-world blind super-resolution, going beyond simply enhancing image quality. Compared to other models such as absolutereality-v1.8.1, instant-id, clarity-upscaler, and reliberate-v3, real-esrgan is specifically focused on restoring real-world images and videos, including those with face regions.

Model inputs and outputs

real-esrgan takes an input image and outputs an upscaled and enhanced version of that image. The model can handle a variety of input types, including regular images, images with alpha channels, and grayscale images. The output is a high-quality image that retains important details and features.

Inputs

- Image: The input image to be upscaled and enhanced

- Scale: The desired scale factor for upscaling the input image, typically between 2x and 4x

- Face Enhance: An optional flag to enable face enhancement using the GFPGAN model

Outputs

- Output Image: The restored and upscaled version of the input image

Capabilities

real-esrgan performs high-quality image upscaling and restoration, even on challenging real-world images. It handles a variety of input types and produces results that maintain important details and features. It can also enhance facial regions in images through its integration with the GFPGAN model.

What can I use it for?

real-esrgan can be useful for a variety of applications, such as:

- Photo Restoration: Upscale and enhance low-quality or blurry photos to create high-resolution images.

- Video Enhancement: Apply real-esrgan to individual frames of a video to improve overall visual quality and clarity.

- Anime and Manga Upscaling: The RealESRGAN_x4plus_anime_6B model is specifically optimized for anime and manga images.

Things to try

Some interesting things to try with real-esrgan include:

- Experiment with different scale factors to find the best balance between quality and performance.

- Combine real-esrgan with other image processing techniques, such as denoising or color correction.

- Explore the model's capabilities on a wide range of input images, from natural photographs to detailed illustrations and paintings.

- Try the RealESRGAN_x4plus_anime_6B model on anime and manga-style images, and compare the results to other upscaling solutions.
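Images with alpha channels need the colour and transparency data handled separately, since a super-resolution model is typically trained on 3-channel colour input. The sketch below assumes that split: a hypothetical `upscale_rgb` callable stands in for the model, and the alpha channel is enlarged with plain nearest-neighbour repetition. Real-ESRGAN's own tooling may treat the alpha channel differently.

```python
import numpy as np


def upscale_rgba(img, scale, upscale_rgb):
    """Upscale an RGBA image by treating colour and alpha separately.

    img: (H, W, 4) array.
    scale: integer upscaling factor.
    upscale_rgb: hypothetical callable (H, W, 3) -> (H*scale, W*scale, 3),
        standing in for the super-resolution model.
    """
    rgb, alpha = img[..., :3], img[..., 3]
    rgb_up = upscale_rgb(rgb)
    # Nearest-neighbour enlargement of the transparency mask along both axes.
    alpha_up = np.repeat(np.repeat(alpha, scale, axis=0), scale, axis=1)
    return np.concatenate([rgb_up, alpha_up[..., None]], axis=-1)
```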
