kosmos-g

Maintainer: adirik

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| Github link | View on Github |
| Paper link | View on Arxiv |

Model overview

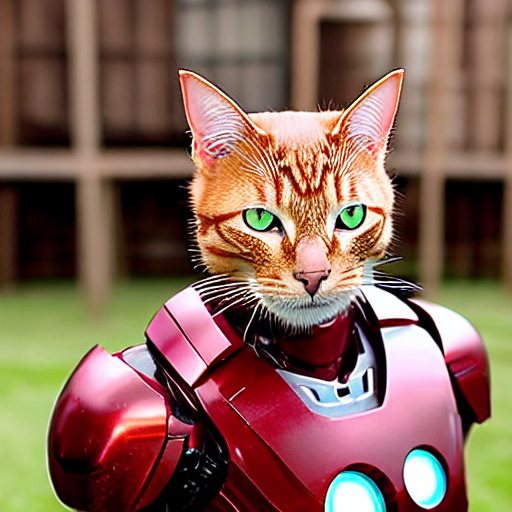

Kosmos-G is a multimodal large language model that generates images from text prompts combined with input images. It was introduced by Microsoft researchers, and adirik maintains this Replicate deployment. Kosmos-G can take multiple input images and a text prompt and generate new images that blend their visual and semantic information, which allows more contextual image generation than models that accept text prompts alone.

Model inputs and outputs

Kosmos-G takes a variety of inputs to generate new images, including one or two starting images, a text prompt, and various configuration settings. The model outputs a set of generated images that match the provided prompt and visual context.

Inputs

- image1: The first input image, used as a starting point for the generation

- image2: An optional second input image, which can provide additional visual context

- prompt: The text prompt describing the desired output image

- negative_prompt: An optional text prompt specifying elements to avoid in the generated image

- num_images: The number of images to generate

- num_inference_steps: The number of steps to use during the image generation process

- text_guidance_scale: A parameter controlling the influence of the text prompt on the generated images

Outputs

- Output: An array of generated image URLs
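As a rough illustration of how these inputs fit together, the sketch below assembles a request payload using the parameter names listed above. The helper name `build_kosmos_g_input`, the default values, and the validation rules are assumptions made for illustration, not documented behavior of the model; the API spec on Replicate is the place to check actual defaults and limits.

```python
from typing import Any, Dict, Optional


def build_kosmos_g_input(
    image1: str,
    prompt: str,
    image2: Optional[str] = None,
    negative_prompt: Optional[str] = None,
    num_images: int = 1,               # assumed default, not from the API spec
    num_inference_steps: int = 50,     # assumed default, not from the API spec
    text_guidance_scale: float = 7.5,  # assumed default, not from the API spec
) -> Dict[str, Any]:
    """Assemble an input dict for kosmos-g (hypothetical helper)."""
    if not image1:
        raise ValueError("image1 is required")
    if not prompt:
        raise ValueError("prompt is required")
    if num_images < 1 or num_inference_steps < 1:
        raise ValueError("num_images and num_inference_steps must be >= 1")

    payload: Dict[str, Any] = {
        "image1": image1,
        "prompt": prompt,
        "num_images": num_images,
        "num_inference_steps": num_inference_steps,
        "text_guidance_scale": text_guidance_scale,
    }
    # Optional inputs are included only when the caller supplies them.
    if image2 is not None:
        payload["image2"] = image2
    if negative_prompt is not None:
        payload["negative_prompt"] = negative_prompt
    return payload


# Placeholder URL and prompt, for illustration only.
payload = build_kosmos_g_input(
    image1="https://example.com/dog.png",
    prompt="a dog on a beach at sunset",
    num_images=2,
)
print(payload)
```

With the `replicate` Python client installed and an API token configured, a payload like this would typically be passed as `replicate.run("adirik/kosmos-g:<version>", input=payload)`, where `<version>` stands for the version hash shown on the model page.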

Capabilities

Kosmos-G generates images from a combination of input images and text prompts. It blends the visual information in the starting images with the semantics of the prompt, producing new compositions that preserve the subject or style of the originals while adding the elements the prompt describes.

What can I use it for?

Kosmos-G can be used for a variety of creative and practical applications, such as:

- Generating concept art or illustrations for creative projects

- Producing visuals for marketing and advertising campaigns

- Enhancing existing images by blending them with new text-based elements

- Aiding in the ideation and visualization process for product design or other visual projects

The model's ability to leverage both visual and textual inputs makes it a powerful tool for users looking to create unique and expressive imagery.

Things to try

One interesting aspect of Kosmos-G is its ability to generate images that seamlessly integrate multiple visual and conceptual elements. Try providing the model with a starting image and a prompt that describes a specific scene or environment, then observe how it blends the visual elements from the input image with the new conceptual elements to create a cohesive and compelling result. You can also experiment with different combinations of input images and text prompts to see the range of outputs the model can produce.
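One way to organize this kind of experimentation is to enumerate prompt and guidance-scale combinations up front and run them one by one. The sketch below only builds the list of input variants; the function name `sweep_inputs`, the example URL, and the specific scale values are illustrative assumptions.

```python
from itertools import product
from typing import Any, Dict, List


def sweep_inputs(
    image1: str, prompts: List[str], guidance_scales: List[float]
) -> List[Dict[str, Any]]:
    """Return one input dict per (prompt, text_guidance_scale) combination."""
    return [
        {"image1": image1, "prompt": p, "text_guidance_scale": g}
        for p, g in product(prompts, guidance_scales)
    ]


variants = sweep_inputs(
    "https://example.com/cat.png",  # placeholder image URL
    ["a cat in a snowy forest", "a cat in a neon-lit city"],
    [5.0, 7.5, 10.0],  # arbitrary example values
)
for v in variants:
    print(v["prompt"], v["text_guidance_scale"])
```

Comparing the outputs for the same prompt across scales gives a quick sense of how strongly the text steers the result relative to the input image.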

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

kosmos-2

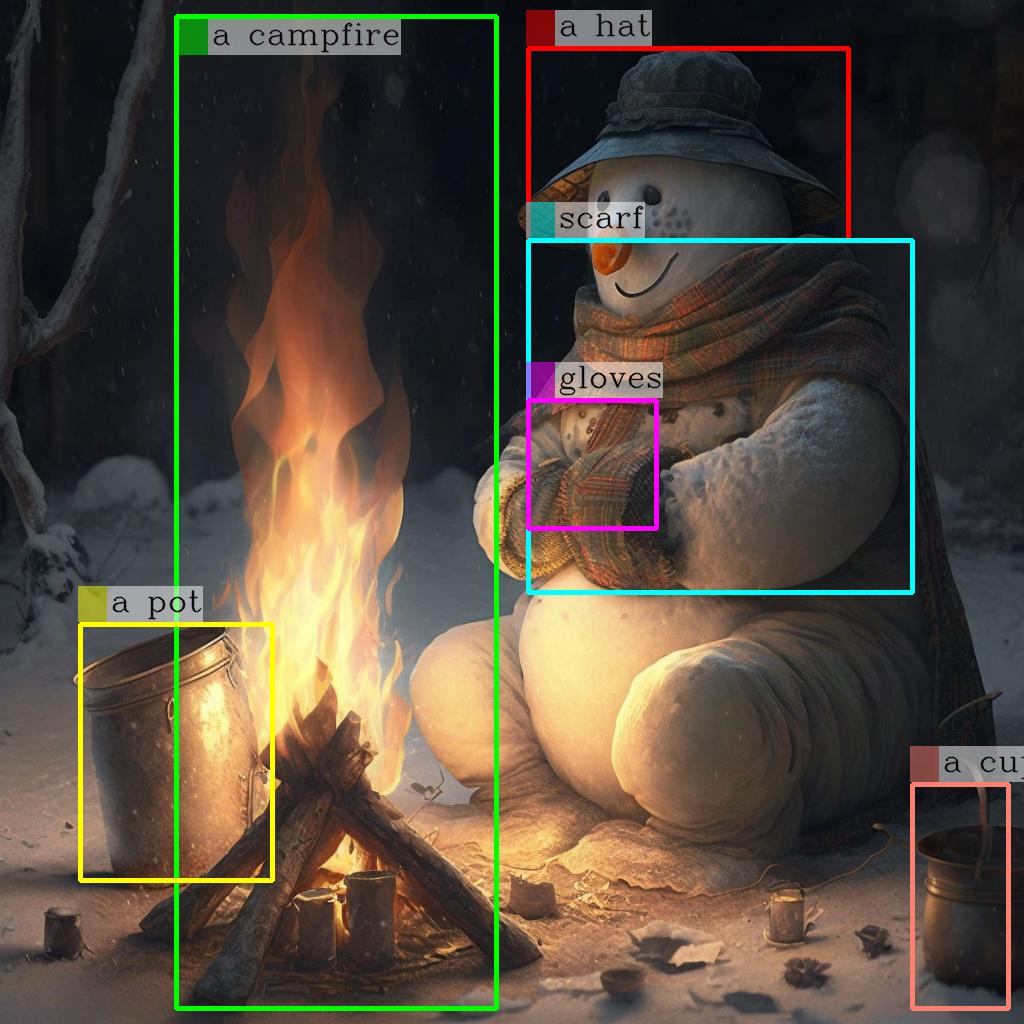

kosmos-2 is a large language model developed by Microsoft that aims to ground multimodal language models to the real world. It is similar to other models created by the same maintainer, such as Kosmos-G, Moondream1, and DeepSeek-VL, which focus on generating images, performing vision-language tasks, and understanding real-world applications.

Model inputs and outputs

kosmos-2 takes an image as input and outputs a text description of the contents of the image, including bounding boxes around detected objects. The model can also provide a more detailed description if requested.

Inputs

- Image: An input image to be analyzed

Outputs

- Text: A description of the contents of the input image

- Image: The input image with bounding boxes around detected objects

Capabilities

kosmos-2 is capable of detecting and describing various objects, scenes, and activities in an input image. It can identify and localize multiple objects within an image and provide a textual summary of its contents.

What can I use it for?

kosmos-2 can be useful for a variety of applications that require image understanding, such as visual search, image captioning, and scene understanding. It could be used to enhance user experiences in e-commerce, social media, or other image-driven applications. The model's ability to ground language to the real world also makes it potentially useful for tasks like image-based question answering or visual reasoning.

Things to try

One interesting aspect of kosmos-2 is its potential to be used in conjunction with other models like Kosmos-G to enable multimodal applications that combine image generation and understanding. Developers could explore ways to leverage kosmos-2's capabilities to build novel applications that integrate visual and language processing.

vila-7b

vila-7b is a multi-image visual language model developed by Replicate creator adirik. It is a smaller version of the larger VILA model, which was pretrained on interleaved image-text data. The vila-7b model can be used for tasks like image captioning, visual question answering, and multimodal reasoning. It is similar to other multimodal models like stylemc, realistic-vision-v6.0, and kosmos-g, also created by adirik.

Model inputs and outputs

The vila-7b model takes an image and a text prompt as input, and generates a textual response based on the prompt and the content of the image. The input image can be used to provide additional context and grounding for the generated text.

Inputs

- image: The image to discuss

- prompt: The query to generate a response for

- top_p: When decoding text, samples from the top p percentage of most likely tokens; lower to ignore less likely tokens

- temperature: When decoding text, higher values make the model more creative

- num_beams: Number of beams to use when decoding text; higher values are slower but more accurate

- max_tokens: Maximum number of tokens to generate

Outputs

- Output: The model's generated response to the provided prompt and image

Capabilities

The vila-7b model can be used for a variety of multimodal tasks, such as image captioning, visual question answering, and multimodal reasoning. It can generate relevant and coherent responses to prompts about images, drawing on the visual information to provide informative and contextual outputs.

What can I use it for?

The vila-7b model could be useful for applications that require understanding and generating text based on visual input, such as automated image description generation, visual-based question answering, or even as a component in larger multimodal systems. Companies in industries like media, advertising, or e-commerce could potentially leverage the model's capabilities to automate image-based content generation or enhance their existing visual-text applications.

Things to try

One interesting thing to try with the vila-7b model is to provide it with a diverse set of images and prompts that require it to draw connections between the visual and textual information. For example, you could ask the model to compare and contrast two different images, or to generate a story based on a series of images. This could help explore the model's ability to understand and reason about the relationships between images and text.
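The top_p input refers to what is commonly called nucleus sampling. The sketch below is a generic illustration of that idea on a toy distribution, not vila-7b's actual decoding code; the function name `top_p_filter` and the example probabilities are made up for illustration.

```python
from typing import Dict


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalize the kept probabilities to sum to 1."""
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    kept: Dict[str, float] = {}
    cumulative = 0.0
    # Walk tokens from most to least likely.
    for token, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {token: p / total for token, p in kept.items()}


# Toy next-token distribution (illustrative values only).
probs = {"cat": 0.5, "dog": 0.3, "fox": 0.15, "emu": 0.05}
filtered = top_p_filter(probs, top_p=0.75)
print(filtered)
```

Lowering top_p shrinks the candidate set, so unlikely tokens such as "fox" and "emu" in this toy example are never sampled.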

stylemc

StyleMC is a text-guided image generation and editing model developed by Replicate creator adirik. It uses a multi-channel approach to enable fast and efficient text-guided manipulation of images. StyleMC can be used to generate and edit images based on textual prompts, allowing users to create new images or modify existing ones in a guided manner. Similar models like GFPGAN focus on practical face restoration, while Deliberate V6, LLaVA-13B, AbsoluteReality V1.8.1, and Reliberate V3 offer more general text-to-image and image-to-image capabilities. StyleMC aims to provide a specialized solution for text-guided image editing and manipulation.

Model inputs and outputs

StyleMC takes in an input image and a text prompt, and outputs a modified image based on the provided prompt. The model can be used to generate new images from scratch or to edit existing images in a text-guided manner.

Inputs

- Image: The input image to be edited or manipulated.

- Prompt: The text prompt that describes the desired changes to be made to the input image.

- Change Alpha: The strength coefficient to apply the style direction with.

- Custom Prompt: An optional custom text prompt that can be used instead of the provided prompt.

- Id Loss Coeff: The identity loss coefficient, which can be used to control the balance between preserving the original image's identity and applying the desired changes.

Outputs

- Modified Image: The output image that has been generated or edited based on the provided text prompt and other input parameters.

Capabilities

StyleMC excels at text-guided image generation and editing. It can be used to create new images from scratch or modify existing images in a variety of ways, such as changing the hairstyle, adding or removing specific features, or altering the overall style or mood of the image.

What can I use it for?

StyleMC can be particularly useful for creative applications, such as generating concept art, designing characters or scenes, or experimenting with different visual styles. It can also be used for more practical applications, such as editing product images or creating personalized content for social media.

Things to try

One interesting aspect of StyleMC is its ability to find a global manipulation direction based on a target text prompt. This allows users to explore the range of possible edits that can be made to an image based on a specific textual description, and then apply those changes in a controlled manner. Another feature to try is the video generation capability, which can create an animation of the step-by-step manipulation process. This can be a useful tool for understanding and demonstrating the model's capabilities.

texture

The texture model, developed by adirik, is a tool for generating textures for 3D objects using text prompts. This model can be particularly useful for creators and designers who want to add realistic textures to their 3D models. Compared to similar models like stylemc, interior-design, text2image, styletts2, and masactrl-sdxl, the texture model is specifically focused on generating textures for 3D objects.

Model inputs and outputs

The texture model takes a 3D object file, a text prompt, and several optional parameters as inputs to generate a texture for the 3D object. The model's outputs are an array of image URLs representing the generated textures.

Inputs

- Shape Path: The 3D object file to generate the texture onto

- Prompt: The text prompt used to generate the texture

- Shape Scale: The factor to scale the 3D object by

- Guidance Scale: The factor to scale the guidance image by

- Texture Resolution: The resolution of the texture to generate

- Texture Interpolation Mode: The texture mapping interpolation mode, with options like "nearest", "bilinear", and "bicubic"

- Seed: The seed for the inference

Outputs

- An array of image URLs representing the generated textures

Capabilities

The texture model can generate high-quality textures for 3D objects based on text prompts. This can be particularly useful for creating realistic-looking 3D models for various applications, such as game development, product design, or architectural visualizations.

What can I use it for?

The texture model can be used by 3D artists, game developers, product designers, and others who need to add realistic textures to their 3D models. By providing a text prompt, users can quickly generate a variety of textures that can be applied to their 3D objects. This can save a significant amount of time and effort compared to manually creating textures. Additionally, the model's ability to scale the 3D object and adjust the texture resolution and interpolation mode allows for fine-tuning the output to meet the specific needs of the project.

Things to try

One interesting thing to try with the texture model is experimenting with different text prompts to see the range of textures the model can generate. For example, you could try prompts like "a weathered metal surface" or "a lush, overgrown forest floor" to see how the model responds. Additionally, you could try adjusting the shape scale, guidance scale, and texture resolution to see how those parameters affect the generated textures.
