phi-3-mini-4k-instruct

Maintainer: lucataco


| Property | Value |
|---|---|
| Model Link | View on Replicate |
| API Spec | View on Replicate |
| Github Link | View on Github |
| Paper Link | No paper link provided |


Model overview

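The phi-3-mini-4k-instruct is a 3.8B parameter, lightweight, state-of-the-art open model developed by Microsoft and trained with the Phi-3 datasets. This Replicate implementation is maintained by lucataco. Among the models listed alongside it, phi-2 is the closest in capabilities; reliberate-v3, absolutereality-v1.8.1, and instant-id are image-generation models rather than text models.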

Model inputs and outputs

The phi-3-mini-4k-instruct model takes a text prompt as input and generates text as output. The inputs below control the prompt and the decoding behavior.

Inputs

- Prompt: The text prompt to send to the model.

- Max Length: The maximum number of tokens to generate.

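- Temperature: Adjusts the randomness of the outputs. Higher values give more varied text; lower values give more predictable text.

- Top K: Samples only from the k most likely tokens when decoding text.

- Top P: Samples only from the smallest set of most likely tokens whose cumulative probability reaches p when decoding text.

- Repetition Penalty: Penalty applied to tokens that have already appeared, which discourages repeated words in the generated text.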

- System Prompt: The system prompt provided to the model.
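As a rough illustration of how the inputs listed above might be collected before a request, here is a minimal sketch. The snake_case field names, the default values, and the validation ranges are assumptions made for this example; check the model's API spec on Replicate for the actual names and limits.

```python
from typing import Any, Dict, Optional


def build_input(
    prompt: str,
    system_prompt: Optional[str] = None,
    max_length: int = 200,            # hypothetical default
    temperature: float = 0.1,         # hypothetical default
    top_k: int = 1,                   # hypothetical default
    top_p: float = 1.0,               # hypothetical default
    repetition_penalty: float = 1.1,  # hypothetical default
) -> Dict[str, Any]:
    """Collect the listed inputs into one dict, rejecting obviously invalid values."""
    if not prompt:
        raise ValueError("prompt must be a non-empty string")
    if max_length < 1:
        raise ValueError("max_length must be at least 1")
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in the interval (0, 1]")
    if repetition_penalty <= 0:
        raise ValueError("repetition_penalty must be positive")

    payload: Dict[str, Any] = {
        "prompt": prompt,
        "max_length": max_length,
        "temperature": temperature,
        "top_k": top_k,
        "top_p": top_p,
        "repetition_penalty": repetition_penalty,
    }
    # Only include the system prompt when the caller supplied one.
    if system_prompt is not None:
        payload["system_prompt"] = system_prompt
    return payload
```

Validating locally like this catches out-of-range settings before any request is sent, which can be convenient when sweeping many parameter combinations.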

Outputs

- The model generates a list of text outputs based on the provided inputs.
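Because the output is described as a list of text pieces, a caller will usually want a single string. The sketch below assumes each list element is a consecutive fragment of the response that needs no extra separator; the example fragments are made up for illustration.

```python
from typing import Iterable


def join_output(chunks: Iterable[str]) -> str:
    """Concatenate text pieces in order, without adding separators."""
    return "".join(chunks)


# Made-up fragments standing in for a model response.
example_chunks = ["Phi-3 ", "is ", "a small ", "language model."]
text = join_output(example_chunks)
print(text)
```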

Capabilities

The phi-3-mini-4k-instruct model is an instruction-tuned model that generates text in response to a prompt. It can be used for a variety of language tasks, such as text generation, summarization, and question answering.

What can I use it for?

The phi-3-mini-4k-instruct model can be used for a variety of projects, such as creating chatbots, generating creative writing, or augmenting content creation workflows. It could be particularly useful for companies looking to automate certain text-based tasks or enhance their existing language models.

Things to try

One interesting thing to try with the phi-3-mini-4k-instruct model is to experiment with different temperature and top-k/top-p settings to see how they affect the diversity and coherence of the generated text. You could also try providing more detailed or specific prompts to see how the model responds and whether it can generate relevant and informative outputs.
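To build intuition for what these settings do, here is a toy sketch of temperature scaling followed by top-k and top-p filtering over a four-token vocabulary. It illustrates the general technique only; it is not the model's actual decoding code, and the token scores are invented.

```python
import math
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def filter_top_k_top_p(probs: Dict[str, float], top_k: int, top_p: float) -> Dict[str, float]:
    """Keep the top_k most likely tokens, then the shortest prefix of them whose
    cumulative probability reaches top_p, and renormalize what is left."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    kept = []
    cumulative = 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


# Invented scores for a tiny vocabulary.
toy_logits = {"cat": 2.0, "dog": 1.0, "fish": 0.0, "rock": -1.0}

sharp = softmax_with_temperature(toy_logits, 0.5)  # low temperature: mass concentrates on "cat"
flat = softmax_with_temperature(toy_logits, 2.0)   # high temperature: mass spreads out
candidates = filter_top_k_top_p(
    softmax_with_temperature(toy_logits, 1.0), top_k=3, top_p=0.8
)
print(sharp["cat"], flat["cat"], sorted(candidates))
```

With these toy scores, lowering the temperature raises the probability of the top token, while tighter top-k and top-p values shrink the set of tokens that can be sampled at all.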

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

phi-3-mini-128k-instruct


The phi-3-mini-128k-instruct is a 3.8 billion-parameter, lightweight, state-of-the-art open model trained using the Phi-3 datasets. It is part of the Phi-3 family of models, which also includes the Phi-3-mini-4k-instruct variant. Both models have undergone a post-training process that incorporates supervised fine-tuning and direct preference optimization to enhance their ability to follow instructions and adhere to safety measures.

Model inputs and outputs

The phi-3-mini-128k-instruct model is best suited for text-based inputs, particularly prompts using a chat format. It can generate relevant and coherent responses to a wide range of queries, drawing upon its extensive training on high-quality data.

Inputs

- Prompt: The text prompt to be processed by the model.

- System Prompt: An optional system prompt that sets the tone and context for the assistant.

- Additional parameters: The model also accepts various parameters to control the output, such as temperature, top-k, top-p, and repetition penalty.

Outputs

- Generated text: The model's response to the input prompt, generated in an iterative manner.

Capabilities

The phi-3-mini-128k-instruct model has demonstrated strong performance on a variety of benchmarks testing common sense, language understanding, mathematics, coding, long-term context, and logical reasoning. It is particularly adept at tasks that require robust reasoning and understanding, such as solving complex math problems or generating code snippets.

What can I use it for?

The phi-3-mini-128k-instruct model is intended for commercial and research use in English-language applications. It is well-suited for memory and compute-constrained environments, as well as latency-bound scenarios that require strong reasoning capabilities. Potential use cases include:

- Developing AI-powered features for applications that leverage language understanding and generation

- Accelerating research on language and multimodal models

- Deploying in environments with limited resources, such as edge devices or mobile applications

Things to try

One interesting aspect of the phi-3-mini-128k-instruct model is its ability to engage in coherent, context-aware dialogue. Try providing the model with a series of related prompts or questions, and observe how it maintains and builds upon the conversation. You can also experiment with different parameter settings, such as adjusting the temperature or top-k/top-p values, to see how they affect the model's output.
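For the multi-turn dialogue experiment described above, earlier turns have to be carried into each new prompt. The sketch below renders a conversation with the `<|system|>`, `<|user|>`, `<|assistant|>`, and `<|end|>` markers that Microsoft's Phi-3 model card describes for its chat format, as far as I understand it. Treat the exact marker layout as an assumption, and note that a hosted endpoint may apply its own template, in which case plain text should be sent instead. The function name is hypothetical.

```python
from typing import List, Tuple


def format_phi3_chat(turns: List[Tuple[str, str]], system_prompt: str = "") -> str:
    """Render (role, text) turns with Phi-3 style markers and end with an open assistant turn."""
    parts = []
    if system_prompt:
        parts.append(f"<|system|>\n{system_prompt}<|end|>\n")
    for role, text in turns:
        if role not in ("user", "assistant"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<|{role}|>\n{text}<|end|>\n")
    # Leave the assistant turn open so the model continues from here.
    parts.append("<|assistant|>\n")
    return "".join(parts)


# A short conversation where the last question depends on the earlier answer.
history = [
    ("user", "What is 2+2?"),
    ("assistant", "4"),
    ("user", "And doubled?"),
]
chat_prompt = format_phi3_chat(history, system_prompt="Be brief")
print(chat_prompt)
```

Appending each new answer to `history` before the next call is one simple way to let the model build on the conversation.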


llava-phi-3-mini


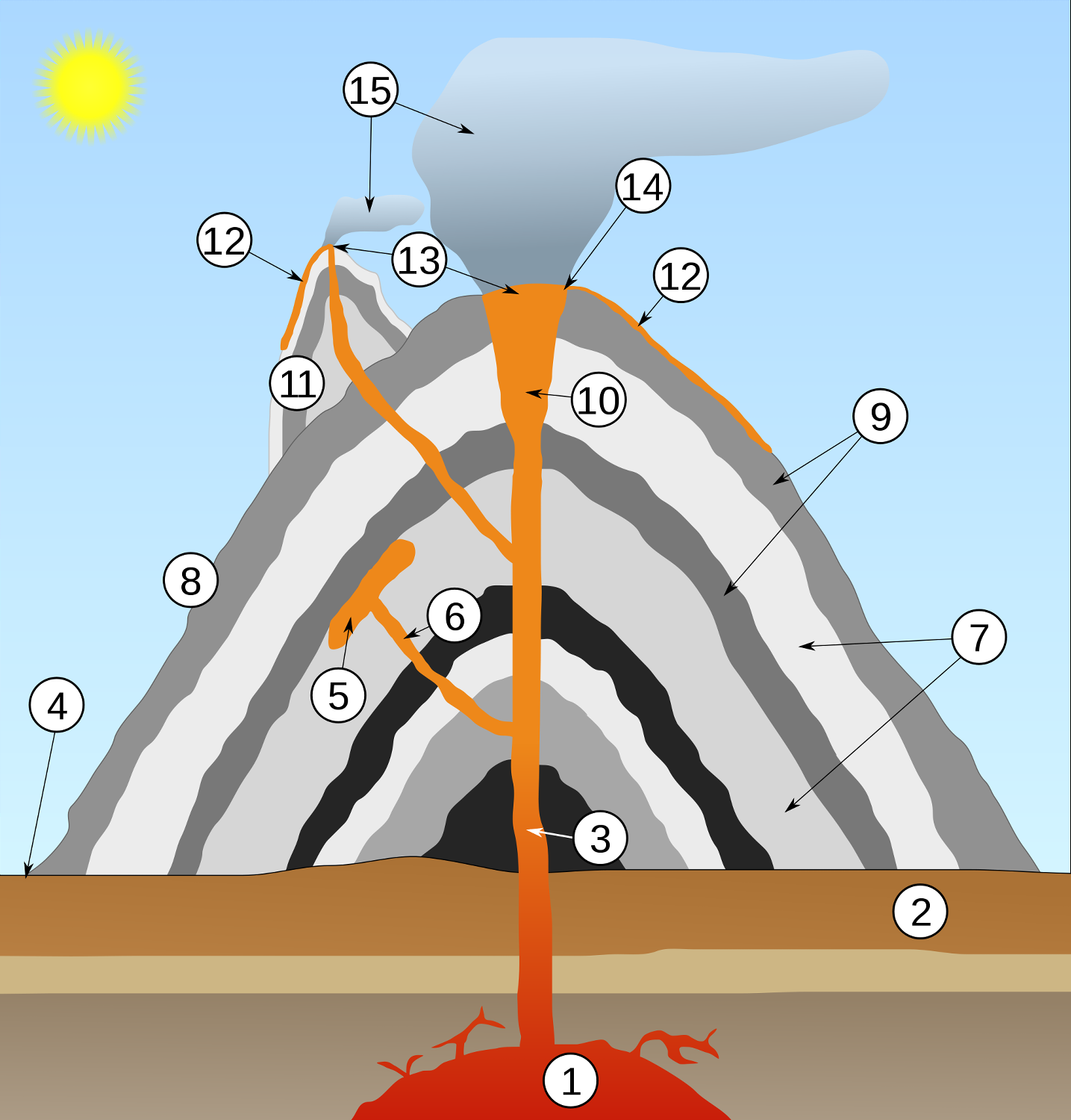

llava-phi-3-mini is a LLaVA model fine-tuned from microsoft/Phi-3-mini-4k-instruct by XTuner. It is a lightweight, state-of-the-art open model trained with the Phi-3 datasets, similar to phi-3-mini-128k-instruct and llava-phi-3-mini-gguf. The model uses the CLIP-ViT-Large-patch14-336 visual encoder and MLP projector, with a resolution of 336.

Model inputs and outputs

llava-phi-3-mini takes an image and a prompt as inputs, and generates a text output in response. The model is capable of performing a variety of multimodal tasks, such as image captioning, visual question answering, and visual reasoning.

Inputs

- Image: The input image, provided as a URL or file path.

- Prompt: The text prompt that describes the task or query the user wants the model to perform.

Outputs

- Text: The model's generated response to the input prompt, based on the provided image.

Capabilities

llava-phi-3-mini is a powerful multimodal model that can perform a wide range of tasks, such as image captioning, visual question answering, and visual reasoning. The model has been fine-tuned on a variety of datasets, including ShareGPT4V-PT and InternVL-SFT, which have improved its performance on tasks like MMMU Val, SEED-IMG, AI2D Test, ScienceQA Test, HallusionBench aAcc, POPE, GQA, and TextVQA.

What can I use it for?

You can use llava-phi-3-mini for a variety of applications that require multimodal understanding and generation, such as image-based question answering, visual storytelling, or even image-to-text translation. The model's lightweight nature and strong performance make it a great choice for projects that require efficient and effective multimodal AI capabilities.

Things to try

With llava-phi-3-mini, you can explore a range of multimodal tasks, such as generating detailed captions for images, answering questions about the contents of an image, or even describing the relationships between objects in a scene. The model's versatility and performance make it a valuable tool for anyone working on projects that combine vision and language.


phi-2


The phi-2 model is a Cog implementation of the Microsoft Phi-2 model, maintained on Replicate by lucataco. Phi-2 is a language model trained by Microsoft, designed for tasks such as question answering, text generation, and text summarization. It predates the Phi-3 family and, at 2.7 billion parameters, is smaller than the Phi-3-Mini-4K-Instruct model.

Model inputs and outputs

The phi-2 model takes a text prompt as input and generates a text output in response. The input prompt can be up to 2048 tokens long (Phi-2's context length), and the response is capped at 200, which is likely also a token count rather than characters.

Inputs

- Prompt: The text prompt that the model will use to generate a response.

Outputs

- Output: The text generated by the model in response to the input prompt.

Capabilities

The phi-2 model can be used for a variety of tasks, such as question answering, text generation, and text summarization. It has been trained on a large amount of data and has demonstrated strong performance on a range of language understanding and generation tasks.

What can I use it for?

The phi-2 model can be used for a variety of applications, such as:

- Content Generation: The model can be used to generate text content, such as blog posts, articles, or stories.

- Question Answering: The model can be used to answer questions by generating relevant and informative responses.

- Summarization: The model can be used to summarize long text documents or articles, highlighting the key points and ideas.

- Dialogue Systems: The model can be used to power conversational agents or chatbots, engaging in natural language interactions.

Things to try

One interesting thing to try with the phi-2 model is to experiment with different prompts and see how the model responds. For example, you could try prompts that involve creative writing, analytical tasks, or open-ended questions, and compare the responses. Additionally, you could explore using the model in combination with other AI tools or frameworks to create more sophisticated applications.


Phi-3-mini-4k-instruct


The Phi-3-mini-4k-instruct is a compact, 3.8 billion parameter language model developed by Microsoft. It is part of the Phi-3 family of models, which includes both the 4K and 128K variants that differ in their maximum context length. This model was trained on a combination of synthetic data and filtered web data, with a focus on reasoning-dense content. When evaluated on benchmarks testing common sense, language understanding, math, code, long context, and logical reasoning, the Phi-3-mini-4k-instruct demonstrated robust and state-of-the-art performance among models with less than 13 billion parameters.

The model has undergone a post-training process that incorporates both supervised fine-tuning and direct preference optimization for instruction following and safety. This aligns it with human preferences for helpfulness and safety. Similar models include the Phi-3-mini-128k-instruct and the Meta-Llama-3-8B-Instruct, which are also compact, instruction-tuned language models.

Model inputs and outputs

Inputs

- The Phi-3-mini-4k-instruct model accepts text as input.

Outputs

- The model generates text, including natural language and code.

Capabilities

The Phi-3-mini-4k-instruct model can be used for a variety of language-related tasks, such as summarization, question answering, and code generation. It has demonstrated strong performance on benchmarks testing common sense, language understanding, math, code, and logical reasoning. The model's compact size and instruction-following capabilities make it suitable for use in memory and compute-constrained environments, as well as latency-bound scenarios.

What can I use it for?

The Phi-3-mini-4k-instruct model can be a valuable tool for researchers and developers working on language models and generative AI applications. Its strong performance on a range of tasks, coupled with its small footprint, makes it an attractive option for building AI-powered features in resource-constrained environments. Potential use cases include chatbots, question-answering systems, and code generation tools.

Things to try

One interesting aspect of the Phi-3-mini-4k-instruct model is its ability to reason about complex topics and provide step-by-step solutions. Try prompting the model with math or coding problems and see how it approaches the task. Additionally, the model's instruction-following capabilities could be explored by providing it with detailed prompts or templates for specific tasks, such as writing business emails or creating an outline for a research paper.
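One way to try the prompt-template idea mentioned above is to keep the task instructions fixed and vary only a few fields. This is a minimal sketch using Python's standard `string.Template`; the template wording, field names, and function name are all invented for illustration.

```python
from string import Template

# Hypothetical template for a business-email task; adjust the wording to taste.
EMAIL_TEMPLATE = Template(
    "Write a $tone business email to $recipient about $topic.\n"
    "Keep it under $max_words words and end with a clear next step."
)


def render_email_prompt(
    recipient: str,
    topic: str,
    tone: str = "polite",
    max_words: int = 150,
) -> str:
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return EMAIL_TEMPLATE.substitute(
        recipient=recipient, topic=topic, tone=tone, max_words=max_words
    )


email_prompt = render_email_prompt("a supplier", "a delayed shipment")
print(email_prompt)
```

Holding the template constant while changing one field at a time makes it easier to see which part of the instruction the model is actually following.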

Updated Invalid Date