pyglide

Maintainer: afiaka87


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on Arxiv |


Model overview

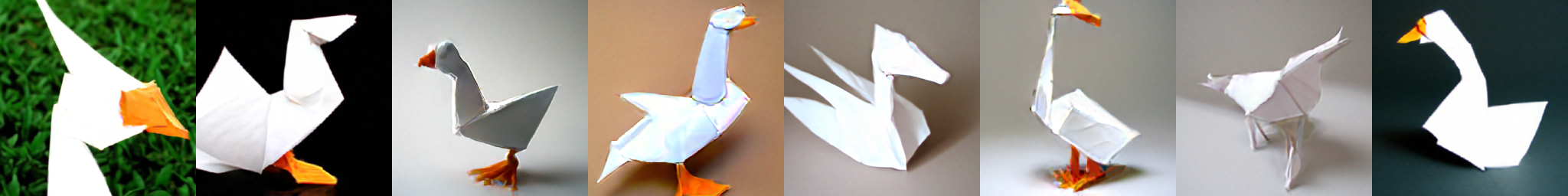

pyglide is a text-to-image generation model based on OpenAI's GLIDE (Guided Language to Image Diffusion for Generation and Editing), the diffusion model that preceded the popular DALL-E 2. It adds faster sampling with the Pseudo Runge-Kutta (PRK) and Pseudo Linear Multistep (PLMS) methods. The model was developed by afiaka87, who has also created other AI models like stable-diffusion, stable-diffusion-speed-lab, and open-dalle-1.1-lora.
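A PLMS step replaces a single noise prediction with a weighted combination of the four most recent ones. The sketch below shows that combination using the fourth-order coefficients from the PNDM paper (Liu et al., 2022), which introduced PRK and PLMS. It works on plain Python lists rather than tensors, is not taken from pyglide's source, and the function name `plms_eps` is hypothetical.

```python
from typing import List, Sequence


def plms_eps(history: Sequence[List[float]]) -> List[float]:
    """Combine the four most recent noise predictions with PLMS weights.

    history[-1] is the newest prediction (eps_t) and history[-4] the oldest.
    The weights 55, -59, 37, -9 (divided by 24) are the fourth-order linear
    multistep coefficients used in the PNDM paper.
    """
    if len(history) < 4:
        # In PNDM, the first steps are taken with PRK to fill this history.
        raise ValueError("PLMS needs four previous noise predictions")
    e_t, e_1, e_2, e_3 = history[-1], history[-2], history[-3], history[-4]
    return [
        (55 * a - 59 * b + 37 * c - 9 * d) / 24
        for a, b, c, d in zip(e_t, e_1, e_2, e_3)
    ]
```

Once the history is full, each PLMS step needs only one new model evaluation, whereas a PRK step needs four. This difference is where the speed-up over PRK-only sampling comes from.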

Model inputs and outputs

pyglide takes a text prompt and generates a corresponding image. The model supports various input parameters such as seed, image width and height, batch size, guidance scale, and more. The output is an array of image URLs, with each URL pointing to one generated image.

Inputs

- Prompt: The text prompt to use for image generation

- Seed: A seed value for reproducibility

- Side X: The width of the image (must be a multiple of 8)

- Side Y: The height of the image (must be a multiple of 8)

- Batch Size: The number of images to generate (between 1 and 8)

- Upsample Temperature: The temperature to use for the upsampling stage

- Guidance Scale: The classifier-free guidance scale (between 4 and 16)

- Upsample Stage: Whether to use both the base and upsample models

- Timestep Respacing: The number of timesteps to use for base model sampling

- SR Timestep Respacing: The number of timesteps to use for upsample model sampling

Outputs

- Array of Image URLs: The generated images as a list of URLs
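The constraints listed above can be checked before a request is sent. The sketch below builds an input dictionary and rejects out-of-range values. The snake_case key names (`side_x`, `guidance_scale`, and so on) and the default values are assumptions for illustration, so confirm them against the API spec on Replicate before relying on them.

```python
from typing import Any, Dict, Optional


def build_pyglide_input(
    prompt: str,
    side_x: int = 64,  # illustrative default, not the documented one
    side_y: int = 64,  # illustrative default, not the documented one
    batch_size: int = 1,
    guidance_scale: float = 4.0,
    upsample_stage: bool = True,
    seed: Optional[int] = None,
) -> Dict[str, Any]:
    """Validate pyglide-style inputs and return them as a request dict.

    Key names are assumed snake_case versions of the documented inputs.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    for name, side in (("side_x", side_x), ("side_y", side_y)):
        # Both image sides must be positive multiples of 8.
        if side <= 0 or side % 8 != 0:
            raise ValueError(f"{name} must be a positive multiple of 8, got {side}")
    if not 1 <= batch_size <= 8:
        raise ValueError(f"batch_size must be between 1 and 8, got {batch_size}")
    if not 4 <= guidance_scale <= 16:
        raise ValueError(f"guidance_scale must be between 4 and 16, got {guidance_scale}")

    request: Dict[str, Any] = {
        "prompt": prompt,
        "side_x": side_x,
        "side_y": side_y,
        "batch_size": batch_size,
        "guidance_scale": guidance_scale,
        "upsample_stage": upsample_stage,
    }
    # Only send a seed when the caller wants reproducible output.
    if seed is not None:
        request["seed"] = seed
    return request
```

The resulting dictionary could then be passed as the `input` argument of `replicate.run` in Replicate's Python client, together with the model reference copied from the model page.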

Capabilities

pyglide can generate photorealistic images from text prompts. Like other text-to-image models, it covers a wide range of subjects, from realistic scenes to abstract concepts. Its faster PRK/PLMS sampling, together with the option to run the upsample model after the base model, makes it well suited to quick image generation.
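Part of the speed comes from sampling fewer steps than the model was trained with, which is what the Timestep Respacing and SR Timestep Respacing inputs control. The sketch below picks evenly spaced steps and is modeled on the single-section case of `space_timesteps` in OpenAI's GLIDE/guided-diffusion code. That pyglide respaces timesteps this way is an assumption, and `respace_timesteps` is a hypothetical name.

```python
from typing import List


def respace_timesteps(num_timesteps: int, count: int) -> List[int]:
    """Pick `count` roughly evenly spaced steps from range(num_timesteps).

    The first and last training steps are always kept; the steps in between
    are rounded to the nearest integer position.
    """
    if not 1 <= count <= num_timesteps:
        raise ValueError("count must be between 1 and num_timesteps")
    if count == 1:
        return [0]
    stride = (num_timesteps - 1) / (count - 1)
    return [round(i * stride) for i in range(count)]
```

For example, respacing a 1000-step schedule to 50 steps keeps steps 0 and 999 and spaces the rest about 20 steps apart, so the sampler calls the model 50 times instead of 1000.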

What can I use it for?

You can use pyglide for a variety of applications, such as creating illustrations, generating product images, designing book covers, or even producing concept art for games and movies. The model's speed and flexibility make it a valuable tool for creative professionals and hobbyists alike.

Things to try

One interesting thing to try with pyglide is experimenting with the guidance scale parameter. Higher values make the images follow the prompt more closely, usually at the cost of diversity, while lower values give more varied but less prompt-faithful results. You can also toggle the upsample stage to compare the quality and detail of the base model's output with the upsampled result.
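The classifier-free guidance used in GLIDE runs the model twice per step, once with the prompt and once with an empty prompt, and extrapolates from the unconditional prediction toward the conditional one: `eps_uncond + s * (eps_cond - eps_uncond)`, where `s` is the guidance scale. The elementwise sketch below works on plain lists. The function name is hypothetical and this is not pyglide's code.

```python
from typing import List


def classifier_free_guidance(
    eps_uncond: List[float], eps_cond: List[float], scale: float
) -> List[float]:
    """Return eps_uncond + scale * (eps_cond - eps_uncond), elementwise."""
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]
```

A scale of 1 returns the conditional prediction unchanged. Larger values exaggerate the difference between the two predictions, which is why high scales follow the prompt more literally.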

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

glid-3-xl


The glid-3-xl model is a text-to-image diffusion model created by the Replicate team. It is a finetuned version of the CompVis latent-diffusion model, with improvements for inpainting tasks. Compared to similar models like stable-diffusion, inkpunk-diffusion, and inpainting-xl, glid-3-xl focuses specifically on high-quality inpainting capabilities.

Model inputs and outputs

The glid-3-xl model takes a text prompt, an optional initial image, and an optional mask as inputs. It then generates a new image that matches the text prompt, while preserving the content of the initial image where the mask specifies. The outputs are one or more high-resolution images.

Inputs

- Prompt: The text prompt describing the desired image

- Init Image: An optional initial image to use as a starting point

- Mask: An optional mask image specifying which parts of the initial image to keep

Outputs

- Generated Images: One or more high-resolution images matching the text prompt, with the initial image content preserved where specified by the mask

Capabilities

The glid-3-xl model excels at generating high-quality images that match text prompts, while also allowing for inpainting of existing images. It can produce detailed, photorealistic illustrations as well as more stylized artwork. The inpainting capabilities make it useful for tasks like editing and modifying existing images.

What can I use it for?

The glid-3-xl model is well-suited for a variety of creative and generative tasks. You could use it to create custom illustrations, concept art, or product designs based on textual descriptions. The inpainting functionality also makes it useful for tasks like photo editing, object removal, and image manipulation. Businesses could leverage the model to generate visuals for marketing, product design, or even custom content creation.

Things to try

Try experimenting with different types of prompts to see the range of images the glid-3-xl model can generate. You can also play with the inpainting capabilities by providing an initial image and mask to see how the model can modify and enhance existing visuals. Additionally, try adjusting the various input parameters like guidance scale and aesthetic weight to see how they impact the output.
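To make the mask semantics concrete, the sketch below blends an initial image and a generated image pixel by pixel, keeping the initial image where the mask is 1. It is a generic illustration, not glid-3-xl's method: the model may instead condition the network on the masked image, and its actual mask convention (which value means "keep") is an assumption here. Images are plain nested lists, and `apply_keep_mask` is a hypothetical name.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def apply_keep_mask(init: Image, generated: Image, keep: Image) -> Image:
    """Blend two same-sized images pixel by pixel.

    keep = 1.0 keeps the initial image, keep = 0.0 takes the generated pixel,
    and values in between mix the two linearly.
    """
    if not (len(init) == len(generated) == len(keep)):
        raise ValueError("images and mask must have the same height")
    blended: Image = []
    for row_init, row_gen, row_keep in zip(init, generated, keep):
        if not (len(row_init) == len(row_gen) == len(row_keep)):
            raise ValueError("images and mask must have the same width")
        blended.append(
            [m * a + (1.0 - m) * b for a, b, m in zip(row_init, row_gen, row_keep)]
        )
    return blended
```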


clip-guided-diffusion


clip-guided-diffusion is an AI model that can generate images from text prompts. It works by using a CLIP (Contrastive Language-Image Pre-training) model to guide a denoising diffusion model during the image generation process. This allows the model to produce images that are semantically aligned with the input text. The model was created by afiaka87, who has also developed similar text-to-image models like sd-aesthetic-guidance and retrieval-augmented-diffusion.

Model inputs and outputs

clip-guided-diffusion takes text prompts as input and generates corresponding images as output. The model can also accept an initial image to blend with the generated output. The main input parameters include the text prompt, the image size, the number of diffusion steps, and the clip guidance scale.

Inputs

- Prompts: The text prompt(s) to use for image generation, with optional weights.

- Image Size: The size of the generated image, which can be 64, 128, 256, or 512 pixels.

- Timestep Respacing: The number of diffusion steps to use, which affects the speed and quality of the generated images.

- Clip Guidance Scale: The scale for the CLIP spherical distance loss, which controls how closely the generated image matches the text prompt.

Outputs

- Generated Images: The model outputs one or more images that match the input text prompt.

Capabilities

clip-guided-diffusion can generate a wide variety of images from text prompts, including scenes, objects, and abstract concepts. The model is particularly skilled at capturing the semantic meaning of the text and producing visually coherent and plausible images. However, the generation process can be relatively slow compared to other text-to-image models.

What can I use it for?

clip-guided-diffusion can be used for a variety of creative and practical applications, such as:

- Generating custom artwork and illustrations for personal or commercial use

- Prototyping and visualizing ideas before implementing them

- Enhancing existing images by blending them with text-guided generations

- Exploring and experimenting with different artistic styles and visual concepts

Things to try

One interesting aspect of clip-guided-diffusion is the ability to control the generated images through the use of weights in the text prompts. By assigning positive or negative weights to different components of the prompt, you can influence the model to emphasize or de-emphasize certain aspects of the output. This can be particularly useful for fine-tuning the generated images to match your specific preferences or requirements. Another useful feature is the ability to blend an existing image with the text-guided diffusion process. This can be helpful for incorporating specific visual elements or styles into the generated output, or for refining and improving upon existing images.
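The CLIP guidance scale described above weights a spherical distance loss between the CLIP embedding of the image being generated and that of the prompt. The sketch below uses the definition common in public CLIP-guided diffusion code: normalize both embeddings, then take half the squared angle between them. Whether this model uses exactly this form is an assumption, the function names are hypothetical, and real implementations operate on batched tensors rather than lists.

```python
import math
from typing import List


def _normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def spherical_dist_loss(x: List[float], y: List[float]) -> float:
    """Half the squared great-circle angle between two embeddings.

    Both vectors are projected onto the unit sphere; the chord length c between
    them gives the angle theta = 2 * asin(c / 2), and the loss is theta**2 / 2.
    """
    if len(x) != len(y):
        raise ValueError("embeddings must have the same dimension")
    x, y = _normalize(x), _normalize(y)
    chord = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))
    # Clamp to guard against rounding pushing c / 2 slightly above 1.
    return 2 * math.asin(min(1.0, chord / 2)) ** 2
```

Because both vectors are normalized first, the loss depends only on the direction of the embeddings, not their length. Weighted prompts can then be handled by summing each prompt's loss times its weight, with negative weights pushing the image away from that prompt.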


laionide-v4


laionide-v4 is a text-to-image model developed by Replicate user afiaka87. It is OpenAI's GLIDE model fine-tuned on a larger dataset to expand its capabilities. laionide-v4 generates images from text prompts and can also incorporate human and experimental style prompts. It builds on earlier iterations like laionide-v2 and laionide-v3, which also fine-tuned GLIDE on larger datasets. Its predecessor, pyglide, was an earlier GLIDE-based model with faster sampling.

Model inputs and outputs

laionide-v4 takes a text prompt describing the desired image and generates an image from it. The model supports additional parameters like batch size, guidance scale, and upsampling settings to customize the output.

Inputs

- Prompt: The text prompt describing the desired image

- Batch Size: The number of images to generate at once

- Guidance Scale: Controls the trade-off between fidelity to the prompt and variety in the output

- Image Size: The desired size of the generated image

- Upsampling: Whether to use a separate upsampling model to increase the resolution of the generated image

Outputs

- Image: The generated image based on the provided prompt and parameters

Capabilities

laionide-v4 can generate a wide variety of images from text prompts, including realistic scenes, abstract art, and surreal compositions. It performs well on prompts involving humans, objects, and experimental styles. The model can also produce higher-resolution images through its upsampling stage.

What can I use it for?

laionide-v4 can be useful for a variety of creative and artistic applications, such as generating images for digital art, illustrations, and concept design. It could also be used to create unique stock imagery or to explore novel visual ideas. With its ability to incorporate style prompts, the model could be particularly valuable for fashion, interior design, and other aesthetic-driven industries.

Things to try

One interesting aspect of laionide-v4 is its ability to generate images with human-like features and expressions. You could experiment with prompts that ask the model to depict people in different emotional states or engaging in various activities. Another possibility is to combine the model's text-to-image capabilities with its style prompts to create unique, genre-blending artworks.


glid-3-xl-stable


glid-3-xl-stable is a variant of the Stable Diffusion text-to-image AI model, developed by devxpy. It builds upon the original Stable Diffusion model by adding more powerful in-painting and out-painting capabilities. This allows users to seamlessly edit and extend existing images based on text prompts.

Model inputs and outputs

glid-3-xl-stable takes text prompts as input and generates high-quality images as output. It can also take an initial image and a mask as input to perform targeted in-painting or out-painting.

Inputs

- Prompt: The text prompt describing the desired image

- Init Image: An optional initial image to use as a starting point

- Mask: An optional mask image to define the area to be in-painted or out-painted

Outputs

- Generated Image(s): One or more images generated based on the input prompt and optional initial image and mask

Capabilities

In addition to the standard text-to-image generation capabilities of Stable Diffusion, glid-3-xl-stable offers enhanced in-painting and out-painting features. This allows users to seamlessly edit and extend existing images by providing a text prompt and a mask. The model can also perform classifier-guided generation to produce images that align with specific visual styles, such as anime, art, or photography.

What can I use it for?

glid-3-xl-stable can be used for a variety of applications, including:

- Creative Image Editing: Enhance or modify existing images by in-painting or out-painting based on text prompts.

- Conceptual Artwork Generation: Generate unique and imaginative artworks by providing text descriptions.

- Product Visualization: Create realistic product renderings by combining 3D models with text-based descriptions.

- Photo Editing and Manipulation: Remove unwanted elements from images or add new content based on text prompts.

Things to try

One interesting use case for glid-3-xl-stable is the ability to perform classifier-guided generation. By providing a text prompt along with a specific classifier (e.g., for anime, art, or photography), the model can generate images that align with the desired visual style. This can be particularly useful for creating conceptual artwork or for exploring different artistic interpretations of a given idea. Another compelling feature is the model's out-painting capability, which allows users to extend the canvas of an existing image. This can be useful for tasks such as creating panoramic images, generating larger-scale artwork, or expanding the background of a scene.
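Out-painting amounts to placing the original image on a larger canvas and asking the model to fill in the new border. The sketch below computes the enlarged canvas size and a mask marking which pixels are new. The convention that 1 means "generate" is an assumption, since glid-3-xl-stable's actual mask format may differ, and `outpaint_mask` is a hypothetical helper.

```python
from typing import List, Tuple


def outpaint_mask(
    width: int, height: int, left: int = 0, right: int = 0, top: int = 0, bottom: int = 0
) -> Tuple[int, int, List[List[int]]]:
    """Return the enlarged canvas size and a mask for out-painting.

    In the mask, 1 marks new pixels to generate and 0 marks pixels of the
    original image, which sits at offset (left, top) on the new canvas.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image size must be positive")
    if min(left, right, top, bottom) < 0:
        raise ValueError("padding must be non-negative")
    new_w = width + left + right
    new_h = height + top + bottom
    mask = [
        [
            0 if (top <= y < top + height and left <= x < left + width) else 1
            for x in range(new_w)
        ]
        for y in range(new_h)
    ]
    return new_w, new_h, mask
```

For a panorama, only `left` and `right` would be set, so the mask is 1 in the new side strips and 0 over the original image.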
