pytorch-animegan

Maintainer: ptran1203

30

| Property | Value |
|---|---|
| Model Link | View on Replicate |
| API Spec | View on Replicate |
| Github Link | View on Github |
| Paper Link | View on Arxiv |


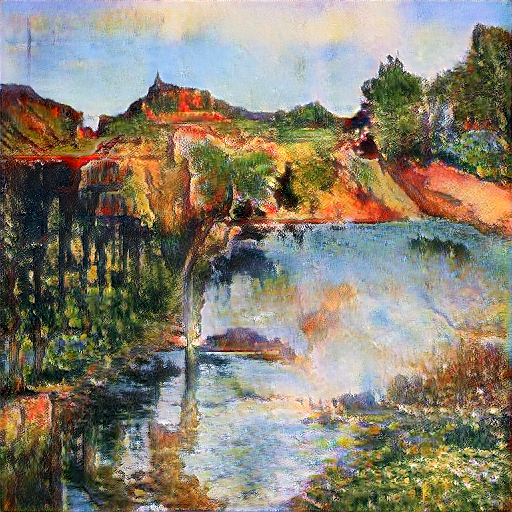

Model overview

The pytorch-animegan model is a PyTorch implementation of AnimeGAN, a lightweight Generative Adversarial Network (GAN) for fast photo animation. Maintained by ptran1203, it transforms natural photos into anime-style illustrations that reproduce the visual style of Japanese animation.

In contrast to similar models like real-esrgan, pytorch-animegan focuses specifically on photo-to-anime style transfer rather than general super-resolution or image enhancement. It follows the AnimeGAN paper, which is listed on Semantic Scholar, and the original TensorFlow implementation is available on GitHub.

Model inputs and outputs

Inputs

- Image: A natural photograph or digital image that the model will transform into an anime-style illustration.

- Model: The specific style of anime to apply to the input image, such as the "Hayao" style.

Outputs

- Transformed Image: The input image, with the specified anime style applied, resulting in an anime-like illustration.
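The inputs above can be sketched as a small Python helper that builds the request payload. This is a minimal sketch under stated assumptions, not the model's documented API: the field names `image` and `model` mirror the input labels in this summary, `build_animegan_input` and `run_on_replicate` are hypothetical helpers, and the model reference (including its version ID) must be copied from the model's Replicate page. The call to `replicate.run` requires the third-party `replicate` package and a `REPLICATE_API_TOKEN` environment variable.

```python
def build_animegan_input(image_path: str, model: str = "Hayao") -> dict:
    """Build the payload described above: an input image plus a style name.

    Assumption: the field names "image" and "model" follow this summary's
    input labels; check the API spec on Replicate for the real schema.
    """
    if not image_path:
        raise ValueError("image_path must be non-empty")
    if not model:
        raise ValueError("model (the anime style name) must be non-empty")
    return {"image": image_path, "model": model}


def run_on_replicate(payload: dict, model_ref: str):
    """Send the payload to Replicate (hypothetical wrapper).

    model_ref is a placeholder such as "owner/name:version-id"; it is not
    filled in here because the version ID is not given in this summary.
    """
    import replicate  # third-party client, imported lazily

    # The client accepts an open file handle for image inputs.
    with open(payload["image"], "rb") as image_file:
        return replicate.run(
            model_ref,
            input={"image": image_file, "model": payload["model"]},
        )


payload = build_animegan_input("photo.jpg")
print(payload)
```

Keeping payload construction separate from the network call makes it possible to check the inputs locally before spending an API request.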

Capabilities

The pytorch-animegan model transforms real-world photographs into anime-style illustrations that reflect the visual aesthetics of Japanese animation. It accepts a variety of input images, including landscapes, portraits, and general scenes.

What can I use it for?

The pytorch-animegan model is well-suited for a variety of creative and artistic applications, such as:

- Photo Editing and Illustration: Transform your personal photos into anime-style artworks, adding a unique and stylized touch to your digital creations.

- Content Creation: Easily create anime-inspired illustrations or backgrounds for your videos, games, or other multimedia projects.

- Cosplay and Fanart: Use the model to generate anime-style versions of your favorite characters or scenes, perfect for cosplay or fan art projects.

Things to try

One interesting aspect of the pytorch-animegan model is its ability to handle different anime styles, such as the "Hayao" style inspired by the work of renowned anime director Hayao Miyazaki. By experimenting with the available style options, you can explore how the model adapts to different visual aesthetics and discover new ways to apply the anime transformation to your images.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

gfpgan

74.0K

gfpgan is a practical face restoration algorithm developed by the Tencent ARC team. It leverages the rich and diverse priors encapsulated in a pre-trained face GAN (such as StyleGAN2) to perform blind face restoration on old photos or AI-generated faces. This approach contrasts with similar models like Real-ESRGAN, which focuses on general image restoration, or PyTorch-AnimeGAN, which specializes in anime-style photo animation.

Model inputs and outputs

gfpgan takes an input image and rescales it by a specified factor, typically 2x. The model can handle a variety of face images, from low-quality old photos to high-quality AI-generated faces.

Inputs

- Img: The input image to be restored

- Scale: The factor by which to rescale the output image (default is 2)

- Version: The gfpgan model version to use (v1.3 for better quality, v1.4 for more details and better identity)

Outputs

- Output: The restored face image

Capabilities

gfpgan can effectively restore a wide range of face images, from old, low-quality photos to high-quality AI-generated faces. It is able to recover fine details, fix blemishes, and enhance the overall appearance of the face while preserving the original identity.

What can I use it for?

You can use gfpgan to restore old family photos, enhance AI-generated portraits, or breathe new life into low-quality images of faces. The model's capabilities make it a valuable tool for photographers, digital artists, and anyone looking to improve the quality of their facial images. Additionally, the maintainer tencentarc offers an online demo on Replicate, allowing you to try the model without setting up the local environment.

Things to try

Experiment with different input images, varying the scale and version parameters, to see how gfpgan can transform low-quality or damaged face images into high-quality, detailed portraits. You can also try combining gfpgan with other models like Real-ESRGAN to enhance the background and non-facial regions of the image.


animeganv2

46

animeganv2 is a PyTorch-based implementation of the AnimeGANv2 model, which is a face portrait style transfer model capable of converting real-world facial images into an "anime-style" look. It was developed by the Replicate user 412392713, who has also created other similar models like VToonify. Compared to other face stylization models like GFPGAN and the original PyTorch AnimeGAN, animeganv2 aims to produce more refined and natural-looking "anime-fied" portraits.

Model inputs and outputs

The animeganv2 model takes a single input image and generates a stylized output image. The input can be any facial photograph, while the output will have an anime-inspired artistic look and feel.

Inputs

- image: The input facial photograph to be stylized

Outputs

- Output image: The stylized "anime-fied" portrait

Capabilities

The animeganv2 model can take real-world facial photographs and convert them into high-quality anime-style portraits. It produces results that maintain a natural look while adding distinctive anime-inspired elements like simplified facial features, softer skin tones, and stylized hair. The model is particularly adept at handling diverse skin tones, facial structures, and hairstyles.

What can I use it for?

The animeganv2 model can be used to quickly and easily transform regular facial photographs into anime-style portraits. This could be useful for creating unique profile pictures, custom character designs, or stylized portraits. The model's ability to work on a wide range of faces also makes it suitable for applications like virtual avatars, social media filters, and creative content generation.

Things to try

Experiment with the animeganv2 model on a variety of facial photographs, from close-up portraits to more distant shots. Try different input images to see how the model handles different skin tones, facial features, and hair styles. You can also compare the results to the original PyTorch AnimeGAN model to see the improvements in realism and visual quality.


animeganv3

2

AnimeGANv3 is a novel double-tail generative adversarial network developed by researcher Asher Chan for fast photo animation. It builds upon previous iterations of the AnimeGAN model, which aims to transform regular photos into anime-style art. Unlike AnimeGANv2, AnimeGANv3 introduces a more efficient architecture that can generate anime-style images at a faster rate. The model has been trained on various anime art styles, including the distinctive styles of directors Hayao Miyazaki and Makoto Shinkai.

Model inputs and outputs

AnimeGANv3 takes a regular photo as input and outputs an anime-style version of that photo. The model supports a variety of anime art styles, which can be selected as input parameters. In addition to photo-to-anime conversion, the model can also be used to animate videos, transforming regular footage into anime-style animations.

Inputs

- image: The input photo or video frame to be converted to an anime style.

- style: The desired anime art style, such as Hayao, Shinkai, Arcane, or Disney.

Outputs

- Output image/video: The input photo or video transformed into the selected anime art style.

Capabilities

AnimeGANv3 can produce high-quality, anime-style renderings of photos and videos with impressive speed and efficiency. The model's ability to capture the distinct visual characteristics of various anime styles, such as Hayao Miyazaki's iconic watercolor aesthetic or Makoto Shinkai's vibrant, detailed landscapes, sets it apart from previous iterations of the AnimeGAN model.

What can I use it for?

AnimeGANv3 can be a powerful tool for artists, animators, and content creators looking to quickly and easily transform their work into anime-inspired art. The model's versatility allows it to be applied to a wide range of projects, from personal photo edits to professional-grade animated videos. Additionally, the model's ability to convert photos and videos into different anime styles can be useful for filmmakers, game developers, and other creatives seeking to create unique, anime-influenced content.

Things to try

One exciting aspect of AnimeGANv3 is its ability to animate videos, transforming regular footage into stylized, anime-inspired animations. Users can experiment with different input videos and art styles to create unique, eye-catching results. Additionally, the model's wide range of supported styles, from the classic Hayao and Shinkai looks to more contemporary styles like Arcane and Disney, allows for a diverse array of creative possibilities.


fastgan-pytorch

3

fastgan-pytorch is a fast and stable GAN (Generative Adversarial Network) model developed by the AI researcher odegeasslbc for generating high-quality images from small and high-resolution datasets. It is based on the paper "Towards Faster and Stabilized GAN Training for High-fidelity Few-shot Image Synthesis" and aims to address challenges in training GANs on limited data. The model is capable of converging on 80% of the over 20 datasets with less than 100 images that it was tested on, although the specific dataset properties that lead to successful convergence are not yet clear.

Model inputs and outputs

fastgan-pytorch takes in a seed value and a variant parameter as inputs. The seed value is used to initialize the random number generator, allowing for reproducible results. The variant parameter specifies the model configuration to use, with the "art" variant being the default.

Inputs

- seed: An integer value used to initialize the random number generator, with -1 indicating a random seed.

- variant: The model configuration to use, with "art" being the default.

Outputs

The model generates high-quality images based on the provided inputs.

Capabilities

fastgan-pytorch is capable of generating high-fidelity images from small and high-resolution datasets, outperforming traditional GAN models in this setting. The model includes several advanced features, such as style-mixing, latent vector finding, and video generation from image interpolation.

What can I use it for?

fastgan-pytorch can be used for a variety of image generation tasks, particularly in scenarios where limited data is available. This could include generating art, product images, or other specialized content. The model's ability to work with small datasets makes it a valuable tool for individuals or companies with limited resources for image generation.

Things to try

Researchers and developers can experiment with tuning the model's hyperparameters, such as the augmentation options, model depth, and model width, to optimize performance on their specific datasets. The provided pre-trained models and scripts can also be used as a starting point for further exploration and development.
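The seed convention described for fastgan-pytorch (an integer seed, with -1 meaning "pick one at random") can be illustrated with a short Python sketch. The helper names `resolve_seed` and `build_fastgan_input` and the upper bound `max_seed` are assumptions made for illustration; they are not part of the model's code or its API.

```python
import random


def resolve_seed(seed: int = -1, max_seed: int = 2**32 - 1) -> int:
    """Return the seed to use; -1 means 'choose a random seed'.

    Assumption: max_seed is an arbitrary illustrative bound, not a limit
    documented by the model.
    """
    if seed == -1:
        return random.randint(0, max_seed)
    if seed < 0:
        raise ValueError("seed must be -1 or a non-negative integer")
    return seed


def build_fastgan_input(seed: int = -1, variant: str = "art") -> dict:
    """Build the two inputs named in this summary: seed and variant."""
    return {"seed": resolve_seed(seed), "variant": variant}


# A fixed seed is passed through unchanged, so the run can be repeated.
print(build_fastgan_input(seed=7))
# With the default -1, a concrete seed is chosen and can be logged for later reuse.
print(build_fastgan_input())
```

Resolving -1 on the caller's side, rather than passing it through, means the concrete seed is known and can be recorded alongside the generated image.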
