sdxl-gta-v

Maintainer: pwntus


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | No GitHub link provided |
| Paper link | No paper link provided |


Model Overview

sdxl-gta-v is a fine-tuned version of the SDXL (Stable Diffusion XL) model, trained on art from the video game Grand Theft Auto V. It was developed by pwntus, who has also created other models such as gfpgan, a face-restoration algorithm for old photos and AI-generated faces.

Model Inputs and Outputs

The sdxl-gta-v model accepts a text prompt, an optional input image for img2img or inpaint mode, and several settings that control generation. Each run produces one or more images, and you can adjust options such as the image size, guidance scale, and number of inference steps.

Inputs

- Prompt: The text prompt that describes the desired image

- Image: An input image for img2img or inpaint mode

- Mask: A mask for the inpaint mode, where black areas will be preserved and white areas will be inpainted

- Seed: A random seed value, which can be left blank to randomize the output

- Width/Height: The desired dimensions of the output image

- Num Outputs: The number of images to generate (up to 4)

- Scheduler: The denoising scheduler to use

- Guidance Scale: The scale for classifier-free guidance; higher values make the output follow the prompt more closely

- Num Inference Steps: The number of denoising steps to perform

- Prompt Strength: How strongly the prompt transforms the input image in img2img or inpaint mode; higher values change more of the original image

- Refine: The refiner style to use, such as expert_ensemble_refiner or base_image_refiner

- LoRA Scale: The additive scale for LoRA (only applicable on trained models)

- High Noise Frac: The fraction of the denoising schedule handled by the base model before the expert_ensemble_refiner takes over

- Apply Watermark: Whether to apply a watermark to the generated images
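The inputs above map naturally onto a request payload. The sketch below is a minimal, hypothetical helper that assembles and sanity-checks such a payload in Python. The snake_case key names (`prompt`, `num_outputs`, `high_noise_frac`, and so on), the `no_refiner` option, and all default values are assumptions rather than values read from the model's API spec, so compare them against the "View on Replicate" page before relying on them.

```python
from typing import Any, Dict, Optional

# Assumed option names: base_image_refiner and expert_ensemble_refiner appear in
# related SDXL model cards; "no_refiner" is a guess for "no refinement".
REFINE_STYLES = {"no_refiner", "expert_ensemble_refiner", "base_image_refiner"}


def build_gta_v_input(
    prompt: str,
    image: Optional[str] = None,
    mask: Optional[str] = None,
    seed: Optional[int] = None,
    width: int = 1024,
    height: int = 1024,
    num_outputs: int = 1,
    guidance_scale: float = 7.5,
    num_inference_steps: int = 50,
    prompt_strength: float = 0.8,
    refine: str = "no_refiner",
    high_noise_frac: float = 0.8,
    apply_watermark: bool = True,
) -> Dict[str, Any]:
    """Assemble a hypothetical input payload for sdxl-gta-v (defaults are illustrative)."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if not 1 <= num_outputs <= 4:
        raise ValueError("num_outputs must be between 1 and 4")
    if mask is not None and image is None:
        raise ValueError("a mask is only meaningful together with an input image (inpaint mode)")
    if refine not in REFINE_STYLES:
        raise ValueError(f"unknown refine style: {refine!r}")

    payload: Dict[str, Any] = {
        "prompt": prompt,
        "width": width,
        "height": height,
        "num_outputs": num_outputs,
        "guidance_scale": guidance_scale,
        "num_inference_steps": num_inference_steps,
        "refine": refine,
        "apply_watermark": apply_watermark,
    }
    if image is not None:
        # img2img or inpaint mode: prompt_strength only matters with an input image
        payload["image"] = image
        payload["prompt_strength"] = prompt_strength
    if mask is not None:
        payload["mask"] = mask
    if refine == "expert_ensemble_refiner":
        if not 0.0 <= high_noise_frac <= 1.0:
            raise ValueError("high_noise_frac must be in [0, 1]")
        payload["high_noise_frac"] = high_noise_frac
    if seed is not None:
        # Omitting the seed leaves randomization to the service
        payload["seed"] = seed
    return payload
```

The resulting dict could be passed as the `input=` argument of the Replicate Python client's `replicate.run()` call, together with the model identifier and version listed on the API spec page. Leaving `seed` out mirrors the behavior described above, where a blank seed randomizes the output.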

Outputs

- One or more output images generated based on the provided inputs

Capabilities

The sdxl-gta-v model generates GTA V-styled images from text prompts. It can also inpaint, filling the masked regions of an input image, and transform an existing image in img2img mode. Because it is fine-tuned on GTA V art, it captures the game's distinctive aesthetic, which makes it a useful tool for creators and artists working in the GTA V universe.
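Inpainting needs a mask in which, per the input description, black areas are preserved and white areas are repainted. As a minimal sketch using only the Python standard library, the hypothetical helpers below build a rectangular mask sized like the input image and write it as a binary PGM file. Whether the endpoint accepts PGM is an assumption; you may need to convert the file to PNG with an image library first.

```python
from pathlib import Path
from typing import List, Tuple

BLACK, WHITE = 0, 255  # black = keep, white = inpaint (per the input description)


def make_rect_mask(width: int, height: int, box: Tuple[int, int, int, int]) -> List[List[int]]:
    """Return a height x width grid that is white inside box=(left, top, right, bottom)."""
    left, top, right, bottom = box
    return [
        [WHITE if left <= x < right and top <= y < bottom else BLACK for x in range(width)]
        for y in range(height)
    ]


def write_pgm(rows: List[List[int]], path: str) -> None:
    """Write an 8-bit grayscale grid as a binary (P5) PGM file."""
    height, width = len(rows), len(rows[0])
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    Path(path).write_bytes(header + bytes(v for row in rows for v in row))
```

For example, `write_pgm(make_rect_mask(1024, 1024, (256, 512, 768, 1024)), "mask.pgm")` would mark the bottom-center region of a 1024x1024 image for repainting and leave the rest untouched.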

What Can I Use It For?

The sdxl-gta-v model could be used for projects such as promotional materials, fan art, or assets for GTA V-inspired games and mods. Its inpainting capability could also help restore or enhance existing GTA V artwork. The model may also be usable for more general image generation tasks.

Things to Try

Try varying your prompts to capture specific parts of the GTA V universe, such as locations, vehicles, or characters. You could also use the inpainting feature to modify existing GTA V-themed images or to composite different game elements seamlessly. Adjusting settings such as the guidance scale or the number of inference steps can lead to unique and unexpected results.
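One way to explore these settings systematically is to hold the prompt and seed fixed and vary only the guidance scale and step count, so that differences between outputs come from the parameters rather than from a new random seed. The sketch below only builds the list of input dicts; the key names are assumed, and each dict would still need to be submitted as a separate prediction.

```python
from itertools import product
from typing import Any, Dict, List, Sequence


def sweep_inputs(
    prompt: str,
    seed: int,
    guidance_scales: Sequence[float],
    step_counts: Sequence[int],
) -> List[Dict[str, Any]]:
    """Build one input dict per (guidance_scale, num_inference_steps) pair with a fixed seed."""
    return [
        {"prompt": prompt, "seed": seed, "guidance_scale": g, "num_inference_steps": n}
        for g, n in product(guidance_scales, step_counts)
    ]
```

Sweeping two values of each setting already yields four images that can be compared side by side; widening the grid multiplies the number of predictions, so small grids are usually a sensible start.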

This summary was produced with help from an AI and may contain inaccuracies. Check out the links to read the original source documents!

Related Models

gta5_artwork_diffusion


The gta5_artwork_diffusion model is a Stable Diffusion-based AI model trained on artwork from the Grand Theft Auto V (GTA V) video game. It was developed by the Replicate creator cjwbw. This model can generate images in the distinct visual style of GTA V, including characters, backgrounds, and objects from the game. It builds upon the capabilities of the Stable Diffusion model and uses the Dreambooth technique to fine-tune the model on GTA V artwork. Similar models like GTA5_Artwork_Diffusion and sdxl-gta-v also leverage the GTA V art style, but this model is specifically trained using Dreambooth.

Model inputs and outputs

The gta5_artwork_diffusion model takes in a text prompt as input and generates one or more images based on that prompt. The input prompt can describe the desired content, style, and other characteristics of the output image. The model also accepts parameters to control aspects like the image size, number of outputs, and the denoising process.

Inputs

- Prompt: A text description of the desired image content and style
- Negative Prompt: Text describing elements that should not be included in the output image
- Seed: A random seed value to control image generation
- Width and Height: The desired dimensions of the output image
- Num Outputs: The number of images to generate
- Guidance Scale: The strength of the prompt guidance during image generation
- Prompt Strength: The strength of the prompt when using an init image
- Num Inference Steps: The number of denoising steps to perform during generation

Outputs

- Image(s): One or more images generated based on the input prompt and parameters

Capabilities

The gta5_artwork_diffusion model can generate a wide variety of images in the distinctive visual style of GTA V. This includes portraits of characters, detailed landscapes and environments, and various objects and vehicles from the game. The model is particularly adept at capturing the gritty, realistic aesthetic of GTA V, with the ability to depict characters, settings, and props that closely resemble the game's art.

What can I use it for?

The gta5_artwork_diffusion model can be used for a variety of creative and entertainment-focused applications. Artists and designers may find it helpful for generating concept art, character designs, or illustrations inspired by the GTA V universe. Hobbyists and enthusiasts could use the model to create custom fan art or content related to the game. Additionally, the model's capabilities could be leveraged for visual effects, animations, or other multimedia projects that require content in the GTA V style.

Things to try

One interesting aspect of the gta5_artwork_diffusion model is its ability to blend the realism of GTA V's art with more fantastical or exaggerated elements. By experimenting with the prompts and parameters, users can explore how the model handles requests for characters, scenes, or objects that push the boundaries of the game's typical visual style. This could lead to unique and imaginative GTA V-inspired artwork that goes beyond the scope of the original game.
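The Guidance Scale input controls classifier-free guidance. In the standard formulation, the model predicts noise twice per step, once without the prompt and once with it, and the two predictions are blended. The sketch below shows that blend on toy lists standing in for the noise tensors; it illustrates the general technique and is not code taken from this model.

```python
from typing import List, Sequence


def classifier_free_guidance(
    uncond: Sequence[float], cond: Sequence[float], guidance_scale: float
) -> List[float]:
    """Blend two noise predictions element-wise:
    guided = uncond + guidance_scale * (cond - uncond)."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]
```

A scale of 1 returns the prompt-conditioned prediction unchanged, while larger values push further along the direction from the unconditional to the conditional prediction, which is why higher guidance scales tend to follow the prompt more literally.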


sdxl-woolitize


The sdxl-woolitize model is a fine-tuned version of the SDXL (Stable Diffusion XL) model, created by the maintainer pwntus. Its style is based on felted wool, a material that gives the generated images a distinctive textured appearance. Similar models like woolitize and sdxl-color explore other artistic styles and materials.

Model inputs and outputs

The sdxl-woolitize model takes a variety of inputs, including a prompt, an image, a mask, and various parameters to control the output. It generates one or more output images based on the provided inputs.

Inputs

- Prompt: The text prompt describing the desired image
- Image: An input image for img2img or inpaint mode
- Mask: An input mask for inpaint mode, where black areas will be preserved and white areas will be inpainted
- Width/Height: The desired width and height of the output image
- Seed: A random seed value to control the output
- Refine: The refine style to use
- Scheduler: The scheduler algorithm to use
- LoRA Scale: The LoRA additive scale (only applicable on trained models)
- Num Outputs: The number of images to generate
- Refine Steps: The number of steps to refine the image (for base_image_refiner)
- Guidance Scale: The scale for classifier-free guidance
- Apply Watermark: Whether to apply a watermark to the generated image
- High Noise Frac: The fraction of noise to use (for expert_ensemble_refiner)
- Negative Prompt: An optional negative prompt to guide the image generation

Outputs

- Image(s): One or more generated images in the specified size

Capabilities

The sdxl-woolitize model generates images with a felted-wool texture. This style suits a wide range of artistic and whimsical images, from fantastical creatures to abstract compositions.

What can I use it for?

The sdxl-woolitize model could be used for a variety of creative projects, such as generating concept art, illustrations, or even textiles and fashion designs. The distinct felted-wool aesthetic could be particularly appealing for children's books, fantasy-themed projects, or any application where a handcrafted, organic look is desired.

Things to try

Experiment with different prompt styles and modifiers to see how the model responds. Try combining the sdxl-woolitize model with other fine-tuned models, such as sdxl-gta-v or sdxl-deep-down, to create hybrid styles. You can also probe the model's limits with challenging or abstract prompts and see how it handles them.
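The two refiner-specific inputs apply to different refine styles: High Noise Frac to expert_ensemble_refiner and Refine Steps to base_image_refiner. The sketch below estimates how many steps each stage would run. It assumes the model follows the diffusers "ensemble of expert denoisers" pattern, in which the base model handles the first `high_noise_frac` of the schedule and the refiner finishes the rest, and it assumes base_image_refiner runs a separate refiner pass of `refine_steps` steps after a full base pass. The function name is hypothetical, and exact counts can differ slightly depending on the scheduler's timestep spacing.

```python
from typing import Optional, Tuple


def split_steps(
    num_inference_steps: int,
    refine: str = "no_refiner",
    high_noise_frac: float = 0.8,
    refine_steps: Optional[int] = None,
) -> Tuple[int, int]:
    """Estimate (base model steps, refiner steps) for a given refine style."""
    if refine == "no_refiner":
        return num_inference_steps, 0
    if refine == "expert_ensemble_refiner":
        # Base denoises the high-noise part of the schedule, the refiner finishes it
        if not 0.0 <= high_noise_frac <= 1.0:
            raise ValueError("high_noise_frac must be in [0, 1]")
        base = int(round(num_inference_steps * high_noise_frac))
        return base, num_inference_steps - base
    if refine == "base_image_refiner":
        # Assumption: a full base pass, then a separate refiner pass of refine_steps
        if refine_steps is None:
            raise ValueError("base_image_refiner needs refine_steps")
        return num_inference_steps, refine_steps
    raise ValueError(f"unknown refine style: {refine!r}")
```

Under these assumptions, 50 inference steps with a High Noise Frac of 0.8 would split into roughly 40 base steps and 10 refiner steps.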


material-diffusion-sdxl


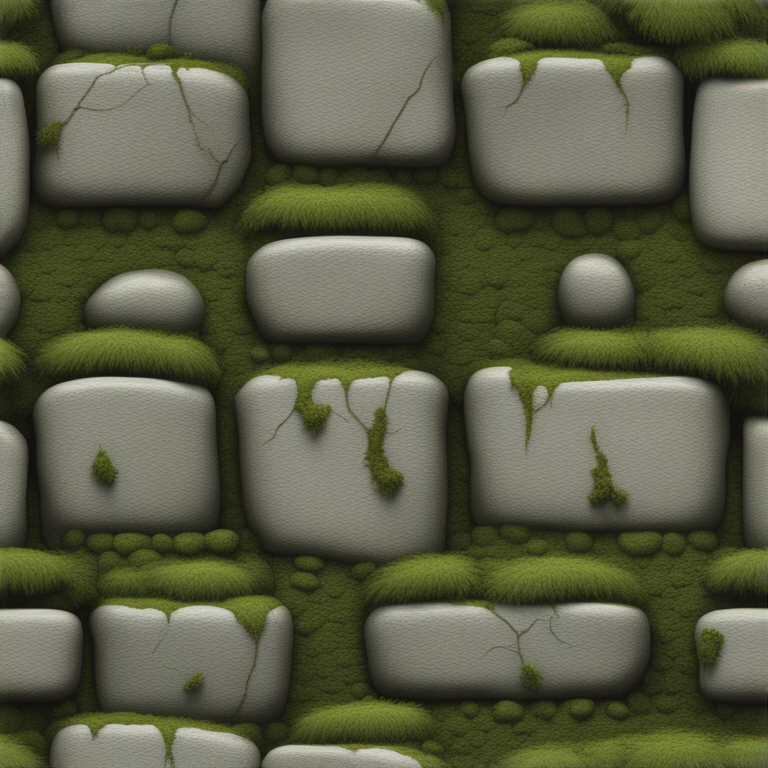

material-diffusion-sdxl is a Stable Diffusion XL model developed by pwntus that outputs tileable images for use in 3D applications such as Monaverse. It builds upon the Diffusers Stable Diffusion XL model by optimizing the output for seamless tiling. This can be useful for creating textures, patterns, and seamless backgrounds for 3D environments and virtual worlds.

Model inputs and outputs

The material-diffusion-sdxl model takes a variety of inputs to control the generation process, including a text prompt, image size, number of outputs, and more. The outputs are URLs pointing to the generated image(s).

Inputs

- Prompt: The text prompt that describes the desired image
- Negative Prompt: Text to guide the model away from certain outputs
- Width/Height: The dimensions of the generated image
- Num Outputs: The number of images to generate
- Num Inference Steps: The number of denoising steps to use during generation
- Guidance Scale: The scale for classifier-free guidance
- Seed: A random seed to control the generation process
- Refine: The type of refiner to use on the output
- Refine Steps: The number of refine steps to use
- High Noise Frac: The fraction of noise to use for the expert ensemble refiner
- Apply Watermark: Whether to apply a watermark to the generated images

Outputs

- Image URLs: A list of URLs pointing to the generated images

Capabilities

The material-diffusion-sdxl model is capable of generating high-quality, tileable images across a variety of subjects and styles. It can be used to create seamless textures, patterns, and backgrounds for 3D environments and virtual worlds. The model's ability to output images in a tileable format sets it apart from more general text-to-image models like Stable Diffusion.

What can I use it for?

The material-diffusion-sdxl model can be used to generate tileable textures, patterns, and backgrounds for 3D applications, virtual environments, and other visual media. This can be particularly useful for game developers, 3D artists, and designers who need to create seamless and repeatable visual elements. The model can also be fine-tuned on specific materials or styles to create custom assets, as demonstrated by the sdxl-woolitize model.

Things to try

Experiment with different prompts and input parameters to see the variety of tileable images the material-diffusion-sdxl model can generate. Try prompts that describe specific materials, patterns, or textures to see how the model responds. You can also try using the model in combination with other tools and techniques, such as 3D modeling software or image editing programs, to create unique and visually striking assets for your projects.
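When judging whether a generated texture really tiles, a quick numeric check can complement viewing a repeated copy. The sketch below is a hypothetical heuristic, not part of the model: it takes a grayscale image as a grid of numbers (loading the pixels is left to whatever image library you use) and compares the jump across the wrap-around seams with the typical jump between neighboring pixels inside the tile.

```python
from typing import List, Tuple

Grid = List[List[float]]


def seam_vs_interior(pixels: Grid) -> Tuple[float, float]:
    """Return (mean seam difference, mean interior neighbor difference).

    When a tile repeats, its last column sits next to its first column and its
    last row sits next to its first row. In a seamless tile the jump across those
    wrap-around seams should be about as small as the jump between ordinary
    neighboring pixels.
    """
    h, w = len(pixels), len(pixels[0])
    if h < 2 or w < 2:
        raise ValueError("need at least a 2x2 grid")
    # Differences across the horizontal and vertical wrap-around seams
    seam = [abs(row[-1] - row[0]) for row in pixels]
    seam += [abs(pixels[-1][x] - pixels[0][x]) for x in range(w)]
    # Differences between adjacent pixels inside the tile
    interior = [abs(row[x + 1] - row[x]) for row in pixels for x in range(w - 1)]
    interior += [abs(pixels[y + 1][x] - pixels[y][x]) for y in range(h - 1) for x in range(w)]
    return sum(seam) / len(seam), sum(interior) / len(interior)
```

A seam difference well above the interior difference, as with a plain left-to-right gradient, suggests the texture will show a visible edge when repeated.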


sdxl-mk1


The sdxl-mk1 model is designed to generate Mortal Kombat 1 fighters and character skins. It is a specialized model created by asronline that is similar to other SDXL-based models like mk1-redux, masactrl-sdxl, sdxl-akira, and sdxl-mascot-avatars. These models offer a range of capabilities, from generating classic Mortal Kombat fighters to producing cute mascot avatars.

Model inputs and outputs

The sdxl-mk1 model accepts a variety of inputs, including a prompt, an image, and various parameters to control the output. The outputs are generated images depicting Mortal Kombat 1 fighters and character skins.

Inputs

- Prompt: The input prompt that describes the desired output image.
- Image: An input image that can be used as a starting point for the generation process.
- Mask: An input mask that defines which areas of the image should be preserved and which should be inpainted.
- Seed: A random seed value that controls the randomness of the generated output.
- Width and Height: The desired dimensions of the output image.
- Refine: The refinement style to use when generating the output.
- Scheduler: The scheduler algorithm to use when generating the output.
- LoRA Scale: The additive scale for LoRA (Low-Rank Adaptation) weights.
- Num Outputs: The number of output images to generate.
- Refine Steps: The number of refinement steps to perform.
- Guidance Scale: The scale factor for classifier-free guidance.
- Apply Watermark: A flag that controls whether a watermark is applied to the output images.
- High Noise Frac: The fraction of high noise to use for expert ensemble refinement.
- Negative Prompt: An optional negative prompt to guide the generation process.
- Prompt Strength: The strength of the input prompt when using image-to-image or inpainting.
- Num Inference Steps: The number of denoising steps to perform during the generation process.

Outputs

- Output Images: The generated Mortal Kombat 1 fighter and character skin images.

Capabilities

The sdxl-mk1 model is capable of generating high-quality images of Mortal Kombat 1 fighters and character skins. It can produce a wide variety of characters and styles, and the input parameters allow the output to be tuned to specific preferences.

What can I use it for?

The sdxl-mk1 model can be used to create custom Mortal Kombat 1-inspired artwork, character designs, or fan projects. Potential use cases include generating content for games, websites, social media, or other Mortal Kombat-themed applications. The model could also be used to create marketing materials or merchandise for Mortal Kombat fans.

Things to try

With the sdxl-mk1 model, you can experiment with different prompts, input images, and parameter settings to see how they affect the generated output. Try describing specific Mortal Kombat characters or themes, or use the image-to-image and inpainting capabilities to refine or modify existing Mortal Kombat-inspired artwork.
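Prompt Strength also interacts with Num Inference Steps in image-to-image and inpainting mode. In diffusers-style img2img pipelines, the input image is first noised to a level set by the strength value, and only the remaining part of the schedule is denoised, so fewer steps actually run than requested. The sketch below reproduces that convention under the assumption that these SDXL models behave the same way; the function name is hypothetical.

```python
def effective_img2img_steps(num_inference_steps: int, prompt_strength: float) -> int:
    """Approximate number of denoising steps actually run in img2img/inpaint mode.

    Follows the diffusers img2img convention, where only the last
    int(num_inference_steps * strength) steps of the schedule are run
    (assumption: the model wraps a pipeline that behaves this way).
    """
    if not 0.0 <= prompt_strength <= 1.0:
        raise ValueError("prompt_strength must be in [0, 1]")
    return min(int(num_inference_steps * prompt_strength), num_inference_steps)
```

Under this convention, 50 inference steps at a Prompt Strength of 0.8 would run about 40 denoising steps, and lowering the strength both preserves more of the input image and shortens the run.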
