Dribnet

Models by this creator

pixray-text2image

223

pixray-text2image is a powerful image generation system that combines various techniques, including perception engines, CLIP-guided GAN imagery, and methods for navigating latent space. Developed by the maintainer dribnet, pixray-text2image is capable of generating images from text prompts, with similarities to models like dreamshaper, All-In-One-Pixel-Model, and majicmix. However, pixray-text2image offers its own unique approach and capabilities.

Model inputs and outputs

pixray-text2image takes a text prompt as input and generates an image as output. The model can be customized with various settings, such as the rendering engine ("drawer") and additional settings in a "name: value" format.

Inputs

- **Prompts**: The text prompt that describes the desired image, such as "Cairo skyline at sunset."
- **Drawer**: The rendering engine to use, with a default of "vqgan."
- **Settings**: Additional settings in a "name: value" format, such as custom loss functions.

Outputs

- **Image**: The generated image, returned as an array of image URIs.

Capabilities

pixray-text2image can generate a wide range of images, from photorealistic scenes to abstract and stylized art. The model's flexible architecture allows for customization and experimentation, making it a versatile tool for creative endeavors.

What can I use it for?

pixray-text2image can be used for a variety of applications, such as generating concept art, designing illustrations, or creating visual assets for games, movies, or other media. The model's ability to translate text prompts into visual outputs makes it a valuable tool for artists, designers, and content creators who want to quickly explore and iterate on their ideas.

Things to try

One interesting aspect of pixray-text2image is its ability to combine various techniques, including perception engines, CLIP-guided GAN imagery, and latent space navigation. Users can experiment with different settings and loss functions to see how they affect the generated images, unlocking new creative possibilities.
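
The prompt, drawer, and "name: value" settings inputs described for pixray-text2image could be assembled roughly as follows. This is a minimal sketch, not the model's confirmed schema: the lowercase key names (`prompts`, `drawer`, `settings`), the helper functions, and the example setting names `quality` and `iterations` are all assumptions made for illustration. Only the "vqgan" default and the "name: value" format come from the description.

```python
def format_settings(settings):
    """Join a mapping into newline-separated "name: value" lines."""
    return "\n".join(f"{name}: {value}" for name, value in settings.items())


def build_text2image_input(prompt, drawer="vqgan", settings=None):
    """Assemble a hypothetical input payload for pixray-text2image.

    The key names are assumed lowercase versions of the input labels
    (Prompts, Drawer, Settings); they are not a confirmed schema.
    """
    payload = {"prompts": prompt, "drawer": drawer}
    if settings:
        # Settings are passed as text in "name: value" form, one per line.
        payload["settings"] = format_settings(settings)
    return payload


# "quality" and "iterations" are hypothetical setting names.
example = build_text2image_input(
    "Cairo skyline at sunset.",
    settings={"quality": "better", "iterations": 200},
)
print(example)
```

The resulting dictionary would then be handed to whatever client is used to run the model; that step is left out here because it depends on the hosting setup.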

Updated 9/18/2024

pixray-genesis

160

pixray-genesis is a generative AI model created by dribnet. It is similar to other models like pixray, pixray-text2image, redshift-diffusion, and stable-diffusion. These models are all capable of generating detailed, photorealistic images from text prompts.

Model inputs and outputs

The pixray-genesis model takes a few inputs to generate an output. These include a title, a quality setting, and optional settings. The output is an array of image URLs.

Inputs

- **Title**: A text string that describes the desired image
- **Quality**: The quality setting for the generated image, with options like "draft" and "final"
- **Optional Settings**: Additional settings that can be used to customize the image generation process

Outputs

- An array of image URLs, representing the generated images

Capabilities

The pixray-genesis model is capable of generating a wide variety of photorealistic images from text prompts. It can create images of people, animals, landscapes, and more. The model is particularly adept at generating detailed, high-quality images that are difficult to distinguish from real-world photographs.

What can I use it for?

The pixray-genesis model can be used for a variety of applications, such as content creation, marketing, and design. For example, you could use it to generate images for blog posts, social media campaigns, or product mockups. You could also use it to create custom artwork or illustrations.

Things to try

One interesting thing to try with the pixray-genesis model is experimenting with different input prompts to see how the model interprets and generates the images. You could try prompts that are more specific or abstract, and see how the model responds. Additionally, you could try adjusting the optional settings to see how they affect the generated images.

Updated 9/18/2024

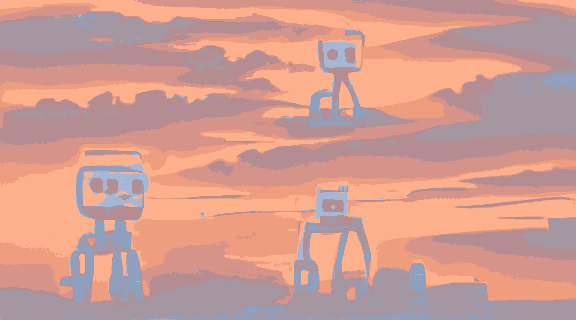

pixray-text2pixel-0x42

148

pixray-text2pixel-0x42 is a text-to-image AI model developed by the creator dribnet. It uses the pixray system to generate pixel art images from text prompts. pixray-text2pixel-0x42 builds on previous work in image generation, combining ideas from Perception Engines, CLIP-guided GAN imagery, and techniques for navigating latent space. This model can be used to turn any text description into a unique pixel art image.

Model inputs and outputs

pixray-text2pixel-0x42 takes in text prompts as input and generates pixel art images as output. The model can handle a variety of prompts, from specific descriptions to more abstract concepts.

Inputs

- **Prompts**: A text description of what to draw, such as "Robots skydiving high above the city".
- **Aspect**: The aspect ratio of the output image, with options for widescreen, square, or portrait.
- **Quality**: The trade-off between speed and quality of the generated image, with options for draft, normal, better, and best.

Outputs

- **Image files**: The generated pixel art images.
- **Metadata**: Text descriptions or other relevant information about the generated images.

Capabilities

pixray-text2pixel-0x42 can turn a wide range of text prompts into unique pixel art images. For example, it could generate an image of "an extremely hairy panda bear" or "sunrise over a serene lake". The model's capabilities extend beyond just realistic scenes, and it can also handle more abstract or fantastical prompts.

What can I use it for?

With pixray-text2pixel-0x42, you can generate custom pixel art for a variety of applications, such as:

- Creating unique artwork and illustrations for personal or commercial projects
- Generating pixel art assets for retro-style games or digital experiences
- Experimenting with different text prompts to explore the model's capabilities and generate novel, imaginative imagery

Things to try

One interesting aspect of pixray-text2pixel-0x42 is its ability to capture nuanced details in the generated pixel art. For example, try prompts that combine contrasting elements, like "a tiny spaceship flying through a giant forest" or "a fluffy kitten made of metal". Explore how the model translates these kinds of descriptions into cohesive pixel art compositions.
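
Because the aspect and quality inputs of pixray-text2pixel-0x42 accept a fixed set of options, it can help to check them before submitting a request. The sketch below is illustrative only: the option lists come from the description, while the lowercase key names, the function name, and the default values ("square" and "normal") are assumptions rather than the model's documented schema.

```python
# Option lists taken from the description of pixray-text2pixel-0x42.
ASPECTS = ("widescreen", "square", "portrait")
QUALITIES = ("draft", "normal", "better", "best")


def build_text2pixel_input(prompt, aspect="square", quality="normal"):
    """Validate the options and return a hypothetical input payload.

    The defaults and key names are assumptions made for this sketch.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if aspect not in ASPECTS:
        raise ValueError(f"aspect must be one of {ASPECTS}, got {aspect!r}")
    if quality not in QUALITIES:
        raise ValueError(f"quality must be one of {QUALITIES}, got {quality!r}")
    return {"prompts": prompt, "aspect": aspect, "quality": quality}


payload = build_text2pixel_input(
    "Robots skydiving high above the city",
    aspect="widescreen",
    quality="draft",  # faster, lower-quality setting for quick iteration
)
print(payload)
```

Starting with "draft" and moving to a slower setting once a prompt looks promising is one way to use the speed and quality trade-off described above.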

Updated 9/17/2024

pixray-text2pixel

103

The pixray-text2pixel model is an AI tool created by Replicate user dribnet that turns any text description into pixel art. This model is part of the broader pixray family of models, which also includes tools like pixray-tiler for creating wallpaper tiles and 8bidoug for generating 24x24 pixel art. The pixray-text2image model is also related, generating images directly from text prompts.

Model inputs and outputs

The pixray-text2pixel model takes a text prompt as its main input, which can describe anything from a specific scene to a general concept. You can also adjust the aspect ratio (wide or narrow) and the pixel scale to control the output. The model then generates a set of images that visually represent the provided text prompt in a pixelated art style.

Inputs

- **Prompts**: The text prompt describing what you want the model to generate
- **Aspect**: The aspect ratio of the output, either "wide" or "narrow"
- **Pixel Scale**: The size of the pixels in the output, ranging from 0.5 to 2

Outputs

- A set of images in a pixelated art style representing the input text prompt

Capabilities

The pixray-text2pixel model is versatile, allowing you to create unique pixel art from simple text descriptions. Whether you want to generate a pixelated skyline, a retro-inspired character, or an abstract design, this model can render your ideas in an 8-bit-inspired visual style.

What can I use it for?

The pixray-text2pixel model has a wide range of potential applications. You could use it to quickly generate pixel art assets for video games, create unique social media avatars or backgrounds, or produce pixel art illustrations for blog posts or other creative projects. The model's ability to translate text into visuals also makes it useful for designers, artists, and anyone who wants to turn an idea into an image quickly.

Things to try

One interesting thing to try with the pixray-text2pixel model is experimenting with different text prompts to see the variety of pixel art styles it can generate. You could also play with the aspect ratio and pixel scale settings to create different looks and see how they impact the output. Additionally, you might try combining the pixray-text2pixel model with other pixray tools, like pixray-tiler, to create more complex and layered pixel art compositions.

Updated 9/18/2024

pixray-vqgan

87

pixray-vqgan is an AI-powered image generation system developed by dribnet. It combines previous ideas like Perception Engines, which use image augmentation and iterative optimization against an ensemble of classifiers, as well as CLIP-guided GAN imagery and techniques for navigating latent space. pixray-vqgan is a Python library and command-line utility that can also be run in Google Colab notebooks. It is similar to other models created by dribnet, such as pixray, clipit, 8bidoug, pixray-api, and pixray-text2image.

Model inputs and outputs

pixray-vqgan takes text prompts as input and generates images as output. The model can be configured with various input parameters, such as the desired aspect ratio, image quality, and the text prompt itself.

Inputs

- **Prompts**: Text prompts that describe the desired image
- **Aspect**: The aspect ratio of the generated image, with options like "widescreen" or "square"
- **Quality**: The quality level of the generated image, with options like "normal" or "better" (which is slower)

Outputs

- The model generates one or more images based on the provided inputs

Capabilities

pixray-vqgan can create a wide variety of images based on text prompts, ranging from photorealistic scenes to abstract, surreal, or stylized visuals. The model is particularly adept at generating images with a distinctive visual style, such as pixel art or illustrations. It can be used to quickly generate sample images for creative projects, explore concepts, or test ideas.

What can I use it for?

pixray-vqgan can be used for a variety of creative and experimental purposes, such as:

- Generating concept art or illustrations for visual design projects
- Exploring abstract or surreal visual ideas
- Creating pixel art or other stylized images
- Generating sample images for user interface or product mockups
- Experimenting with different artistic styles and visual aesthetics

Things to try

One interesting aspect of pixray-vqgan is its ability to navigate the latent space of the model, allowing for subtle variations and refinements of generated images. Users can experiment with adjusting the input parameters, such as the aspect ratio or image quality, to see how they affect the output. Additionally, the model's incorporation of various techniques like CLIP-guided GAN imagery and latent space navigation can lead to unexpected and fascinating results, making it a valuable tool for creative exploration and experimentation.

Updated 9/18/2024

pixray

59

pixray is an image generation system that combines previous ideas from Perception Engines, CLIP-guided GAN imagery, and other techniques. It allows users to generate images based on text prompts, with capabilities for pixel art, photorealistic, and other styles. pixray can be run in Docker using Cog, and there are demo notebooks available to get started. Similar models include ControlNet-Scribble for generating detailed images from scribbled drawings, Realistic Vision V3 Inpainting for realistic image inpainting, and Stable Diffusion for generating photo-realistic images from text prompts.

Model inputs and outputs

pixray takes two main inputs: prompts, which are the text descriptions used to generate the image, and optional settings, which allow customizing the generation process. The outputs are one or more generated images.

Inputs

- **Prompts**: The text prompts describing the desired image, such as "Manhattan skyline at sunset. #pixelart"
- **Settings**: Optional YAML settings to customize the image generation

Outputs

- **Generated images**: One or more images generated based on the provided prompts and settings

Capabilities

pixray can generate a wide variety of image styles, from pixel art to photorealistic. It combines techniques like image augmentation, CLIP-guided optimization, and latent space navigation to produce high-quality, customized images from text prompts.

What can I use it for?

You can use pixray to create custom images for various applications, such as game assets, illustrations, concept art, or even product mockups. The ability to generate images from text prompts can streamline the creative process and allow for rapid experimentation. Users with the Replicate creator profile have also found success in monetizing their work with pixray.

Things to try

One interesting aspect of pixray is its ability to produce pixel art images. You could experiment with prompts that incorporate pixel art hashtags or styles to see the unique results. Additionally, you could try combining pixray with other models, such as ControlNet-Scribble, to generate images with specific characteristics or effects.

Updated 9/18/2024

pixray-api

29

pixray-api is an image generation system developed by dribnet. It combines previous ideas from various AI research, including Perception Engines, CLIP-guided GAN imagery, and CLIPDraw. The model is similar to other Replicate models like pixray, pixray-text2image, and pixray-tiler, as well as controlnet-scribble and stable-diffusion, all of which focus on generating or manipulating images.

Model inputs and outputs

pixray-api takes a YAML-formatted string as input, which contains the settings for the image generation process. The model then outputs an array of image URLs, representing the generated images.

Inputs

- **Settings**: A string containing YAML-formatted settings to control the image generation process

Outputs

- **Images**: An array of image URLs representing the generated images

Capabilities

pixray-api can generate a wide variety of images based on the settings provided. The model can create pixel art, abstract art, and photorealistic images, among other styles. It uses techniques like iterative optimization against an ensemble of classifiers to create the desired images.

What can I use it for?

You can use pixray-api to generate unique and visually interesting images for a variety of purposes, such as art projects, video game assets, or social media content. The model's flexibility allows you to experiment with different styles and settings to create images that fit your specific needs.

Things to try

Try experimenting with different settings in the YAML input to see how they affect the generated images. You can also try combining pixray-api with other image manipulation or generation tools to create even more complex and interesting visuals.

Updated 9/18/2024

pixray-tiler

21

The pixray-tiler model is an AI tool developed by dribnet that turns any text description into a wallpaper. Unlike similar models such as pixray and pixray-text2image, which generate standalone images from text, pixray-tiler focuses on creating seamless, repeating tile patterns that can be used as wallpapers or backgrounds.

Model inputs and outputs

The pixray-tiler model takes a few key inputs to generate its tiled outputs. Users can provide a text prompt to describe the desired pattern, toggle pixelart mode for a retro 8-bit style, mirror the pattern, and customize the settings in YAML format.

Inputs

- **Prompts**: Text prompt describing the desired tiled pattern
- **Pixelart**: Toggle a retro 8-bit pixel art style
- **Mirror**: Shift the tiled pattern to create a mirrored effect
- **Settings**: YAML-formatted settings to customize the model

Outputs

- **Tiled images**: An array of generated tile images that can be used as seamless wallpaper

Capabilities

The pixray-tiler model transforms text descriptions into wallpaper tiles. With its ability to generate pixel art styles and mirrored patterns, it can produce a wide variety of designs, which makes it useful for artists, designers, or anyone looking to customize their digital backgrounds.

What can I use it for?

The pixray-tiler model is suited to creating custom wallpapers, website backgrounds, or even textures for 3D models. From a single text prompt, you can generate a set of tiles that repeat seamlessly, which makes it easy to personalize your digital spaces.

Things to try

Experiment with different text prompts to see the variety of patterns the pixray-tiler model can produce. Try combining it with other models like controlnet-scribble or material-diffusion-sdxl to create more varied results.
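
To make the idea of a seamless, repeating tile concrete, the sketch below repeats one small tile across a grid to form a larger wallpaper. It is not pixray-tiler's own code: the tile is a toy grid of labels standing in for pixels, and `repeat_tile` is a hypothetical helper written for illustration. The mirror option is left out because its exact behaviour is not specified here.

```python
def repeat_tile(tile, rows, cols):
    """Repeat a 2D tile (a list of pixel rows) rows x cols times."""
    wallpaper = []
    for _ in range(rows):
        for pixel_row in tile:
            # Repeating a row horizontally places copies of the tile side by side.
            wallpaper.append(pixel_row * cols)
    return wallpaper


# A toy 2x2 tile; letters stand in for pixel colours.
tile = [
    ["A", "B"],
    ["C", "D"],
]

wallpaper = repeat_tile(tile, rows=2, cols=3)
for row in wallpaper:
    print("".join(row))
```

A generated tile counts as seamless when its left and right edges, and its top and bottom edges, line up visually, so that the joins in a grid like this one are not noticeable.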

Updated 9/18/2024

pixray-pixel

19

pixray-pixel is an image generation system that combines various previous ideas, including Perception Engines, CLIP-guided GAN imagery, and techniques from Ryan Murdoch, Katherine Crowson, and Kevin Frans. It can generate detailed pixel art images from text prompts, with the ability to control the aspect ratio and rendering engine. This model is similar to others like controlnet-scribble, sd_pixelart_spritesheet_generator, and All-In-One-Pixel-Model, which also generate pixel art from text prompts.

Model inputs and outputs

pixray-pixel takes text prompts as input and generates corresponding pixel art images as output. The model allows users to control the aspect ratio (wide vs. square) and the rendering engine (pixel) used to generate the images.

Inputs

- **Prompts**: Text prompts that describe the desired image
- **Aspect**: Aspect ratio of the output image (wide vs. square)
- **Drawer**: Rendering engine to use (pixel)

Outputs

- **Output images**: Pixel art images generated from the input prompts

Capabilities

pixray-pixel can generate a wide variety of pixel art scenes and objects, from fantastical landscapes to detailed characters and objects. The images have a distinct, retro-inspired aesthetic that can be useful for creating pixel art assets for games, animations, and other digital media.

What can I use it for?

You can use pixray-pixel to create pixel art for a variety of projects, such as:

- Game assets (sprites, backgrounds, UI elements)
- Pixel art illustrations and animations
- Pixel art-inspired digital art and designs
- Retro-themed social media content and branding

The model's ability to generate diverse pixel art from text prompts makes it a versatile tool for creators looking to incorporate this aesthetic into their work.

Things to try

Experiment with different types of prompts to see the range of pixel art the model can generate. Try prompts that evoke specific genres, styles, or themes (e.g., "cyberpunk cityscape", "fantasy forest", "retro arcade game"). You can also try combining prompts with the different aspect ratio and rendering engine options to see how the output changes.

Updated 9/18/2024

homage1

9

homage1 is a text-to-image model created by Replicate user dribnet. It is similar to other "pixelart" models like 8bidoug, pixray-text2pixel, pixray-text2image, pixray-tiler, and pixray, all also developed by dribnet. These models use text prompts to generate pixel art or other stylized images.

Model inputs and outputs

homage1 takes a text prompt as input and generates an array of six color squares as output. The model is designed to capture a specific aesthetic inspired by pixel art.

Inputs

- **prompt**: The text prompt that describes the desired output.

Outputs

- An array of six color square images, each represented as a URI string.

Capabilities

homage1 can generate a variety of pixel-inspired art from text prompts. The outputs have a consistent visual style and color palette, making them well-suited for design work or creating pixel art assets.

What can I use it for?

You can use homage1 to quickly generate pixel-inspired artwork for a variety of applications, such as game development, graphic design, or digital art projects. The model's fast inference time and simple input/output make it easy to experiment and iterate on ideas.

Things to try

Try providing different types of descriptive prompts to homage1 and see how the model interprets them. Experiment with prompts that describe colors, moods, or specific pixel art styles to see the range of outputs the model can produce.

Updated 9/17/2024