Gwang-kim

Models by this creator

diffusionclip


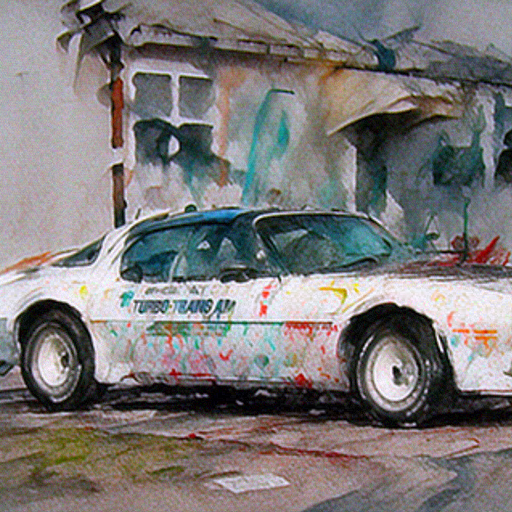

DiffusionCLIP is a method for text-driven image manipulation using diffusion models, proposed by Gwanghyun Kim, Taesung Kwon, and Jong Chul Ye in their CVPR 2022 paper. Unlike earlier GAN-based approaches, DiffusionCLIP uses the full inversion capability and high-quality image generation of recent diffusion models to perform zero-shot image manipulation, even between unseen domains. This makes manipulation of real images more robust and faithful than GAN inversion methods, which are limited by how accurately they can reconstruct a real image. DiffusionCLIP is similar in spirit to other text-guided image manipulation models such as StyleCLIP and StyleGAN-NADA, but its diffusion-based approach leads to key technical differences.

Model inputs and outputs

Inputs

- **Image**: The input image to be manipulated.
- **Edit type**: The attribute or style to apply to the input image (e.g. "ImageNet style transfer - Watercolor art").
- **Manipulation**: The type of manipulation to perform (e.g. "ImageNet style transfer").
- **Degree of change**: The intensity of the edit, from 0 (no change) to 1 (maximum change).
- **N test step**: The number of steps used in the image generation process, between 5 and 100.

Outputs

- **Output image**: The manipulated image with the requested attribute or style applied.

Capabilities

DiffusionCLIP enables high-quality, zero-shot manipulation of real-world images, including images from diverse datasets such as ImageNet. It can edit images accurately while preserving the original identity and content. It also supports multi-attribute manipulation by combining the noise predicted by multiple fine-tuned models. In addition, DiffusionCLIP can translate images between unseen domains and generate new images guided by text prompts.

What can I use it for?

DiffusionCLIP can be a powerful tool for a variety of image editing and generation tasks.
Its ability to manipulate real-world images across diverse domains makes it suitable for applications such as photo editing, digital art creation, and product visualization. Businesses could use DiffusionCLIP to quickly generate product mockups or visualizations from text descriptions, and creators could use text prompts to explore unexpected ways of transforming their images.

Things to try

One interesting aspect of DiffusionCLIP is its ability to translate images between unseen domains, such as producing a "watercolor art" version of an input image. Experiment with different text prompts to see how the model can transform images beyond simple attribute edits. You could also explore its multi-attribute manipulation, combining several text-guided changes into a single hybrid output.
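In the paper, multi-attribute manipulation works by combining the noise predicted by several fine-tuned models, with weights that sum to 1, inside a deterministic DDIM sampling loop. The image is first inverted with the pretrained model and then regenerated. What follows is a minimal sketch of that idea in plain Python, not the authors' implementation.

Everything concrete here is an assumption:
- images are flat lists of floats;
- the noise predictors are toy functions standing in for neural networks;
- the alpha-bar schedule is made up;
- `combine_noise`, `ddim_move`, and `manipulate` are hypothetical names.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
# A noise predictor maps (x_t, timestep index) to a predicted noise vector.
# In DiffusionCLIP these would be diffusion networks; here they are toy stand-ins.
NoisePredictor = Callable[[Sequence[float], int], Vector]


def combine_noise(predictors: Sequence[NoisePredictor], weights: Sequence[float],
                  x: Sequence[float], t: int) -> Vector:
    """Weighted sum of the noise predicted by several fine-tuned models."""
    if not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must sum to 1")
    combined = [0.0] * len(x)
    for predictor, weight in zip(predictors, weights):
        for i, e in enumerate(predictor(x, t)):
            combined[i] += weight * e
    return combined


def ddim_move(x: Sequence[float], eps: Sequence[float],
              abar_from: float, abar_to: float) -> Vector:
    """Deterministic DDIM update (eta = 0) between two alpha-bar levels.

    A smaller abar_to adds noise (inversion); a larger one removes it (generation).
    """
    out = []
    for xi, ei in zip(x, eps):
        x0_pred = (xi - math.sqrt(1.0 - abar_from) * ei) / math.sqrt(abar_from)
        out.append(math.sqrt(abar_to) * x0_pred + math.sqrt(1.0 - abar_to) * ei)
    return out


def manipulate(x0: Sequence[float], pretrained: NoisePredictor,
               finetuned: Sequence[NoisePredictor], weights: Sequence[float],
               abars: Sequence[float]) -> Vector:
    """Invert x0 with the pretrained model, then regenerate with combined noise.

    abars holds decreasing alpha-bar values: abars[0] = 1.0 is the clean image,
    and abars[-1] stands in for the return step t0.
    """
    x = list(x0)
    for k in range(len(abars) - 1):  # forward DDIM: image -> latent at t0
        x = ddim_move(x, pretrained(x, k), abars[k], abars[k + 1])
    for k in range(len(abars) - 1, 0, -1):  # reverse DDIM: latent -> edited image
        eps = combine_noise(finetuned, weights, x, k)
        x = ddim_move(x, eps, abars[k], abars[k - 1])
    return x


# Toy usage with hypothetical predictors that ignore their inputs.
def toy_pretrained(x, t):
    return [0.1] * len(x)


def toy_style_a(x, t):
    return [0.3] * len(x)


def toy_style_b(x, t):
    return [-0.1] * len(x)


edited = manipulate([0.5, -0.5], toy_pretrained, [toy_style_a, toy_style_b],
                    [0.5, 0.5], [1.0, 0.9, 0.5, 0.2])
```

Because the DDIM update is deterministic, inverting and regenerating with the same noise predictor nearly reconstructs the input. With a constant predictor, as in this toy case, the reconstruction is exact up to floating-point error. This property lets edits preserve content. Inverting to a noisier return step (a smaller final alpha-bar) gives the fine-tuned models more room to change the image. Whether the "Degree of change" input maps onto this step is an assumption.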
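The inputs listed above can be gathered and range-checked before a request is sent. This is a minimal sketch that assumes the field names are snake_case forms of the labels on this page: `image`, `edit_type`, `manipulation`, `degree_of_change`, and `n_test_step`. Those names, the helper `build_diffusionclip_input`, and the example values are hypothetical.

```python
from typing import Any, Dict

# Hypothetical field names: snake_case versions of the input labels on this page.
# The model's real input schema may use different names or extra fields.
MIN_STEPS, MAX_STEPS = 5, 100


def build_diffusionclip_input(image: str, edit_type: str, manipulation: str,
                              degree_of_change: float, n_test_step: int) -> Dict[str, Any]:
    """Check the documented ranges and return an input dictionary."""
    if not 0.0 <= degree_of_change <= 1.0:
        raise ValueError("degree_of_change must be between 0 (no change) and 1 (maximum change)")
    if not MIN_STEPS <= n_test_step <= MAX_STEPS:
        raise ValueError(f"n_test_step must be between {MIN_STEPS} and {MAX_STEPS}")
    return {
        "image": image,
        "edit_type": edit_type,
        "manipulation": manipulation,
        "degree_of_change": degree_of_change,
        "n_test_step": n_test_step,
    }


payload = build_diffusionclip_input(
    image="photo.jpg",  # hypothetical file path or URL
    edit_type="ImageNet style transfer - Watercolor art",
    manipulation="ImageNet style transfer",
    degree_of_change=0.5,
    n_test_step=12,
)
```

With the Replicate Python client, a dictionary like this would be passed as the `input` argument of `replicate.run`, along with the model reference. Check the model page for the exact reference and input names before relying on it.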

Updated 9/16/2024