Jbilcke

Models by this creator

sdxl-panorama

The sdxl-panorama model is a version of Stable Diffusion XL (SDXL) fine-tuned for panoramic image generation. It is one of several SDXL-based models, alongside sdxl-recur, sdxl-controlnet-lora, sdxl-outpainting-lora, sdxl-black-light, and sdxl-deep-down, each of which focuses on a specific aspect of image generation.

Model inputs and outputs

The sdxl-panorama model takes a prompt, an optional image and mask, a seed, and several parameters that control the output. It generates panoramic images from these inputs.

Inputs

- **Prompt**: The text prompt that describes the desired image.
- **Image**: An input image for img2img or inpaint mode.
- **Mask**: An input mask for inpaint mode; black areas are preserved and white areas are inpainted.
- **Seed**: A random seed that controls the output.
- **Width and Height**: The desired dimensions of the output image.
- **Refine**: The refine style to use.
- **Scheduler**: The scheduler to use for the diffusion process.
- **LoRA Scale**: The LoRA additive scale, applicable only to trained models.
- **Num Outputs**: The number of images to output.
- **Refine Steps**: The number of refinement steps; defaults to num_inference_steps.
- **Guidance Scale**: The scale for classifier-free guidance.
- **Apply Watermark**: Whether to apply a watermark to the output image.
- **High Noise Frac**: The fraction of noise to use for the expert_ensemble_refiner.
- **Negative Prompt**: An optional prompt describing what the image generation should steer away from.
- **Prompt Strength**: The prompt strength when using img2img or inpaint mode.
- **Num Inference Steps**: The number of denoising steps to perform.

Outputs

- **Output Images**: The generated panoramic images.

Capabilities

The sdxl-panorama model can generate high-quality panoramic images from the provided inputs. It can produce detailed, visually striking landscapes, cityscapes, and other panoramic scenes.
The model can also be used for image inpainting and manipulation, allowing users to refine and enhance existing images.

What can I use it for?

The sdxl-panorama model can be useful for applications such as panoramic images for virtual tours, film and video production, architectural visualization, and landscape photography. Its ability to generate and manipulate panoramic images can be particularly valuable for businesses and creators who want to showcase products, services, or artistic visions in an immersive way.

Things to try

One interesting aspect of the sdxl-panorama model is its ability to generate seamless, coherent panoramic images from a variety of prompts and input images. Try experimenting with different types of scenes, architectural styles, or natural landscapes to see how the model handles the challenges of panoramic generation. You can also explore its inpainting capabilities by providing partial images or masked areas and observing how it fills in the missing details.
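The input list above maps naturally onto a single request payload. The sketch below collects those inputs into a dict and drops anything left unset so the hosted model can apply its own defaults. The snake_case field names (`negative_prompt`, `prompt_strength`, `high_noise_frac`, and so on) are an assumption based on the usual SDXL input schema and on the `num_inference_steps` name the page itself uses; `build_sdxl_input` is a hypothetical helper, and the range checks encode only what the descriptions imply (fractions between 0 and 1, a mask only in inpaint mode).

```python
from typing import Any, Dict, Optional


def build_sdxl_input(
    prompt: str,
    *,
    negative_prompt: Optional[str] = None,
    image: Any = None,          # URL or file handle (assumed accepted types)
    mask: Any = None,           # black = keep, white = inpaint
    width: Optional[int] = None,
    height: Optional[int] = None,
    seed: Optional[int] = None,
    num_outputs: Optional[int] = None,
    num_inference_steps: Optional[int] = None,
    guidance_scale: Optional[float] = None,
    prompt_strength: Optional[float] = None,
    refine: Optional[str] = None,
    refine_steps: Optional[int] = None,
    high_noise_frac: Optional[float] = None,
    scheduler: Optional[str] = None,
    lora_scale: Optional[float] = None,
    apply_watermark: Optional[bool] = None,
) -> Dict[str, Any]:
    """Collect the documented inputs into one dict (hypothetical field names),
    omitting unset values so the model's own defaults apply."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    # A mask is only meaningful in inpaint mode, which needs an input image.
    if mask is not None and image is None:
        raise ValueError("mask requires an input image")
    # Both of these are fractions of the denoising schedule.
    for name, value in (("prompt_strength", prompt_strength),
                        ("high_noise_frac", high_noise_frac)):
        if value is not None and not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    if num_outputs is not None and num_outputs < 1:
        raise ValueError("num_outputs must be at least 1")

    fields = {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "image": image,
        "mask": mask,
        "width": width,
        "height": height,
        "seed": seed,
        "num_outputs": num_outputs,
        "num_inference_steps": num_inference_steps,
        "guidance_scale": guidance_scale,
        "prompt_strength": prompt_strength,
        "refine": refine,
        "refine_steps": refine_steps,
        "high_noise_frac": high_noise_frac,
        "scheduler": scheduler,
        "lora_scale": lora_scale,
        "apply_watermark": apply_watermark,
    }
    # Filter on "is not None" so explicit False/0 values (e.g. apply_watermark=False) survive.
    return {key: value for key, value in fields.items() if value is not None}
```

Assuming the model is hosted on Replicate, as its input schema suggests, the payload could be sent with the Replicate Python client (with `REPLICATE_API_TOKEN` set) as `replicate.run("jbilcke/sdxl-panorama:<version>", input=payload)`, where `<version>` is a placeholder for the version hash shown on the model page. Fixing `seed` while varying one other field is a simple way to see what that field changes.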

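High Noise Frac and Prompt Strength both decide how much of the denoising schedule actually runs. Assuming the model follows the Hugging Face diffusers SDXL conventions (the page does not confirm this): in img2img or inpaint mode the input image is partially noised and only about `num_inference_steps × prompt_strength` steps run; with the expert ensemble refiner, the base model handles roughly the first, high-noise `high_noise_frac` share of the steps and the refiner finishes the rest. The function names are hypothetical, and the split is approximate because diffusers computes the cutoff on the timestep grid.

```python
from typing import Tuple


def img2img_denoising_steps(num_inference_steps: int, prompt_strength: float) -> int:
    """Approximate steps run in img2img/inpaint mode (diffusers-style convention)."""
    if not 0.0 <= prompt_strength <= 1.0:
        raise ValueError("prompt_strength must be between 0 and 1")
    # Higher strength = more noise added to the input image = more steps to remove it.
    return min(int(num_inference_steps * prompt_strength), num_inference_steps)


def expert_ensemble_split(num_inference_steps: int, high_noise_frac: float) -> Tuple[int, int]:
    """Approximate (base_steps, refiner_steps) for the expert ensemble refiner."""
    if not 0.0 <= high_noise_frac <= 1.0:
        raise ValueError("high_noise_frac must be between 0 and 1")
    # The base model covers the high-noise part of the schedule...
    base_steps = round(num_inference_steps * high_noise_frac)
    # ...and the refiner completes the remaining low-noise steps.
    return base_steps, num_inference_steps - base_steps
```

For example, 40 steps with `high_noise_frac` 0.8 gives roughly 32 base steps and 8 refiner steps, and 50 steps with `prompt_strength` 0.8 runs about 40 denoising steps on the input image.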
Updated 10/4/2024

sdxl-cinematic-2

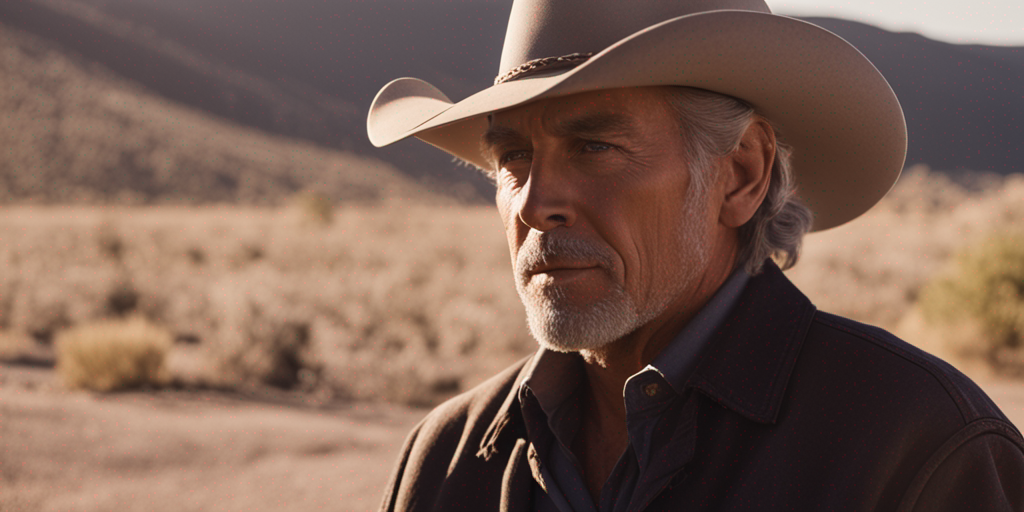

sdxl-cinematic-2 is a variation of the Stable Diffusion XL (SDXL) model developed by the maintainer jbilcke. It is designed to generate cinematic, visually striking images, building on the original SDXL model with customizations aimed at more immersive and atmospheric outputs. Similar models such as sdxl-recur, sdxl-betterup, and sdxl-shining explore related image generation capabilities.

Model inputs and outputs

sdxl-cinematic-2 takes a prompt, an input image, and optional parameters such as seed, width, height, and scheduler. It can generate one or more output images from these inputs.

Inputs

- **Prompt**: The text prompt that describes the desired image.
- **Negative prompt**: Additional text that steers the model away from unwanted elements.
- **Image**: An input image for image-to-image or inpainting tasks.
- **Mask**: A mask for the input image, used in inpainting mode.
- **Width and height**: The desired dimensions of the output image.
- **Seed**: A random seed that controls the generation process.
- **Refine**: The type of refinement to apply to the generated image.
- **Scheduler**: The algorithm used to schedule the denoising steps.
- **Lora scale**: The additive scale for LoRA (Low-Rank Adaptation) models.
- **Num outputs**: The number of images to generate.
- **Refine steps**: The number of refinement steps to apply.
- **Guidance scale**: The scale for classifier-free guidance.
- **Apply watermark**: Whether to apply a watermark to the generated images.
- **High noise frac**: The fraction of high noise to use for the expert ensemble refiner.

Outputs

- **Output images**: One or more generated images, returned as URIs.

Capabilities

sdxl-cinematic-2 can generate highly detailed, visually immersive images. Its cinematic style suits atmospheric scenes, dramatic landscapes, and fantastical environments.
Building on Stable Diffusion, the model can produce a wide range of image types, from photorealistic to surreal and imaginative.

What can I use it for?

sdxl-cinematic-2 can be used for creative and artistic applications such as concept art, illustration, visual effects, and game development. Its cinematic style makes it a useful tool for filmmakers, photographers, and visual storytellers, and its flexibility suits a range of commercial and personal projects, from advertising and marketing to personal creative expression.

Things to try

Experiment with different prompts and input parameters to see how they affect the generated images. Try combining sdxl-cinematic-2 with other models, such as BLIP-2, to explore more advanced image-to-text and image-to-image workflows. You can also use the model for inpainting or image-to-image tasks through its mask and image inputs.
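The Guidance scale and Negative prompt inputs both act through classifier-free guidance. In the standard formulation, as implemented in diffusers-based SDXL pipelines (assuming this model follows it), each denoising step combines a conditional and an unconditional noise prediction:

$$
\hat{\epsilon}_\theta(x_t, c) = \epsilon_\theta(x_t, c_\varnothing) + s \left( \epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, c_\varnothing) \right)
$$

Here $s$ is the guidance scale, $x_t$ is the noisy latent at step $t$, $c$ is the prompt embedding, and $c_\varnothing$ is the unconditional embedding, which the negative prompt replaces when one is given. With $s = 1$ the result is the plain conditional prediction; larger values push the image harder toward the prompt and away from the negative prompt, usually at some cost in diversity.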

Updated 10/4/2024

sdxl-cyberpunk-2077

The sdxl-cyberpunk-2077 model is a text-to-image model created by jbilcke that generates detailed, visually striking images inspired by the Cyberpunk 2077 universe. It is similar to other popular text-to-image models such as SDXL-Lightning, Stable Diffusion, and SDXL, but focuses on imagery with a distinct Cyberpunk aesthetic.

Model inputs and outputs

The sdxl-cyberpunk-2077 model takes a text prompt as its main input, along with optional parameters such as image dimensions and seed. It generates one or more images that attempt to capture the essence of the prompt and returns them as a list of image URLs that can be downloaded.

Inputs

- **Prompt**: The text description of the image you want to generate.
- **Image**: An optional input image that the model can use as a starting point for generation.
- **Mask**: An optional input mask that specifies which areas of the image should be preserved or inpainted.
- **Seed**: A random seed value that controls the randomness of the generated image.
- **Width/Height**: The desired dimensions of the output image.
- **Number of outputs**: The number of images to generate.

Outputs

- **Image URLs**: A list of URLs pointing to the generated images.

Capabilities

The sdxl-cyberpunk-2077 model excels at generating high-quality, photorealistic images with a distinct Cyberpunk theme. It can create detailed cityscapes, futuristic technology, and moody lighting, all infused with the gritty, neon-drenched aesthetic of the genre. It is particularly adept at combining elements such as characters, vehicles, and environments into cohesive, visually striking scenes.

What can I use it for?

The sdxl-cyberpunk-2077 model could be useful for concept art for Cyberpunk-themed video games, movies, or books, and for creating unique backgrounds or visuals for websites, presentations, or social media posts.
It could also be used for personal creative projects, allowing users to explore the Cyberpunk aesthetic and bring their ideas to life.

Things to try

One interesting thing to try with the sdxl-cyberpunk-2077 model is to experiment with different types of prompts, from specific scene descriptions to more abstract or evocative ones, and see how the model translates them into its Cyberpunk-inspired imagery. You can also use the model's image and mask inputs to refine or build on existing visuals, blending the Cyberpunk aesthetic with other elements.

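Since the outputs are described as a list of image URLs, saving them locally is a short loop. This standard-library sketch assumes the outputs arrive as plain URL strings, as the description states; newer Replicate client versions may instead return file-like output objects, which would need converting first. `download_outputs` is a hypothetical helper, and the index prefix is a design choice so that identically named outputs from different runs do not overwrite each other.

```python
import os
import urllib.parse
import urllib.request
from typing import Iterable, List


def download_outputs(urls: Iterable[str], dest_dir: str) -> List[str]:
    """Save each output URL into dest_dir and return the local paths in order."""
    os.makedirs(dest_dir, exist_ok=True)
    paths = []
    for i, url in enumerate(urls):
        # Reuse the file name from the URL path, falling back to an index-based name.
        name = os.path.basename(urllib.parse.urlparse(url).path) or f"output_{i}.png"
        path = os.path.join(dest_dir, f"{i:02d}_{name}")
        urllib.request.urlretrieve(url, path)
        paths.append(path)
    return paths
```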
Updated 10/4/2024