Naklecha

Models by this creator

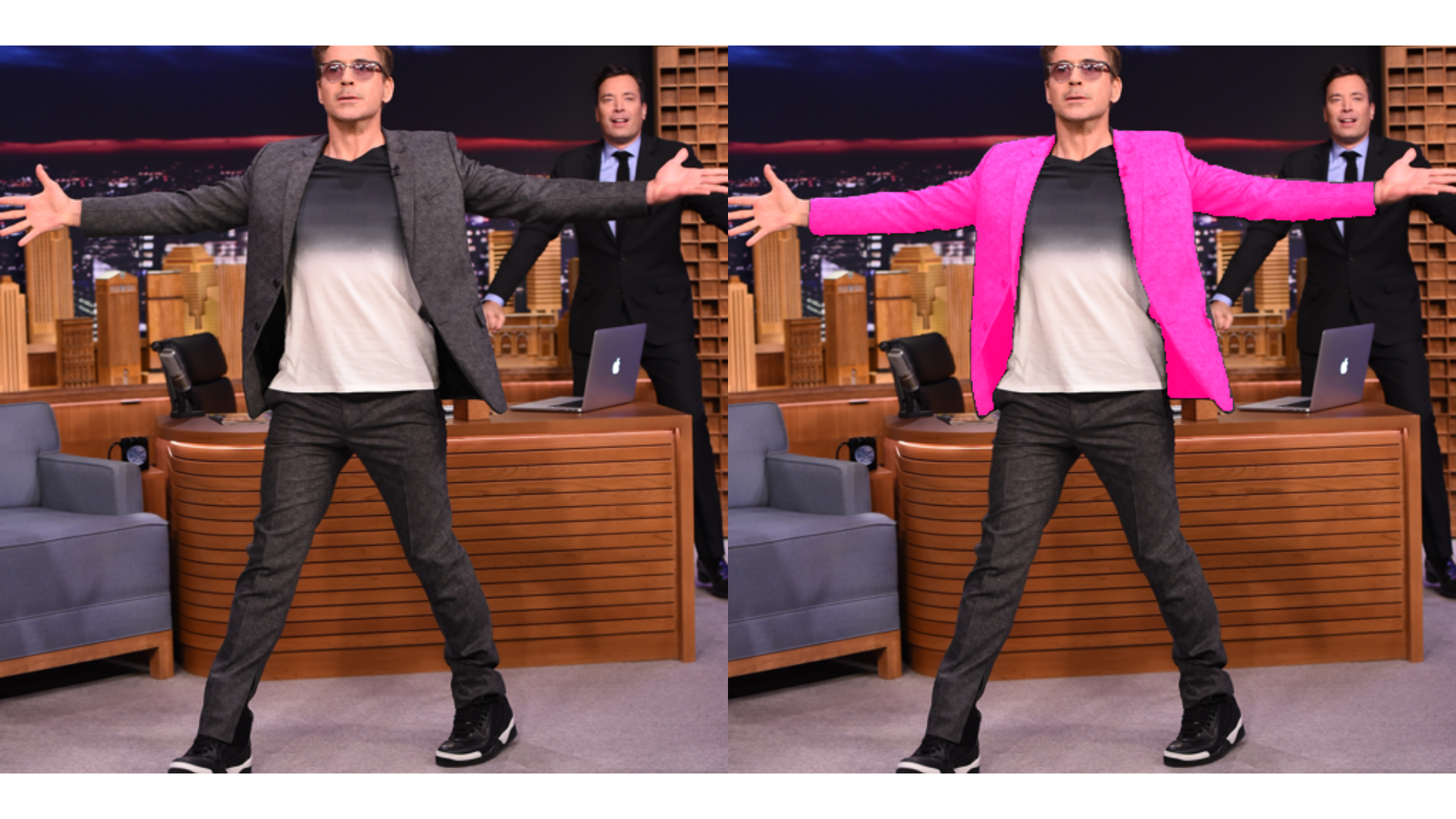

fashion-ai

60

The fashion-ai model is a powerful AI tool that can edit clothing found within an image. Developed by naklecha, this model utilizes a state-of-the-art clothing segmentation algorithm to enable seamless editing of clothing elements in a given image. While similar to models like stable-diffusion and real-esrgan in its image editing capabilities, the fashion-ai model is specifically tailored for fashion-related tasks, making it a valuable asset for fashion designers, e-commerce platforms, and visual content creators. Model inputs and outputs The fashion-ai model takes two key inputs: an image and a prompt. The image should depict clothing that the model will edit, while the prompt specifies the desired changes to the clothing. The model supports editing two types of clothing: topwear and bottomwear. When provided with the necessary inputs, the fashion-ai model outputs an array of edited image URIs, showcasing the results of the clothing edits. Inputs Image**: The input image to be edited, which will be center-cropped and resized to 512x512 resolution. Prompt**: The text prompt that describes the desired changes to the clothing in the image. Clothing**: The type of clothing to be edited, which can be either "topwear" or "bottomwear". Outputs Array of image URIs**: The model outputs an array of URIs representing the edited images, where the clothing has been modified according to the provided prompt. Capabilities The fashion-ai model excels at seamlessly editing clothing elements within an image. By leveraging state-of-the-art clothing segmentation algorithms, the model can precisely identify and manipulate specific clothing items, enabling users to experiment with various design ideas or product alterations. This capability makes the fashion-ai model particularly valuable for fashion designers, e-commerce platforms, and content creators who need to quickly and effectively modify clothing in their visual assets. What can I use it for? The fashion-ai model can be utilized in a variety of fashion-related applications, such as: Virtual clothing try-on**: By integrating the fashion-ai model into an e-commerce platform, customers can visualize how different clothing items would look on them, enhancing the online shopping experience. Fashion design prototyping**: Fashion designers can use the fashion-ai model to experiment with different clothing designs, quickly testing ideas and iterating on their concepts. Content creation for social media**: Visual content creators can leverage the fashion-ai model to easily edit and enhance clothing elements in their fashion-focused social media posts, improving the overall aesthetic and appeal. Things to try One interesting aspect of the fashion-ai model is its ability to handle different types of clothing. Users can experiment with editing both topwear and bottomwear, opening up a world of creative possibilities. For example, you could try mixing and matching different clothing items, swapping out colors and patterns, or even completely transforming the style of a garment. By pushing the boundaries of the model's capabilities, you may uncover innovative ways to streamline your fashion-related workflows or generate unique visual content.

Updated 5/19/2024

search-autocomplete

19

The search-autocomplete model is an autocomplete API that runs on the CPU, as described by the maintainer naklecha. This model is similar to other autocomplete and text generation models like styletts2 and the Meta LLaMA models and [meta-llama-3-8b-instruct-meta], which are also fine-tuned for chat completions. The search-autocomplete model is designed to provide quick and efficient autocomplete suggestions based on input text. Model inputs and outputs The search-autocomplete model takes a single input, a prompt, which is a string of text. It then outputs an array of strings, which are the autocomplete suggestions for that prompt. Inputs prompt**: The input text for which the model should generate autocomplete suggestions. Outputs Output**: An array of string autocomplete suggestions based on the input prompt. Capabilities The search-autocomplete model is capable of generating relevant autocomplete suggestions quickly and efficiently on the CPU. This can be useful for applications that require real-time autocomplete functionality, such as search bars, chat interfaces, or code editors. What can I use it for? The search-autocomplete model can be leveraged in a variety of applications that require autocomplete functionality. For example, it could be used to power the autocomplete feature in a search engine, providing users with relevant suggestions as they type. It could also be integrated into a chat application, suggesting possible responses or commands as the user types. Additionally, the model could be used in a code editor to provide autocomplete suggestions for variable names, function calls, or other programming constructs. Things to try One interesting thing to try with the search-autocomplete model is to experiment with different prompts and observe the resulting autocomplete suggestions. You could try prompts that are related to specific topics or domains, or prompts that are intentionally ambiguous or open-ended, to see how the model responds. Additionally, you could try incorporating the search-autocomplete model into a larger application or system to see how it performs in a real-world setting.

Updated 5/19/2024

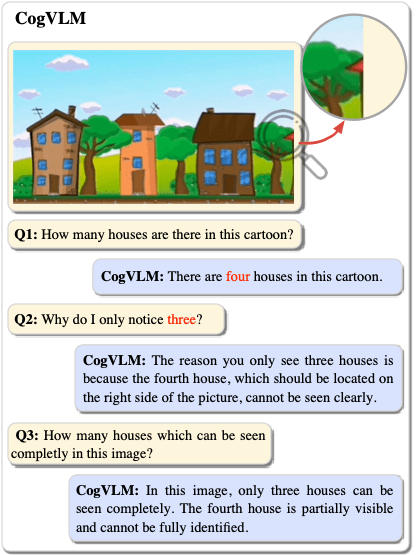

cogvlm

9

cogvlm is a powerful open-source visual language model (VLM) developed by the team at Tsinghua University. Compared to similar visual-language models like cogvlm and llava-13b, cogvlm stands out with its state-of-the-art performance on a wide range of cross-modal benchmarks, including NoCaps, Flickr30k captioning, and various visual question answering tasks. The model has 10 billion visual parameters and 7 billion language parameters, allowing it to understand and generate detailed descriptions of images. Unlike some previous VLMs that struggled with hallucination, cogvlm is known for its ability to provide accurate and factual information about the visual content. Model Inputs and Outputs Inputs Image**: An image in a standard image format (e.g. JPEG, PNG) provided as a URL. Prompt**: A text prompt describing the task or question to be answered about the image. Outputs Output**: An array of strings, where each string represents the model's response to the provided prompt and image. Capabilities cogvlm excels at a variety of visual understanding and reasoning tasks. It can provide detailed descriptions of images, answer complex visual questions, and even perform visual grounding - identifying and localizing specific objects or elements in an image based on a textual description. For example, when shown an image of a park scene and asked "Can you describe what you see in the image?", cogvlm might respond with a detailed paragraph capturing the key elements, such as the lush green grass, the winding gravel path, the trees in the distance, and the clear blue sky overhead. Similarly, if presented with an image of a kitchen and the prompt "Where is the microwave located in the image?", cogvlm would be able to identify the microwave's location and provide the precise bounding box coordinates. What Can I Use It For? The broad capabilities of cogvlm make it a versatile tool for a wide range of applications. Developers and researchers could leverage the model for tasks such as: Automated image captioning and visual question answering for media or educational content Visual interface agents that can understand and interact with graphical user interfaces Multimodal search and retrieval systems that can match images to relevant textual information Visual data analysis and reporting, where the model can extract insights from visual data By tapping into cogvlm's powerful visual understanding, these applications can offer more natural and intuitive experiences for users. Things to Try One interesting way to explore cogvlm's capabilities is to try various types of visual prompts and see how the model responds. For example, you could provide complex scenes with multiple objects and ask the model to identify and localize specific elements. Or you could give it abstract or artistic images and see how it interprets and describes the visual content. Another interesting avenue to explore is the model's ability to handle visual grounding tasks. By providing textual descriptions of objects or elements in an image, you can test how accurately cogvlm can pinpoint their locations and extents. Ultimately, the breadth of cogvlm's visual understanding makes it a valuable tool for a wide range of applications. As you experiment with the model, be sure to share your findings and insights with the broader AI community.

Updated 5/19/2024

clothing-segmentation

2

The clothing-segmentation model is a state-of-the-art clothing segmentation algorithm developed by naklecha. This model can detect and segment clothing within an image, making it a powerful tool for a variety of applications. It builds upon similar models like fashion-ai, which can edit clothing within an image, and segformer_b2_clothes, a model fine-tuned for clothes segmentation. Model inputs and outputs The clothing-segmentation model takes two inputs: an image and a clothing type (either "topwear" or "bottomwear"). The model then outputs an array of strings, which are the URIs of the segmented clothing regions within the input image. Inputs image**: The input image to be processed. The image will be center cropped and resized to 512x512 pixels. clothing**: The type of clothing to segment, either "topwear" or "bottomwear". Outputs Output**: An array of strings, each representing the URI of a segmented clothing region within the input image. Capabilities The clothing-segmentation model can accurately detect and segment clothing within an image, even in complex scenes with multiple people or objects. This makes it a powerful tool for applications like virtual try-on, fashion e-commerce, and image editing. What can I use it for? The clothing-segmentation model can be used in a variety of applications, such as: Virtual Try-on**: By segmenting clothing in an image, the model can enable virtual try-on experiences, where users can see how a garment would look on them. Fashion E-commerce**: Clothing retailers can use the model to automatically extract clothing regions from product images, improving search and recommendation systems. Image Editing**: The segmented clothing regions can be used as input to other models, like the fashion-ai model, to edit or manipulate the clothing in an image. Things to try One interesting thing to try with the clothing-segmentation model is to use it in combination with other AI models, like stable-diffusion or blip, to create unique and creative fashion-related content. By leveraging the clothing segmentation capabilities of this model, you can unlock new possibilities for image editing, virtual try-on, and more.

Updated 5/19/2024