Philz1337x

Models by this creator

clarity-upscaler

5.5K

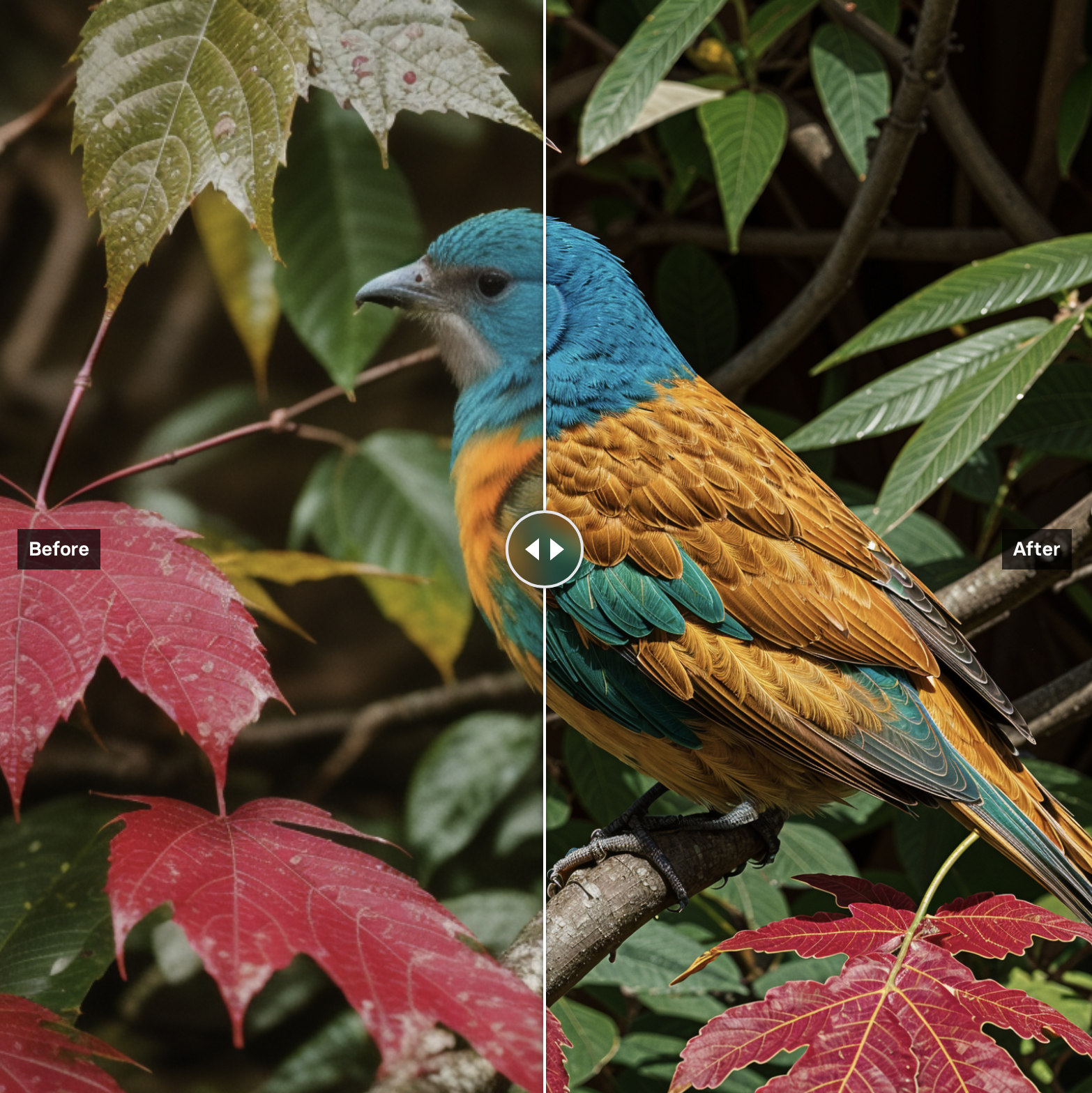

The clarity-upscaler is a high-resolution image upscaler and enhancer developed by AI model creator philz1337x. It is a free and open-source alternative to the commercial Magnific tool that lets users upscale images and improve their quality. The model accepts a variety of input images and offers a range of options for fine-tuning the upscaling process.

Model inputs and outputs

The clarity-upscaler takes an input image and a set of parameters that control the upscaling process. Users can adjust the seed, prompt, dynamic range, creativity, resemblance, scale factor, tiling, and more. The model outputs one or more high-resolution, enhanced versions of the input image.

Inputs

- **Image**: The input image to be upscaled and enhanced
- **Prompt**: A text description that guides the upscaling process
- **Seed**: A random seed value that controls the randomness of the output
- **Dynamic**: The HDR range to use, from 3 to 9
- **Creativity**: The level of creativity to apply, from 0.3 to 0.9
- **Resemblance**: How closely the output should resemble the input image, from 0.3 to 1.6
- **Scale Factor**: The factor by which to scale the image up, typically 2x
- **Tiling Width/Height**: The size of the tiles used for fractality; lower values increase fractality
- **LoRA Links**: Links to LoRA models to use during upscaling
- **Downscaling**: Whether to downscale the input image before upscaling

Outputs

- One or more high-resolution, enhanced images based on the input and parameters

Capabilities

The clarity-upscaler can greatly improve the quality and detail of input images. It handles a wide range of inputs, from photographs to digital art, and its results can be customized through the parameters above. The model has been optimized for speed and can produce high-quality outputs quickly.

What can I use it for?

The clarity-upscaler is a versatile tool that can be used for a variety of creative and practical applications.
Some potential use cases include:

- Enhancing low-resolution images for print or web use
- Upscaling and improving the quality of digital art and illustrations
- Generating high-quality backgrounds, textures, or elements for visual design projects
- Improving the visual quality of images for presentations, social media, or other digital content

Things to try

One interesting feature of the clarity-upscaler is that it can adjust the "fractality" of the output image through the tiling width and height parameters. Lower values introduce more fractality, creating a distinctive and visually striking effect. Users can experiment with different combinations of these settings to achieve the aesthetic they want.

Another useful feature is the ability to incorporate LoRA models into the upscaling process. LoRA models can add style, detail, and other characteristics to the output, letting users customize the results further. Trying different LoRA models in combination with the clarity-upscaler settings opens up a wide range of creative possibilities.
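
The input list above maps naturally onto a request payload. The sketch below builds one, drops unset values so the model's own defaults apply, and treats the ranges listed above for dynamic, creativity, and resemblance as hard limits. The helper `build_clarity_input` is hypothetical, and the snake_case key names (`dynamic`, `creativity`, `resemblance`, `scale_factor`) are assumptions about the model's input schema, not confirmed by this page.

```python
from typing import Any, Optional

# Hypothetical helper. Ranges come from the input list above; the
# snake_case key names are an ASSUMPTION about the model's input schema.
DOCUMENTED_RANGES = {
    "dynamic": (3, 9),
    "creativity": (0.3, 0.9),
    "resemblance": (0.3, 1.6),
}


def build_clarity_input(image: str, **params: Optional[Any]) -> dict[str, Any]:
    """Return an input payload, dropping unset values and checking ranges."""
    payload: dict[str, Any] = {"image": image}
    for name, value in params.items():
        if value is None:
            continue  # leave unset parameters to the model's own defaults
        if name in DOCUMENTED_RANGES:
            low, high = DOCUMENTED_RANGES[name]
            if not low <= value <= high:
                raise ValueError(f"{name}={value} is outside [{low}, {high}]")
        payload[name] = value
    return payload


payload = build_clarity_input(
    "https://example.com/photo.png",  # placeholder URL, not a real asset
    prompt="a sharp, detailed photo",
    dynamic=6,
    creativity=0.35,
    resemblance=0.6,
    scale_factor=2,
    seed=None,  # omitted from the payload
)
print(sorted(payload))
```

With Replicate's Python client installed, a payload like this would typically be passed as `replicate.run("philz1337x/clarity-upscaler:<version>", input=payload)`, with the version identifier left to be filled in from the model page.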

Updated 6/29/2024

controlnet-deliberate

787

controlnet-deliberate is a model that modifies images using Canny edge detection and the Deliberate model. It is maintained by philz1337x. Similar models include controlnet-hough, which uses M-LSD line detection; controlnet-v1-1-multi, which combines CLIP Interrogator with ControlNet SDXL for Canny and ControlNet v1.1 for other features; and controlnet-scribble, which generates detailed images from scribbled drawings.

Model inputs and outputs

controlnet-deliberate takes an input image, a prompt, and various parameters that control the image generation process. It outputs one or more images modified according to those inputs.

Inputs

- **Image**: The input image to be modified
- **Prompt**: The text prompt that guides image generation
- **Seed**: The seed for the random number generator
- **Scale**: The scale for classifier-free guidance
- **Weight**: The weight of the ControlNet
- **Additional Prompt**: Additional text appended to the prompt
- **Negative Prompt**: A prompt describing undesirable image features to avoid
- **Denoising Steps**: The number of denoising steps to perform
- **Number of Samples**: The number of output images to generate
- **Canny Edge Detection Thresholds**: The low and high thresholds for Canny edge detection
- **Detection Resolution**: The resolution at which edge detection is applied

Outputs

- One or more modified images based on the provided inputs

Capabilities

controlnet-deliberate generates images by combining an input image with a text prompt while preserving the structure of the original image: Canny edge detection extracts the image's edges, and the Deliberate model generates a new image conditioned on them through ControlNet. This is useful for tasks like image editing, photo manipulation, or creating visualizations.

What can I use it for?
You can use controlnet-deliberate for a variety of image-related tasks, such as:

- Modifying existing images to match a specific prompt
- Creating unique and interesting visual art
- Generating images for presentations, publications, or other media
- Experimenting with different image generation techniques

Things to try

Some ideas for things to try with controlnet-deliberate include:

- Exploring how different Canny edge detection thresholds affect the output images
- Combining the model with other image processing techniques, such as image segmentation or depth estimation
- Using the model to generate images in a specific theme or style
- Experimenting with different prompts to see how they affect the generated images
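
Canny's low and high thresholds act in its final hysteresis step: pixels whose gradient magnitude reaches the high threshold are kept as edges, pixels below the low threshold are discarded, and pixels in between survive only if they connect to a strong edge. The pure-Python sketch below illustrates just that step on a hand-made gradient-magnitude grid, which helps predict how changing the thresholds changes the edge map. It is not this model's preprocessing code (ControlNet-style pipelines typically call a library routine such as OpenCV's `cv2.Canny`), and the gradient and non-maximum-suppression stages are omitted.

```python
from collections import deque

Grid = list[list[float]]


def hysteresis(magnitude: Grid, low: float, high: float) -> list[list[bool]]:
    """Keep strong pixels (>= high) plus weak pixels (>= low) that are
    8-connected, directly or through other weak pixels, to a strong pixel."""
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()
    # Seed the search with every strong pixel.
    for r in range(rows):
        for c in range(cols):
            if magnitude[r][c] >= high:
                edges[r][c] = True
                queue.append((r, c))
    # Grow edges into neighbouring weak pixels (breadth-first search).
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc] and magnitude[nr][nc] >= low):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges


# Illustrative gradient magnitudes: the weak pixel at the far right (90)
# is not connected to any strong pixel, so it is dropped.
magnitude = [
    [0, 50, 120, 60, 0, 0, 0],
    [0, 0, 0, 0, 70, 0, 90],
]
edges = hysteresis(magnitude, low=40, high=100)
for row in edges:
    print("".join("#" if e else "." for e in row))
```

Raising the high threshold removes seed pixels, and raising the low threshold stops edges from growing through fainter gradients; both tend to give the ControlNet a sparser edge map to follow.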

Updated 6/29/2024

style-transfer

50

The style-transfer model applies the style of one image to another. This is useful for creating artistic renditions of your photos or generating unique artwork. Compared with similar models like clarity-upscaler, style-transfer focuses on transferring artistic style rather than enhancing image quality, so its results can be more expressive and visually striking than those of a pure upscaler.

Model inputs and outputs

The style-transfer model takes two key inputs: the image you want to apply the style to, and the reference image that provides the artistic style. You can also adjust parameters such as the strength of the style transfer and the number of inference steps to control the output.

Inputs

- **image**: The image you want to apply the style to
- **image_style**: The reference image that provides the artistic style
- **prompt**: A text prompt to guide the style transfer (optional)
- **negative_prompt**: A text prompt describing styles or elements to avoid (optional)
- **guidance_scale**: The scale for classifier-free guidance (default 8)
- **style_strength**: How strongly the style is applied (default 0.4)
- **structure_strength**: How much of the original structure is preserved (default 0.6)
- **num_inference_steps**: The number of denoising steps (default 30)
- **seed**: A random seed value (optional)

Outputs

- An array of one or more images with the style transfer applied

Capabilities

The style-transfer model can apply diverse artistic styles to a wide variety of input images. It can create impressionist, abstract, or surrealist interpretations of your photos, blending content and style seamlessly. The results are often striking and unique, opening up new creative possibilities.

What can I use it for?

You can use the style-transfer model to create visually arresting artwork from your own photos, whether for personal projects, to sell as digital art, or to enhance marketing materials.
The model works well with human portraits, landscapes, and other common photographic subjects. If you're not comfortable using the command-line tools, you can also try the paid version at ClarityAI.cc, which provides a user-friendly web interface for the style-transfer model.

Things to try

Experiment with different reference images to see how they affect the output. Try abstract paintings, classic works of art, or even unusual textures as the style source. You can also adjust the strength parameters to find the right balance between preserving the original image and applying the new style.
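
The `guidance_scale` input (default 8) refers to classifier-free guidance. In the standard formulation used by Stable Diffusion-style pipelines, the model predicts noise twice per denoising step, once with the prompt and once without, and extrapolates from the unconditional prediction toward the conditional one by the guidance scale. The sketch below applies that formula element-wise to small plain-Python vectors; the function name is hypothetical, and whether style-transfer uses exactly this formulation internally is an assumption.

```python
def classifier_free_guidance(
    uncond: list[float], cond: list[float], scale: float
) -> list[float]:
    """Combine noise predictions as uncond + scale * (cond - uncond)."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]  # illustrative prediction without the prompt
cond = [0.5, 0.5]    # illustrative prediction with the prompt
print(classifier_free_guidance(uncond, cond, scale=1.0))  # equals cond
print(classifier_free_guidance(uncond, cond, scale=8.0))  # default scale
```

At scale 1 the result equals the conditional prediction; larger values such as the default 8 amplify the difference the prompt makes, pushing the output further toward it.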

Updated 6/29/2024

multidiffusion-upscaler

4

The multidiffusion-upscaler is a high-resolution image upscaler and enhancer created by Replicate user philz1337x. Similar models include clarity-upscaler and real-esrgan, which also focus on image upscaling and enhancement, and style-transfer, which transfers artistic style between images. The model is designed to produce high-quality, detailed images from lower-resolution inputs.

Model inputs and outputs

The multidiffusion-upscaler takes an image along with parameters such as the seed, width, height, prompts, and ControlNet settings, and outputs one or more upscaled and enhanced images. It can handle a variety of input image types and sizes, and the output resolution can be adjusted as needed.

Inputs

- **Image**: The input image to be upscaled and enhanced
- **Seed**: A random seed value that controls the output
- **Width/Height**: The desired output image dimensions
- **Prompt**: A text prompt that guides image generation
- **SD VAE, SD Model, Scheduler, ControlNet settings**: Model checkpoint and configuration settings

Outputs

- **Output Image(s)**: One or more upscaled and enhanced versions of the input image

Capabilities

The multidiffusion-upscaler produces high-quality, detailed images from lower-resolution inputs. It can enlarge and sharpen images while preserving important details and features. Prompts and ControlNet settings let users fine-tune the output and customize the style and content of the generated images.

What can I use it for?
The multidiffusion-upscaler can be useful for a variety of applications, such as:

- Enhancing low-resolution images for presentations, publications, or websites
- Upscaling and improving the quality of images for social media or e-commerce
- Generating high-quality images for creative projects, such as digital art or visual design
- Experimenting with different prompts and ControlNet settings to explore the model's creative potential

Users can also combine the clarity-upscaler or style-transfer models with the multidiffusion-upscaler to further enhance and refine their outputs.

Things to try

One interesting aspect of the multidiffusion-upscaler is its use of tiled diffusion, which lets it process large images efficiently. Users can experiment with the tiled diffusion settings, such as tile size and overlap, to find the best balance between speed and output quality. In addition, the model's ControlNet integration lets users explore how different ControlNet models and configurations affect the final image. Experimenting with ControlNet settings such as the control mode and guidance can lead to unique and unexpected results.
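
Tiled diffusion processes a large image as overlapping tiles and merges the results. In the MultiDiffusion approach, overlapping predictions are averaged per pixel at each denoising step, which smooths the seams between tiles. The 1-D sketch below uses two hypothetical helpers, `tile_starts` and `blend`, to show how tile size and overlap determine the tile layout and how uniform averaging merges the overlaps. This model's actual tile sizes, weighting scheme, and 2-D implementation are not described on this page and are not reproduced here.

```python
def tile_starts(length: int, tile: int, overlap: int) -> list[int]:
    """Start offsets of tiles of size `tile` covering [0, length), with
    at least `overlap` pixels shared between neighbouring tiles."""
    if tile >= length:
        return [0]
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # last tile is flush with the far edge
    return starts


def blend(length: int, starts: list[int], tile_values: list[list[float]]) -> list[float]:
    """Average overlapping tile outputs pixel by pixel (uniform weights)."""
    total = [0.0] * length
    count = [0] * length
    for start, values in zip(starts, tile_values):
        for i, v in enumerate(values):
            total[start + i] += v
            count[start + i] += 1
    return [t / n for t, n in zip(total, count)]


starts = tile_starts(10, tile=4, overlap=2)
# Illustrative tile outputs: tile k predicts the constant value k.
tile_values = [[float(k)] * 4 for k in range(len(starts))]
blended = blend(10, starts, tile_values)
print(starts)
print(blended)
```

Applying `tile_starts` independently to the width and height gives a 2-D grid. With the same tile size, a larger overlap shrinks the stride and produces more tiles, and therefore more model evaluations per denoising step, which is the speed versus quality trade-off mentioned above.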

Updated 6/29/2024