InstantID

Maintainer: InstantX


| Property | Value |
|---|---|
| Model Link | View on HuggingFace |
| API Spec | View on HuggingFace |
| Github Link | No Github link provided |
| Paper Link | No paper link provided |


Model overview

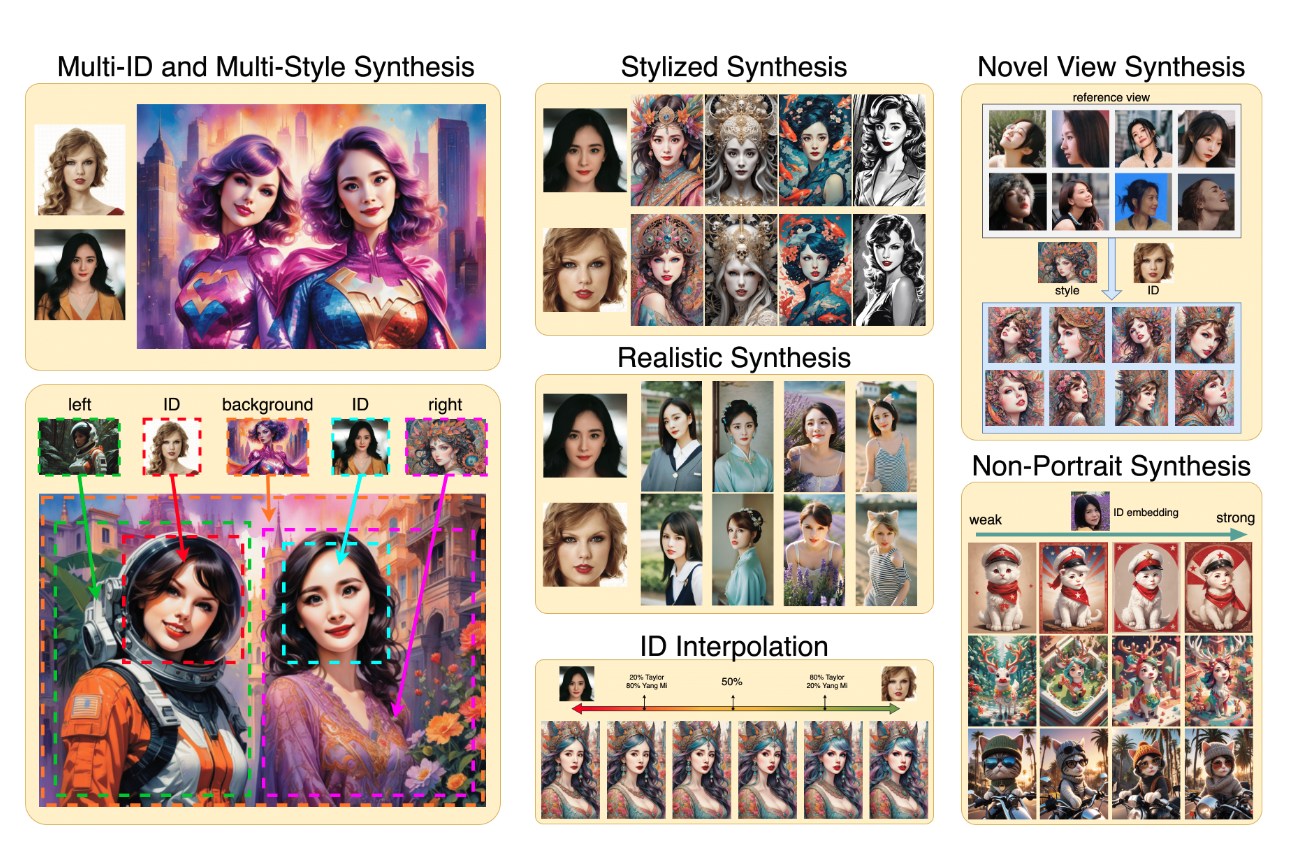

InstantID is an image generation model developed by InstantX that performs ID-preserving generation from a single input image. A general-purpose generative model has no built-in way to keep a specific person's face consistent across outputs; InstantID generates diverse images while preserving the identity of the person in the input image. This makes it useful for applications such as virtual try-on, digital avatar creation, and photo manipulation. InstantID builds on recent work in image-conditioned generation, such as the IP-Adapter-FaceID model, to achieve this capability.

Model inputs and outputs

Inputs

- A single input image containing a face

- (Optional) A text prompt to guide the generation process

Outputs

- Diverse images of the same person in the input image, with varying styles, poses, and expressions

- The generated images preserve the identity of the person in the input image
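The input contract above can be sketched as a small request object. This is only an illustration: the class name `GenerationRequest`, the field names `face_image` and `prompt`, and the set of accepted file extensions are all assumptions, not part of the InstantID code or of any hosted API.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


# Hypothetical container for the two inputs listed above.
# Names and accepted formats are assumptions made for illustration.
@dataclass(frozen=True)
class GenerationRequest:
    face_image: Path               # required: a single image containing a face
    prompt: Optional[str] = None   # optional: text that guides the generation

    def __post_init__(self) -> None:
        allowed = {".png", ".jpg", ".jpeg", ".webp"}  # assumed set of formats
        if self.face_image.suffix.lower() not in allowed:
            raise ValueError(f"unsupported image type: {self.face_image.suffix}")
        if self.prompt is not None and not self.prompt.strip():
            raise ValueError("prompt, when given, must not be blank")


# The prompt can be omitted because it is optional.
request = GenerationRequest(Path("portrait.jpg"), prompt="watercolor portrait")
image_only = GenerationRequest(Path("portrait.jpg"))
```

Validating at construction time keeps malformed requests from reaching the (much slower) generation step.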

Capabilities

InstantID can generate a wide range of images while preserving the identity of the person in the input image. This includes different artistic styles, from photorealistic to more abstract renderings, as well as changes in pose, expression, and clothing. It achieves this with a tuning-free approach: no per-person fine-tuning is needed, and the model instead combines techniques such as CLIP-based image encoding with ID-preserving generation.
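One common way to check whether a generated image keeps the same identity is to compare face embeddings of the input and the output using cosine similarity. The sketch below assumes the embeddings were already produced by some face recognition model; the three-dimensional vectors and the 0.5 threshold are made-up values for illustration, not figures from InstantID.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def same_identity(a: Sequence[float], b: Sequence[float], threshold: float = 0.5) -> bool:
    """Treat two embeddings as the same person above an assumed threshold."""
    return cosine_similarity(a, b) >= threshold


# Toy embeddings (real face embeddings have hundreds of dimensions).
reference = [0.6, 0.8, 0.0]
generated = [0.6, 0.8, 0.1]    # close to the reference
unrelated = [-0.8, 0.6, 0.0]   # orthogonal to the reference

print(same_identity(reference, generated))
print(same_identity(reference, unrelated))
```

In practice the threshold depends on the embedding model and should be calibrated on known matching and non-matching pairs.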

What can I use it for?

InstantID can be used for applications that need to generate images of real people while preserving their identity. Examples include virtual try-on of clothing or accessories, creating digital avatars or virtual personas, and photo manipulation tasks such as changing the style or expression of a person in an image. Because the model produces diverse outputs from a single input image, it is also useful for content creation and other creative applications.

Things to try

One interesting aspect of InstantID is its ability to generate images with varying degrees of photorealism or artistic interpretation. By adjusting the text prompt, you can explore how the model balances preserving the person's identity against producing more abstract or stylized renderings. Because the approach is tuning-free, it can also be applied to new tasks or domains without extensive fine-tuning, which makes it well suited to experimentation and rapid prototyping.
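A small prompt sweep is one way to run this experiment: keep the face and subject description fixed and vary only the style phrase. The helper below only builds the prompt strings. The `build_prompts` name and the style list are illustrative assumptions, and the resulting prompts still have to be passed to whichever InstantID interface you use.

```python
from typing import List


def build_prompts(subject: str, styles: List[str]) -> List[str]:
    """Return one prompt per style, keeping the subject description fixed."""
    return [f"{subject}, {style}" for style in styles]


# Ordered roughly from photorealistic to abstract (an assumed example list).
styles = [
    "photorealistic studio photo",
    "watercolor painting",
    "abstract cubist portrait",
]
prompts = build_prompts("portrait of a person", styles)

for prompt in prompts:
    print(prompt)
```

Comparing the outputs side by side for the same input face shows where identity starts to give way to style.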

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models


IP-Adapter-FaceID


The IP-Adapter-FaceID is an experimental AI model developed by h94 that can generate images in various styles conditioned on a face, using only text prompts. It uses a face ID embedding from a face recognition model instead of a CLIP image embedding, and additionally uses LoRA to improve ID consistency. The model has seen several updates, including IP-Adapter-FaceID-Plus, which uses both the face ID embedding and a CLIP image embedding, and IP-Adapter-FaceID-PlusV2, which makes the CLIP image embedding for the face structure controllable. More recently, two SDXL versions, IP-Adapter-FaceID-SDXL and IP-Adapter-FaceID-PlusV2-SDXL, have been introduced. The model is similar to other face-focused AI models like IP_Adapter-SDXL-Face, GFPGAN, and IP_Adapter-Face-Inpaint.

Model inputs and outputs

Inputs

- Face ID embedding from a face recognition model like InsightFace

Outputs

- Various style images conditioned on the input face ID embedding

Capabilities

The IP-Adapter-FaceID model can generate images of faces in different artistic styles based solely on the face ID embedding, without the need for full image prompts. This can be useful for applications like portrait generation, face modification, and artistic expression.

What can I use it for?

The IP-Adapter-FaceID model is intended for research purposes, such as exploring the capabilities and limitations of face-focused generative models, understanding the impacts of biases, and developing educational or creative tools. The model is not intended to produce factual or true representations of people, and using it for such purposes is out of scope.

Things to try

One interesting aspect to explore with the IP-Adapter-FaceID model is the impact of the face ID embedding on the generated images. By adjusting the weight of the face structure in the IP-Adapter-FaceID-PlusV2 version, users can experiment with different levels of face similarity and artistic interpretation. Additionally, the SDXL variants offer opportunities to study the performance and capabilities of the model in the high-resolution image domain.


instant-id


instant-id is a state-of-the-art AI model developed by the InstantX team that can generate realistic images of real people instantly. It utilizes a tuning-free approach to achieve identity-preserving generation with only a single input image. The model is capable of various downstream tasks such as stylized synthesis, where it can blend the facial features and style of the input image. Compared to similar models like AbsoluteReality V1.8.1, Reliberate v3, Stable Diffusion, Photomaker, and Photomaker Style, instant-id achieves better fidelity and retains good text editability, allowing the generated faces and styles to blend more seamlessly.

Model inputs and outputs

instant-id takes a single input image of a face and a text prompt, and generates one or more realistic images that preserve the identity of the input face while incorporating the desired style and content from the text prompt. The model utilizes a novel identity-preserving generation technique that allows it to generate high-quality, identity-preserving images in a matter of seconds.

Inputs

- **Image**: The input face image used as a reference for the generated images.

- **Prompt**: The text prompt describing the desired style and content of the generated images.

- **Seed** (optional): A random seed value to control the randomness of the generated images.

- **Pose Image** (optional): A reference image used to guide the pose of the generated images.

Outputs

- **Images**: One or more realistic images that preserve the identity of the input face while incorporating the desired style and content from the text prompt.

Capabilities

instant-id is capable of generating highly realistic images of people in a variety of styles and settings, while preserving the identity of the input face. The model can seamlessly blend the facial features and style of the input image, allowing for unique and captivating results. This makes the model a powerful tool for a wide range of applications, from creative content generation to virtual avatars and character design.

What can I use it for?

instant-id can be used for a variety of applications, such as:

- **Creative Content Generation**: Quickly generate unique and realistic images for use in art, design, and multimedia projects.

- **Virtual Avatars**: Create personalized virtual avatars that can be used in games, social media, or other digital environments.

- **Character Design**: Develop realistic and expressive character designs for use in animation, films, or video games.

- **Augmented Reality**: Integrate generated images into augmented reality experiences, allowing for the seamless blending of real and virtual elements.

Things to try

With instant-id, you can experiment with a wide range of text prompts and input images to generate unique and captivating results. Try prompts that explore different styles, genres, or themes, and see how the model can blend the facial features and aesthetics in unexpected ways. You can also experiment with different input images, from close-up portraits to more expressive or stylized faces, to see how the model adapts and responds. By pushing the boundaries of what's possible with identity-preserving generation, you can unlock a world of creative possibilities.
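The optional seed input listed for instant-id controls the randomness of generation. Diffusion models start from random noise, so fixing the seed fixes that starting point. The sketch below is a stand-in for that idea using Python's standard `random` module; the `initial_noise` function is hypothetical and is not how instant-id itself draws noise.

```python
import random
from typing import List


def initial_noise(seed: int, size: int = 4) -> List[float]:
    """Draw `size` Gaussian samples from a generator seeded with `seed`."""
    rng = random.Random(seed)  # independent generator, no global state touched
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


first = initial_noise(seed=42)
second = initial_noise(seed=42)
different = initial_noise(seed=7)

# The same seed reproduces the same noise; a different seed does not.
print(first == second)
print(first == different)
```

Recording the seed alongside the prompt and input image is what makes a particular output repeatable.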


sdxl-lightning-4step


sdxl-lightning-4step is a fast text-to-image model developed by ByteDance that can generate high-quality images in just 4 steps. It is similar to other fast diffusion models like AnimateDiff-Lightning and Instant-ID MultiControlNet, which also aim to speed up the image generation process. Unlike the original Stable Diffusion model, these fast models sacrifice some flexibility and control to achieve faster generation times.

Model inputs and outputs

The sdxl-lightning-4step model takes in a text prompt and various parameters to control the output image, such as the width, height, number of images, and guidance scale. The model can output up to 4 images at a time, with a recommended image size of 1024x1024 or 1280x1280 pixels.

Inputs

- **Prompt**: The text prompt describing the desired image

- **Negative prompt**: A prompt that describes what the model should not generate

- **Width**: The width of the output image

- **Height**: The height of the output image

- **Num outputs**: The number of images to generate (up to 4)

- **Scheduler**: The algorithm used to sample the latent space

- **Guidance scale**: The scale for classifier-free guidance, which controls the trade-off between fidelity to the prompt and sample diversity

- **Num inference steps**: The number of denoising steps, with 4 recommended for best results

- **Seed**: A random seed to control the output image

Outputs

- **Image(s)**: One or more images generated based on the input prompt and parameters

Capabilities

The sdxl-lightning-4step model is capable of generating a wide variety of images based on text prompts, from realistic scenes to imaginative and creative compositions. The model's 4-step generation process allows it to produce high-quality results quickly, making it suitable for applications that require fast image generation.

What can I use it for?

The sdxl-lightning-4step model could be useful for applications that need to generate images in real-time, such as video game asset generation, interactive storytelling, or augmented reality experiences. Businesses could also use the model to quickly generate product visualization, marketing imagery, or custom artwork based on client prompts. Creatives may find the model helpful for ideation, concept development, or rapid prototyping.

Things to try

One interesting thing to try with the sdxl-lightning-4step model is to experiment with the guidance scale parameter. By adjusting the guidance scale, you can control the balance between fidelity to the prompt and diversity of the output. Lower guidance scales may result in more unexpected and imaginative images, while higher scales will produce outputs that are closer to the specified prompt.
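The guidance scale trade-off described above comes from classifier-free guidance, which in its standard form combines an unconditional and a prompt-conditioned noise prediction as `uncond + scale * (cond - uncond)`. The sketch below applies that formula to toy lists of numbers. The `apply_guidance` name and the example values are assumptions, and the source does not state how sdxl-lightning-4step applies guidance internally.

```python
from typing import List


def apply_guidance(uncond: List[float], cond: List[float], scale: float) -> List[float]:
    """Standard classifier-free guidance: uncond + scale * (cond - uncond)."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy stand-ins for the two noise predictions at one denoising step.
uncond = [0.0, 1.0]
cond = [1.0, 3.0]

for scale in (0.0, 1.0, 2.0):
    print(scale, apply_guidance(uncond, cond, scale))
```

A scale of 0 ignores the prompt, a scale of 1 uses the conditioned prediction as is, and larger values push the result further in the direction the prompt suggests.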


instant-id-artistic


instant-id-artistic is a state-of-the-art AI model created by grandlineai that enables zero-shot identity-preserving generation in seconds. The model is built upon Dreamshaper-XL as the base to encourage artistic generations. It offers capabilities that set it apart from similar models like instant-id-photorealistic, gfpgan, instant-id, instant-id-multicontrolnet, and sdxl-lightning-4step.

Model inputs and outputs

instant-id-artistic takes in an image, a text prompt, and several additional parameters to control the output. The model generates a new image that preserves the identity of the input face while applying the specified artistic style based on the text prompt.

Inputs

- **image**: The input image containing a face to preserve

- **prompt**: The text prompt describing the desired artistic style

- **negative_prompt**: The text prompt describing styles to avoid

- **width**: The desired width of the output image

- **height**: The desired height of the output image

- **guidance_scale**: The scale for classifier-free guidance

- **ip_adapter_scale**: The scale for the IP adapter

- **controlnet_conditioning_scale**: The scale for ControlNet conditioning

- **num_inference_steps**: The number of denoising steps

Outputs

- **Output image**: The generated image that preserves the identity of the input face while applying the desired artistic style

Capabilities

instant-id-artistic can generate highly customized, identity-preserving images in a matter of seconds. The model is capable of blending the input face with a wide range of artistic styles, from analog film to vintage photography to ink sketches. Unlike previous works, instant-id-artistic achieves better fidelity and retains good text editability, allowing the generated images to seamlessly blend the face and background.

What can I use it for?

instant-id-artistic can be a powerful tool for creatives, artists, and designers who need to generate personalized, stylized images quickly. The model can be used to create unique portraits, character designs, or even to enhance existing images with a specific artistic look. The zero-shot nature of the model also makes it appealing for applications where a large dataset of labeled images is not available.

Things to try

One interesting aspect of instant-id-artistic is its ability to adapt to various base models, such as Dreamshaper-XL, to encourage different types of artistic generations. Users can experiment with different base models and prompts to explore the range of styles and aesthetics that the model can produce. Additionally, the model's compatibility with LCM-LoRA allows for faster inference times, making it more suitable for real-time applications or large-scale image generation.
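The instant-id-artistic parameters listed above can be collected into a single input dictionary before calling the model. The parameter names below come from that list, but the default values, the `build_inputs` helper, and the validation rules are placeholders assumed for illustration; check the model's own API spec for the real defaults and limits.

```python
from typing import Any, Dict

# Placeholder defaults: assumed values, not taken from the model's API spec.
DEFAULTS: Dict[str, Any] = {
    "negative_prompt": "",
    "width": 1024,
    "height": 1024,
    "guidance_scale": 5.0,
    "ip_adapter_scale": 0.8,
    "controlnet_conditioning_scale": 0.8,
    "num_inference_steps": 30,
}


def build_inputs(image: str, prompt: str, **overrides: Any) -> Dict[str, Any]:
    """Merge required inputs, placeholder defaults, and caller overrides."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise KeyError(f"unknown parameters: {sorted(unknown)}")
    inputs = {"image": image, "prompt": prompt, **DEFAULTS, **overrides}
    if inputs["width"] <= 0 or inputs["height"] <= 0:
        raise ValueError("width and height must be positive")
    if inputs["num_inference_steps"] < 1:
        raise ValueError("num_inference_steps must be at least 1")
    return inputs


# Lower the IP adapter scale to give the style prompt more influence
# relative to the input face (an experiment, not a recommended setting).
inputs = build_inputs("face.png", "ink sketch portrait", ip_adapter_scale=0.5)
print(inputs)
```

Rejecting unknown keys early catches typos such as `ip_adaptor_scale` before a request is sent.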
