ByteDance

Models by this creator

sdxl-lightning-4step

47.2K

sdxl-lightning-4step is a fast text-to-image model developed by ByteDance that can generate high-quality images in just 4 steps. It is listed alongside related models such as AnimateDiff-Lightning, which likewise targets faster generation, and Instant-ID MultiControlNet. Unlike the original Stable Diffusion model, these fast models sacrifice some flexibility and control to achieve faster generation times.

Model inputs and outputs

The sdxl-lightning-4step model takes a text prompt and several parameters that control the output image, such as the width, height, number of images, and guidance scale. The model can output up to 4 images at a time, with a recommended image size of 1024x1024 or 1280x1280 pixels.

Inputs

- **Prompt**: The text prompt describing the desired image
- **Negative prompt**: A prompt that describes what the model should not generate
- **Width**: The width of the output image
- **Height**: The height of the output image
- **Num outputs**: The number of images to generate (up to 4)
- **Scheduler**: The algorithm used to sample the latent space
- **Guidance scale**: The scale for classifier-free guidance, which controls the trade-off between fidelity to the prompt and sample diversity
- **Num inference steps**: The number of denoising steps, with 4 recommended for best results
- **Seed**: A random seed to control the output image

Outputs

- **Image(s)**: One or more images generated based on the input prompt and parameters

Capabilities

The sdxl-lightning-4step model can generate a wide variety of images from text prompts, from realistic scenes to imaginative compositions. Because generation takes only 4 steps, it produces high-quality results quickly, which makes it suitable for applications that require fast image generation.

What can I use it for?

The sdxl-lightning-4step model could be useful for applications that need to generate images in real time, such as video game asset generation, interactive storytelling, or augmented reality experiences. Businesses could also use the model to quickly generate product visualizations, marketing imagery, or custom artwork based on client prompts. Creatives may find the model helpful for ideation, concept development, or rapid prototyping.

Things to try

One interesting thing to try with the sdxl-lightning-4step model is to experiment with the guidance scale parameter, which controls the balance between fidelity to the prompt and diversity of the output. Lower guidance scales may result in more unexpected and imaginative images, while higher scales will produce outputs that are closer to the specified prompt.
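The inputs listed for sdxl-lightning-4step can be gathered into a single request payload before being sent to whatever service hosts the model. The following is a minimal sketch, not the model's actual API: the function name `build_lightning_input`, the snake_case key names, and the default values are assumptions made for illustration. Only the limit of 4 outputs, the 4-step recommendation, and the 1024x1024 size come from the listing.

```python
from typing import Any, Dict, Optional

MAX_OUTPUTS = 4        # the listing says up to 4 images per call
RECOMMENDED_STEPS = 4  # the listing recommends 4 denoising steps


def build_lightning_input(
    prompt: str,
    negative_prompt: str = "",
    width: int = 1024,            # one of the recommended sizes in the listing
    height: int = 1024,
    num_outputs: int = 1,
    scheduler: Optional[str] = None,
    guidance_scale: float = 0.0,  # placeholder default, not taken from the listing
    num_inference_steps: int = RECOMMENDED_STEPS,
    seed: Optional[int] = None,
) -> Dict[str, Any]:
    """Validate the listed inputs and return them as a plain dict.

    Key names are hypothetical; check the hosting service for the real schema.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if not 1 <= num_outputs <= MAX_OUTPUTS:
        raise ValueError(f"num_outputs must be between 1 and {MAX_OUTPUTS}")
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if num_inference_steps < 1:
        raise ValueError("num_inference_steps must be at least 1")
    if guidance_scale < 0:
        raise ValueError("guidance_scale must not be negative")

    payload: Dict[str, Any] = {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "width": width,
        "height": height,
        "num_outputs": num_outputs,
        "guidance_scale": guidance_scale,
        "num_inference_steps": num_inference_steps,
    }
    # Optional inputs are only included when the caller sets them.
    if scheduler is not None:
        payload["scheduler"] = scheduler
    if seed is not None:
        payload["seed"] = seed
    return payload
```

Fixing `seed` while varying only `guidance_scale` is one way to run the experiment suggested under "Things to try", since any difference between the outputs can then be attributed to the guidance setting.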

Updated 5/17/2024

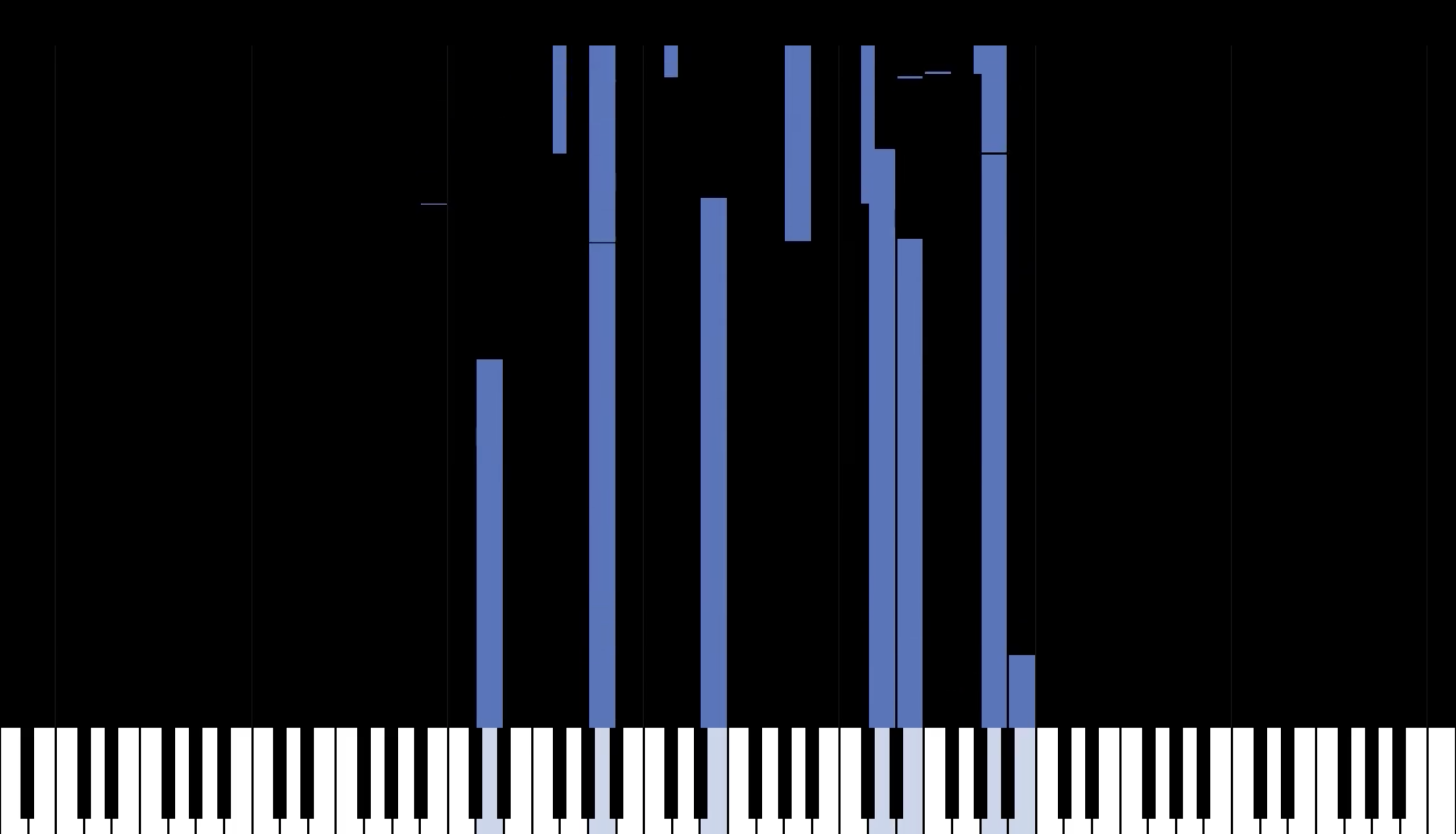

piano-transcription

3

The piano-transcription model is a high-resolution piano transcription system developed by ByteDance that detects piano notes from audio. It converts piano recordings into MIDI files, enabling efficient storage and manipulation of musical performances. Related audio and music models include cantable-diffuguesion for generating and harmonizing Bach chorales, musicgen-fine-tuner for fine-tuning music generation models, and whisperx for accelerated audio transcription. The listing also compares it to stable-diffusion, which generates photorealistic images from text.

Model inputs and outputs

The piano-transcription model takes an audio file as input and outputs a MIDI file representing the transcribed piano performance. The model detects piano notes along with their onsets, offsets, and velocities, enabling detailed, high-resolution transcription.

Inputs

- **audio_input**: The input audio file to be transcribed

Outputs

- **Output**: The transcribed MIDI file representing the piano performance

Capabilities

The piano-transcription model can accurately detect and transcribe piano performances, even for complex, virtuosic pieces. It captures nuanced details like pedal use, note velocity, and precise onset and offset times. This makes it a valuable tool for musicians, composers, and music enthusiasts who want to digitize and analyze piano recordings.

What can I use it for?

The piano-transcription model can be used for a variety of applications, such as converting legacy analog recordings into digital MIDI files, creating sheet music from live performances, and building large-scale classical piano MIDI datasets like the GiantMIDI-Piano dataset developed by the model's creators. This can enable further research and development in areas like music information retrieval, score-informed source separation, and music generation.

Things to try

Experiment with the piano-transcription model by transcribing a variety of piano performances, from classical masterpieces to modern pop songs. Observe how the model handles different styles, dynamics, and pedal use. You can also try combining the transcribed MIDI files with other music AI tools, such as musicgen, to create new compositions.
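To make the transcription output more concrete, the sketch below models a transcribed note as a pitch with an onset, an offset, and a velocity, which are the quantities the listing says the model detects. The `NoteEvent` class and the helper functions are hypothetical and do not reflect the model's actual output format (which is a MIDI file). The pitch arithmetic uses the standard MIDI conventions that note 60 is middle C (C4 in this naming) and note 69 is A4 at 440 Hz.

```python
from dataclasses import dataclass
from typing import List

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


@dataclass(frozen=True)
class NoteEvent:
    """One transcribed note (hypothetical structure for illustration)."""
    pitch: int      # MIDI note number, 0-127
    onset: float    # start time in seconds
    offset: float   # end time in seconds
    velocity: int   # MIDI velocity, 0-127

    @property
    def duration(self) -> float:
        return self.offset - self.onset


def pitch_name(pitch: int) -> str:
    """Name a MIDI note number, using the convention that 60 is C4."""
    octave = pitch // 12 - 1
    return f"{NOTE_NAMES[pitch % 12]}{octave}"


def pitch_frequency_hz(pitch: int) -> float:
    """Equal-tempered frequency with A4 (MIDI note 69) tuned to 440 Hz."""
    return 440.0 * 2.0 ** ((pitch - 69) / 12)


def summarize(events: List[NoteEvent]) -> List[str]:
    """Return one readable line per note, ordered by onset time."""
    ordered = sorted(events, key=lambda e: e.onset)
    return [
        f"{pitch_name(e.pitch)} {e.onset:.2f}s-{e.offset:.2f}s vel={e.velocity}"
        for e in ordered
    ]
```

A summary like this is a quick way to inspect how a transcription handled dynamics (velocity) and timing (onset and offset) across different styles of performance.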

Updated 5/17/2024

res-adapter

1

res-adapter is a plug-and-play resolution adapter developed by ByteDance's AutoML team. It enables any diffusion model to generate resolution-free images without additional training, inference, or style transfer. This is in contrast to models like real-esrgan, which require a separate upscaling step, or kandinsky-2 and kandinsky-2.2, which are trained on specific datasets. ResAdapter is designed to be compatible with a wide range of diffusion models.

Model inputs and outputs

ResAdapter takes a text prompt as input and generates an image as output. It can generate images at resolutions outside the base model's original training domain, allowing for more flexible and diverse outputs.

Inputs

- **Prompt**: The text prompt describing the desired image
- **Width/Height**: The target output image dimensions
- **Resadapter Alpha**: The weight applied to the ResAdapter, ranging from 0 to 1

Outputs

- **Image**: The generated image at the specified resolution

Capabilities

ResAdapter can generate high-quality, consistent images at resolutions beyond a model's original training domain. This allows for more flexibility in the types of images that can be produced, such as generating large, detailed images from small models or vice versa. The model can also be used in combination with other techniques like ControlNet and IP-Adapter to further enhance the outputs.

What can I use it for?

ResAdapter can be used for a variety of text-to-image generation tasks, from creating detailed fantasy scenes to generating stylized portraits. Its ability to produce resolution-free images makes it suitable for use cases where flexibility in image size is important, such as graphic design, video production, or virtual environments. Additionally, the model's compatibility with other techniques allows for even more creative and customized output.

Things to try

Experiment with different values for the Resadapter Alpha parameter to see how it affects the quality and consistency of the generated images. Try using ResAdapter in combination with other models and techniques to see how they complement each other. Explore a wide range of prompts to discover how well the model generates diverse, high-quality images.
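One way to run the suggested Resadapter Alpha experiment is to hold the prompt and dimensions fixed and step the weight evenly from 0 to 1. The sketch below only builds the list of input dictionaries; it does not call any model. The function name `alpha_sweep` and the key names (`prompt`, `width`, `height`, `resadapter_alpha`) are assumptions for illustration, not a confirmed schema.

```python
from typing import Any, Dict, List


def alpha_sweep(
    prompt: str, width: int, height: int, steps: int = 5
) -> List[Dict[str, Any]]:
    """Build one input dict per alpha value, evenly spaced over [0, 1].

    Key names are hypothetical; only the 0-to-1 range comes from the listing.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2 to cover both 0 and 1")
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")

    inputs = []
    for i in range(steps):
        alpha = round(i / (steps - 1), 3)  # 0.0 for the first, 1.0 for the last
        inputs.append(
            {
                "prompt": prompt,
                "width": width,
                "height": height,
                "resadapter_alpha": alpha,
            }
        )
    return inputs
```

Comparing the images produced for each entry shows how strongly the adapter needs to be applied before outputs at the target resolution stay consistent.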

Updated 5/17/2024