instruct-pix2pix

Maintainer: timbrooks

| Property | Value |
|---|---|
| Run this model | Run on HuggingFace |
| API spec | View on HuggingFace |
| GitHub link | No GitHub link provided |
| Paper link | No paper link provided |

Model overview

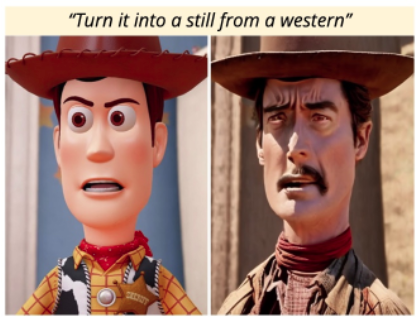

instruct-pix2pix is an instruction-based image editing model maintained by Tim Brooks. Given an input image and a natural language instruction, it produces an edited version of that image. It implements the approach described in the InstructPix2Pix paper, which trains a diffusion model to follow image editing instructions. Unlike plain text-to-image models, which generate an image from a description alone, instruct-pix2pix changes an existing image according to a written instruction, which makes it well suited to applications that need controlled edits to a source image.

Related models such as cartoonizer and stable-diffusion-xl-1.0-inpainting-0.1 also offer finer control over image generation, but they target narrower tasks: cartoonization and inpainting, respectively. In contrast, instruct-pix2pix is designed for general-purpose image editing guided by textual instructions.

Model inputs and outputs

Inputs

- Prompt: A natural language instruction describing the desired edit, such as "turn him into a cyborg".

- Image: The input image to edit. The model uses it as the starting point for the output.

Outputs

- Generated Image: An edited version of the input image that follows the provided instruction.
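
As a rough illustration of these inputs and outputs, the sketch below shows one way the model could be called through the Hugging Face `diffusers` library. It assumes the checkpoint is published as `timbrooks/instruct-pix2pix`, that `diffusers`, `torch`, and `Pillow` are installed, and that a CUDA GPU is available. The helper names `build_call_kwargs` and `edit_image`, the default values, and the file names are illustrative choices, not settings taken from this page.

```python
def build_call_kwargs(image, instruction, steps=20, text_cfg=7.5, image_cfg=1.5):
    """Collect the arguments for one editing call (hypothetical helper).

    The default values are assumptions chosen for illustration.
    """
    if not instruction.strip():
        raise ValueError("instruction must not be empty")
    return {
        "prompt": instruction,              # the editing instruction
        "image": image,                     # the image to edit
        "num_inference_steps": steps,
        "guidance_scale": text_cfg,         # how strongly to follow the text
        "image_guidance_scale": image_cfg,  # how closely to stay near the input
    }


def edit_image(input_path, instruction, output_path="edited.png"):
    """Load the pipeline, apply one instruction, and save the result."""
    # Heavy imports stay inside the function so the helper above
    # remains usable without these packages installed.
    import torch
    from PIL import Image
    from diffusers import StableDiffusionInstructPix2PixPipeline

    # Assumed model ID and a CUDA device; adjust for your setup.
    pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(
        "timbrooks/instruct-pix2pix", torch_dtype=torch.float16
    ).to("cuda")
    image = Image.open(input_path).convert("RGB")
    result = pipe(**build_call_kwargs(image, instruction))
    result.images[0].save(output_path)
    return output_path
```

Under those assumptions, `edit_image("portrait.jpg", "turn him into a cyborg")` would write the edited picture to `edited.png`.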

Capabilities

The instruct-pix2pix model edits images so that they match a textual instruction. For example, you can transform an existing portrait with an instruction like "turn him into a cyborg". The model interprets the meaning of the instruction and applies the corresponding change to the input image.

What can I use it for?

instruct-pix2pix could be useful for a variety of applications that require controlled image generation, such as:

- Creative tools: Allowing artists and designers to quickly generate images that match their creative vision, streamlining the ideation and prototyping process.

- Educational applications: Helping students or hobbyists create custom illustrations to accompany their written work or presentations.

- Assistive technology: Enabling individuals with disabilities or limited artistic skills to generate images to support their needs or express their ideas.

Things to try

One interesting aspect of instruct-pix2pix is that it starts from an existing image and changes it in a controlled way based on textual guidance. For example, you could modify a portrait by instructing the model to "turn the subject into a cyborg" or "make the background more futuristic".

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

instruct-pix2pix

instruct-pix2pix is a powerful image editing model that allows users to edit images based on natural language instructions. Developed by Timothy Brooks, this model is similar to other instructable AI models like InstructPix2Pix and InstructIR, which enable image editing and restoration through textual guidance. It can be considered an extension of the widely used Stable Diffusion model, adding the ability to edit existing images rather than generating new ones from scratch.

Model inputs and outputs

The instruct-pix2pix model takes an image and a textual prompt as inputs, and outputs a new edited image based on the provided instructions. The model is designed to be versatile, allowing users to guide the image editing process through natural language commands.

Inputs

- Image: An existing image that will be edited according to the provided prompt.

- Prompt: A textual description of the desired edits to be made to the input image.

Outputs

- Edited Image: The resulting image after applying the specified edits to the input image.

Capabilities

The instruct-pix2pix model handles a wide range of image editing tasks, from simple modifications like changing the color scheme or adding visual elements, to more complex transformations like turning a person into a cyborg or altering the composition of a scene. The model's ability to interpret natural language instructions allows for an intuitive and flexible editing experience.

What can I use it for?

The instruct-pix2pix model can be used in a variety of applications, such as photo editing, digital art creation, and product visualization. For example, a designer could use the model to quickly experiment with different design ideas by providing textual prompts, or a marketer could create custom product images for an e-commerce platform by instructing the model to make specific changes to stock photos.

Things to try

One interesting aspect of the instruct-pix2pix model is its potential for creative and unexpected image transformations. Users could try prompts that push the boundaries of what the model can do, such as combining different artistic styles, merging multiple objects or characters, or exploring surreal and fantastical imagery.

sdxl-instructpix2pix-768

The sdxl-instructpix2pix-768 is an AI model developed by the Diffusers team that is based on the Stable Diffusion XL (SDXL) model. It has been fine-tuned using the InstructPix2Pix training methodology, which allows the model to follow specific image editing instructions. This model can perform tasks like turning the sky into a cloudy one, making an image look like a Picasso painting, or making a person in an image appear older.

Similar models include the instruction-tuned Stable Diffusion for Cartoonization, the InstructPix2Pix model, and the SD-XL Inpainting 0.1 model. These models all explore ways to fine-tune diffusion-based text-to-image models to better follow specific instructions or perform image editing tasks.

Model inputs and outputs

Inputs

- Prompt: A text description of the desired image edit, such as "Turn sky into a cloudy one" or "Make it a picasso painting".

- Image: An input image that the model will use as a starting point for the edit.

Outputs

- Edited Image: The output image, generated based on the input prompt and the provided image.

Capabilities

The sdxl-instructpix2pix-768 model can follow specific image editing instructions, going beyond simple text-to-image generation. As shown in the examples, it can perform tasks like changing the sky, applying a Picasso-like style, and making a person appear older. This level of control over the image generation process is a key capability of this model.

What can I use it for?

The sdxl-instructpix2pix-768 model can be useful for a variety of creative and artistic applications. Artists and designers could use it to quickly explore different image editing ideas and concepts, speeding up their workflow. Educators could incorporate it into lesson plans, allowing students to experiment with image manipulation. Researchers may also find it useful for studying the capabilities and limitations of instruction-based image generation models.

Things to try

One interesting aspect of the sdxl-instructpix2pix-768 model is its ability to interpret and follow specific instructions related to image editing. You could try providing the model with more complex or nuanced instructions, such as "Make the person in the image look happier" or "Turn the background into a futuristic cityscape." Experimenting with the level of detail and specificity in the prompts can help you better understand the model's capabilities and limitations.

Another area to explore is the model's performance on different types of input images. You could try a range of images, from simple landscapes to more complex scenes, to see how it handles varying levels of visual complexity. This could help you identify which types of images it can edit effectively.

cartoonizer

The cartoonizer model is an "instruction-tuned" version of the Stable Diffusion (v1.5) model, fine-tuned from the existing InstructPix2Pix checkpoints. This pipeline was created by the instruction-tuning-sd team to make Stable Diffusion better at following specific instructions that involve image transformation operations. The training process involved creating an instruction-prompted dataset and then conducting InstructPix2Pix-style training.

Model inputs and outputs

Inputs

- Image: An input image to be cartoonized.

- Prompt: A text description of the desired cartoonization.

Outputs

- Cartoonized image: The input image transformed into a cartoon-style representation based on the given prompt.

Capabilities

The cartoonizer model takes an input image and a text prompt, and generates a cartoon-style version of the image that matches the prompt. This can be useful for a variety of artistic and creative applications, such as generating concept art, illustrations, or stylized images for design projects.

What can I use it for?

The cartoonizer model can be used to create personalized cartoon-style images based on your ideas and prompts. For example, you could use it to generate cartoon portraits of yourself or your friends, or to create illustrations for a children's book or an animated short film. The model's ability to follow specific instructions makes it a useful tool for creative professionals who want to produce cartoon-style content quickly.

Things to try

One thing to try with the cartoonizer model is to experiment with different types of prompts, beyond simple descriptions of the desired output. You could try prompts that incorporate more complex ideas or narratives, and see how the model translates those into a cartoon-style image.

Additionally, you could try combining the cartoonizer with other image-to-image models, such as the stable-diffusion-2-inpainting model, to create more complex cartoon-style compositions.

instruct-pix2pix

instruct-pix2pix is a versatile AI model that allows users to edit images by providing natural language instructions. It is similar to other image-to-image translation models like instructir and deoldify_image, which can perform tasks like face restoration and colorization. However, instruct-pix2pix stands out by allowing users to control the edits through free-form textual instructions, rather than relying solely on predefined editing operations.

Model inputs and outputs

instruct-pix2pix takes an input image and a natural language instruction as inputs, and produces an edited image as output. The model is trained to understand a wide range of editing instructions, from simple changes like "turn him into a cyborg" to more complex transformations.

Inputs

- Input Image: The image you want to edit.

- Instruction Text: The natural language instruction describing the desired edit.

Outputs

- Output Image: The edited image, following the provided instruction.

Capabilities

instruct-pix2pix can perform a diverse range of image editing tasks, from simple modifications like changing an object's appearance, to more complex operations like adding or removing elements from a scene. The model can interpret and carry out a wide variety of instructions, allowing users to be creative and expressive in their edits.

What can I use it for?

instruct-pix2pix can be a useful tool for creative projects, product design, and content creation. For example, you could use it to quickly mock up different design concepts, experiment with character designs, or generate visuals to accompany creative writing. The model's flexibility and ease of use make it an attractive option for a wide range of applications.

Things to try

One interesting aspect of instruct-pix2pix is its ability to preserve details from the original image while still making significant changes based on the provided instruction.

Try experimenting with different levels of the "Cfg Text" and "Cfg Image" parameters to find the right balance between preserving the source image and following the editing instruction. You can also try different phrasings of the instruction to see how the model's interpretation and output change.
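
The "Cfg Text" and "Cfg Image" settings presumably correspond to the two guidance scales described in the InstructPix2Pix paper, where the final noise estimate is combined from three model evaluations: e = e(∅, ∅) + s_I · (e(c_I, ∅) − e(∅, ∅)) + s_T · (e(c_I, c_T) − e(c_I, ∅)), with c_I the input image and c_T the text instruction. The sketch below applies that combination to plain Python lists standing in for noise predictions. The function name and the toy values are made up for illustration, and the mapping of the interface labels to s_T and s_I is an assumption.

```python
def combine_guidance(e_uncond, e_image, e_full, image_cfg, text_cfg):
    """Combine three noise estimates with separate image and text scales.

    e_uncond: estimate with neither image nor text conditioning
    e_image:  estimate conditioned on the input image only
    e_full:   estimate conditioned on both image and instruction
    """
    return [
        u + image_cfg * (i - u) + text_cfg * (f - i)
        for u, i, f in zip(e_uncond, e_image, e_full)
    ]


# Toy values only; a real pipeline would pass model outputs here.
e_uncond = [0.0, 1.0]
e_image = [1.0, 2.0]
e_full = [3.0, 2.5]

# With both scales at 1.0 the result equals the fully conditioned estimate.
neutral = combine_guidance(e_uncond, e_image, e_full, 1.0, 1.0)

# Raising text_cfg amplifies the part of the estimate attributable to the
# instruction; raising image_cfg amplifies the part attributable to the image.
stronger_text = combine_guidance(e_uncond, e_image, e_full, 1.5, 7.5)
```

In this formulation a higher image scale pulls the result toward the source image, and a higher text scale pushes it toward the instruction, which matches the trade-off described above.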
