kosmos-2

Maintainer: lucataco


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | No paper link provided |


Model overview

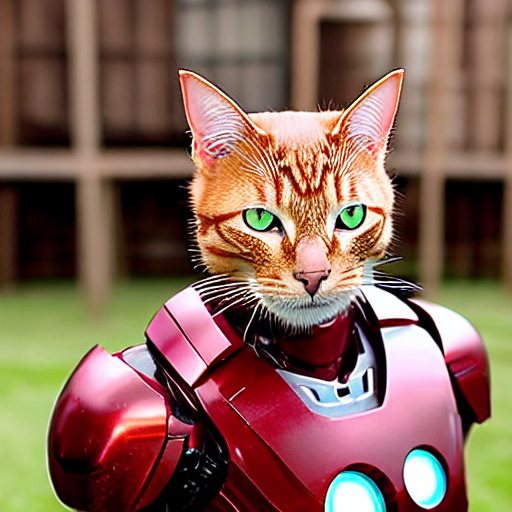

kosmos-2 is a multimodal large language model developed by Microsoft that is designed to ground language in the visual world, linking phrases in its text output to regions of an image. It is related to other vision and multimodal models on Replicate, such as Kosmos-G, Moondream1, and DeepSeek-VL, which focus respectively on generating images, performing vision-language tasks, and understanding real-world applications.

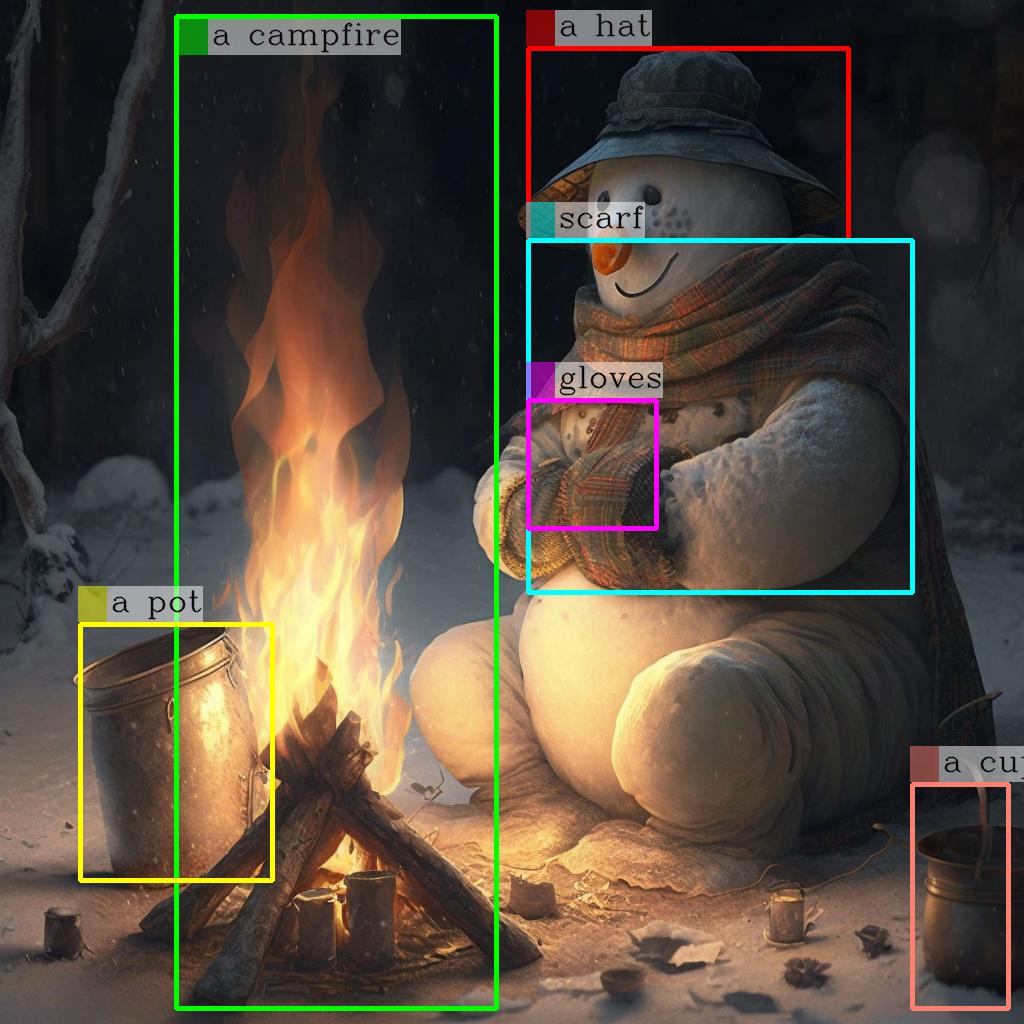

Model inputs and outputs

kosmos-2 takes an image as input and returns a text description of its contents, together with a copy of the image annotated with bounding boxes around the detected objects. The model can also produce a more detailed description if requested.

Inputs

- Image: An input image to be analyzed

Outputs

- Text: A description of the contents of the input image

- Image: The input image with bounding boxes around detected objects
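In the underlying model, the boxes start out as location tokens in the generated text. The Kosmos-2 paper describes dividing the image into a 32 × 32 grid and encoding each box as two location tokens, one for the top-left cell and one for the bottom-right cell, attached to the phrase the box grounds. The Python sketch below parses text in the tag format used by the Hugging Face transformers port of Kosmos-2 and converts each box to normalized and pixel coordinates. It is a simplified illustration, not the code behind this Replicate model: it assumes you have the raw tagged text, maps every location token to the center of its grid cell, and does not special-case degenerate boxes. The function names are hypothetical.

```python
import re

GRID = 32  # cells per side of the location grid (32 x 32 = 1024 location tokens)

# Matches: <phrase>TEXT</phrase><object><patch_index_AAAA><patch_index_BBBB></object>
GROUNDED_SPAN = re.compile(
    r"<phrase>(.*?)</phrase><object><patch_index_(\d+)><patch_index_(\d+)></object>"
)


def cell_center(index: int, grid: int = GRID) -> tuple[float, float]:
    """Map a flat cell index to the normalized (x, y) center of that cell."""
    col, row = index % grid, index // grid
    return (col + 0.5) / grid, (row + 0.5) / grid


def parse_grounded_text(text: str) -> list[tuple[str, tuple[float, float, float, float]]]:
    """Return (phrase, (x1, y1, x2, y2)) pairs with coordinates in [0, 1]."""
    boxes = []
    for phrase, top_left, bottom_right in GROUNDED_SPAN.findall(text):
        x1, y1 = cell_center(int(top_left))       # top-left corner token
        x2, y2 = cell_center(int(bottom_right))   # bottom-right corner token
        boxes.append((phrase.strip(), (x1, y1, x2, y2)))
    return boxes


def to_pixels(
    box: tuple[float, float, float, float], width: int, height: int
) -> tuple[int, int, int, int]:
    """Scale a normalized box to pixel coordinates for drawing on the image."""
    x1, y1, x2, y2 = box
    return round(x1 * width), round(y1 * height), round(x2 * width), round(y2 * height)


if __name__ == "__main__":
    sample = (
        "<grounding> An image of<phrase> a snowman</phrase><object>"
        "<patch_index_0044><patch_index_0863></object> warming himself by"
        "<phrase> a fire</phrase><object><patch_index_0005><patch_index_0911></object>."
    )
    for phrase, box in parse_grounded_text(sample):
        print(phrase, box, to_pixels(box, 64, 64))
```

For the sample sentence, the snowman's box comes out as (0.390625, 0.046875, 0.984375, 0.828125) in normalized coordinates, which scales to (25, 3, 63, 53) on a 64 × 64 image.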

Capabilities

kosmos-2 can detect and describe objects, scenes, and activities in an input image. It can identify and localize multiple objects within a single image and summarize the image's contents in text.

What can I use it for?

kosmos-2 can be useful for applications that require image understanding, such as visual search, image captioning, and scene understanding, for example in e-commerce, social media, or other image-driven products. Because it grounds language in specific image regions, it may also be useful for image-based question answering and visual reasoning.

Things to try

One interesting direction is to combine kosmos-2 with other models, such as Kosmos-G, to build multimodal applications that pair image understanding with image generation. Developers could explore pipelines that use kosmos-2's grounded descriptions alongside other visual and language-processing steps.

This summary was produced with help from an AI and may contain inaccuracies. Check the links to read the original source documents.

Related Models

kosmos-g


Kosmos-G is a multimodal large language model from Microsoft, published on Replicate by adirik, that generates images from text prompts combined with input images. It is related to other text-guided image generation models on Replicate, such as the stylemc model, but aims at more contextual and versatile image creation. Kosmos-G can take multiple input images and a text prompt and generate new images that blend their visual and semantic information, which allows for more nuanced image generation than models that accept only a text prompt.

Model inputs and outputs

Kosmos-G takes one or two starting images, a text prompt, and several configuration settings, and outputs a set of generated images that match the prompt and the visual context.

Inputs

- image1: The first input image, used as a starting point for the generation
- image2: An optional second input image that provides additional visual context
- prompt: The text prompt describing the desired output image
- negative_prompt: An optional text prompt specifying elements to avoid in the generated image
- num_images: The number of images to generate
- num_inference_steps: The number of steps used during the image generation process
- text_guidance_scale: A parameter controlling how strongly the text prompt influences the generated images

Outputs

- Output: An array of generated image URLs

Capabilities

Kosmos-G generates images from a combination of input images and text prompts. It blends the visual information from the starting images with the semantic information in the prompt, producing new compositions that keep the essence of the original visuals while incorporating the requested concepts.

What can I use it for?

Kosmos-G can be used for a variety of creative and practical applications, such as:

- Generating concept art or illustrations for creative projects
- Producing visuals for marketing and advertising campaigns
- Enhancing existing images by blending them with new text-based elements
- Supporting ideation and visualization for product design or other visual projects

Because it accepts both visual and textual inputs, the model suits users who want to create distinctive, expressive imagery.

Things to try

Kosmos-G can integrate multiple visual and conceptual elements in a single image. Try giving the model a starting image and a prompt that describes a specific scene or environment, then observe how it blends elements from the input image with the new concepts. You can also experiment with different combinations of input images and prompts to see the range of outputs the model can produce.
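The Kosmos-G input list maps naturally onto a request payload. The Python sketch below assembles one, assuming the parameter names listed (image1, image2, prompt, and so on) are the exact input keys and that optional inputs can simply be omitted so the model falls back to its own defaults. The helper name, the validation rules, and the example URL are hypothetical, no real default values are implied, and actually sending the request is left out.

```python
from typing import Any, Optional


def build_kosmos_g_input(
    prompt: str,
    image1: str,
    image2: Optional[str] = None,
    negative_prompt: Optional[str] = None,
    num_images: Optional[int] = None,
    num_inference_steps: Optional[int] = None,
    text_guidance_scale: Optional[float] = None,
) -> dict[str, Any]:
    """Assemble a Kosmos-G input dict, leaving out options that were not set."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    for name, value in (("num_images", num_images), ("num_inference_steps", num_inference_steps)):
        if value is not None and value < 1:
            raise ValueError(f"{name} must be a positive integer")

    payload: dict[str, Any] = {"prompt": prompt, "image1": image1}
    optional = {
        "image2": image2,
        "negative_prompt": negative_prompt,
        "num_images": num_images,
        "num_inference_steps": num_inference_steps,
        "text_guidance_scale": text_guidance_scale,
    }
    # Forward only the optional settings the caller actually provided.
    payload.update({key: value for key, value in optional.items() if value is not None})
    return payload


if __name__ == "__main__":
    print(build_kosmos_g_input(
        prompt="the same cat, sitting on a beach at sunset",
        image1="https://example.com/cat.png",  # hypothetical URL
        num_images=2,
    ))
```

Dropping unset keys, rather than filling in guessed defaults, keeps the sketch from overriding whatever defaults the deployed model actually uses.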


moondream1


moondream1 is a compact vision language model, published on Replicate by lucataco. Compared with larger models such as LLaVA-1.5 and MC-LLaVA-3B, moondream1 has only 1.6 billion parameters but still achieves competitive performance on visual understanding benchmarks such as VQAv2, GQA, VizWiz, and TextVQA. This makes it a candidate for applications with constrained compute resources, such as edge devices.

Model inputs and outputs

moondream1 is a multimodal model that takes both an image and a text input: the image to analyze and a prompt or question about it. The model then generates a textual response to the prompt.

Inputs

- Image: The input image, supplied as a URI
- Prompt: A textual prompt or question about the input image

Outputs

- Textual response: The model's generated answer or description based on the input image and prompt

Capabilities

moondream1 demonstrates strong visual understanding, as reflected in its results on benchmarks such as VQAv2 and GQA. It can answer a variety of questions about the content, objects, and context of input images, and it can generate detailed descriptions and explanations, as the example responses in its README show.

What can I use it for?

moondream1 could be useful for applications that need efficient visual understanding, such as image captioning, visual question answering, or visual reasoning. Given its small size, it could be deployed on edge devices or in other resource-constrained environments to provide interactive visual AI capabilities.

Things to try

One interesting aspect of moondream1 is its ability to give nuanced, contextual responses to prompts about images. In the README examples, for instance, the model not only identifies objects and attributes but also discusses possible reasons for a dog's aggressive behavior and the likely purpose of the "Little Book of Deep Learning." Exploring this kind of holistic, contextual understanding could lead to interesting applications in visual reasoning and multimodal interaction.
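moondream1's image input is described as a URI. A public URL is the usual choice, but for a local file one option is a base64 data URI. The Python sketch below builds one with the standard library and pairs it with the prompt. It assumes the endpoint accepts data URIs (Replicate's HTTP API generally does for small files) and that the input keys are image and prompt; both helper names are hypothetical.

```python
import base64
import mimetypes
from pathlib import Path


def to_data_uri(path: str) -> str:
    """Encode a local image file as a data URI: data:<mime>;base64,<payload>."""
    mime, _ = mimetypes.guess_type(path)  # inferred from the file extension only
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"cannot infer an image MIME type for {path!r}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_moondream_input(image_path: str, prompt: str) -> dict[str, str]:
    """Pair the encoded image with a question or instruction about it."""
    return {"image": to_data_uri(image_path), "prompt": prompt}
```

Base64 inflates the payload by roughly a third, so for large images a hosted URL is usually the better fit.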


phi-2


The phi-2 model is a Cog implementation of Microsoft's Phi-2 model, packaged by Replicate team member lucataco. Phi-2 is a 2.7-billion-parameter language model trained by Microsoft, suited to tasks such as question answering, text generation, and text summarization. It predates Microsoft's later Phi-3 models, such as Phi-3-Mini-4K-Instruct.

Model inputs and outputs

The phi-2 model takes a text prompt as input and generates text in response. The input can be up to 2048 tokens long (Phi-2's context length), and the response is limited to 200 tokens.

Inputs

- Prompt: The text prompt that the model uses to generate a response

Outputs

- Output: The text generated by the model in response to the input prompt

Capabilities

The phi-2 model can be used for a variety of tasks, such as question answering, text generation, and text summarization. It was trained on a large amount of data and shows strong performance across a range of language understanding and generation tasks.

What can I use it for?

The phi-2 model can be used for applications such as:

- Content generation: Generating text content such as blog posts, articles, or stories
- Question answering: Answering questions with relevant, informative responses
- Summarization: Summarizing long documents or articles, highlighting their key points
- Dialogue systems: Powering conversational agents or chatbots

Things to try

Experiment with different prompts and see how the model responds. For example, try prompts that involve creative writing, analytical tasks, or open-ended questions, and compare the responses. You could also combine the model with other AI tools or frameworks to build more sophisticated applications.
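Prompt format matters for a base model like Phi-2. Microsoft's Phi-2 model card on Hugging Face suggests, among others, a QA format of the form `Instruct: <prompt>\nOutput:` and a chat format with alternating named speakers that ends with the speaker who should reply next. The Python helpers below are a hypothetical sketch that builds those two strings. The summary does not say whether this Replicate deployment applies a template of its own, so treat the formats as a starting point for the prompt experiments described above.

```python
def qa_prompt(instruction: str) -> str:
    """Wrap a request in the 'Instruct: ... / Output:' QA format."""
    return f"Instruct: {instruction.strip()}\nOutput:"


def chat_prompt(turns: list[tuple[str, str]], next_speaker: str) -> str:
    """Render (speaker, text) turns one per line, ending with the speaker who should reply."""
    lines = [f"{speaker}: {text.strip()}" for speaker, text in turns]
    lines.append(f"{next_speaker}:")  # the model continues from here
    return "\n".join(lines)


if __name__ == "__main__":
    print(qa_prompt("Summarize the causes of the French Revolution in three sentences."))
    print(chat_prompt([("Alice", "Any tips for staying focused while studying?")], "Bob"))
```

Ending the string with a bare label ("Output:" or "Bob:") nudges the model to continue in that role rather than keep writing the prompt.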


sdxs-512-0.9


sdxs-512-0.9 generates images from text prompts in real time. It was trained using score distillation and feature matching techniques. It is similar to other text-to-image models published on Replicate by the same maintainer, lucataco, such as SDXL, SDXL-Lightning, and SSD-1B, which offer different trade-offs between speed, quality, and model size.

Model inputs and outputs

The sdxs-512-0.9 model takes a text prompt, an optional image, and various parameters that control the output, and generates one or more images.

Inputs

- Prompt: The text prompt that describes the image to be generated
- Seed: A random seed that controls the randomness of the generated image
- Image: An optional input image for "img2img"-style generation
- Width/Height: The desired size of the output image
- Num Images: The number of images to generate per prompt
- Guidance Scale: Controls how strongly the text prompt influences the generated image
- Negative Prompt: A text prompt describing aspects to avoid in the generated image
- Prompt Strength: The strength of the text prompt when using an input image
- Sizing Strategy: How to resize the input image
- Num Inference Steps: The number of denoising steps to perform during generation
- Disable Safety Checker: Whether to disable the safety checker for the generated images

Outputs

- One or more images matching the input prompt

Capabilities

sdxs-512-0.9 can generate a wide variety of detailed, realistic images. It is particularly well suited to photorealistic portraits, scenes, and objects, and it can produce images in a specific artistic style or mood based on the prompt.

What can I use it for?

sdxs-512-0.9 could be used for various creative and commercial applications, such as:

- Generating concept art or illustrations for games, films, or books
- Creating stock photography or product images for e-commerce
- Producing personalized artwork or portraits for customers
- Experimenting with different artistic styles and techniques
- Enhancing existing images through "img2img" generation

Things to try

Experiment with different prompts to see the range of images sdxs-512-0.9 can produce, and explore the effects of adjusting parameters such as guidance scale, prompt strength, and the number of inference steps. For a more interactive experience, you can integrate the model into a web application or a creative coding environment.
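Some of the sdxs-512-0.9 inputs only make sense for img2img generation. The Python sketch below assembles an input payload and enforces that distinction: prompt_strength and sizing_strategy are sent only together with an input image, and a seed is included whenever one is given so a run can be repeated with the same randomness. The snake_case keys are an assumption derived from the listed parameter names, as are the 0 to 1 range for prompt_strength and the helper name; omitted options are assumed to fall back to the model's defaults.

```python
from typing import Any, Optional


def build_sdxs_input(
    prompt: str,
    *,
    seed: Optional[int] = None,
    image: Optional[str] = None,
    width: Optional[int] = None,
    height: Optional[int] = None,
    num_images: Optional[int] = None,
    guidance_scale: Optional[float] = None,
    negative_prompt: Optional[str] = None,
    prompt_strength: Optional[float] = None,
    sizing_strategy: Optional[str] = None,
    num_inference_steps: Optional[int] = None,
    disable_safety_checker: Optional[bool] = None,
) -> dict[str, Any]:
    """Build an sdxs-512-0.9 input dict (assumed keys), dropping unset options."""
    # img2img-only settings are meaningless without a source image.
    if image is None and (prompt_strength is not None or sizing_strategy is not None):
        raise ValueError("prompt_strength and sizing_strategy require an input image")
    if prompt_strength is not None and not 0.0 <= prompt_strength <= 1.0:
        raise ValueError("prompt_strength must be between 0 and 1")

    options = {
        "seed": seed,
        "image": image,
        "width": width,
        "height": height,
        "num_images": num_images,
        "guidance_scale": guidance_scale,
        "negative_prompt": negative_prompt,
        "prompt_strength": prompt_strength,
        "sizing_strategy": sizing_strategy,
        "num_inference_steps": num_inference_steps,
        "disable_safety_checker": disable_safety_checker,
    }
    payload: dict[str, Any] = {"prompt": prompt}
    payload.update({key: value for key, value in options.items() if value is not None})
    return payload
```

Checking `is not None` rather than truthiness keeps legitimate zero values, such as `seed=0`, in the payload.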
