pix2struct-ai2d-base

Maintainer: google


| Property | Value |
|---|---|
| Run this model | Run on Hugging Face |
| API spec | View on Hugging Face |
| GitHub link | No GitHub link provided |
| Paper link | No paper link provided |


Model overview

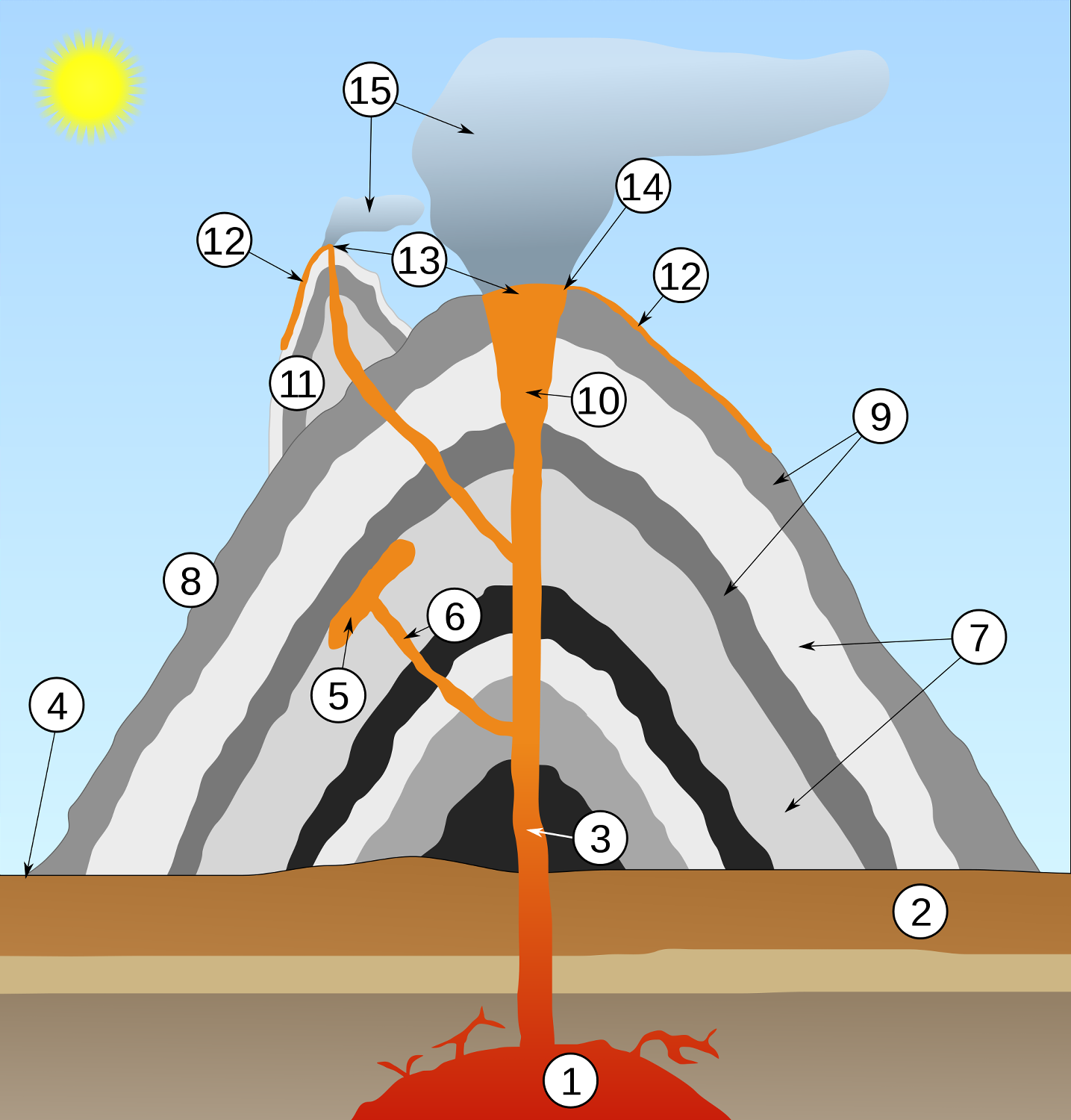

The pix2struct-ai2d-base model is an image encoder-text decoder model developed by Google. It is based on the Pix2Struct architecture, which is pretrained by learning to parse masked screenshots of web pages into simplified HTML. This pretraining strategy gives the model general visual understanding that can be fine-tuned for downstream tasks such as image captioning and visual question answering. This checkpoint has been further fine-tuned on the AI2D dataset for visual question answering over scientific diagrams.

Model inputs and outputs

Inputs

- Image: The model takes an image as input. Pix2Struct models handle a range of visual domains, including documents, illustrations, user interfaces, and natural images; this checkpoint is tuned for scientific diagrams.

- Text prompt: The model can also take a text prompt as input, such as a question about the contents of the image.

Outputs

- Text response: The model outputs a text response to the given image and text prompt, which can be an answer to a question, a caption describing the image, or other visually-grounded language.
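The input/output contract above can be sketched with the Hugging Face `transformers` library. This is a minimal sketch, not an official recipe: it assumes the checkpoint is published under the id `google/pix2struct-ai2d-base`, and that answer options are listed inline in the question as `(1) ... (2) ...`. The helper names `format_question` and `answer_diagram_question` are hypothetical.

```python
from typing import Sequence

MODEL_ID = "google/pix2struct-ai2d-base"  # assumed Hugging Face checkpoint id


def format_question(question: str, choices: Sequence[str]) -> str:
    """Append numbered answer options to a question, e.g. '... (1) a (2) b'."""
    options = " ".join(f"({i}) {choice}" for i, choice in enumerate(choices, start=1))
    return f"{question.strip()} {options}".strip()


def answer_diagram_question(image, question: str, choices: Sequence[str]) -> str:
    """Run the model on a PIL image and return the decoded text answer.

    Heavy dependencies are imported lazily so the prompt helper above can be
    used without them. The first call downloads the checkpoint.
    """
    from transformers import Pix2StructForConditionalGeneration, Pix2StructProcessor

    processor = Pix2StructProcessor.from_pretrained(MODEL_ID)
    model = Pix2StructForConditionalGeneration.from_pretrained(MODEL_ID)

    # Image and text prompt go in together; the processor returns image patches.
    prompt = format_question(question, choices)
    inputs = processor(images=image, text=prompt, return_tensors="pt")

    # Text comes out: generate token ids, then decode them to a string.
    generated_ids = model.generate(**inputs, max_new_tokens=20)
    return processor.decode(generated_ids[0], skip_special_tokens=True)
```

Calling `answer_diagram_question(image, "What does the label 15 represent?", ["lava", "core", "tunnel", "ash cloud"])` with a diagram loaded through Pillow would return the model's text answer. Loading the processor and model once and reusing them would be preferable for more than a single question.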

Capabilities

The pix2struct-ai2d-base model is fine-tuned to answer questions about the content and structure of scientific diagrams. Other checkpoints in the Pix2Struct family, fine-tuned from the same pretrained model, cover tasks such as captioning user interface screenshots and answering questions about documents. The shared pretraining on web page screenshots is what allows the architecture to transfer across these visual domains.

What can I use it for?

The pix2struct-ai2d-base model is suited to applications that involve understanding and generating visually-grounded language, such as:

- Scientific diagram VQA: Answering questions about the content and structure of scientific diagrams, for example in educational or research tools. This is the task the checkpoint was fine-tuned for.

- User interface understanding: Interpreting and describing the elements and functionality of user interfaces for accessibility, design, and testing purposes. This would likely require further fine-tuning, or a Pix2Struct checkpoint trained for that task.

- Multimodal document understanding: Extracting information from documents that contain both text and visual elements, for enterprise and academic use cases. As with user interfaces, this would likely require a checkpoint fine-tuned on document data.
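For the scientific diagram VQA use case, a small evaluation harness helps check whether the model is good enough for a given application. The sketch below assumes exact-match scoring after light normalization, which is an illustrative choice and not necessarily the official AI2D metric. The `predict` callable is a hypothetical stand-in for real model inference, and the two sample questions are made up for the example.

```python
from typing import Callable, Iterable, Tuple

Example = Tuple[object, str, str]  # (image, question, expected answer)


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(text.lower().split())


def accuracy(examples: Iterable[Example], predict: Callable[[object, str], str]) -> float:
    """Fraction of examples whose normalized prediction exactly matches the expected answer."""
    total = 0
    correct = 0
    for image, question, expected in examples:
        total += 1
        if normalize(predict(image, question)) == normalize(expected):
            correct += 1
    return correct / total if total else 0.0


# Stand-in predictor so the sketch runs without a model; swap in real inference.
def always_core(image: object, question: str) -> str:
    return "Core"


# Illustrative examples only; images are omitted because the stub ignores them.
sample = [
    (None, "What does the label 15 represent? (1) lava (2) core", "core"),
    (None, "Which part is the crater? (1) A (2) B", "B"),
]
score = accuracy(sample, always_core)
print(f"exact-match accuracy: {score:.2f}")
```

Keeping the predictor as a plain callable means the same harness can compare this checkpoint against other models without changing the scoring code.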

Things to try

One interesting aspect of the pix2struct-ai2d-base model is that language prompts are integrated directly into the input image: the question is rendered as text on the image rather than passed as a separate text input, so the model reads the prompt and the diagram through the same visual pathway. This could be a useful feature to explore for applications that involve iterative or interactive visual language understanding, such as educational tools or design workflows.
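The idea of placing the prompt inside the image can be illustrated with a few lines of Python. This is a simplified sketch of the concept, not the preprocessing the model's own processor performs: the function names, the fixed `char_width` and `line_height` values, and the use of Pillow's default font are all assumptions made for the example.

```python
import textwrap
from typing import List, Tuple


def layout_header(question: str, image_size: Tuple[int, int],
                  char_width: int = 10, line_height: int = 20) -> Tuple[List[str], Tuple[int, int]]:
    """Wrap the question to the image width and return (lines, combined canvas size)."""
    width, height = image_size
    max_chars = max(1, width // char_width)  # rough estimate of characters per line
    lines = textwrap.wrap(question, width=max_chars) or [""]
    header_height = len(lines) * line_height
    return lines, (width, header_height + height)


def render_header(image, question: str, char_width: int = 10, line_height: int = 20):
    """Return a new PIL image with the question drawn in a white band above `image`."""
    from PIL import Image, ImageDraw  # Pillow, imported lazily

    lines, canvas_size = layout_header(question, image.size, char_width, line_height)
    header_height = canvas_size[1] - image.size[1]
    canvas = Image.new("RGB", canvas_size, "white")
    draw = ImageDraw.Draw(canvas)
    for row, line in enumerate(lines):
        draw.text((0, row * line_height), line, fill="black")  # default bitmap font
    canvas.paste(image.convert("RGB"), (0, header_height))  # original image below the header
    return canvas


lines, size = layout_header("What does the label 15 represent?", (200, 300))
print(lines, size)
```

Here a 200-pixel-wide image leaves room for about 20 characters per line under the assumed character width, so the question wraps onto two lines and the canvas grows by two line heights.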

Another interesting direction could be to investigate the model's ability to generalize to new visual domains beyond the web pages and scientific diagrams it was trained on. By fine-tuning the model on additional datasets or applying transfer learning techniques, it may be possible to expand the model's capabilities to handle an even wider range of visually-situated language tasks.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models


pix2struct-base


The pix2struct-base model is a pretrained image encoder-text decoder model developed by Google. It is part of the Pix2Struct family of models, which are trained on image-text pairs for various tasks like image captioning and visual question answering. The full list of available Pix2Struct models can be found in Table 1 of the paper. The Pix2Struct model is trained to parse masked screenshots of web pages into simplified HTML, leveraging the richness of visual elements in web content to learn a diverse set of visually-situated language understanding skills.

Model inputs and outputs

Inputs

- Images: The model takes images as input, which can be used for tasks like image captioning and visual question answering.

- Text: The model can also take text prompts or questions as input, which are then used to generate relevant outputs.

Outputs

- Text: The primary output of the Pix2Struct model is text, such as captions, answers to questions, or HTML representations of visual content.

Capabilities

The pix2struct-base model is capable of understanding and reasoning about visually-situated language, outperforming previous domain-specific models on a range of tasks across documents, illustrations, user interfaces, and natural images. By learning to parse web page screenshots into HTML, the model gains broad capabilities that can be fine-tuned for various downstream applications.

What can I use it for?

The Pix2Struct model can be used for a variety of tasks involving visually-situated language, such as:

- Image captioning: Generating textual descriptions of images.

- Visual question answering: Answering questions about the contents of an image.

- Document understanding: Extracting relevant information from documents with text and visual elements.

- User interface comprehension: Interpreting and reasoning about user interface elements and interactions.

To use the model, you can fine-tune it on your specific task and dataset. A conversion script is provided to convert the model from the original T5X checkpoint to a Hugging Face-compatible format.

Things to try

One interesting aspect of the Pix2Struct model is its ability to learn visually-situated language understanding by training on web page screenshots and HTML. This allows the model to develop broad capabilities that can be applied to a range of downstream tasks, rather than being limited to a specific domain. When fine-tuning the model, it would be interesting to explore how it performs on tasks that require integrating visual and textual information, such as question answering about diagrams or tables in documents.


deplot


The deplot model, developed by Google, targets reasoning over visual data such as charts and plots. Unlike previous state-of-the-art models that require extensive training on thousands of examples, deplot decomposes the challenge of visual language reasoning into two steps: (1) plot-to-text translation and (2) reasoning over the translated text. This one-shot solution leverages the few-shot reasoning capabilities of large language models (LLMs) to achieve significant improvements in understanding human-written queries related to chart analysis.

The key component of deplot is a modality conversion module that translates the image of a plot or chart into a linearized table. This output can then be used directly to prompt a pretrained LLM, allowing it to exploit its reasoning abilities. This approach sets deplot apart from traditional models, which often struggle with complex human-written queries. Other catalog models such as gfpgan, pixart-xl-2, llava-13b, thinkdiffusionxl, and sdxl address different image tasks, whereas deplot is aimed specifically at visual language reasoning over charts.

Model inputs and outputs

Inputs

- Image: The image of a chart or plot that the model will process and translate to text.

- Text: A human-written query or question about the information contained in the chart or plot.

Outputs

- Linearized table: The output of the modality conversion module, which translates the input image into a tabular format that can be readily used to prompt a large language model.

- Answers: The response generated by the LLM based on the linearized table, addressing the original human-written query or question.

Capabilities

The deplot model is designed to comprehend complex visual data and answer human-written queries about charts and plots. By bridging the gap between image and text, deplot allows users to apply the reasoning capabilities of LLMs to visual data. This approach significantly outperforms previous state-of-the-art models, especially on challenging, human-written queries.

What can I use it for?

The deplot model can be employed in a variety of applications where the understanding of visual data is crucial. Some potential use cases include:

- Data analysis and visualization: Researchers, analysts, and data scientists can use deplot to quickly extract insights from complex charts and plots, enabling more efficient data exploration and decision-making.

- Automated report generation: Businesses can use deplot to generate summaries and insights from visual data, streamlining the creation of reports and presentations.

- Educational applications: Educators can use deplot to help students better comprehend and analyze visual information.

Things to try

One interesting aspect of the deplot model is its ability to handle a wide range of chart types and formats. Try experimenting with different types of visualizations, such as line charts, scatter plots, and bar graphs, to see how the model performs. Additionally, you can explore how well the model answers open-ended, human-written questions about the data presented in the charts.


pix2struct


pix2struct is an image-to-text model developed by researchers at Google. It uses a novel pretraining strategy, learning to parse masked screenshots of web pages into simplified HTML. This approach allows the model to learn a general understanding of visually-situated language, which can then be fine-tuned on a variety of downstream tasks.

The model is closely related to pix2struct-base, which comes from the same team and shares its architecture and pretraining objective. cogvlm is another visual language model, from a different group, that also aims to provide a versatile foundation for understanding the interplay between images and text.

Model inputs and outputs

Inputs

- Text: Input text for the model to process.

- Image: Input image for the model to analyze.

- Model name: The specific pix2struct model to use, e.g. screen2words.

Outputs

- Output: The model's generated response, which could be a caption, a structured representation, or an answer to a question, depending on the specific task.

Capabilities

pix2struct can be applied to a wide range of visual language understanding tasks. It has demonstrated state-of-the-art performance on a variety of benchmarks spanning documents, illustrations, user interfaces, and natural images. The model's ability to learn from web-based data makes it well-suited for handling the diversity of visually-situated language found in the real world.

What can I use it for?

pix2struct can be used for a variety of applications that involve understanding the relationship between images and text, such as:

- Image captioning: Generating descriptive captions for images.

- Visual question answering: Answering questions about the content of an image.

- Document understanding: Extracting structured information from document images.

- User interface analysis: Parsing and understanding the layout and functionality of user interface screenshots.

Given its broad capabilities, pix2struct could be a valuable tool for developers, researchers, and businesses working on projects that require visually-grounded language understanding.

Things to try

One interesting aspect of pix2struct is its flexible integration of language and vision inputs. The model can accept language prompts, such as questions, that are rendered directly on top of the input image. This allows for more nuanced and interactive task formulations, where the model can reason about the image in the context of a specific query or instruction.

Developers and researchers could explore this feature to create applications that blend image analysis and language understanding. For example, one could build interactive visual assistants that answer questions about the contents of an image or provide guidance based on a user's instructions.


PixArt-XL-2-1024-MS


PixArt-XL-2-1024-MS is a diffusion-transformer-based text-to-image generative model developed by PixArt-alpha. It can directly generate 1024px images from text prompts within a single sampling process, using a fixed, pretrained T5 text encoder and a VAE latent feature encoder.

The model is related to other latent diffusion text-to-image models like stable-diffusion-xl-refiner-1.0 and pixart-xl-2. However, PixArt-XL-2-1024-MS is specifically optimized for generating high-resolution 1024px images in a single pass.

Model inputs and outputs

Inputs

- Text prompts: The model can generate images directly from natural language text descriptions.

Outputs

- 1024px images: The model outputs high-resolution 1024x1024 pixel images based on the input text prompts.

Capabilities

The PixArt-XL-2-1024-MS model generates detailed, photorealistic images from a wide range of text descriptions. It can create realistic scenes, objects, and characters with a high level of visual fidelity. The model's ability to produce 1024px images in a single step sets it apart from other text-to-image models that may require multiple stages or lower-resolution outputs.

What can I use it for?

The PixArt-XL-2-1024-MS model can be used for a variety of applications, including:

- Art and design: Generating unique, high-quality images for use in art, illustration, graphic design, and other creative fields.

- Education and training: Creating visual aids and educational materials to complement lesson plans or research.

- Entertainment and media: Producing images for use in video games, films, animations, and other media.

- Research and development: Exploring the capabilities and limitations of advanced text-to-image generative models.

The model's maintainers provide access to the model through a Hugging Face demo, a GitHub project page, and a free trial on Google Colab, making it readily available for a wide range of users and applications.

Things to try

One interesting aspect of the PixArt-XL-2-1024-MS model is its ability to generate highly detailed and photorealistic images. Try experimenting with specific, descriptive prompts that challenge the model's capabilities, such as:

- "A futuristic city skyline at night, with neon-lit skyscrapers and flying cars in the background"

- "A close-up portrait of a dragon, with intricate scales and glowing eyes"

- "A serene landscape of a snow-capped mountain range, with a crystal-clear lake in the foreground"

Prompts like these help reveal the model's strengths and limitations, and give a clearer picture of its potential applications.
