arcane-diffusion

Maintainer: tstramer


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | No GitHub link provided |
| Paper link | No paper link provided |


Model overview

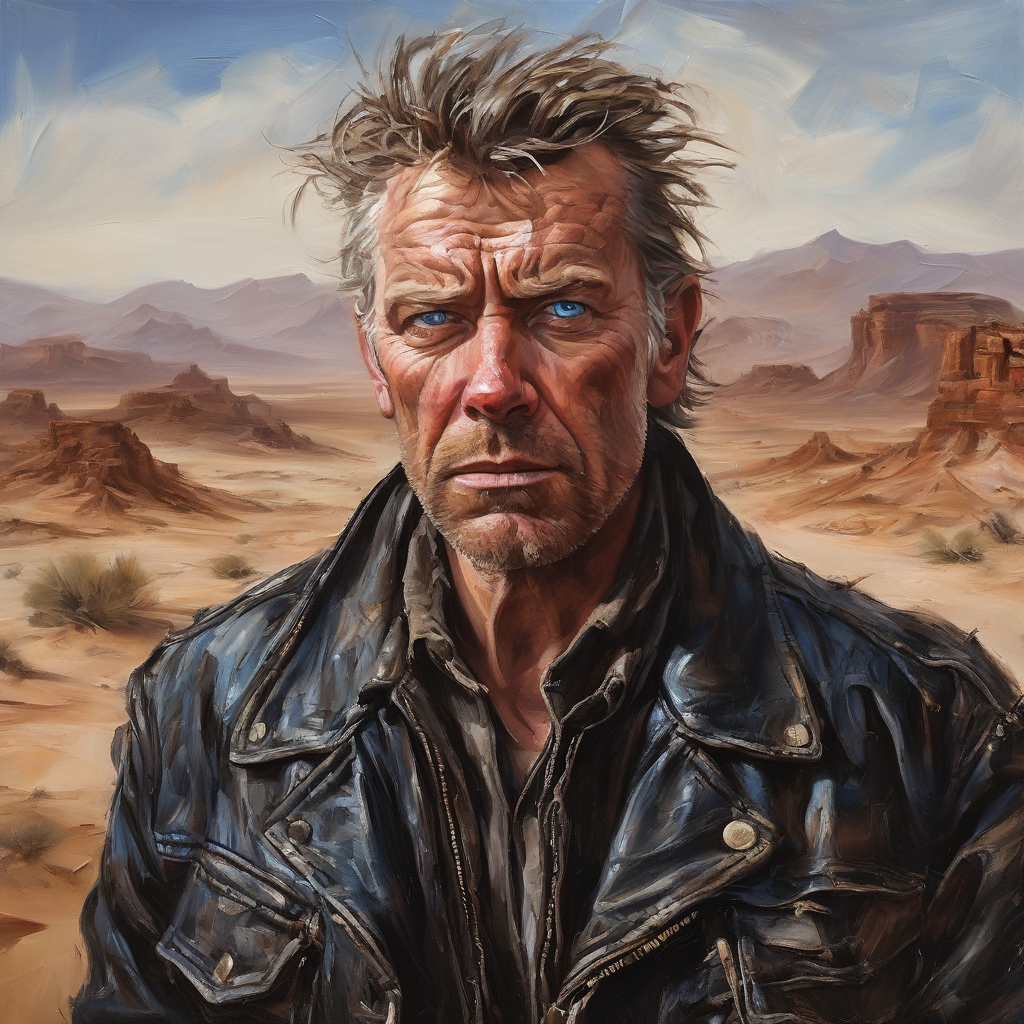

arcane-diffusion is a fine-tuned Stable Diffusion model trained on images from the TV show Arcane. It can generate images with a distinct "Arcane style" when prompted with the token

Model inputs and outputs

arcane-diffusion takes a text prompt as input and generates one or more images as output. The model can generate images up to 1024x768 or 768x1024 pixels in size. Users can also specify a seed value, scheduler, number of outputs, guidance scale, negative prompt, and number of inference steps.

Inputs

- Prompt: The text prompt describing the desired image

- Seed: A random seed value (leave blank to randomize)

- Width: The width of the output image (max 1024; the overall size is capped at 1024x768 or 768x1024)

- Height: The height of the output image (max 1024, subject to the same overall size cap)

- Scheduler: The denoising scheduler to use

- Num Outputs: The number of images to generate (1-4)

- Guidance Scale: The scale for classifier-free guidance

- Negative Prompt: Text describing elements to exclude from the output

- Prompt Strength: The strength of the prompt when using an init image

- Num Inference Steps: The number of denoising steps (1-500)

Outputs

- Image(s): One or more generated images matching the input prompt
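
The inputs listed above can be sketched as a small payload builder. This is a minimal illustration, not the model's actual schema: the snake_case key names (`num_outputs`, `guidance_scale`, and so on) and the default values are assumptions derived from the field labels on this page, and `build_input` is a hypothetical helper. Only the limits (1 to 4 outputs, 1 to 500 steps, sizes up to 1024x768 or 768x1024) come from the description above.

```python
from typing import Optional


def build_input(
    prompt: str,
    width: int = 512,                # assumed default, not stated on this page
    height: int = 512,               # assumed default, not stated on this page
    num_outputs: int = 1,
    num_inference_steps: int = 50,   # assumed default
    guidance_scale: float = 7.5,     # assumed default
    negative_prompt: Optional[str] = None,
    seed: Optional[int] = None,
) -> dict:
    """Check the documented limits and return an input payload (hypothetical keys)."""
    if not prompt:
        raise ValueError("prompt must not be empty")
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    # Documented size cap: 1024x768 or 768x1024.
    if max(width, height) > 1024 or min(width, height) > 768:
        raise ValueError("size must fit within 1024x768 or 768x1024")
    if not 1 <= num_outputs <= 4:
        raise ValueError("num_outputs must be between 1 and 4")
    if not 1 <= num_inference_steps <= 500:
        raise ValueError("num_inference_steps must be between 1 and 500")

    payload = {
        "prompt": prompt,
        "width": width,
        "height": height,
        "num_outputs": num_outputs,
        "num_inference_steps": num_inference_steps,
        "guidance_scale": guidance_scale,
    }
    # Optional fields are left out so the service can apply its own defaults.
    if negative_prompt:
        payload["negative_prompt"] = negative_prompt
    if seed is not None:
        payload["seed"] = seed
    return payload


payload = build_input(
    "a portrait of an inventor in a cluttered workshop",
    width=768,
    height=1024,
    num_outputs=2,
    seed=7,
)
print(payload)
```

A payload like this could then be passed as the `input` argument of the Replicate Python client's `replicate.run(...)`, using the model reference and version identifier shown on the model's Replicate page. Check the API spec there for the real field names before relying on the ones assumed here.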

Capabilities

arcane-diffusion generates images in a distinct "Arcane style" modeled on the visual aesthetics of the TV show. It can render detailed, stylized characters, environments, and objects from the Arcane universe.

What can I use it for?

You can use arcane-diffusion to create custom artwork, concept designs, and promotional materials inspired by the Arcane series. The model's specialized training allows it to capture the unique visual style of the show, making it a valuable tool for Arcane fans, artists, and content creators. You could potentially monetize the model's capabilities by offering custom image generation services or creating and selling Arcane-themed digital art and assets.

Things to try

Experiment with different prompts to see the range of images the arcane-diffusion model can produce. Try including details about characters, locations, or objects from the Arcane universe, and see how the model interprets and renders them. You can also play with the various input parameters like seed, guidance scale, and number of inference steps to fine-tune the output and achieve different visual effects.
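
One way to explore these parameters systematically is to hold the seed fixed and vary only guidance scale and step count, so differences between outputs come from the settings rather than the random starting noise. The sketch below only builds the list of input dictionaries. `sweep_inputs` is a hypothetical helper, and the key names are assumptions based on the field labels on this page.

```python
from itertools import product


def sweep_inputs(prompt, seed, guidance_scales, step_counts):
    """Return one input dict per (guidance scale, step count) pair, all sharing one seed."""
    return [
        {
            "prompt": prompt,
            "seed": seed,  # fixed so runs differ only in the swept settings
            "guidance_scale": scale,
            "num_inference_steps": steps,
        }
        for scale, steps in product(guidance_scales, step_counts)
    ]


runs = sweep_inputs(
    "a clockwork owl perched on a rooftop",
    seed=1234,
    guidance_scales=[5.0, 7.5, 10.0],
    step_counts=[20, 50],
)
for run in runs:
    print(run)
```

Each dictionary can be submitted as a separate prediction, and the resulting images compared side by side.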

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

material-diffusion


material-diffusion is a fork of the popular Stable Diffusion AI model, created by Replicate user tstramer. This model is designed for generating tileable outputs, building on the capabilities of the v1.5 Stable Diffusion model. It shares similarities with other Stable Diffusion forks like material-diffusion-sdxl and stable-diffusion-v2, as well as more experimental models like multidiffusion and stable-diffusion.

Model inputs and outputs

material-diffusion takes a variety of inputs, including a text prompt, a mask image, an initial image, and various settings to control the output. The model then generates one or more images based on the provided inputs.

Inputs

- Prompt: The text prompt that describes the desired image.

- Mask: A black and white image used to mask the initial image, with black pixels inpainted and white pixels preserved.

- Init Image: An initial image to generate variations of, which will be resized to the specified dimensions.

- Seed: A random seed value to control the output image.

- Scheduler: The diffusion scheduler algorithm to use, such as K-LMS.

- Guidance Scale: A scale factor for classifier-free guidance, which controls how closely the output follows the text prompt.

- Prompt Strength: The strength of the input prompt when using an initial image, with 1.0 corresponding to full destruction of the initial image information.

- Num Inference Steps: The number of denoising steps to perform during the image generation process.

Outputs

- Output Images: One or more images generated by the model, based on the provided inputs.

Capabilities

material-diffusion is capable of generating high-quality, photorealistic images from text prompts, similar to the base Stable Diffusion model. However, the key differentiator is its ability to generate tileable outputs, which can be useful for creating seamless patterns, textures, or backgrounds.

What can I use it for?

material-diffusion can be useful for a variety of applications, such as:

- Generating unique and customizable patterns, textures, or backgrounds for design projects, websites, or products.

- Creating tiled artwork or wallpapers for personal or commercial use.

- Exploring creative text-to-image generation with a focus on tileable outputs.

Things to try

With material-diffusion, you can experiment with different prompts, masks, and initial images to create a wide range of tileable outputs. Try using the model to generate seamless patterns or textures, or to create variations on a theme by modifying the prompt or other input parameters.


qrcode-stable-diffusion


The qrcode-stable-diffusion model, created by nateraw, is a unique AI model that combines the power of Stable Diffusion with the functionality of QR code generation. This model allows users to create stylish, AI-generated QR codes that can be used for a variety of purposes, such as linking to websites, sharing digital content, or even adding a touch of artistic flair to physical products. The qrcode-stable-diffusion model builds upon the capabilities of the popular Stable Diffusion model, which is a latent text-to-image diffusion model capable of generating photo-realistic images from text prompts. By integrating this technology with QR code generation, the qrcode-stable-diffusion model offers a novel way to create visually appealing and functional QR codes that can enhance various applications.

Model inputs and outputs

The qrcode-stable-diffusion model takes several inputs to generate the QR code images, including a prompt to guide the generation, the content to be encoded in the QR code, the seed value, and various settings to control the diffusion process and output quality.

Inputs

- Prompt: The text prompt that guides the Stable Diffusion model in generating the QR code image.

- Qr Code Content: The website or content that the generated QR code will point to.

- Seed: A numerical value used to initialize the random number generator, allowing for reproducible results.

- Strength: A value between 0 and 1 that indicates how much the generated image should be transformed based on the prompt.

- Batch Size: The number of QR code images to generate at once, up to a maximum of 4.

- Guidance Scale: A scale factor that controls the influence of the text prompt on the generated image.

- Negative Prompt: A text prompt that helps the model avoid generating undesirable elements in the QR code image.

- Num Inference Steps: The number of diffusion steps to use during the image generation process, ranging from 20 to 100.

- Controlnet Conditioning Scale: A factor that adjusts the influence of the Controlnet on the final output.

Outputs

The qrcode-stable-diffusion model generates one or more (up to 4) QR code images based on the input parameters, which are returned as a list of image URLs.

Capabilities

The qrcode-stable-diffusion model excels at creating visually striking QR codes that can be tailored to a user's specific needs. By leveraging the power of Stable Diffusion, the model can generate QR codes with a wide range of artistic styles, from minimalist and geometric designs to more intricate, abstract patterns.

What can I use it for?

The qrcode-stable-diffusion model opens up a world of creative possibilities for QR code usage. Some potential applications include:

- Generating unique and eye-catching QR codes for product packaging, marketing materials, or business cards.

- Creating personalized QR codes for event invitations, digital artwork, or social media profiles.

- Experimenting with different artistic styles and designs to make QR codes more visually engaging and memorable.

- Incorporating AI-generated QR codes into various design projects, such as website layouts, mobile apps, or physical installations.

Things to try

With the qrcode-stable-diffusion model, users can explore the intersection of AI-generated art and practical QR code functionality. Some ideas to experiment with include:

- Trying different prompts to see how they influence the style and appearance of the generated QR codes.

- Exploring the effects of adjusting the various input parameters, such as the guidance scale, number of inference steps, or controlnet conditioning scale.

- Generating a series of QR codes with the same content but different artistic styles to see how the visual presentation can impact the user experience.

- Combining the qrcode-stable-diffusion model with other AI-powered tools or creative workflows to enhance the overall design and functionality of QR code-based applications.


dreamlike-diffusion


The dreamlike-diffusion model is a diffusion model developed by replicategithubwc that generates surreal and dreamlike artwork. It is part of a suite of "Dreamlike" models created by the same maintainer, including Dreamlike Photoreal and Dreamlike Anime. The dreamlike-diffusion model is trained to produce imaginative and visually striking images from text prompts, with a unique artistic style.

Model inputs and outputs

The dreamlike-diffusion model takes a text prompt as the primary input, along with optional parameters like image dimensions, number of outputs, and the guidance scale. The model then generates one or more images based on the provided prompt.

Inputs

- Prompt: The text that describes the desired image

- Width: The width of the output image

- Height: The height of the output image

- Num Outputs: The number of images to generate

- Guidance Scale: The scale for classifier-free guidance, which controls the balance between the text prompt and the model's own creative generation

- Negative Prompt: Text describing things you don't want to see in the output

- Scheduler: The algorithm used for diffusion sampling

- Seed: A random seed value to control the image generation

Outputs

- Output Images: An array of generated image URLs

Capabilities

The dreamlike-diffusion model excels at producing surreal, imaginative artwork with a unique visual style. It can generate images depicting fantastical scenes, abstract concepts, and imaginative interpretations of real-world objects and environments. The model's outputs often have a sense of visual poetry and dreamlike abstraction, making it well-suited for creative applications like art, illustration, and visual storytelling.

What can I use it for?

The dreamlike-diffusion model could be useful for a variety of creative projects, such as:

- Generating concept art or illustrations for stories, games, or other creative works

- Producing unique and eye-catching visuals for marketing, advertising, or branding

- Exploring surreal and imaginative themes in art and design

- Inspiring new ideas and creative directions through the model's dreamlike outputs

Things to try

One interesting aspect of the dreamlike-diffusion model is its ability to blend multiple concepts and styles in a single image. Try experimenting with prompts that combine seemingly disparate elements, such as "a mechanical dragon flying over a neon-lit city" or "a portrait of a robot mermaid in a thunderstorm." The model's unique artistic interpretation can lead to unexpected and visually captivating results.


dream


dream is a text-to-image generation model created by Replicate user xarty8932. It is similar to other popular text-to-image models like SDXL-Lightning, k-diffusion, and Stable Diffusion, which can generate photorealistic images from textual descriptions. However, the specific capabilities and inner workings of dream are not clearly documented.

Model inputs and outputs

dream takes in a variety of inputs to generate images, including a textual prompt, image dimensions, a seed value, and optional modifiers like guidance scale and refine steps. The model outputs one or more generated images in the form of image URLs.

Inputs

- Prompt: The text description that the model will use to generate the image

- Width/Height: The desired dimensions of the output image

- Seed: A random seed value to control the image generation process

- Refine: The style of refinement to apply to the image

- Scheduler: The scheduler algorithm to use during image generation

- Lora Scale: The additive scale for LoRA (Low-Rank Adaptation) weights

- Num Outputs: The number of images to generate

- Refine Steps: The number of steps to use for refine-based image generation

- Guidance Scale: The scale for classifier-free guidance

- Apply Watermark: Whether to apply a watermark to the generated images

- High Noise Frac: The fraction of noise to use for the expert_ensemble_refiner

- Negative Prompt: A text description for content to avoid in the generated image

- Prompt Strength: The strength of the input prompt when using img2img or inpaint modes

- Replicate Weights: LoRA weights to use for the image generation

Outputs

- One or more generated image URLs

Capabilities

dream is a text-to-image generation model, meaning it can create images based on textual descriptions. It appears to have similar capabilities to other popular models like Stable Diffusion, being able to generate a wide variety of photorealistic images from diverse prompts. However, the specific quality and fidelity of the generated images is not clear from the available information.

What can I use it for?

dream could be used for a variety of creative and artistic applications, such as generating concept art, illustrations, or product visualizations. The ability to create images from text descriptions opens up possibilities for automating image creation, enhancing creative workflows, or even generating custom visuals for things like video games, films, or marketing materials. However, the limitations and potential biases of the model should be carefully considered before deploying it in a production setting.

Things to try

Some ideas for experimenting with dream include:

- Trying out a wide range of prompts to see the diversity of images the model can generate

- Exploring the impact of different hyperparameters like guidance scale, refine steps, and lora scale on the output quality

- Comparing the results of dream to other text-to-image models like Stable Diffusion or SDXL-Lightning to understand its unique capabilities

- Incorporating dream into a creative workflow or production pipeline to assess its practical usefulness and limitations
