cantable-diffuguesion

Maintainer: andreasjansson

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | No paper link provided |

Model overview

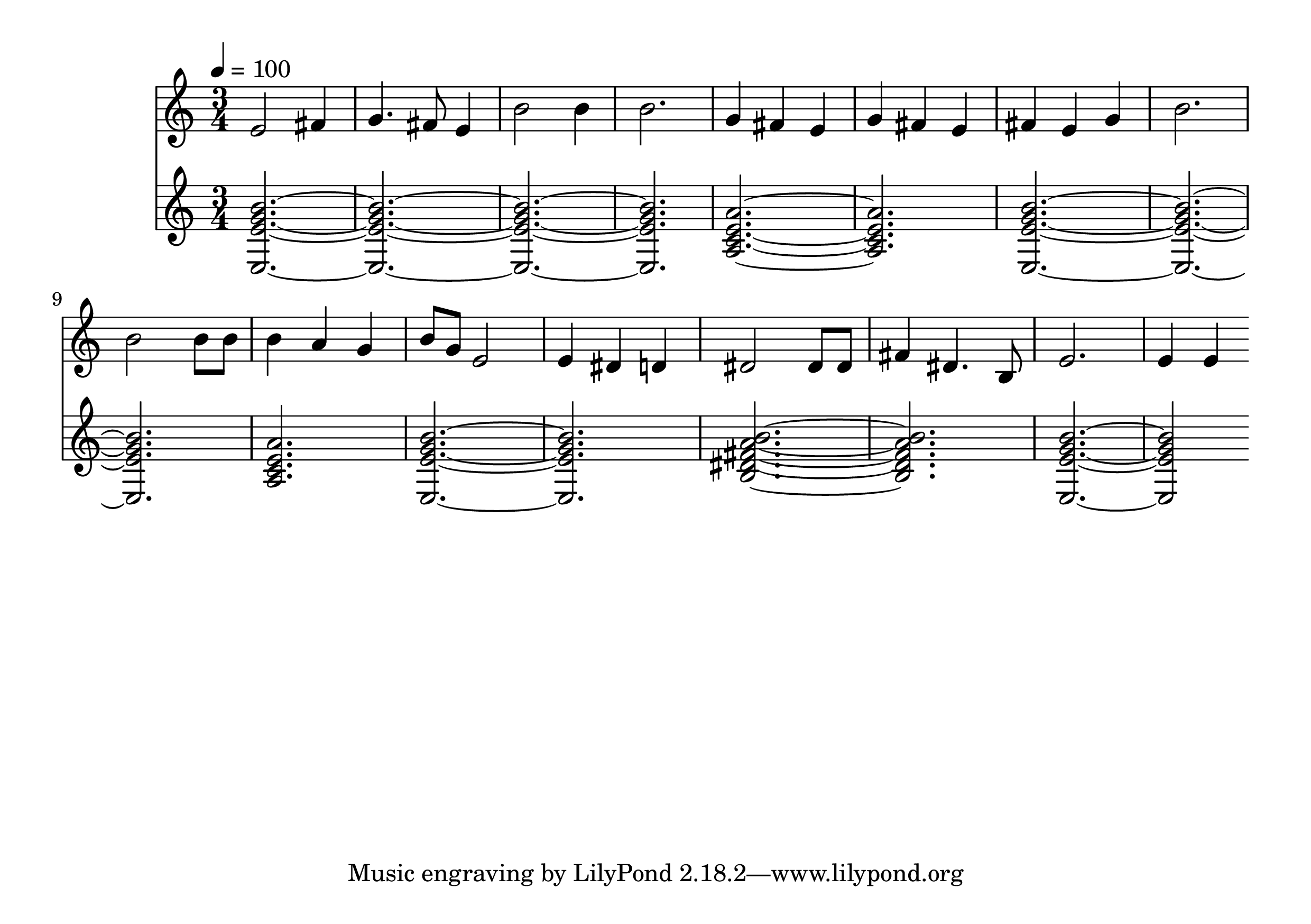

Cantable Diffuguesion is an AI model developed by andreasjansson that can generate and harmonize Bach chorales. It is a diffusion model trained on a dataset of four-part Bach chorales, and it is comparable to music models such as Riffusion and DeepBach. The model can generate new Bach-style chorales unconditionally, or harmonize melodies or parts of melodies provided as input.

Model inputs and outputs

The Cantable Diffuguesion model takes in a few key inputs to control the generation process:

Inputs

- Seed: A random seed value to control the randomness of the generated output. Setting this to a specific value allows for reproducible results.

- Tempo: The tempo of the generated chorale in quarter notes per minute.

- Melody: A melody in "tinyNotation" format, which allows specifying sections to be inpainted by the model.

- Duration: The total duration of the generated chorale in quarter notes.

- Return mp3 and Return midi: Flags that control whether the model returns the generated chorale as an MP3 audio file or a MIDI file.

Outputs

- Mp3: If requested, the generated chorale as an MP3 audio file.

- Midi: If requested, the generated chorale as a MIDI file.
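
The inputs and outputs listed above map naturally onto a request payload. The following is a minimal sketch, assuming snake_case field names (`seed`, `tempo`, `melody`, `duration`, `return_mp3`, `return_midi`) that mirror the labels above; the real field names and defaults are defined by the model's API spec on Replicate and may differ. The helpers `build_input` and `duration_seconds` are hypothetical names used only for illustration.

```python
from typing import Any, Dict, Optional


def duration_seconds(duration_quarters: int, tempo_qpm: int) -> float:
    """Convert a length in quarter notes to seconds at a tempo in quarter notes per minute."""
    if tempo_qpm <= 0:
        raise ValueError("tempo must be positive")
    return duration_quarters * 60.0 / tempo_qpm


def build_input(
    duration: int,
    tempo: int,
    melody: Optional[str] = None,
    seed: Optional[int] = None,
    return_mp3: bool = True,
    return_midi: bool = False,
) -> Dict[str, Any]:
    """Assemble an input payload. Key names are assumed, not taken from the API spec."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    if not (return_mp3 or return_midi):
        raise ValueError("request at least one output format")
    payload: Dict[str, Any] = {
        "duration": duration,
        "tempo": tempo,
        "return_mp3": return_mp3,
        "return_midi": return_midi,
    }
    # Optional fields are left out so the model can fall back to its own defaults.
    if melody is not None:
        payload["melody"] = melody
    if seed is not None:
        payload["seed"] = seed
    return payload


# A 64-quarter-note chorale at 96 quarter notes per minute, with a fixed seed.
payload = build_input(duration=64, tempo=96, seed=42)
print(payload)
print(duration_seconds(64, 96), "seconds of music")
```

Because both Duration and Tempo are expressed in quarter notes, the length of the result in seconds follows directly from dividing one by the other and multiplying by 60.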

Capabilities

The Cantable Diffuguesion model generates Bach-style four-part chorales, either unconditionally or by harmonizing user-provided melodies. It applies diffusion techniques similar to those used in Stable Diffusion to the domain of music generation and harmonization.

What can I use it for?

The Cantable Diffuguesion model could be used for a variety of applications, such as:

- Generating new Bach-style chorales for use in musical compositions, performances, or educational materials.

- Harmonizing melodies or parts of melodies to create full four-part chorale arrangements.

- Experimenting with different musical styles and techniques by adjusting the model inputs like tempo or melody.

- Integrating the model into larger music generation or composition systems.

Things to try

Some interesting things to try with the Cantable Diffuguesion model include:

- Experimenting with different melodic inputs, including both familiar and novel melodies, to see how the model harmonizes them.

- Trying the model's inpainting capabilities by providing partially completed melodies and letting the model fill in the missing sections.

- Investigating the model's ability to capture the stylistic nuances of Bach's chorale writing by generating multiple samples and analyzing their musical characteristics.

- Exploring ways to combine the Cantable Diffuguesion model with other music-related AI models, such as those for music transcription, analysis, or generation, to create more sophisticated musical applications.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

music-inpainting-bert

The music-inpainting-bert model is a custom BERT model developed by Andreas Jansson that can jointly inpaint both melody and chords in a piece of music. This model is similar to other models created by Andreas Jansson, such as cantable-diffuguesion for Bach chorale generation and harmonization, stable-diffusion-wip for inpainting in Stable Diffusion, and clip-features for extracting CLIP features.

Model inputs and outputs

The music-inpainting-bert model takes as input beat-quantized chord labels and beat-quantized melodic patterns, and can output a completion of the melody and chords. The inputs are represented using a look-up table, where melodies are split into beat-sized chunks and quantized to 16th notes.

Inputs

- Notes: Notes in tinyNotation, with each bar separated by '|'. Use '?' for bars you want in-painted.

- Chords: Chords (one chord per bar), with each bar separated by '|'. Use '?' for bars you want in-painted.

- Tempo: Tempo in beats per minute.

- Time Signature: The time signature.

- Sample Width: The number of potential predictions to sample from. The higher the value, the more chaotic the output.

- Seed: The random seed, with -1 for a random seed.

Outputs

- Mp3: The generated music as an MP3 file.

- Midi: The generated music as a MIDI file.

- Score: The generated music as a score.

Capabilities

The music-inpainting-bert model can be used to jointly inpaint both melody and chords in a piece of music. This can be useful for tasks like music composition, where the model can be used to generate new musical content or complete partial compositions.

What can I use it for?

The music-inpainting-bert model can be used for a variety of music-related projects, such as:

- Generating new musical compositions by providing partial input and letting the model fill in the gaps.

- Completing or extending existing musical pieces by providing a starting point and letting the model generate the rest.

- Experimenting with different musical styles and genres by providing prompts and exploring the model's outputs.

Things to try

One interesting thing to try with the music-inpainting-bert model is to provide partial input with a mix of known and unknown elements, and see how the model fills in the gaps. This can be a great way to spark new musical ideas or explore different compositional possibilities.
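
The Notes and Chords inputs of music-inpainting-bert described above are bar-separated strings in which '?' marks a bar to be in-painted. A minimal sketch of building such strings follows. The helper `join_bars` is a hypothetical name, the spaces around '|' are an assumption about what the model accepts, and the chord labels (`C`, `F`) are illustrative only.

```python
from typing import Optional, Sequence


def join_bars(bars: Sequence[Optional[str]]) -> str:
    """Join bars with '|', writing '?' for any bar that should be in-painted (None)."""
    return " | ".join("?" if bar is None else bar.strip() for bar in bars)


# Two known bars of melody in tinyNotation, then two bars left for the model.
notes = join_bars(["c4 e g e", "f a g2", None, None])
# One chord per bar; the last two bars are also left open.
chords = join_bars(["C", "F", None, None])

print(notes)   # c4 e g e | f a g2 | ? | ?
print(chords)  # C | F | ? | ?
```

Keeping the melody and chord lists the same length makes it easy to check that every bar has exactly one chord before the strings are submitted.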

musicgen-looper

The musicgen-looper is a Cog implementation of the MusicGen model, a simple and controllable model for music generation developed by Facebook Research. Unlike existing music generation models like MusicLM, MusicGen does not require a self-supervised semantic representation and generates all four audio codebooks in a single pass. By introducing a small delay between the codebooks, MusicGen can predict them in parallel, reducing the number of auto-regressive steps per second of audio. The model was trained on 20,000 hours of licensed music data, including an internal dataset of 10,000 high-quality tracks as well as music from ShutterStock and Pond5.

The musicgen-looper model is similar to other music generation models like music-inpainting-bert, cantable-diffuguesion, and looptest in its ability to generate music from prompts. However, the key differentiator of musicgen-looper is its focus on generating fixed-BPM loops from text prompts.

Model inputs and outputs

The musicgen-looper model takes in a text prompt describing the desired music, as well as various parameters to control the generation process, such as tempo, seed, and sampling parameters. It outputs a WAV file containing the generated audio loop.

Inputs

- Prompt: A description of the music you want to generate.

- BPM: Tempo of the generated loop in beats per minute.

- Seed: Seed for the random number generator. If not provided, a random seed will be used.

- Top K: Reduces sampling to the k most likely tokens.

- Top P: Reduces sampling to tokens with cumulative probability of p. When set to 0 (default), top_k sampling is used.

- Temperature: Controls the "conservativeness" of the sampling process. Higher temperature means more diversity.

- Classifier Free Guidance: Increases the influence of inputs on the output. Higher values produce lower-variance outputs that adhere more closely to the inputs.

- Max Duration: Maximum duration of the generated loop in seconds.

- Variations: Number of variations to generate.

- Model Version: Selects the model to use for generation.

- Output Format: Specifies the output format for the generated audio (currently only WAV is supported).

Outputs

- WAV file: The generated audio loop.

Capabilities

The musicgen-looper model can generate a wide variety of musical styles and textures from text prompts, including tense, dissonant strings, plucked strings, and more. By controlling parameters like tempo, sampling, and classifier free guidance, users can fine-tune the generated output to match their desired style and mood.

What can I use it for?

The musicgen-looper model could be useful for a variety of applications, such as:

- Soundtrack generation: Generating background music or sound effects for videos, games, or other multimedia projects.

- Music composition: Providing a starting point or inspiration for composers and musicians to build upon.

- Audio manipulation: Experimenting with different prompts and parameters to create unique and interesting musical textures.

The model's ability to generate fixed-BPM loops makes it particularly well-suited for applications where a seamless, loopable audio track is required.

Things to try

One interesting aspect of the musicgen-looper model is its ability to generate variations on a given prompt. By adjusting the "Variations" parameter, users can explore how the model interprets and reinterprets a prompt in different ways. This could be a useful tool for composers and musicians looking to generate a diverse set of ideas or explore the model's creative boundaries.

Another interesting feature is the model's use of classifier free guidance, which helps the generated output adhere more closely to the input prompt. By experimenting with different levels of classifier free guidance, users can find the right balance between adhering to the prompt and introducing their own creative flair.
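
The Top K, Top P, and Temperature inputs of musicgen-looper described above are standard sampling controls. The sketch below shows how such controls typically interact on a toy distribution. It is a generic illustration and not the MusicGen implementation: the function names `filter_probs` and `sample` and the token names and logit values are made up for the example. It follows the rule stated above that top-k is used when Top P is 0.

```python
import math
import random
from typing import Dict


def filter_probs(
    logits: Dict[str, float],
    temperature: float = 1.0,
    top_k: int = 0,
    top_p: float = 0.0,
) -> Dict[str, float]:
    """Return a renormalized distribution after temperature and top-p or top-k filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature-scaled softmax (subtract the max for numerical stability).
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(
        ((tok, e / total) for tok, e in exps.items()),
        key=lambda item: item[1],
        reverse=True,
    )

    if top_p > 0.0:
        # Nucleus (top-p): keep the smallest prefix whose cumulative mass reaches top_p.
        kept, mass = [], 0.0
        for tok, prob in ranked:
            kept.append((tok, prob))
            mass += prob
            if mass >= top_p:
                break
    elif top_k > 0:
        # Top-k: keep only the k most likely tokens.
        kept = ranked[:top_k]
    else:
        kept = ranked

    norm = sum(prob for _, prob in kept)
    return {tok: prob / norm for tok, prob in kept}


def sample(probs: Dict[str, float], rng: random.Random) -> str:
    """Draw one token from the filtered distribution."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


toy_logits = {"a": 2.0, "b": 1.0, "c": 0.0, "d": -1.0}
print(filter_probs(toy_logits, top_k=2))
print(filter_probs(toy_logits, top_p=0.8))
print(sample(filter_probs(toy_logits, temperature=1.5, top_k=3), random.Random(0)))
```

Raising the temperature flattens the distribution before filtering, which is why higher values lead to more diverse output.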

clip-features

The clip-features model, developed by Replicate creator andreasjansson, is a Cog model that outputs CLIP features for text and images. This model builds on the powerful CLIP architecture, which was developed by researchers at OpenAI to learn about robustness in computer vision tasks and test the ability of models to generalize to arbitrary image classification in a zero-shot manner. Similar models like blip-2 and clip-embeddings also leverage CLIP capabilities for tasks like answering questions about images and generating text and image embeddings.

Model inputs and outputs

The clip-features model takes a set of newline-separated inputs, which can either be strings of text or image URIs starting with http[s]://. The model then outputs an array of named embeddings, where each embedding corresponds to one of the input entries.

Inputs

- Inputs: Newline-separated inputs, which can be strings of text or image URIs starting with http[s]://.

Outputs

- Output: An array of named embeddings, where each embedding corresponds to one of the input entries.

Capabilities

The clip-features model can be used to generate CLIP features for text and images, which can be useful for a variety of downstream tasks like image classification, retrieval, and visual question answering. By leveraging the powerful CLIP architecture, this model can enable researchers and developers to explore zero-shot and few-shot learning approaches for their computer vision applications.

What can I use it for?

The clip-features model can be used in a variety of applications that involve understanding the relationship between images and text. For example, you could use it to:

- Perform image-text similarity search, where you can find the most relevant images for a given text query, or vice versa.

- Implement zero-shot image classification, where you can classify images into categories without any labeled training data.

- Develop multimodal applications that combine vision and language, such as visual question answering or image captioning.

Things to try

One interesting aspect of the clip-features model is its ability to generate embeddings that capture the semantic relationship between text and images. You could try using these embeddings to explore the similarities and differences between various text and image pairs, or to build applications that leverage this cross-modal understanding. For example, you could calculate the cosine similarity between the embeddings of different text inputs and the embedding of a given image, as demonstrated in the model's example code. This could be useful for tasks like image-text retrieval or for understanding the model's perception of the relationship between visual and textual concepts.
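
The cosine-similarity comparison mentioned above can be sketched with the standard library alone. This is a minimal illustration, not the model's own example code: `build_inputs` and `cosine_similarity` are hypothetical helper names, the URL is a placeholder, and the three-dimensional vectors are made-up stand-ins for real CLIP embeddings, which are much longer.

```python
import math
from typing import Sequence


def build_inputs(entries: Sequence[str]) -> str:
    """Join text strings and image URIs into one newline-separated input string."""
    return "\n".join(entry.strip() for entry in entries)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


# One image URI and two candidate captions, as a single newline-separated input.
model_input = build_inputs([
    "https://example.com/cat.jpg",  # placeholder URL
    "a photo of a cat",
    "a photo of a dog",
])
print(model_input)

# Toy embeddings standing in for the model's output for the three entries above.
image_vec = [0.9, 0.1, 0.2]
caption_vecs = {
    "a photo of a cat": [0.8, 0.2, 0.1],
    "a photo of a dog": [0.1, 0.9, 0.3],
}
scores = {text: cosine_similarity(image_vec, vec) for text, vec in caption_vecs.items()}
best = max(scores, key=scores.get)
print(best)
```

Ranking captions by this score against a single image embedding is the basic building block of zero-shot classification and image-text retrieval.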

riffusion

riffusion is a library for real-time music and audio generation using the Stable Diffusion text-to-image diffusion model. It was developed by Seth Forsgren and Hayk Martiros as a hobby project. riffusion fine-tunes Stable Diffusion to generate spectrogram images that can be converted into audio clips, allowing for the creation of music based on text prompts. This is in contrast to other similar models like inkpunk-diffusion and multidiffusion which focus on visual art generation.

Model inputs and outputs

riffusion takes in a text prompt, an optional second prompt for interpolation, a seed image ID, and parameters controlling the diffusion process. It outputs a spectrogram image and the corresponding audio clip.

Inputs

- Prompt A: The primary text prompt describing the desired audio.

- Prompt B: An optional second prompt to interpolate with the first.

- Alpha: The interpolation value between the two prompts, from 0 to 1.

- Denoising: How much to transform the input spectrogram, from 0 to 1.

- Seed Image ID: The ID of a seed spectrogram image to use.

- Num Inference Steps: The number of steps to run the diffusion model.

Outputs

- Spectrogram Image: A spectrogram visualization of the generated audio.

- Audio Clip: The generated audio clip in MP3 format.

Capabilities

riffusion can generate a wide variety of musical styles and genres based on the provided text prompts. For example, it can create "funky synth solos", "jazz with piano", or "church bells on Sunday". The model is able to capture complex musical concepts and translate them into coherent audio clips.

What can I use it for?

The riffusion model is intended for research and creative applications. It could be used to generate audio for educational or creative tools, or as part of artistic projects exploring the intersection of language and music. Additionally, researchers studying generative models and the connection between text and audio may find riffusion useful for their work.

Things to try

One interesting aspect of riffusion is its ability to interpolate between two text prompts. By adjusting the alpha parameter, you can create a smooth transition from one style of music to another, allowing for the generation of unique and unexpected audio clips. Another interesting area to explore is the model's handling of seed images: by providing different starting spectrograms, you can influence the character and direction of the generated music.
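
One way to picture the Alpha input described above is as a blend weight between two prompt representations. The sketch below uses plain linear interpolation on toy vectors. This is an illustrative assumption only: the source does not say how riffusion combines the two prompts internally, and the helper names `lerp` and `alpha_steps` and the vectors are invented for the example.

```python
from typing import List, Sequence


def lerp(a: Sequence[float], b: Sequence[float], alpha: float) -> List[float]:
    """Linear interpolation: alpha=0 returns a, alpha=1 returns b."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [(1.0 - alpha) * x + alpha * y for x, y in zip(a, b)]


def alpha_steps(count: int) -> List[float]:
    """Evenly spaced alpha values from 0 to 1 for a gradual transition."""
    if count < 2:
        raise ValueError("need at least two steps")
    return [i / (count - 1) for i in range(count)]


# Toy stand-ins for the representations of Prompt A and Prompt B.
prompt_a = [0.0, 2.0]
prompt_b = [4.0, 6.0]

for alpha in alpha_steps(5):
    print(alpha, lerp(prompt_a, prompt_b, alpha))
```

Stepping alpha from 0 to 1 across successive clips, as `alpha_steps` does, is what produces a gradual transition from the first prompt's style to the second's.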
