docentr

Maintainer: cjwbw


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on arXiv |


Model overview

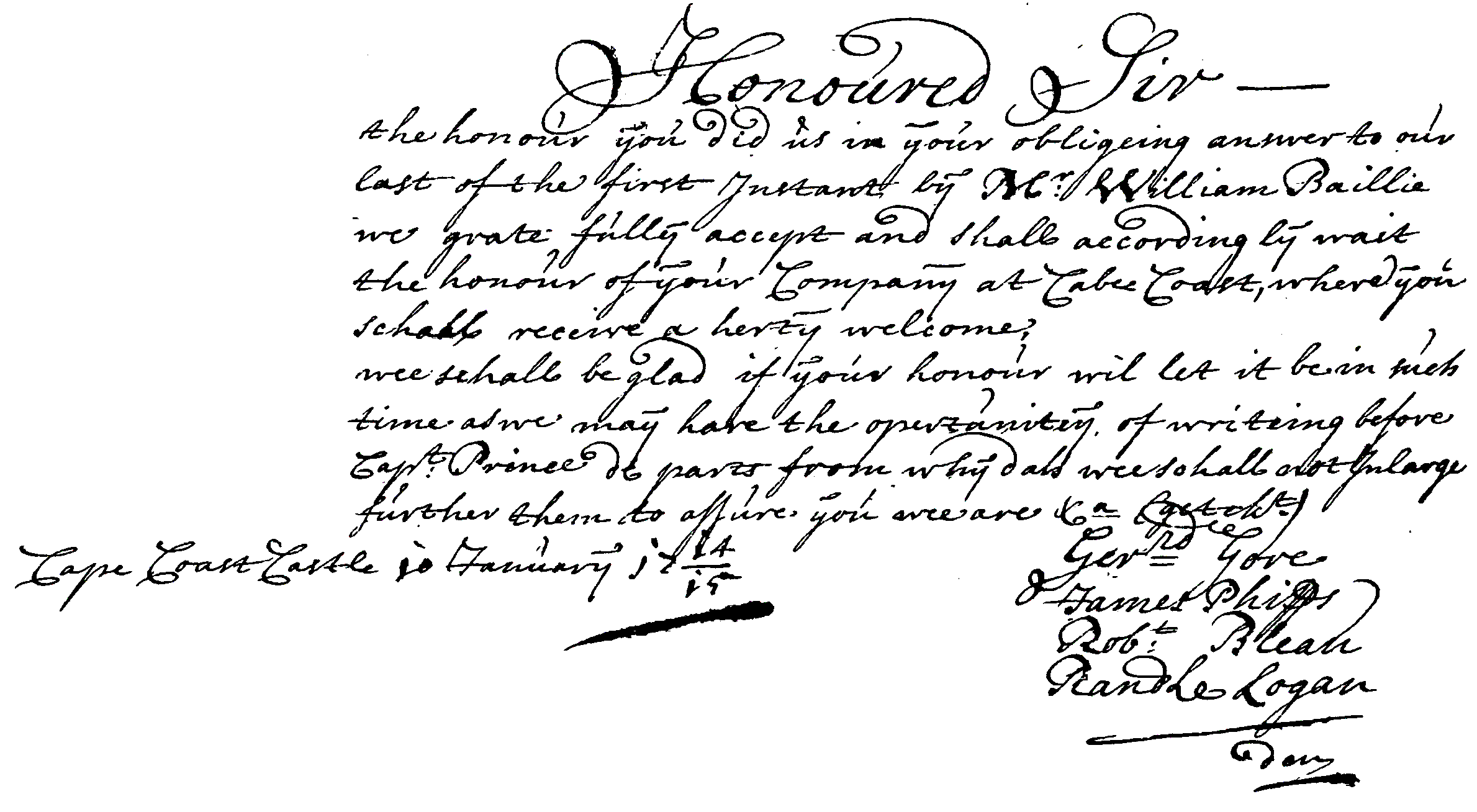

The docentr model is an end-to-end document image enhancement transformer maintained on Replicate by cjwbw. It is a PyTorch implementation of the paper "DocEnTr: An End-to-End Document Image Enhancement Transformer" and is built on top of the vit-pytorch vision transformer library. The model enhances and binarizes degraded document images, as the provided examples show.

Model inputs and outputs

The docentr model takes an image as input and produces an enhanced, binarized output image. The input can be a degraded or low-quality document image, which the model improves through binarization, noise removal, and contrast enhancement.

Inputs

- image: The input image, which should be in a valid image format (e.g., PNG, JPEG).

Outputs

- Output: The enhanced, binarized output image.
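The input and output above map onto a single hosted-model call. The following is a minimal sketch, assuming the third-party `replicate` Python client and an API token in the `REPLICATE_API_TOKEN` environment variable. The version part of `MODEL_REF` is a placeholder that must be copied from the model's Replicate page, and `check_image_path` and `enhance` are hypothetical helper names. The suffix check only mirrors the two example formats listed above; the model may accept others.

```python
from pathlib import Path

# Placeholder: copy the real "owner/name:version" string from the model's Replicate page.
MODEL_REF = "cjwbw/docentr:<version-id>"

# Assumption: only the two formats given as examples in the input description.
ALLOWED_SUFFIXES = {".png", ".jpg", ".jpeg"}


def check_image_path(path):
    """Return the path as a Path if its extension looks like PNG or JPEG."""
    p = Path(path)
    if p.suffix.lower() not in ALLOWED_SUFFIXES:
        raise ValueError(f"unsupported image format: {p.suffix or '(none)'}")
    return p


def enhance(path, model_ref=MODEL_REF):
    """Send one image to the hosted model and return whatever the client returns."""
    import replicate  # third-party: pip install replicate

    image_path = check_image_path(path)
    with open(image_path, "rb") as fh:
        # "image" is the single input named in the list above.
        return replicate.run(model_ref, input={"image": fh})
```

Calling `enhance("scan.png")` would return the client's representation of the enhanced image; how that value is exposed (URL or file-like object) depends on the client version, so check it before saving.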

Capabilities

The docentr model performs end-to-end document image enhancement, covering binarization, noise removal, and contrast improvement. This makes degraded or low-quality document images more readable and easier to process. The model has shown promising results on benchmark datasets such as DIBCO, H-DIBCO, and PALM.
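To put a number on results like these for your own images, one common choice is peak signal-to-noise ratio (PSNR) between the model output and a ground-truth binarization. PSNR is among the measures usually reported on DIBCO-style benchmarks, but the text above does not say which metrics were used for docentr, so treat this as an illustrative sketch. Images are assumed to be equal-sized nested lists of grayscale values in the range 0 to 255.

```python
import math


def psnr(pred, target, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-sized grayscale images."""
    if len(pred) != len(target) or any(len(a) != len(b) for a, b in zip(pred, target)):
        raise ValueError("images must have the same shape")
    n = sum(len(row) for row in pred)
    if n == 0:
        raise ValueError("images must not be empty")
    # Mean squared error over all pixels.
    sq_err = sum((a - b) ** 2 for rp, rt in zip(pred, target) for a, b in zip(rp, rt))
    mse = sq_err / n
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 example: one of four pixels is wrong.
truth = [[0, 255], [255, 0]]
output = [[0, 255], [255, 255]]
print(round(psnr(output, truth), 2))  # 6.02
```

Higher values mean the output is closer to the ground truth; identical images give infinity.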

What can I use it for?

The docentr model is useful for applications that process and analyze document images, such as optical character recognition (OCR), document archiving, and image-based document retrieval. Enhancing the input images can improve the accuracy and reliability of these downstream tasks. The model also fits projects in document digitization, historical document restoration, and automated document processing workflows.

Things to try

You can experiment with the docentr model by running it on your own degraded document images and inspecting the binarization and enhancement results. A pre-trained version is hosted on Replicate, so you can apply the enhancement without training the model yourself. The provided demo notebook shows how to use the model and customize its configuration.
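When inspecting results on your own images, it can help to have a simple baseline to compare against. The sketch below implements Otsu's method, a classical global-threshold binarization. It is not how docentr works (docentr is a transformer model); it is only a reference point, and the function names are illustrative. Pixels are assumed to be integers in the range 0 to 255.

```python
def otsu_threshold(pixels):
    """Return the 0-255 threshold that maximises between-class variance."""
    hist = [0] * 256
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0  # pixel count and intensity sum at or below t
    for t in range(256):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        if w_bg == 0:
            continue  # no pixels in the dark class yet
        w_fg = total - w_bg
        if w_fg == 0:
            break  # every pixel is already in the dark class
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        between_var = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t


def binarize(rows, threshold):
    """Map pixels above the threshold to white (255) and the rest to black (0)."""
    return [[255 if v > threshold else 0 for v in row] for row in rows]


# Toy image: dark ink values around 10, bright paper values around 200.
image = [[10, 200], [205, 11], [12, 198]]
t = otsu_threshold([v for row in image for v in row])
baseline = binarize(image, t)
```

A global threshold like this tends to struggle with stains, bleed-through, and uneven lighting, which are the kinds of degradation worth trying on docentr.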

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

mindall-e


minDALL-E is a 1.3B text-to-image generation model trained on 14 million image-text pairs for non-commercial purposes. It is named after the minGPT model and is similar to other text-to-image models like DALL-E and ImageBART. The model uses a two-stage approach, with the first stage generating high-quality image samples using a VQGAN [2] model, and the second stage training a 1.3B transformer from scratch on the image-text pairs. The model was created by cjwbw, who has also developed other text-to-image models like anything-v3.0, animagine-xl-3.1, latent-diffusion-text2img, future-diffusion, and hasdx.

Model inputs and outputs

minDALL-E takes in a text prompt and generates corresponding images. The model can generate a variety of images based on the provided prompt, including paintings, photos, and digital art.

Inputs

- Prompt: The text prompt that describes the desired image.
- Seed: An optional integer seed value to control the randomness of the generated images.
- Num Samples: The number of images to generate based on the input prompt.

Outputs

- Images: The generated images that match the input prompt.

Capabilities

minDALL-E can generate high-quality, detailed images across a wide range of topics and styles, including paintings, photos, and digital art. The model is able to handle diverse prompts, from specific scene descriptions to open-ended creative prompts. It can generate images with natural elements, abstract compositions, and even fantastical or surreal content.

What can I use it for?

minDALL-E could be used for a variety of creative applications, such as concept art, illustration, and visual storytelling. The model's ability to generate unique images from text prompts could be useful for designers, artists, and content creators who need to quickly generate visual assets. Additionally, the model's performance on the MS-COCO dataset suggests it could be applied to tasks like image captioning or visual question answering.

Things to try

One interesting aspect of minDALL-E is its ability to handle prompts with multiple options, such as "a painting of a cat with sunglasses in the frame" or "a large pink/black elephant walking on the beach". The model can generate diverse samples that capture the different variations within the prompt. Experimenting with these types of prompts can reveal the model's flexibility and creativity. Additionally, the model's strong performance on the ImageNet dataset when fine-tuned suggests it could be a powerful starting point for transfer learning to other image generation tasks. Trying to fine-tune the model on specialized datasets or custom image styles could unlock additional capabilities.


night-enhancement


The night-enhancement model is an unsupervised method for enhancing night images that integrates a layer decomposition network and a light-effects suppression network. Unlike most existing night visibility enhancement methods that focus mainly on boosting low-light regions, this model aims to suppress the uneven distribution of light effects, such as glare and floodlight, while simultaneously enhancing the intensity of dark regions. The model was developed by Yeying Jin, Wenhan Yang and Robby T. Tan, and was published at the European Conference on Computer Vision (ECCV) in 2022. Similar models developed by the same maintainer, cjwbw, include supir, which focuses on photo-realistic image restoration, docentr, an end-to-end document image enhancement transformer, and analog-diffusion, a Dreambooth model trained on analog photographs.

Model inputs and outputs

The night-enhancement model takes a single night image as input and outputs an enhanced version of the image with suppressed light effects and boosted intensity in dark regions.

Inputs

- Image: The input image, which should be a night scene with uneven lighting.

Outputs

- Enhanced Image: The output image with improved visibility and reduced light effects.

Capabilities

The night-enhancement model is capable of effectively suppressing the light effects in bright regions of night images while boosting the intensity of dark regions. This is achieved through the integration of a layer decomposition network and a light-effects suppression network. The layer decomposition network learns to separate the input image into shading, reflectance, and light-effects layers, while the light-effects suppression network exploits the estimated light-effects layer as guidance to focus on the light-effects regions and suppress them.

What can I use it for?

The night-enhancement model can be useful for a variety of applications that involve improving the visibility and clarity of night images, such as surveillance, autonomous driving, and night photography. By suppressing the uneven distribution of light effects and enhancing the intensity of dark regions, the model can help improve the overall quality and usability of night images.

Things to try

One interesting aspect of the night-enhancement model is its ability to handle a wide range of light effects, including glare, floodlight, and various light colors. Users can experiment with different types of night scenes to see how the model performs in various lighting conditions. Additionally, the model's unsupervised nature allows it to be applied to a diverse set of night images without the need for labeled training data, making it a versatile tool for a wide range of applications.


openpsg


openpsg is a powerful AI model for Panoptic Scene Graph Generation (PSG). Developed by researchers at Nanyang Technological University and SenseTime Research, openpsg aims to provide a comprehensive scene understanding by generating a scene graph representation that is grounded by pixel-accurate segmentation masks. This contrasts with classic Scene Graph Generation (SGG) datasets that use bounding boxes, which can result in coarse localization, inability to ground backgrounds, and trivial relationships. The openpsg model addresses these issues by using the COCO panoptic segmentation dataset to annotate relations based on segmentation masks rather than bounding boxes. It also carefully defines 56 predicates to avoid trivial or duplicated relationships. Similar models like gfpgan for face restoration, segmind-vega for accelerated Stable Diffusion, stable-diffusion for text-to-image generation, cogvlm for powerful visual language modeling, and real-esrgan for blind super-resolution, also tackle complex visual understanding tasks.

Model inputs and outputs

The openpsg model takes an input image and generates a scene graph representation of the content in the image. The scene graph consists of a set of nodes (objects) and edges (relationships) that comprehensively describe the scene.

Inputs

- Image: The input image to be analyzed.
- Num Rel: The desired number of relationships to be generated in the scene graph, ranging from 1 to 20.

Outputs

- Scene Graph: An array of scene graph elements, where each element represents a relationship in the form of a subject, predicate, and object, all grounded by their corresponding segmentation masks in the input image.

Capabilities

openpsg excels at holistically understanding complex scenes by generating a detailed scene graph representation. Unlike classic SGG approaches that focus on objects and their relationships, openpsg considers both "things" (objects) and "stuff" (backgrounds) to provide a more comprehensive interpretation of the scene.

What can I use it for?

The openpsg model can be useful for a variety of applications that require a deep understanding of visual scenes, such as:

- Robotic Vision: Enabling robots to better comprehend their surroundings and interact with objects and environments.
- Autonomous Driving: Improving scene understanding for self-driving cars to navigate more safely and effectively.
- Visual Question Answering: Enhancing the ability to answer questions about the contents and relationships in an image.
- Image Captioning: Generating detailed captions that describe not just the objects, but also the interactions and spatial relationships in a scene.

Things to try

With the openpsg model, you can experiment with various types of images to see how it generates the scene graph representation. Try uploading photos of everyday scenes, like a living room or a park, and observe how the model identifies the objects, their attributes, and the relationships between them. You can also explore the potential of using the scene graph output for downstream tasks like visual reasoning or image-text matching.


rembg


rembg is an AI model developed by cjwbw that can remove the background from images. It is similar to other background removal models like rmgb, rembg, background_remover, and remove_bg, all of which aim to separate the subject from the background in an image.

Model inputs and outputs

The rembg model takes an image as input and outputs a new image with the background removed. This can be a useful preprocessing step for various computer vision tasks, like object detection or image segmentation.

Inputs

- Image: The input image to have its background removed.

Outputs

- Output: The image with the background removed.

Capabilities

The rembg model can effectively remove the background from a wide variety of images, including portraits, product shots, and nature scenes. It is trained to work well on complex backgrounds and can handle partial occlusions or overlapping objects.

What can I use it for?

You can use rembg to prepare images for further processing, such as creating cut-outs for design work, enhancing product photography, or improving the performance of other computer vision models. For example, you could use it to extract the subject of an image and overlay it on a new background, or to remove distracting elements from an image before running an object detection algorithm.

Things to try

One interesting thing to try with rembg is using it on images with multiple subjects or complex backgrounds. See how it handles separating individual elements and preserving fine details. You can also experiment with using the model's output as input to other computer vision tasks, like image segmentation or object tracking, to see how it impacts the performance of those models.
