mindall-e

Maintainer: cjwbw

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | No paper link provided |

Model overview

minDALL-E is a 1.3B-parameter text-to-image generation model trained on 14 million image-text pairs for non-commercial purposes. It is named after minGPT and, like DALL-E and ImageBART, uses a two-stage approach: the first stage uses a VQGAN [2] model to encode images into discrete tokens and decode tokens back into high-quality images, and the second stage trains a 1.3B-parameter transformer from scratch on the image-text pairs to generate those image tokens from text.
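To make the first stage more concrete, here is a toy sketch of the vector-quantization step that VQGAN-style tokenizers perform: each patch feature vector is replaced by the index of its nearest codebook entry, turning an image into a grid of discrete tokens that a transformer can model alongside text tokens. This is not minDALL-E's VQGAN; the codebook, feature values, the 256x256 image size, and the downsampling factor of 16 are illustrative assumptions.

```python
# Toy illustration of the quantization step in a VQGAN-style first stage.
# Codebook, features, and sizes below are hypothetical, not minDALL-E's.

def nearest_code(vector, codebook):
    """Return the index of the codebook entry closest to `vector` (squared L2)."""
    best_index, best_dist = 0, float("inf")
    for i, code in enumerate(codebook):
        dist = sum((v - c) ** 2 for v, c in zip(vector, code))
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index


def quantize(features, codebook):
    """Map a list of patch feature vectors to a list of discrete token ids."""
    return [nearest_code(f, codebook) for f in features]


def num_image_tokens(image_size, downsample_factor):
    """Number of tokens in the latent grid of a square image."""
    side = image_size // downsample_factor
    return side * side


if __name__ == "__main__":
    codebook = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0)]  # 3 hypothetical codes
    features = [(0.1, -0.1), (0.9, 1.2), (0.2, 0.8)]  # 3 hypothetical patches
    print(quantize(features, codebook))  # [0, 1, 2]
    # Assumed sizes: a 256x256 image downsampled by 16 gives a 16x16 grid.
    print(num_image_tokens(256, 16))  # 256
```

In the second stage, a transformer would then learn to predict such token sequences conditioned on the text tokens, and the first-stage decoder would turn generated tokens back into pixels.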

The Replicate version of the model is maintained by cjwbw, who also maintains other text-to-image models on Replicate, such as anything-v3.0, animagine-xl-3.1, latent-diffusion-text2img, future-diffusion, and hasdx.

Model inputs and outputs

minDALL-E takes a text prompt and generates images that match it. Depending on the prompt, the outputs can include paintings, photos, and digital art.

Inputs

- Prompt: The text prompt that describes the desired image.

- Seed: An optional integer seed value to control the randomness of the generated images.

- Num Samples: The number of images to generate based on the input prompt.

Outputs

- Images: The generated images that match the input prompt.
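The inputs above map naturally onto a request payload. The sketch below assembles and sanity-checks one and shows how it could be sent with the Replicate Python client. The field names `prompt`, `seed`, and `num_samples` are assumptions inferred from the input list, and `model_ref` is a placeholder; check the model's API spec on Replicate for the actual schema and version.

```python
# Sketch: build an input payload for minDALL-E on Replicate.
# ASSUMPTION: field names "prompt", "seed", "num_samples" mirror the input list;
# verify them against the model's API spec before use.
from typing import Optional


def build_mindalle_input(prompt: str, num_samples: int = 1, seed: Optional[int] = None) -> dict:
    """Return a payload dict, omitting the seed when none is given."""
    if not prompt.strip():
        raise ValueError("prompt must be a non-empty string")
    if num_samples < 1:
        raise ValueError("num_samples must be at least 1")
    payload = {"prompt": prompt, "num_samples": num_samples}
    if seed is not None:
        payload["seed"] = seed  # a fixed seed makes runs reproducible
    return payload


def run_mindalle(model_ref: str, payload: dict):
    """Send the payload with the Replicate Python client.

    Requires `pip install replicate` and a REPLICATE_API_TOKEN environment
    variable. `model_ref` is a placeholder of the form "owner/model:version".
    """
    import replicate  # imported lazily so the payload helper works without it

    return replicate.run(model_ref, input=payload)


if __name__ == "__main__":
    print(build_mindalle_input("a painting of a cat with sunglasses in the frame", 4, seed=42))
```

Keeping the payload builder separate from the network call makes it easy to reuse the same seed across prompts when comparing outputs.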

Capabilities

minDALL-E can generate high-quality, detailed images across a wide range of topics and styles, including paintings, photos, and digital art. The model is able to handle diverse prompts, from specific scene descriptions to open-ended creative prompts. It can generate images with natural elements, abstract compositions, and even fantastical or surreal content.

What can I use it for?

minDALL-E could be used for creative applications such as concept art, illustration, and visual storytelling. Its ability to generate distinct images from text prompts could help designers, artists, and content creators who need to produce visual assets quickly. The model's results on MS-COCO reflect how well it generates images from everyday scene captions; as a text-to-image model, it does not itself perform image captioning or visual question answering.

Things to try

One interesting aspect of minDALL-E is how it handles prompts that leave room for interpretation, such as "a painting of a cat with sunglasses in the frame", or that list explicit alternatives, such as "a large pink/black elephant walking on the beach". Across multiple samples, the model can produce images that reflect the different readings of such a prompt. Experimenting with these prompts shows how flexibly the model interprets them.
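One way to run this experiment is to compare samples from an ambiguous prompt such as "pink/black" with samples from each explicit variant. The helper below is a hypothetical prompting aid, not part of minDALL-E: it assumes alternatives are written as slash-separated single words and expands every combination into its own prompt.

```python
# Hypothetical helper: expand "a/b" alternatives into explicit prompts so each
# variant can be generated separately and compared with the ambiguous original.
import itertools
import re

# ASSUMPTION: alternatives are single words joined by "/", e.g. "pink/black".
ALT_PATTERN = re.compile(r"\b\w+(?:/\w+)+\b")


def expand_alternatives(prompt: str) -> list[str]:
    """Return every prompt obtained by choosing one option from each a/b group."""
    groups = ALT_PATTERN.findall(prompt)
    if not groups:
        return [prompt]
    pieces = ALT_PATTERN.split(prompt)  # text surrounding the groups
    options = [g.split("/") for g in groups]
    results = []
    for choice in itertools.product(*options):
        out = pieces[0]
        for word, tail in zip(choice, pieces[1:]):
            out += word + tail
        results.append(out)
    return results


if __name__ == "__main__":
    for p in expand_alternatives("a large pink/black elephant walking on the beach"):
        print(p)
```

Generating the same number of samples for the original prompt and for each expanded variant gives a rough picture of which reading the model favors.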

Additionally, the model's reported results when fine-tuned on ImageNet suggest it could be a useful starting point for transfer learning to other image generation tasks. Fine-tuning it on specialized datasets or custom image styles could unlock additional capabilities.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

docentr

3

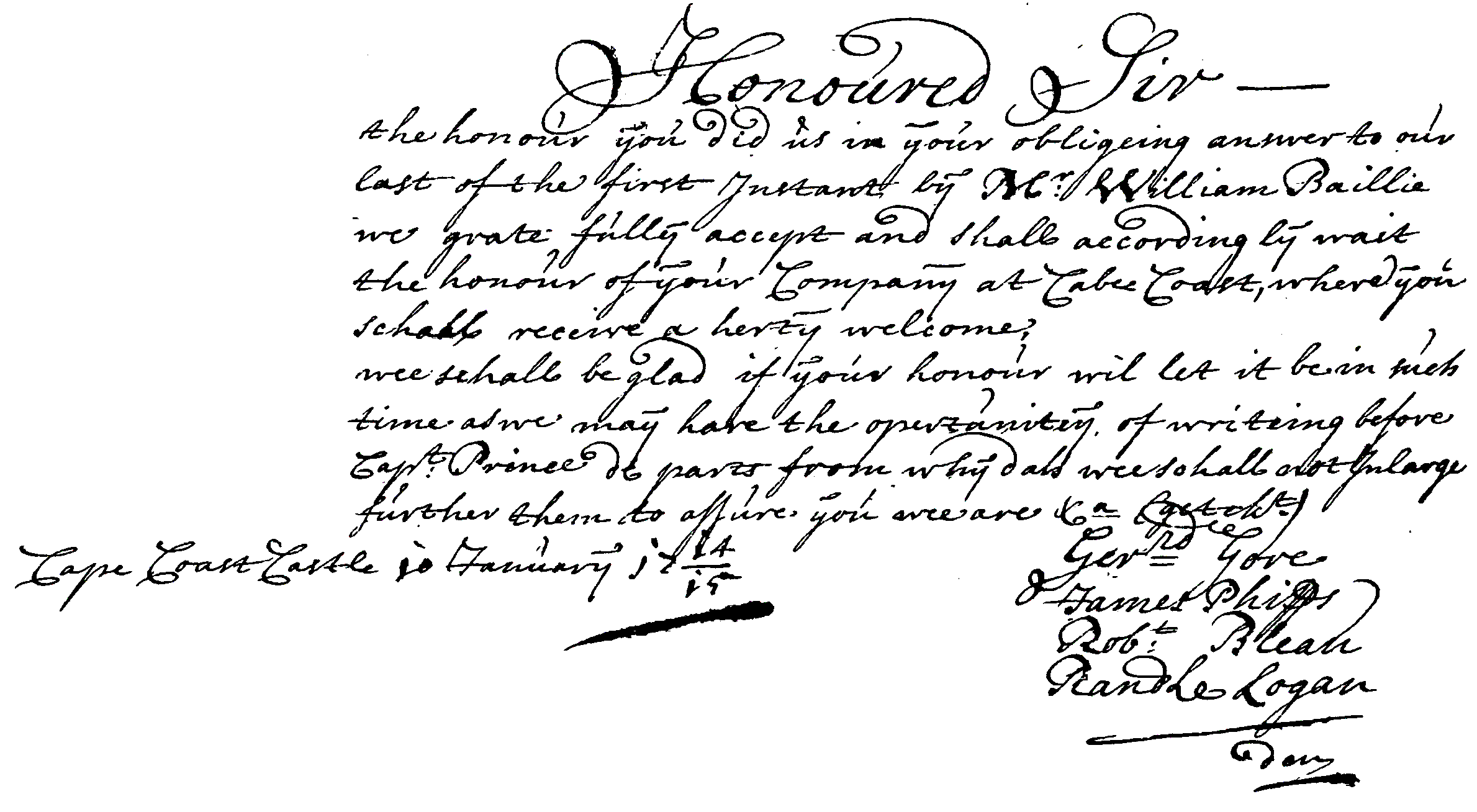

The docentr model is an end-to-end document image enhancement transformer maintained on Replicate by cjwbw. It is a PyTorch implementation of the paper "DocEnTr: An End-to-End Document Image Enhancement Transformer" and is built on top of the vit-pytorch vision transformer library. The model is designed to enhance and binarize degraded document images, as demonstrated in the provided examples.

Model inputs and outputs

The docentr model takes an image as input and produces an enhanced, binarized output image. The input can be a degraded or low-quality document, and the model aims to improve its visual quality through binarization, noise removal, and contrast enhancement.

Inputs

- Image: The input image, in a valid image format (e.g., PNG, JPEG).

Outputs

- Output: The enhanced, binarized output image.

Capabilities

The docentr model performs end-to-end document image enhancement, including binarization, noise removal, and contrast improvement, making degraded or low-quality document images more readable and easier to process. The model has shown promising results on benchmark datasets such as DIBCO, H-DIBCO, and PALM.

What can I use it for?

The docentr model can be useful for applications that process and analyze document images, such as optical character recognition (OCR), document archiving, and image-based document retrieval. By enhancing the input images, it can improve the accuracy and reliability of downstream tasks. It also fits projects in document digitization, historical document restoration, and automated document processing workflows.

Things to try

You can test the docentr model on your own degraded document images and inspect the binarization and enhancement results. The model is also available as a pre-trained Replicate model, so you can apply the enhancement without training it yourself. You can also explore the provided demo notebook to learn how to use the model and customize its configuration.
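If you have ground-truth binarizations for your own documents, you can score docentr's outputs with metrics commonly reported on DIBCO-style benchmarks, such as F-measure and PSNR. The sketch below computes both on small binary images stored as 2-D lists; encoding text pixels as 1 and background as 0 is an assumption made here for simplicity, so adapt it to how your images are stored.

```python
# Sketch of two metrics commonly reported on DIBCO-style binarization benchmarks.
# ASSUMPTION: images are 2-D lists of 0/1 values with 1 = text (foreground).
import math


def f_measure(pred, truth):
    """Harmonic mean of precision and recall over foreground (text) pixels."""
    tp = fp = fn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p == 1 and t == 1:
                tp += 1
            elif p == 1 and t == 0:
                fp += 1
            elif p == 0 and t == 1:
                fn += 1
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def psnr(pred, truth, max_value=1.0):
    """Peak signal-to-noise ratio in dB for pixel values in [0, max_value]."""
    errors = [(p - t) ** 2 for prow, trow in zip(pred, truth) for p, t in zip(prow, trow)]
    mse = sum(errors) / len(errors)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    truth = [[1, 1, 0, 0]]
    pred = [[1, 0, 0, 0]]  # one missed text pixel
    print(f_measure(pred, truth), psnr(pred, truth))
```

Real evaluations would load images with an imaging library and may add benchmark-specific metrics; this sketch only shows what the two numbers measure.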

segmind-vega

segmind-vega is an open-source AI model, maintained on Replicate by cjwbw, that is a distilled and accelerated version of Stable Diffusion XL with a reported 100% speedup. Related models maintained by cjwbw include animagine-xl-3.1, tokenflow, and supir; see also the cog-a1111-ui model created by brewwh.

Model inputs and outputs

segmind-vega is a text-to-image model that takes a text prompt as input and generates a corresponding image. The prompt can describe the desired content, style, and other characteristics of the image. The model also accepts a negative prompt, which specifies elements to exclude from the output, and a seed value that controls the randomness of generation.

Inputs

- Prompt: The text prompt describing the desired image.

- Negative Prompt: Elements that should not appear in the output.

- Seed: A random seed value that controls the stochastic generation process.

Outputs

- Output Image: The generated image corresponding to the input prompt.

Capabilities

segmind-vega can generate a wide variety of photorealistic and imaginative images from text prompts. It has been optimized for speed, generating images faster than the original Stable Diffusion XL model.

What can I use it for?

With segmind-vega, you can create custom images for social media content, marketing materials, product visualizations, and more. Its speed and flexibility make it useful for rapid prototyping and experimentation. You can also run the same prompts through similar models like animagine-xl-3.1 and tokenflow and compare the results.

Things to try

One interesting aspect of segmind-vega is its ability to keep styles and characteristics consistent across multiple prompts. By experimenting with different prompts and studying the outputs, you can gain insight into how the model represents visual concepts, which can inform the development of AI-powered creative tools or the study of how language relates to visual perception.
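A "100% speedup" is easy to misread. Assuming it refers to throughput (twice as many images per unit time), latency halves rather than dropping to zero. The small helper below makes that arithmetic explicit; the baseline timing in the example is a hypothetical number, not a measurement.

```python
# Convert a percentage throughput speedup into expected per-image latency.
# ASSUMPTION: "X% speedup" means throughput is (1 + X/100) times the baseline.

def latency_after_speedup(baseline_seconds: float, speedup_percent: float) -> float:
    """Per-image latency after a speedup given in percent (100% = 2x as fast)."""
    return baseline_seconds / (1 + speedup_percent / 100)


if __name__ == "__main__":
    # Hypothetical baseline of 10 s per image.
    print(latency_after_speedup(10.0, 100))  # 5.0
```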

cogvlm

CogVLM is a powerful open-source visual language model, maintained on Replicate by cjwbw. It comprises a vision transformer encoder, an MLP adapter, a pretrained large language model (GPT), and a visual expert module. CogVLM-17B has 10 billion vision parameters and 7 billion language parameters, and it achieves state-of-the-art performance on 10 classic cross-modal benchmarks, including NoCaps, Flickr30k captioning, and RefCOCO. It can also hold conversations about images. Similar models include segmind-vega, an open-source distilled Stable Diffusion model with a 100% speedup; animagine-xl-3.1, an anime-themed text-to-image Stable Diffusion model; cog-a1111-ui, a collection of anime Stable Diffusion models; and videocrafter, a text-to-video and image-to-video generation and editing model.

Model inputs and outputs

CogVLM accepts both text and image inputs. It can generate detailed image descriptions, answer various types of visual questions, and hold multi-turn conversations about images.

Inputs

- Image: The input image that CogVLM processes.

- Query: The text prompt or question that CogVLM answers about the input image.

Outputs

- Text response: The text CogVLM generates from the input image and query.

Capabilities

CogVLM can describe images in detail with very few hallucinations. It can answer various types of visual questions, and a visual grounding version can link the generated text to specific regions of the input image. CogVLM sometimes captures more detailed content than GPT-4V(ision).

What can I use it for?

With its visual and language understanding, CogVLM can be used for image captioning, visual question answering, image-based dialogue systems, and more. Developers and researchers can use it to build multimodal AI systems that process and understand both visual and textual information.

Things to try

One interesting aspect of CogVLM is its ability to hold multi-turn conversations about images. Try asking a series of related questions about a single image and observe how the model maintains context across turns. You can also experiment with different prompting strategies to see how CogVLM performs on tasks such as detailed image description, visual reasoning, and visual grounding.
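The inputs listed for CogVLM are a single image and a single query, so one way to try a multi-turn conversation is to keep the transcript yourself and fold earlier turns into each new query. The sketch below is hypothetical: the `ImageConversation` class, the "Q:/A:" transcript format, and the idea of passing history inside the query string are assumptions, not a documented CogVLM interface.

```python
# Hypothetical sketch: track a conversation about one image and fold earlier
# turns into each new query string. The "Q:/A:" format is our assumption.
from dataclasses import dataclass, field


@dataclass
class ImageConversation:
    image_path: str
    turns: list = field(default_factory=list)  # list of (question, answer) pairs

    def build_query(self, question: str) -> str:
        """Prefix the new question with earlier turns so the model sees context."""
        lines = [f"Q: {q}\nA: {a}" for q, a in self.turns]
        lines.append(f"Q: {question}\nA:")
        return "\n".join(lines)

    def record(self, question: str, answer: str) -> None:
        """Store a completed turn after receiving the model's answer."""
        self.turns.append((question, answer))


if __name__ == "__main__":
    conv = ImageConversation("photo.jpg")
    conv.record("What animal is this?", "A dog.")
    print(conv.build_query("What color is it?"))
```

Each built query would be sent as the Query input together with the same image; comparing answers with and without the folded-in history shows how much the extra context helps.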

karlo

karlo is a text-conditional image generation model developed by Kakao Brain. It is based on OpenAI's unCLIP, a model for generating images from text prompts, and lets users create high-quality images by describing what they want to see. This makes it useful for applications such as creative content generation, product visualization, and educational materials. Compared with similar models like Stable Diffusion, karlo is described as producing more detailed and realistic outputs, though it may require more computational resources to run. The Replicate version is maintained by cjwbw, who also maintains wuerstchen, shap-e, and text2video-zero.

Model inputs and outputs

karlo takes a text prompt as input and generates corresponding images as output. Users can control parameters such as the number of inference steps, the guidance scales, and the random seed.

Inputs

- Prompt: The text description of the image you want to generate.

- Seed: A random seed value that controls the randomness of the output.

- Prior Guidance Scale: The guidance scale for the prior, which controls how strongly the text prompt steers the image embedding the prior produces.

- Decoder Guidance Scale: The guidance scale for the decoder, which controls how strongly the conditioning steers the image generated from that embedding.

- Prior Num Inference Steps: The number of denoising steps for the prior, which affects the quality of the generated image.

- Decoder Num Inference Steps: The number of denoising steps for the decoder, which also affects the quality of the generated image.

- Super Res Num Inference Steps: The number of denoising steps for the super-resolution stage, which can improve the sharpness of the generated image.

Outputs

- Image: The generated image corresponding to the input text prompt.
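The two guidance-scale inputs are easiest to understand through classifier-free guidance, which unCLIP-style models use in both the prior and the decoder. The sketch below applies the standard guidance formula, guided = uncond + scale * (cond - uncond), to toy vectors; it assumes karlo follows this standard form and is not karlo's own code.

```python
# Standard classifier-free guidance on toy vectors.
# ASSUMPTION: karlo's prior and decoder guidance scales follow this formula.

def classifier_free_guidance(uncond, cond, scale):
    """Combine unconditional and conditional predictions element-wise.

    scale = 1 reproduces the conditional prediction; larger values push the
    result further in the direction the prompt suggests; 0 ignores the prompt.
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    uncond = [0.0, 1.0]  # hypothetical prediction without the prompt
    cond = [1.0, 1.0]    # hypothetical prediction with the prompt
    print(classifier_free_guidance(uncond, cond, 4.0))  # [4.0, 1.0]
```

Because the guidance extrapolates past the conditional prediction, very high scales can exaggerate prompt features, which is one reason to sweep both scales when tuning.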
Capabilities

karlo can generate a wide range of high-quality images from text prompts, producing detailed, realistic, and visually appealing results across subjects including landscapes, objects, and animals. It can also handle complex prompts with multiple elements.

What can I use it for?

karlo can be used for a variety of applications, such as:

- Creative content generation: Generate distinctive, visually striking images for digital art, social media, advertising, and other creative projects.

- Product visualization: Create realistic product images and visualizations to showcase new products or concepts.

- Educational materials: Generate images to illustrate educational content, such as textbooks, presentations, and online courses.

- Prototyping and mockups: Quickly generate visual assets for prototypes and mockups, speeding up the design process.

Things to try

Some interesting things to try with karlo include:

- Experimenting with different prompts to see the range of images the model can generate.

- Adjusting input parameters such as the guidance scales and number of inference steps to find the best settings for your use case.

- Combining karlo with other models, such as Stable Diffusion 2-1-unclip, to explore more advanced image generation.

- Exploring the model's ability to produce highly detailed, realistic images for visually compelling content.
