flux-softserve-anime

Maintainer: aramintak

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | No GitHub link provided |
| Paper link | View on arXiv |

Model overview

flux-softserve-anime is a text-to-image model developed by aramintak. It is built on the FLUX architecture and generates anime-style illustrations from text prompts. Related text-to-image models include sdxl-lightning-4step, flux-dev-multi-lora, and cog-a1111-ui.

Model inputs and outputs

flux-softserve-anime takes a text prompt and generates an anime-style illustration. The image size, aspect ratio, and number of inference steps are configurable, as is the strength of the LoRA (Low-Rank Adaptation) applied to the model.

Inputs

- Prompt: The text prompt describing the desired image

- Seed: A random seed for reproducible generation

- Model: The specific model to use for inference (e.g. "dev" or "schnell")

- Width & Height: The desired size of the generated image (optional, used when aspect ratio is set to "custom")

- Aspect Ratio: The aspect ratio of the generated image (e.g. "1:1", "16:9", "custom")

- LoRA Scale: The strength with which the LoRA is applied

- Num Outputs: The number of images to generate

- Guidance Scale: The guidance scale for the diffusion process

- Num Inference Steps: The number of inference steps to perform

- Disable Safety Checker: An option to disable the safety checker for the generated images

Outputs

- The generated anime-style illustration(s) in the specified format (e.g. WEBP)
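The inputs listed above can be assembled into a request payload along the following lines. This is a minimal sketch, not the model's documented API: the key names are assumed to be snake_case forms of the listed inputs, `MODEL_REF` is an assumed reference built from the maintainer and model names, and `build_input` and `generate` are hypothetical helpers. Only `replicate.run` comes from the Replicate Python client.

```python
from typing import Any, Dict, Optional

# Assumed model reference, built from the maintainer and model names on this page.
# Replicate may also require a ":<version>" suffix, which is not given here.
MODEL_REF = "aramintak/flux-softserve-anime"


def build_input(
    prompt: str,
    aspect_ratio: str = "1:1",
    width: Optional[int] = None,
    height: Optional[int] = None,
    **options: Any,
) -> Dict[str, Any]:
    """Assemble an input payload for the model.

    Key names are assumed snake_case forms of the inputs listed above
    (for example lora_scale, num_outputs, num_inference_steps, seed).
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")

    payload: Dict[str, Any] = {"prompt": prompt, "aspect_ratio": aspect_ratio}

    # Width and height only apply when the aspect ratio is "custom".
    if aspect_ratio == "custom":
        if width is None or height is None:
            raise ValueError("a custom aspect ratio needs both width and height")
        payload["width"] = width
        payload["height"] = height

    # Pass through any other inputs that were explicitly set.
    payload.update({k: v for k, v in options.items() if v is not None})
    return payload


def generate(payload: Dict[str, Any]) -> Any:
    """Send the payload to Replicate (needs the `replicate` package and an API token)."""
    import replicate  # lazy import so build_input works without the client installed

    return replicate.run(MODEL_REF, input=payload)


example = build_input(
    "a girl reading under a cherry tree",
    aspect_ratio="16:9",
    lora_scale=0.9,
    num_outputs=1,
    seed=42,
)
print(example)
```

Keeping payload construction separate from the network call makes it possible to check the settings locally before spending any generation time.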

Capabilities

flux-softserve-anime can generate high-quality anime-style illustrations from text prompts. It can produce a variety of anime art styles and capture intricate details and diverse scenes. The LoRA scale controls how strongly the style is applied, while the number of inference steps trades image quality against generation speed.

What can I use it for?

flux-softserve-anime can be used to create illustrations for a variety of applications, such as anime-themed videos, games, or digital art. The model's ability to generate diverse, high-quality images based on text prompts makes it a powerful tool for artists, designers, and content creators looking to incorporate anime-style elements into their work. Additionally, the model could be used to rapidly prototype or visualize ideas for anime-inspired projects.

Things to try

One parameter worth exploring is the LoRA scale, which controls how strongly the LoRA is applied to the model. Varying it lets users compare different levels of artistic fidelity and stylization in the generated images. Varying the number of inference steps, in turn, shows how image quality trades off against generation speed, which helps in finding settings that suit a specific need.
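One way to run the experiment described above is to generate every combination of a few LoRA scales and step counts while holding the prompt and seed fixed, so that differences between images come only from those two settings. The sketch below only builds the payloads. `sweep_settings` is a hypothetical helper, the key names are assumed snake_case forms of the listed inputs, and the specific values are arbitrary examples rather than recommended settings.

```python
from itertools import product
from typing import Any, Dict, Iterable, List


def sweep_settings(
    prompt: str,
    lora_scales: Iterable[float],
    step_counts: Iterable[int],
    seed: int = 0,
) -> List[Dict[str, Any]]:
    """Return one payload per (lora_scale, num_inference_steps) pair.

    The seed is fixed so that runs differ only in the swept settings.
    """
    return [
        {
            "prompt": prompt,
            "seed": seed,
            "lora_scale": scale,
            "num_inference_steps": steps,
        }
        for scale, steps in product(lora_scales, step_counts)
    ]


# Arbitrary example values, chosen only to illustrate the grid.
grid = sweep_settings("a lighthouse at dusk", [0.6, 0.8, 1.0], [12, 28])
for payload in grid:
    print(payload["lora_scale"], payload["num_inference_steps"])
```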

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

flux-koda

flux-koda is a LoRA-based model created by Replicate user aramintak. It is part of the "Flux" series of models, which includes similar models like flux-cinestill, flux-dev-multi-lora, and flux-softserve-anime. These models are designed to produce images with a distinctive visual style by applying LoRA techniques.

Model inputs and outputs

The flux-koda model accepts a variety of inputs, including the prompt, seed, aspect ratio, and guidance scale. The output is an array of image URLs, with the number of outputs determined by the "Num Outputs" parameter.

Inputs

- Prompt: The text prompt that describes the desired image.

- Seed: The random seed value used for reproducible image generation.

- Width/Height: The size of the generated image, in pixels.

- Aspect Ratio: The aspect ratio of the generated image, which can be set to a predefined value or to "custom" for arbitrary dimensions.

- Num Outputs: The number of images to generate, up to a maximum of 4.

- Guidance Scale: A parameter that controls the influence of the prompt on the generated image.

- Num Inference Steps: The number of steps used in the diffusion process to generate the image.

- Extra LoRA: An additional LoRA model to be combined with the primary model.

- LoRA Scale: The strength of the primary LoRA model.

- Extra LoRA Scale: The strength of the additional LoRA model.

Outputs

- Image URLs: An array of URLs pointing to the generated images.

Capabilities

The flux-koda model generates images with a unique visual style by applying a LoRA to the FLUX base model. The resulting images often have a painterly, cinematic quality that is distinct from the output of the unmodified base model.

What can I use it for?

The flux-koda model could be used for a variety of creative projects, such as generating concept art, illustrations, or background images for films, games, or other media. Its distinctive style could also be leveraged for branding, marketing, or advertising purposes. Additionally, the model's ability to generate multiple images at once could make it useful for rapid prototyping or experimentation.

Things to try

One interesting aspect of the flux-koda model is the ability to combine it with additional LoRA models, as demonstrated by the flux-dev-multi-lora and flux-softserve-anime models. By experimenting with different LoRA combinations, users may be able to create even more unique and compelling visual styles.
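Combining a primary LoRA with an additional one comes down to setting three of the inputs described for flux-koda together. The sketch below shows one way to layer those settings onto an existing payload. `with_extra_lora` is a hypothetical helper, the key names `extra_lora`, `lora_scale`, and `extra_lora_scale` are assumed snake_case forms of the listed inputs, and `"owner/some-style-lora"` is a placeholder rather than a real model.

```python
from typing import Any, Dict


def with_extra_lora(
    base_input: Dict[str, Any],
    extra_lora: str,
    lora_scale: float = 1.0,
    extra_lora_scale: float = 1.0,
) -> Dict[str, Any]:
    """Return a copy of base_input with settings for a second LoRA added."""
    if not extra_lora:
        raise ValueError("extra_lora must name an additional LoRA")
    merged = dict(base_input)  # copy so the caller's payload is left untouched
    merged["extra_lora"] = extra_lora
    merged["lora_scale"] = lora_scale
    merged["extra_lora_scale"] = extra_lora_scale
    return merged


base = {"prompt": "a quiet harbor at sunrise", "num_outputs": 2}
# "owner/some-style-lora" is a placeholder identifier, not a real model.
combined = with_extra_lora(base, "owner/some-style-lora", lora_scale=0.8, extra_lora_scale=0.5)
print(combined)
```

Lowering one scale while raising the other is a simple way to explore how much each LoRA contributes to the final style.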

softserve_anime

The softserve_anime model, created by alvdansen, is a newly trained Flux Dev model capable of generating stylized anime-inspired illustrations. This model is similar to other Flux models like flux-softserve-anime and flux_film_foto, which also aim to produce unique artistic styles. The softserve_anime model has a Dim/Rank of 64, making it a bit larger than some of the other Flux models.

Model inputs and outputs

The softserve_anime model takes text prompts as input and generates corresponding anime-style illustrations as output. The text prompts should include the phrase "sftsrv style illustration" to trigger the desired style.

Inputs

- Text prompts describing the desired image, such as "a smart person, sftsrv style" or "a girl wearing a duck themed raincoat, sftsrv style"

Outputs

- Unique anime-inspired illustrations matching the provided text prompts

Capabilities

The softserve_anime model is capable of generating a wide range of stylized anime-inspired illustrations based on the provided text prompts. The images exhibit a distinctive soft, pastel-like aesthetic with an emphasis on fantasy and whimsical elements. The model can depict a variety of subjects, from people and animals to food and landscapes, all in the characteristic "sftsrv" style.

What can I use it for?

The softserve_anime model could be useful for a variety of creative projects, such as generating illustrations for anime-themed games, books, or merchandise. The unique style of the images could also be leveraged for social media content, product design, or other visual art applications. Given the model's sponsorship by Glif, it may be particularly well-suited for projects related to or supported by that platform.

Things to try

One interesting aspect of the softserve_anime model is its ability to depict a wide range of subject matter in a consistent, recognizable style. Experimenting with different prompts, from realistic scenes to more fantastical elements, can showcase the model's flexibility and versatility. Additionally, combining the "sftsrv style illustration" prompt with other descriptors, such as "vintage" or "faded film," could lead to intriguing variations on the model's output.
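Because the style depends on the trigger phrase being present in the prompt, a small helper can add it whenever it is missing. The sketch below is one possible approach. `with_trigger` is a hypothetical helper, and the phrase is the "sftsrv style illustration" trigger quoted in the description above.

```python
TRIGGER = "sftsrv style illustration"


def with_trigger(prompt: str, trigger: str = TRIGGER) -> str:
    """Append the trigger phrase unless the prompt already contains it."""
    cleaned = prompt.strip().rstrip(",").rstrip()
    if not cleaned:
        return trigger
    # Case-insensitive check so "Sftsrv Style Illustration" also counts.
    if trigger.lower() in cleaned.lower():
        return cleaned
    return f"{cleaned}, {trigger}"


print(with_trigger("a smart person"))
print(with_trigger("a vintage tram, sftsrv style illustration"))
```

Extra descriptors such as "vintage" or "faded film" can be written into the prompt before calling the helper, which keeps the trigger phrase at the end.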

sdxl-lightning-4step

sdxl-lightning-4step is a fast text-to-image model developed by ByteDance that can generate high-quality images in just 4 steps. It is similar to other fast diffusion models like AnimateDiff-Lightning and Instant-ID MultiControlNet, which also aim to speed up the image generation process. Unlike the original Stable Diffusion model, these fast models sacrifice some flexibility and control to achieve faster generation times.

Model inputs and outputs

The sdxl-lightning-4step model takes in a text prompt and various parameters to control the output image, such as the width, height, number of images, and guidance scale. The model can output up to 4 images at a time, with a recommended image size of 1024x1024 or 1280x1280 pixels.

Inputs

- Prompt: The text prompt describing the desired image

- Negative prompt: A prompt that describes what the model should not generate

- Width: The width of the output image

- Height: The height of the output image

- Num outputs: The number of images to generate (up to 4)

- Scheduler: The algorithm used to sample the latent space

- Guidance scale: The scale for classifier-free guidance, which controls the trade-off between fidelity to the prompt and sample diversity

- Num inference steps: The number of denoising steps, with 4 recommended for best results

- Seed: A random seed to control the output image

Outputs

- Image(s): One or more images generated based on the input prompt and parameters

Capabilities

The sdxl-lightning-4step model is capable of generating a wide variety of images based on text prompts, from realistic scenes to imaginative and creative compositions. The model's 4-step generation process allows it to produce high-quality results quickly, making it suitable for applications that require fast image generation.

What can I use it for?

The sdxl-lightning-4step model could be useful for applications that need to generate images in real-time, such as video game asset generation, interactive storytelling, or augmented reality experiences. Businesses could also use the model to quickly generate product visualization, marketing imagery, or custom artwork based on client prompts. Creatives may find the model helpful for ideation, concept development, or rapid prototyping.

Things to try

One interesting thing to try with the sdxl-lightning-4step model is to experiment with the guidance scale parameter. By adjusting the guidance scale, you can control the balance between fidelity to the prompt and diversity of the output. Lower guidance scales may result in more unexpected and imaginative images, while higher scales will produce outputs that are closer to the specified prompt.

Updated Invalid Date

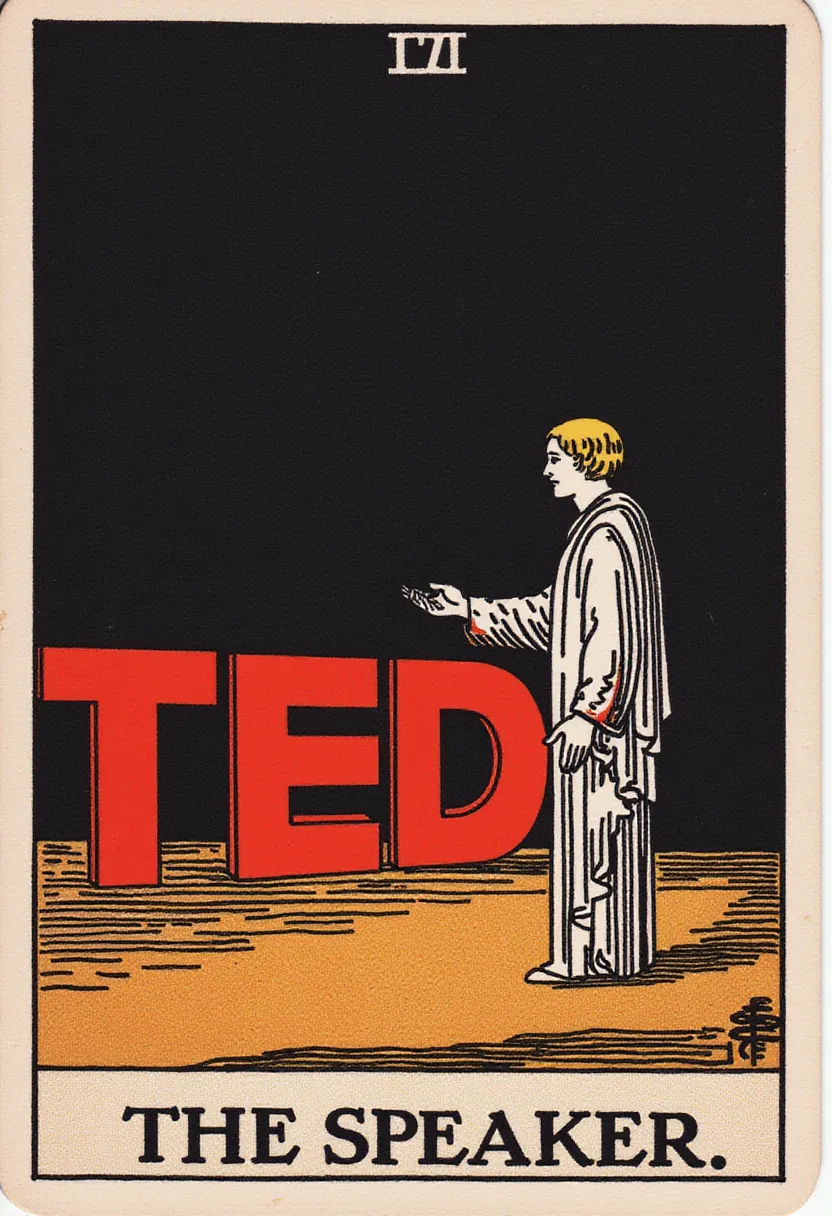

flux-tarot-v1

flux-tarot-v1 is a FLUX LoRA model created by Apolinario that can generate images in the style of tarot cards. This model is similar to other Flux LoRA models like flux-koda, flux-ps1-style, and flux-cinestill, which also explore different artistic styles.

Model inputs and outputs

flux-tarot-v1 takes a prompt as input and generates one or more tarot-style images. The model supports a variety of parameters, including the ability to set the seed, output size, number of steps, and more. The output is a set of image URLs that can be downloaded.

Inputs

- Prompt: The text prompt that describes the desired image

- Seed: A random seed value for reproducible generation

- Model: The specific model to use for inference (e.g. "dev" or "schnell")

- Width/Height: The size of the generated image (only used when aspect ratio is set to "custom")

- Aspect Ratio: The aspect ratio of the generated image (e.g. "1:1", "16:9")

- Num Outputs: The number of images to generate

- Guidance Scale: The guidance scale for the diffusion process

- Num Inference Steps: The number of inference steps to perform

- Extra LoRA: Additional LoRA models to combine with the main model

- LoRA Scale: The scale factor for applying the LoRA model

- Extra LoRA Scale: The scale factor for applying the additional LoRA model

Outputs

- Image URLs: A set of URLs representing the generated images

Capabilities

flux-tarot-v1 can generate unique tarot-style images based on a text prompt. The model is able to capture the aesthetic and symbolism of traditional tarot cards, while still allowing for a wide range of creative interpretations. This could be useful for projects involving tarot, divination, or esoteric imagery.

What can I use it for?

flux-tarot-v1 could be used to create custom tarot decks, tarot-inspired art, or illustrations for divination-themed products and services. Apolinario, the creator of the model, has used it to explore the intersection of AI and esoteric practices.

Things to try

Experiment with different prompts to see the range of styles and interpretations the model can produce. Try combining flux-tarot-v1 with other LoRA models to create unique hybrid styles. You could also use the model to generate a full tarot deck or explore the narrative and symbolic potential of the tarot through AI-generated images.
