grounded_sam

Maintainer: schananas


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on arXiv |


Model overview

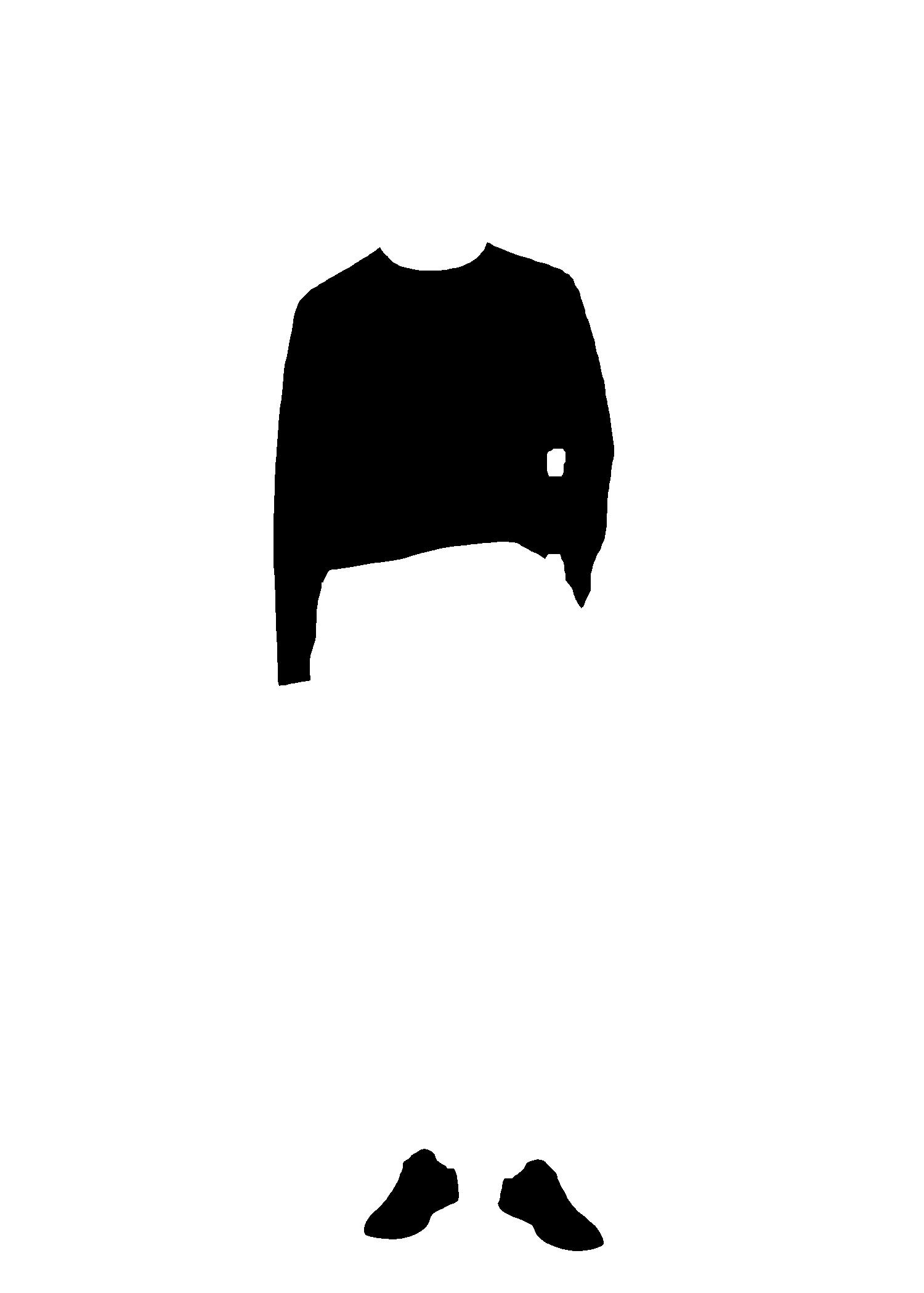

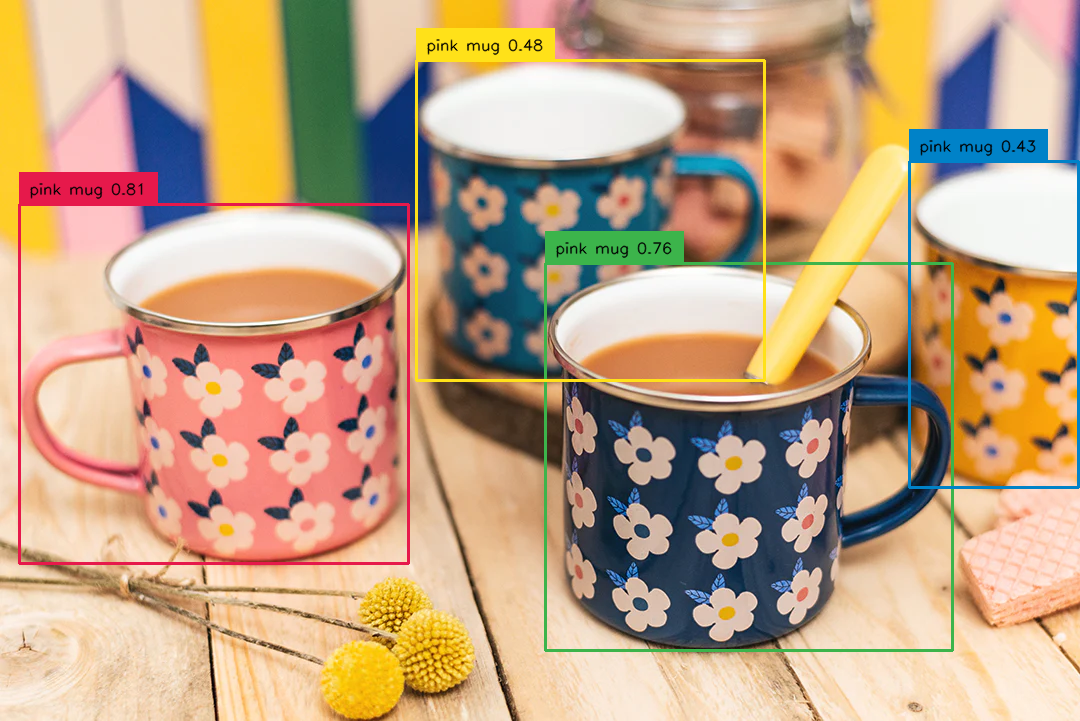

grounded_sam combines Grounding DINO and Segment Anything (SAM) into a text-driven masking pipeline. Grounding DINO is a zero-shot object detector that produces bounding boxes and labels from free-form text, and Segment Anything is a segmentation model that turns prompts such as those boxes into pixel-level masks. On top of this pipeline, the project lets you prompt for several masks and combine them, and subtract negative masks for finer control.
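Adding positive masks together and subtracting negative ones comes down to boolean operations. The following is a minimal sketch, assuming every mask is a same-sized grid of booleans. The list-of-lists representation and the `union` and `combine_masks` helpers are hypothetical and are not taken from the project's code.

```python
from typing import List, Sequence

# Hypothetical mask representation: mask[row][col] is True for pixels inside the mask.
Mask = List[List[bool]]


def union(masks: Sequence[Mask], height: int, width: int) -> Mask:
    """Pixel-wise OR of all masks; an empty sequence gives an all-False mask."""
    out = [[False] * width for _ in range(height)]
    for mask in masks:
        for r in range(height):
            for c in range(width):
                out[r][c] = out[r][c] or mask[r][c]
    return out


def combine_masks(
    positive: Sequence[Mask], negative: Sequence[Mask], height: int, width: int
) -> Mask:
    """Keep pixels covered by at least one positive mask and by no negative mask."""
    pos = union(positive, height, width)
    neg = union(negative, height, width)
    return [[pos[r][c] and not neg[r][c] for c in range(width)] for r in range(height)]


# Usage: two positive masks (e.g. "person" and "dog") minus one negative mask ("collar").
person = [[True, True, False], [False, False, False]]
dog = [[False, False, False], [False, True, True]]
collar = [[False, False, False], [False, False, True]]
print(combine_masks([person, dog], [collar], height=2, width=3))
# [[True, True, False], [False, True, False]]
```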

Model inputs and outputs

grounded_sam takes four inputs: an image, a positive mask prompt, a negative mask prompt, and an adjustment factor. It returns masks for the objects or regions that match the positive prompt, with any areas matching the negative prompt excluded. The adjustment factor then dilates or erodes the resulting masks.

Inputs

- Image: The input image to be masked.

- Mask Prompt: The text prompt used to identify the objects or regions of interest.

- Negative Mask Prompt: The text prompt used to exclude certain areas from the mask.

- Adjustment Factor: An integer that dilates the generated masks when positive (+) and erodes them when negative (-).
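Positive and negative adjustment factors correspond to the standard morphological operations of dilation and erosion. The sketch below assumes that one unit of adjustment equals one pass over a 3x3 neighbourhood, which is roughly one pixel. The model's actual kernel size and implementation are not documented here, and `adjust_mask` is a hypothetical helper.

```python
from typing import List

Mask = List[List[bool]]  # mask[row][col] is True inside the mask


def _morph_step(mask: Mask, dilate: bool) -> Mask:
    """One pass over each pixel's 3x3 neighbourhood (pixels outside the image are ignored).

    Dilation turns a pixel on if any neighbour is on; erosion keeps it on only if
    every neighbour is on.
    """
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            neighbourhood = [
                mask[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, h))
                for cc in range(max(c - 1, 0), min(c + 2, w))
            ]
            out[r][c] = any(neighbourhood) if dilate else all(neighbourhood)
    return out


def adjust_mask(mask: Mask, adjustment_factor: int) -> Mask:
    """Dilate for positive factors, erode for negative ones, one pass per unit."""
    for _ in range(abs(adjustment_factor)):
        mask = _morph_step(mask, dilate=adjustment_factor > 0)
    return mask


# Usage: grow a single-pixel mask into a 3x3 block, then shrink it back.
seed = [[False] * 5 for _ in range(5)]
seed[2][2] = True
grown = adjust_mask(seed, 1)
print(sum(map(sum, grown)))  # 9
print(adjust_mask(grown, -1) == seed)  # True
```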

Outputs

- Masks: An array of image URIs representing the generated masks.
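Here is a minimal sketch of calling the hosted model from Python with the official `replicate` client. The input key names (`image`, `mask_prompt`, `negative_mask_prompt`, `adjustment_factor`) are assumed to mirror the inputs listed above. Confirm them, and copy the version hash, from the model's API spec on Replicate. Both helper functions are hypothetical.

```python
from typing import Any, Dict, List


def build_grounded_sam_input(
    image: Any,
    mask_prompt: str,
    negative_mask_prompt: str = "",
    adjustment_factor: int = 0,
) -> Dict[str, Any]:
    """Assemble the model inputs into a payload (the key names are an assumption)."""
    if not mask_prompt.strip():
        # Client-side sanity check added for this sketch, not a documented model rule.
        raise ValueError("mask_prompt must not be empty")
    return {
        "image": image,  # an image URL, or an open binary file handle
        "mask_prompt": mask_prompt,
        "negative_mask_prompt": negative_mask_prompt,
        "adjustment_factor": int(adjustment_factor),  # >0 dilates, <0 erodes
    }


def run_grounded_sam(payload: Dict[str, Any], model_ref: str) -> List[Any]:
    """Run the model and collect its outputs (expected to be mask image URIs).

    model_ref should look like "schananas/grounded_sam:<version>", with the
    version hash taken from the model page. Requires REPLICATE_API_TOKEN.
    """
    import replicate  # imported here so building payloads does not need the client

    return list(replicate.run(model_ref, input=payload))
```

For example, `run_grounded_sam(build_grounded_sam_input("https://example.com/photo.jpg", "shirt", "face", -5), "schananas/grounded_sam:<version>")` would return the list of mask outputs. Depending on the client version, the items may be file objects rather than plain URL strings.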

Capabilities

grounded_sam is suited to programmatic inpainting and selective masking. From text prompts alone, it can target specific objects or regions in an image while excluding unwanted areas. This makes it useful for tasks like image editing, content creation, and data annotation.

What can I use it for?

grounded_sam can be used for a variety of applications, such as:

- Image Editing: Precisely mask and modify specific elements in an image, such as removing objects, replacing backgrounds, or adjusting the appearance of specific regions.

- Content Creation: Generate custom masks for use in digital art, compositing, or other creative projects.

- Data Annotation: Automate the process of annotating images for tasks like object detection, instance segmentation, and more.

Things to try

One thing to try with grounded_sam is generating masks for programmatic inpainting. Use the positive prompt for the areas you want to change and the negative prompt for the areas you want to protect, then use the adjustment factor to grow or shrink the mask as needed. The resulting mask can drive object removal, image restoration, or content-aware fill.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models


ram-grounded-sam


ram-grounded-sam is an AI model that combines the Recognize Anything Model (RAM) with the Grounded-Segment-Anything model. It is designed to detect and segment a wide range of common objects in images from free-form text prompts. It builds on the Segment Anything Model (SAM) and the Grounding DINO detector to provide a versatile tool for visual understanding tasks.

Model inputs and outputs

The ram-grounded-sam model takes an input image and a text prompt, and generates segmentation masks for the objects and regions described in the prompt. The prompt can be a free-form description of the objects or scenes of interest, which gives flexible control over the model's behavior.

Inputs

- Image: The input image for which the model will generate segmentation masks.

- Text Prompt: A free-form text description of the objects or scenes of interest in the input image.

Outputs

- Segmentation Masks: A set of segmentation masks, each outlining the boundaries of an object or region described in the text prompt.

- Bounding Boxes: Boxes around the detected objects, useful for tasks like object detection or localization.

- Confidence Scores: A score for each detected object, indicating the model's certainty about the object's presence and segmentation.

Capabilities

The ram-grounded-sam model can detect and segment a wide variety of common objects and scenes, from everyday household items to natural landscapes. It can handle prompts that describe multiple objects or scenes and segment each relevant entity. Its zero-shot performance lets it generalize to domains and tasks beyond its training data.

What can I use it for?

ram-grounded-sam can be applied to a variety of computer vision and image understanding tasks, including:

- Automated Image Annotation: Automatically generate labels and masks for image contents, which helps when building and annotating large-scale image datasets.

- Interactive Image Editing: Precise segmentation of objects and regions lets users select and manipulate specific elements of an image.

- Visual Question Answering: The model's ability to segment image contents from text prompts could support more advanced visual question answering systems.

- Robotic Perception: The model's segmentation output could be integrated into robotic systems for finer-grained visual understanding of, and interaction with, the environment.

Things to try

One interesting aspect of ram-grounded-sam is its handling of complex, open-ended text prompts. Try prompts that describe multiple objects or scenes, or use more abstract or descriptive language, and see how the model responds. You can also test its generalization with challenging or unusual images.

Another direction is to combine ram-grounded-sam with other AI models, such as language models or generative models. For example, its segmentation outputs could guide the generation of new image content or the editing of existing images.
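The RAM + Grounded-SAM combination can be pictured as a three-stage pipeline: RAM proposes tags, Grounding DINO turns them into boxes, and SAM turns each box into a mask. The sketch below shows only that data flow, under the assumption that RAM's tags serve as the detection prompt. `tagger`, `detector`, and `segmenter` are hypothetical stand-ins for the three models. The ` . ` separator follows the Grounding DINO README's suggestion for separating category names, not anything documented for ram-grounded-sam.

```python
from typing import Any, Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)
Detection = Tuple[str, Box, float]  # (label, box, confidence)


def tags_to_prompt(tags: Sequence[str]) -> str:
    """Join recognized tags into a single detection prompt, e.g. 'dog . frisbee .'."""
    cleaned = [t.strip() for t in tags if t.strip()]
    return " . ".join(cleaned) + " ." if cleaned else ""


def label_image(
    image: Any,
    tagger: Callable[[Any], List[str]],
    detector: Callable[[Any, str], List[Detection]],
    segmenter: Callable[[Any, Box], Any],
) -> List[Tuple[str, Box, float, Any]]:
    """Tag the image, detect the tagged objects, then segment each detected box."""
    prompt = tags_to_prompt(tagger(image))
    if not prompt:
        return []  # nothing recognized, so nothing to detect or segment
    return [
        (label, box, score, segmenter(image, box))
        for label, box, score in detector(image, prompt)
    ]
```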


grounding-dino


grounding-dino is an AI model that detects arbitrary objects in images from human text inputs such as category names or referring expressions. It combines a Transformer-based detector called DINO with grounded pre-training to achieve open-vocabulary, text-guided object detection. The model was developed by IDEA Research and is available as a Cog model on Replicate. A similar model is GroundingDINO, which uses the same Grounding DINO approach. Related but different models include stable-diffusion, an image generator, and text-extract-ocr, a text-recognition model; neither is an object detector.

Model inputs and outputs

grounding-dino takes an image and a comma-separated list of text queries describing the objects you want to detect. It outputs the detected objects with bounding boxes and predicted labels. The confidence thresholds for the box and label predictions are adjustable.

Inputs

- image: The input image to query.

- query: Comma-separated text queries describing the objects to detect.

- box_threshold: Confidence threshold for object detection.

- text_threshold: Confidence threshold for predicted labels.

- show_visualisation: Whether to draw the bounding boxes on the image.

Outputs

- Detected objects with bounding boxes and predicted labels.

Capabilities

grounding-dino can detect a wide variety of objects in images from natural-language descriptions alone. Because detection is open-vocabulary, you can query for any object rather than a predefined set of categories. This makes it useful for tasks like content moderation, image retrieval, and visual analysis.

What can I use it for?

You can use grounding-dino for applications that require object detection, such as:

- Visual search: Find specific objects in large image databases using text queries.

- Automated content moderation: Detect inappropriate or harmful objects in user-generated content.

- Augmented reality: Overlay relevant information on real-world objects using text-guided object detection.

- Robotic perception: Help robots understand and interact with their environment through language-guided object detection.

Things to try

Experiment with different kinds of text queries to see how the model handles various object descriptions, and adjust the confidence thresholds to balance the precision and recall of the detections. You can also integrate grounding-dino into your own applications to add open-vocabulary object detection.
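The two thresholds act as two independent filters: box_threshold decides whether a box is reported at all, and text_threshold decides whether its label is trusted. The sketch below is a simplification built on that view, since the real Grounding DINO builds labels from per-token scores. `Detection`, `parse_queries`, and `apply_thresholds` are hypothetical names, and in practice the scores would come from the model.

```python
from dataclasses import dataclass, replace
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Detection:
    box: Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels
    label: str
    box_score: float  # confidence that the box contains a queried object
    label_score: float  # confidence in the predicted label


def parse_queries(query: str) -> List[str]:
    """Split a comma-separated query string into trimmed, non-empty phrases."""
    return [part.strip() for part in query.split(",") if part.strip()]


def apply_thresholds(
    detections: Sequence[Detection], box_threshold: float, text_threshold: float
) -> List[Detection]:
    """Drop low-confidence boxes; blank out labels whose score is too low."""
    kept = []
    for det in detections:
        if det.box_score <= box_threshold:
            continue  # box not confident enough to report
        if det.label_score <= text_threshold:
            det = replace(det, label="")  # keep the box, but without a trusted label
        kept.append(det)
    return kept


# Usage
print(parse_queries("cat, red car ,, person"))  # ['cat', 'red car', 'person']
```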


segment-anything-automatic


The segment-anything-automatic model, created by pablodawson, is a version of the Segment Anything Model (SAM) that automatically generates segmentation masks for all objects in an image. SAM, developed by Meta AI Research, produces high-quality object masks from simple input prompts such as points or bounding boxes. Similar models include segment-anything-everything and the official segment-anything model.

Model inputs and outputs

The segment-anything-automatic model takes an image as input and generates segmentation masks for every object in it. Several parameters control the mask generation process, including the resize width, the number of crop layers, and the non-maximum suppression thresholds.

Inputs

- image: The input image to generate segmentation masks for.

- resize_width: The width to resize the image to before running inference (default 1024).

- crop_n_layers: The number of layers to run mask prediction on crops of the image (default 0).

- box_nms_thresh: The box IoU cutoff used by non-maximum suppression to filter duplicate masks (default 0.7).

- crop_nms_thresh: The box IoU cutoff used by non-maximum suppression to filter duplicate masks between different crops (default 0.7).

- points_per_side: The number of points sampled along one side of the image (default 32).

- pred_iou_thresh: A filtering threshold between 0 and 1 on the model's predicted mask quality (default 0.88).

- crop_overlap_ratio: The degree to which crops overlap (default 0.3413333333333333).

- min_mask_region_area: The minimum area of a mask region to keep after postprocessing (default 0).

- stability_score_offset: The amount to shift the cutoff when calculating the stability score (default 1).

- stability_score_thresh: A filtering threshold between 0 and 1 on the mask's stability under changes to the cutoff (default 0.95).

- crop_n_points_downscale_factor: The factor by which the number of points per side is scaled down in each crop layer (default 1).

Outputs

- Output: A URI to the generated segmentation masks for the input image.

Capabilities

The segment-anything-automatic model generates high-quality segmentation masks for all objects in an image without user-supplied prompts. Instead, it samples a regular grid of point prompts internally, with density set by points_per_side. This makes it useful for image analysis, object detection, and image editing. SAM's strong zero-shot performance lets it work well across a variety of image types and scenes.

What can I use it for?

The segment-anything-automatic model can be used for a wide range of applications, including:

- Image analysis: Automatically detect and segment all objects in an image for further analysis.

- Object detection: Use the generated masks to locate specific objects within an image.

- Image editing: Use the precise segmentation masks to selectively modify or remove objects in an image.

- Automation: Integrate the model into image processing pipelines to automate repetitive segmentation tasks.

Things to try

Some things to try with the segment-anything-automatic model include:

- Experiment with the input parameters to see how they affect the generated masks, and find the best settings for your use case.

- Combine the segmentation masks with other computer vision techniques, such as object classification, to build more advanced image processing applications.

- Explore creative applications such as image compositing or digital artwork, where precise segmentation is valuable.

- Compare segment-anything-automatic with similar models, such as segment-anything-everything or the official segment-anything model, to find the best fit for your needs.
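Two of the parameters above correspond to simple, well-known steps in SAM's automatic mask generator. points_per_side sets a regular grid of point prompts, and box_nms_thresh is the IoU cutoff for greedy non-maximum suppression over mask bounding boxes (SAM ranks the boxes by predicted mask quality). The pure-Python sketch below illustrates both steps. It follows the idea behind SAM's point-grid helper and torchvision-style NMS but is not the wrapper's actual code, and the function names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


def point_grid(points_per_side: int) -> List[Tuple[float, float]]:
    """(x, y) prompts in normalized [0, 1] coordinates, centred in each grid cell.

    Equivalent to linspace(1 / (2n), 1 - 1 / (2n), n) along each axis.
    """
    n = points_per_side
    coords = [(2 * i + 1) / (2 * n) for i in range(n)]
    return [(x, y) for y in coords for x in coords]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float = 0.7) -> List[int]:
    """Greedy NMS: visit boxes by descending score and keep a box only if its IoU
    with every already-kept box is at most iou_thresh. Returns kept indices."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(box_iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


# Usage: the default points_per_side=32 gives 32 * 32 = 1024 point prompts.
print(len(point_grid(32)))  # 1024
print(nms([(0, 0, 10, 10), (0, 0, 10, 9), (20, 20, 30, 30)], [0.9, 0.8, 0.95]))  # [2, 0]
```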


sam-vit


The sam-vit model is a variation of the Segment Anything Model (SAM), developed by Meta AI Research, which generates high-quality object masks from input prompts such as points or bounding boxes. SAM was trained on a dataset of 11 million images and 1.1 billion masks, giving it strong zero-shot performance on a variety of segmentation tasks. Like the other SAM variants, such as sam-vit-base and sam-vit-huge, sam-vit uses a Vision Transformer (ViT) as its image encoder; the variants differ mainly in encoder size. The ViT encoder computes image embeddings using attention over patches of the image, with relative positional embeddings. Similar models include fastsam, which aims to provide faster segment-anything capabilities, and ram-grounded-sam, which combines SAM with a strong image tagging model.

Model inputs and outputs

Inputs

- source_image: The input image file to generate segmentation masks for.

Outputs

- Output: The generated segmentation masks for the input image.

Capabilities

The sam-vit model generates high-quality segmentation masks for objects in an image based on input prompts such as points or bounding boxes. This enables precise object-level segmentation, going beyond traditional image segmentation approaches.

What can I use it for?

The sam-vit model can be used in applications that require accurate object-level segmentation, such as:

- Object detection and instance segmentation for computer vision tasks

- Automated image editing and content-aware image manipulation

- Robotic perception and scene understanding

- Medical image analysis and disease diagnosis

Things to try

One interesting aspect of SAM models is automatic mask generation: prompted with a regular grid of points, they can produce masks for every object in an image without user-specified prompts. Their zero-shot ability means this also works on images unlike those seen in training. This can be useful for exploratory data analysis or for generating segmentation masks at scale. Another direction is to combine sam-vit with other AI models, such as ram-grounded-sam, to pair its segmentation capabilities with their image understanding abilities.
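Patch-based attention starts by cutting the image into fixed-size patches, each of which becomes one token. In the original SAM paper, the encoder takes a 1024x1024 input with 16x16 patches, giving a 64x64 grid of 4,096 tokens. The sketch below shows only that patchify step on a single-channel image. `patchify` is an illustrative helper. A real ViT would follow it with a learned linear projection and positional embeddings.

```python
from typing import List, Sequence


def patchify(image: Sequence[Sequence[float]], patch: int) -> List[List[float]]:
    """Split an H x W single-channel image into non-overlapping patch x patch tiles.

    Tiles are returned in row-major order and each is flattened row by row,
    ready to be projected into one token embedding per tile.
    """
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    return [
        [image[r][c] for r in range(top, top + patch) for c in range(left, left + patch)]
        for top in range(0, h, patch)
        for left in range(0, w, patch)
    ]


# Usage: a 4x4 image holding 0..15 splits into four 2x2 patches.
image = [[4 * r + c for c in range(4)] for r in range(4)]
print(patchify(image, 2))
# [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
print((1024 // 16) ** 2)  # 4096 tokens for a SAM-sized input
```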
