neuralneighborstyletransfer

Maintainer: nkolkin13


| Property | Value |
|---|---|
| Model Link | View on Replicate |
| API Spec | View on Replicate |
| Github Link | View on Github |
| Paper Link | View on Arxiv |


Model overview

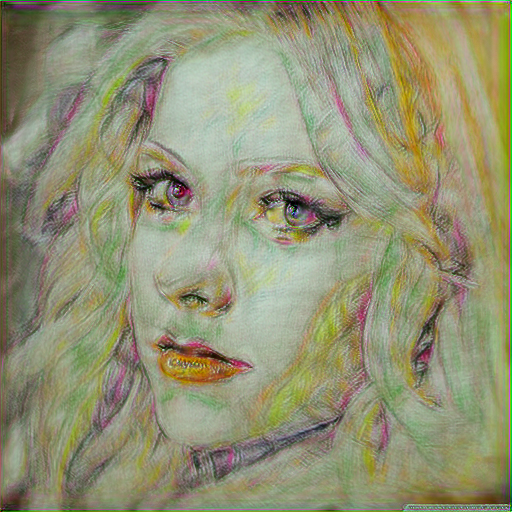

The neuralneighborstyletransfer model transfers the texture and style of one image onto another. It addresses the same task as other style transfer models such as style-transfer, clipstyler, and style-transfer-clip. The model was created by nkolkin13, a researcher at the Toyota Technological Institute at Chicago.

Model inputs and outputs

The neuralneighborstyletransfer model takes two image inputs: a content image and a style image. The content image is the image you want to stylize, while the style image provides the artistic style. The model generates an output image that combines the content of the first image with the style of the second.

Inputs

- Content: The image you want to apply the style to.

- Style: The image that provides the artistic style to be transferred.

Outputs

- Output: The image that combines the content of the first image with the style of the second.
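
The inputs and output listed above could be wired into a request roughly as follows. This is a minimal sketch, not the model's documented interface: the input keys `content`, `style`, and `alpha`, the `[0, 1]` range for alpha, and the `MODEL_REF` string are assumptions (the API spec linked above is the authority), and `build_inputs` and `run_style_transfer` are hypothetical helper names. The call itself uses `replicate.run` from the third-party `replicate` Python client.

```python
from typing import Any, Dict, Optional

# Assumed model reference; a ":<version>" suffix may be required and is not
# given in this document.
MODEL_REF = "nkolkin13/neuralneighborstyletransfer"


def build_inputs(content: Any, style: Any, alpha: Optional[float] = None) -> Dict[str, Any]:
    """Assemble the input payload for one style transfer request.

    The keys "content", "style", and "alpha" are assumed names; check the
    model's API spec for the real ones.
    """
    inputs: Dict[str, Any] = {"content": content, "style": style}
    if alpha is not None:
        # Assumption: alpha is a fraction in [0, 1].
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("alpha is assumed to lie in [0, 1]")
        inputs["alpha"] = alpha
    return inputs


def run_style_transfer(content_path: str, style_path: str,
                       alpha: Optional[float] = None) -> Any:
    """Send both images to the hosted model and return whatever it outputs."""
    # Third-party client, imported lazily so build_inputs works without it.
    # It reads the API token from the REPLICATE_API_TOKEN environment variable.
    import replicate

    with open(content_path, "rb") as content, open(style_path, "rb") as style:
        return replicate.run(MODEL_REF, input=build_inputs(content, style, alpha))
```

Keeping payload construction separate from the network call makes it possible to check the inputs locally before spending a prediction on them.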

Capabilities

The neuralneighborstyletransfer model transfers the texture and style of one image onto another while preserving the content and structure of the original image. It can capture a wide range of artistic styles, from impressionist paintings to abstract expressionism. An adjustable "alpha" parameter controls the balance between content preservation and style transfer.

What can I use it for?

The neuralneighborstyletransfer model can be useful for a variety of creative and artistic applications. It could be used to create unique artwork by applying the style of famous paintings to personal photos or digital illustrations. It could also be used to generate stylized video frames for creative video editing or animation projects. Additionally, the model could be integrated into various design and creative applications to enhance visual content.

Things to try

One interesting thing to try with the neuralneighborstyletransfer model is experimenting with different style images to see how they affect the final output. The model seems to work particularly well with style images that have large, distinct visual elements, such as cubist paintings or abstract expressionist works. You can also try adjusting the "alpha" parameter to find the right balance between content preservation and style transfer for your specific use case.
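
One way to organize this kind of experiment is to enumerate every combination of style image and alpha value up front and then run them one by one. The sketch below only builds the list of jobs; the file names, the alpha values, and the `content`/`style`/`alpha` key names are illustrative assumptions, and `sweep_jobs` is a hypothetical helper.

```python
from itertools import product
from typing import Any, Dict, List, Sequence


def sweep_jobs(content: str, styles: Sequence[str],
               alphas: Sequence[float]) -> List[Dict[str, Any]]:
    """Return one input dict per (style, alpha) combination.

    Key names are assumptions; adjust them to match the model's API spec.
    """
    return [
        {"content": content, "style": style, "alpha": alpha}
        for style, alpha in product(styles, alphas)
    ]


# Placeholder file names and alpha values, chosen only for illustration.
jobs = sweep_jobs(
    "photo.jpg",
    ["cubist.jpg", "abstract.jpg"],
    [0.25, 0.5, 0.75],
)

for job in jobs:
    print(job)
```

Two style images and three alpha values yield six jobs, so the outputs can be laid out as a small grid and compared side by side.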

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

style-transfer


The style-transfer model allows you to transfer the style of one image to another. This can be useful for creating artistic and visually interesting images by blending the content of one image with the style of another. The model is similar to other image manipulation models like become-image and image-merger, which can be used to adapt or combine images in different ways.

Model inputs and outputs

The style-transfer model takes in a content image and a style image, and generates a new image that combines the content of the first image with the style of the second. Users can also provide additional inputs like a prompt, negative prompt, and various parameters to control the output.

Inputs

- Style Image: An image to copy the style from
- Content Image: An image to copy the content from
- Prompt: A description of the desired output image
- Negative Prompt: Things you do not want to see in the output image
- Width/Height: The size of the output image
- Output Format/Quality: The format and quality of the output image
- Number of Images: The number of images to generate
- Structure Depth/Denoising Strength: Controls for the depth and denoising of the output image

Outputs

- Output Images: One or more images generated by the model

Capabilities

The style-transfer model can be used to create unique and visually striking images by blending the content of one image with the style of another. It can be used to transform photographs into paintings, cartoons, or other artistic styles, or to create surreal and imaginative compositions.

What can I use it for?

The style-transfer model could be used for a variety of creative projects, such as generating album covers, book illustrations, or promotional materials. It could also be used to create unique artwork for personal use or to sell on platforms like Etsy or DeviantArt. Additionally, the model could be incorporated into web applications or mobile apps that allow users to experiment with different artistic styles.

Things to try

One interesting thing to try with the style-transfer model is to experiment with different combinations of content and style images. For example, you could take a photograph of a landscape and blend it with the style of a Van Gogh painting, or take a portrait and blend it with the style of a comic book. The model allows for a lot of creative exploration and experimentation.


clipstyler


clipstyler is an AI model developed by Gihyun Kwon and Jong Chul Ye that enables image style transfer with a single text condition. It is similar to models like stable-diffusion, styleclip, and style-clip-draw that leverage text-to-image generation capabilities. However, clipstyler is unique in its ability to transfer the style of an image based on a single text prompt, rather than relying on a reference image.

Model inputs and outputs

The clipstyler model takes two inputs: an image and a text prompt. The image is used as the content that will have its style transferred, while the text prompt specifies the desired style. The model then outputs the stylized image, where the content of the input image has been transformed to match the requested style.

Inputs

- Image: The input image that will have its style transferred
- Text: A text prompt describing the desired style to be applied to the input image

Outputs

- Image: The output image with the input content stylized according to the provided text prompt

Capabilities

clipstyler is capable of transferring the style of an image based on a single text prompt, without requiring a reference image. This allows for more flexibility and creativity in the style transfer process, as users can experiment with a wide range of styles by simply modifying the text prompt. The model leverages the CLIP text-image encoder to learn the relationship between textual style descriptions and visual styles, enabling it to produce high-quality stylized images.

What can I use it for?

The clipstyler model can be used for a variety of creative applications, such as:

- Artistic image generation: Quickly generate stylized versions of your own images or photos, experimenting with different artistic styles and techniques.
- Concept visualization: Bring your ideas to life by generating images that match a specific textual description, useful for designers, artists, and product developers.
- Content creation: Enhance your digital content, such as blog posts, social media graphics, or marketing materials, by applying unique and custom styles to your images.

Things to try

One interesting aspect of clipstyler is its ability to produce diverse and unexpected results by experimenting with different text prompts. Try prompts that combine multiple styles or emotions, or explore abstract concepts like "surreal" or "futuristic" to see how the model interprets and translates these ideas into visual form. The variety of outcomes can spark new creative ideas and inspire you to push the boundaries of what's possible with text-driven style transfer.


adaattn


adaattn is an AI model for Arbitrary Neural Style Transfer, developed by Huage001. It is a re-implementation of the paper "AdaAttN: Revisit Attention Mechanism in Arbitrary Neural Style Transfer" published at ICCV 2021. This model aims to improve upon traditional neural style transfer approaches by introducing a novel attention mechanism. Similar models like stable-diffusion, gfpgan, stylemc, stylegan3-clip, and stylized-neural-painting-oil also explore different techniques for image generation and manipulation.

Model inputs and outputs

The adaattn model takes two inputs: a content image and a style image. It then generates a new image that combines the content of the first image with the artistic style of the second. This allows users to apply various artistic styles to their own photos or other images.

Inputs

- Content: The input content image
- Style: The input style image

Outputs

- Output: The generated image that combines the content and style

Capabilities

The adaattn model can be used to apply a wide range of artistic styles to input images, from impressionist paintings to abstract expressionist works. It does this by learning the style features from the input style image and then transferring those features to the content image in a seamless way.

What can I use it for?

The adaattn model can be useful for various creative and artistic applications, such as generating unique artwork, enhancing photos with artistic filters, or creating custom images for design projects. It can also be used as a tool for educational or experimental purposes, allowing users to explore the interplay between content and style in visual media.

Things to try

One interesting aspect of the adaattn model is its ability to handle a wide range of style inputs, from classical paintings to modern digital art. Users can experiment with different style images to see how the model interprets and applies them to various content. Additionally, the model provides options for user control, allowing for more fine-tuned adjustments to the output.


magic-style-transfer


The magic-style-transfer model restyles images with the style of another image. Developed by batouresearch, this model is an alternative to other style transfer models like style-transfer. It can also be used in conjunction with the magic-image-refiner model to further enhance the quality and detail of the results.

Model inputs and outputs

The magic-style-transfer model takes several inputs, including an input image, a prompt, and optional parameters like seed, IP image, and LoRA weights. The model then generates one or more output images that have the style of the input image applied to them.

Inputs

- Image: The input image to be restyled
- Prompt: A text prompt describing the desired output
- Seed: A random seed to control the output
- IP Image: An additional input image for img2img or inpaint mode
- IP Scale: The strength of the IP Adapter
- Strength: The denoising strength when img2img is active
- Scheduler: The scheduler to use
- LoRA Scale: The LoRA additive scale
- Num Outputs: The number of images to generate
- LoRA Weights: The Replicate LoRA weights to use
- Guidance Scale: The scale for classifier-free guidance
- Resizing Scale: The scale of the solid margin
- Apply Watermark: Whether to apply a watermark to the output
- Negative Prompt: A negative prompt to guide the output
- Background Color: The color to replace the alpha channel with
- Num Inference Steps: The number of denoising steps
- Condition Canny Scale: The scale for the Canny edge condition
- Condition Depth Scale: The scale for the depth condition

Outputs

- Output Images: One or more images with the input image's style applied

Capabilities

The magic-style-transfer model can apply the style of one image to another, creating unique and visually striking results. It can handle a wide range of input images and prompts, and the ability to fine-tune the model with LoRA weights adds an extra level of customization.

What can I use it for?

The magic-style-transfer model suits creative projects such as generating art, designing album covers, or creating unique visual content for social media. By combining the style of one image with the content of another, you can produce original imagery. The model can also be used in commercial applications, such as product visualizations or marketing materials, where a distinctive visual style is desired.

Things to try

One interesting aspect of the magic-style-transfer model is its ability to handle a variety of input types, from natural images to more abstract or stylized artwork. Try experimenting with different input images and prompts to see how the model responds, and don't be afraid to push the boundaries of what it can do. You might be surprised by the unique and unexpected results you can achieve.
