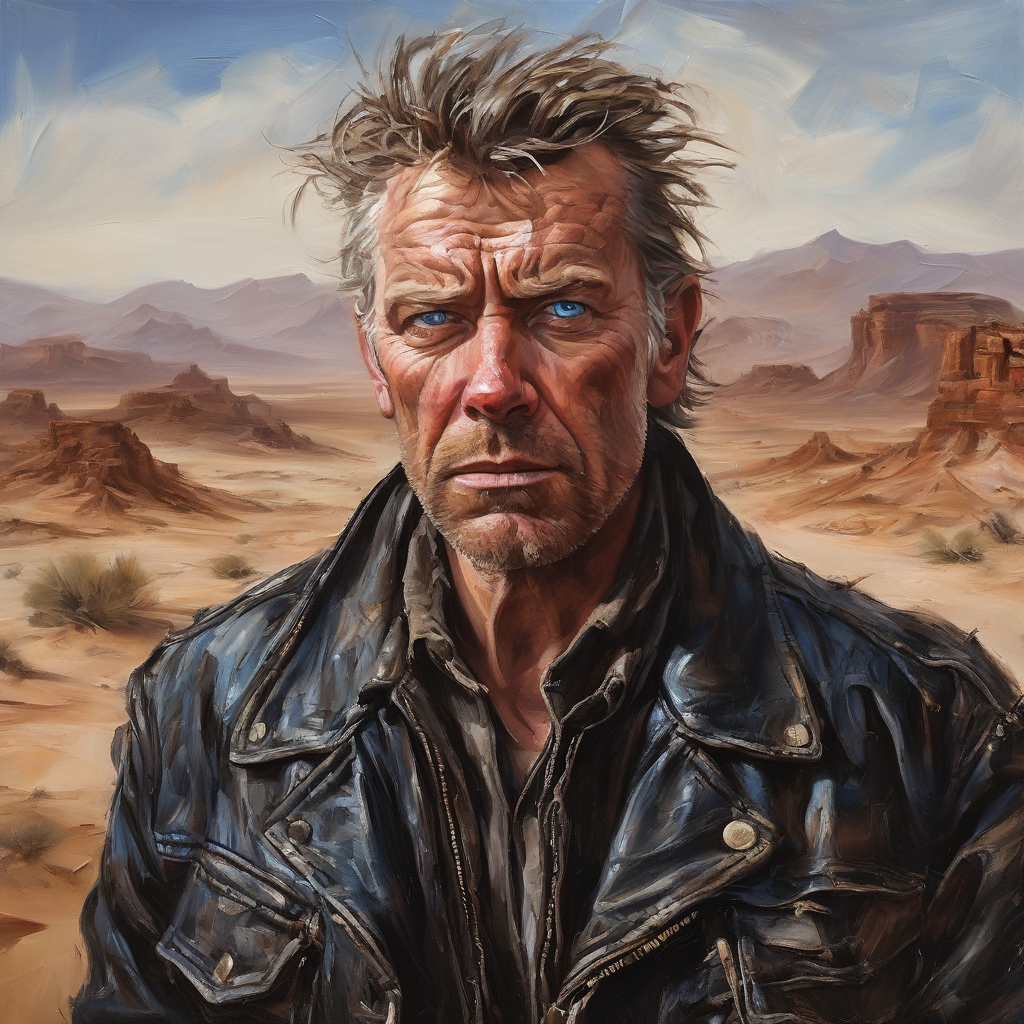

profile-avatar

Maintainer: jyoung105

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | No GitHub link provided |
| Paper link | No paper link provided |

Model overview

The profile-avatar model generates photorealistic profile images from an input face image. It was developed by Replicate creator jyoung105 and sits alongside related tools such as instant-style, playground-v2.5, sdxl-lightning-4step, openjourney-img2img, and gfpgan. It combines HDR improvement, pose reference, and depth control to produce personalized profile images that preserve the subject's appearance.

Model inputs and outputs

The profile-avatar model takes a face image, a text prompt, a pose reference image, and a set of numeric settings that control the output. It returns an array of generated profile images.

Inputs

- Image: The input face image

- Prompt: The text prompt that describes the desired image

- Pose Image: A reference image to guide the pose of the generated profile

- Width: The width of the output image

- Height: The height of the output image

- Gender: The gender of the character in the generated profile

- Guidance Scale: The scale for classifier-free guidance

- Num Inference Steps: The number of denoising steps used to generate the image

- Resemblance: The conditioning scale for the ControlNet

- Creativity: The denoising strength, with 1 meaning total destruction of the original image

- Pose Strength: The Openpose ControlNet strength

- Depth Strength: The Depth ControlNet strength

- Seed: The random seed used to generate the image
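The Creativity and Num Inference Steps inputs interact. The sketch below assumes the model follows the common image-to-image convention used by Hugging Face diffusers pipelines, where the denoising strength scales how many of the scheduled steps are actually run. The source does not confirm that profile-avatar works this way, and the function name is hypothetical.

```python
def effective_denoising_steps(num_inference_steps: int, creativity: float) -> int:
    """Estimate how many denoising steps run for a given strength.

    Assumption: the diffusers-style img2img rule
    min(int(steps * strength), steps). Not confirmed for profile-avatar.
    """
    if num_inference_steps < 1:
        raise ValueError("num_inference_steps must be at least 1")
    if not 0.0 <= creativity <= 1.0:
        raise ValueError("creativity must be between 0 and 1")
    return min(int(num_inference_steps * creativity), num_inference_steps)


if __name__ == "__main__":
    # Half strength runs half of the scheduled steps under this convention.
    print(effective_denoising_steps(30, 0.5))
```

Under this convention, a Creativity of 1 runs every step and starts from pure noise, which matches the description of 1 as total destruction of the original image.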

Outputs

- Output Images: An array of generated profile images
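As a minimal sketch of how the inputs above might be assembled into a request payload, the helper below builds a plain dictionary. The snake_case field names (`pose_image`, `guidance_scale`, and so on) are assumptions derived from the input labels, not taken from the model's published API spec, and the helper name is hypothetical. The only range check applied is the one the input list implies: Creativity tops out at 1.

```python
from typing import Any, Dict, Optional

# Assumed field names, derived from the input labels listed above.
ASSUMED_SETTINGS = {
    "width", "height", "guidance_scale", "num_inference_steps",
    "resemblance", "creativity", "pose_strength", "depth_strength", "seed",
}


def build_profile_avatar_input(
    image: str,
    prompt: str,
    pose_image: Optional[str] = None,
    gender: Optional[str] = None,
    **settings: Any,
) -> Dict[str, Any]:
    """Build an input payload, leaving unset options to the model's defaults."""
    unknown = set(settings) - ASSUMED_SETTINGS
    if unknown:
        raise ValueError(f"unknown settings: {sorted(unknown)}")

    creativity = settings.get("creativity")
    if creativity is not None and not 0.0 <= creativity <= 1.0:
        raise ValueError("creativity must be between 0 and 1")

    payload: Dict[str, Any] = {"image": image, "prompt": prompt}
    if pose_image is not None:
        payload["pose_image"] = pose_image
    if gender is not None:
        payload["gender"] = gender
    payload.update(settings)
    return payload


if __name__ == "__main__":
    print(build_profile_avatar_input(
        "https://example.com/face.png",  # placeholder URL
        "studio headshot, soft lighting",
        creativity=0.5,
        seed=42,
    ))
```

A dictionary like this could be passed as the `input` argument to `replicate.run` from the Replicate Python client, together with the model identifier shown on its Replicate page. The result would then be the array of output images described above.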

Capabilities

The profile-avatar model produces photorealistic profile images that preserve the subject's likeness. Pose reference and depth control are exposed as separate ControlNet strengths for steering the composition, and HDR improvement is part of the pipeline.

What can I use it for?

Typical uses include profile pictures for social media, custom avatars for online platforms, and professional-looking headshots for business purposes. Because the output closely resembles the person in the input image, the model suits both individuals and businesses.

Things to try

Experiment with the pose reference, depth control, and creativity settings to produce profile images with different moods, styles, and characteristics. The output can also be passed to other tools, such as the gfpgan model, to enhance and refine the generated images further.
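One way to structure this kind of experimentation is a small grid of settings with a fixed seed, so that differences between outputs come from the settings rather than from random noise. The sketch below only generates the combinations. The field names are assumed from the input labels, the function name is hypothetical, and the values are illustrative rather than recommended.

```python
from itertools import product
from typing import Dict, List, Sequence


def sweep_settings(
    creativity_values: Sequence[float],
    pose_strengths: Sequence[float],
    depth_strengths: Sequence[float],
    seed: int = 0,
) -> List[Dict[str, float]]:
    """Return one settings dict per combination, all sharing the same seed."""
    return [
        {
            "creativity": creativity,          # assumed field name
            "pose_strength": pose_strength,    # assumed field name
            "depth_strength": depth_strength,  # assumed field name
            "seed": seed,
        }
        for creativity, pose_strength, depth_strength in product(
            creativity_values, pose_strengths, depth_strengths
        )
    ]


if __name__ == "__main__":
    for settings in sweep_settings([0.3, 0.6], [0.5, 1.0], [0.5]):
        print(settings)
```

Each dictionary can be merged into the rest of the model inputs for one run. Keeping the face image and prompt constant across the grid makes the outputs directly comparable.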

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

playground-v2.5

The playground-v2.5 model is a state-of-the-art text-to-image model developed by jyoung105. It is described as offering "turbo speed" performance for text-to-image generation, making it a fast and efficient option compared to similar models like clip-interrogator-turbo and playground-v2.5-1024px-aesthetic.

Model inputs and outputs

The playground-v2.5 model takes a variety of inputs, including a prompt, an optional input image, and various settings to control the output. The outputs are one or more generated images, which can be customized in terms of resolution and other parameters.

Inputs

- Prompt: The input text prompt that describes the desired image.

- Image: An optional input image that can be used for image-to-image or inpainting tasks.

- Width/Height: The desired width and height of the output image.

- Num Outputs: The number of images to generate (up to 4).

- Guidance Scale: A parameter that controls the strength of the guidance during the image generation process.

- Negative Prompt: A text prompt that describes undesirable elements to exclude from the generated image.

- Num Inference Steps: The number of denoising steps to perform during the image generation process.

Outputs

- Generated Images: The model outputs one or more images based on the provided input prompt and settings.

Capabilities

The playground-v2.5 model is capable of generating high-quality, photorealistic images from text prompts. It can handle a wide range of subject matter and styles, and is particularly well-suited for tasks like product visualization, scene generation, and concept art. The model's speed and efficiency make it a practical choice for real-world applications.

What can I use it for?

The playground-v2.5 model can be used for a variety of creative and commercial applications. For example, it could be used to generate product renderings, concept art for games or movies, or custom stock imagery. Businesses could leverage the model to create visuals for marketing materials, website design, or e-commerce product listings. Creatives could use it to explore and visualize ideas, or to quickly generate reference images for their own artwork.

Things to try

One interesting aspect of the playground-v2.5 model is its ability to handle complex, multi-part prompts. Try experimenting with prompts that combine various elements, such as specific objects, characters, environments, and styles. You can also try using the model for image-to-image tasks, such as inpainting or style transfer, to see how it handles more complex input scenarios.

portraitplus

portraitplus is a model developed by Replicate user cjwbw that focuses on generating high-quality portraits in the "portrait+" style. It is similar to other Stable Diffusion models created by cjwbw, such as stable-diffusion-v2-inpainting, stable-diffusion-2-1-unclip, analog-diffusion, and anything-v4.0. These models aim to produce highly detailed and realistic images, often with a particular artistic style.

Model inputs and outputs

portraitplus takes a text prompt as input and generates one or more images as output. The input prompt can describe the desired portrait, including details about the subject, style, and other characteristics. The model then uses this prompt to create a corresponding image.

Inputs

- Prompt: The text prompt describing the desired portrait

- Seed: A random seed value to control the initial noise used for image generation

- Width and Height: The desired dimensions of the output image

- Scheduler: The algorithm used to control the diffusion process

- Guidance Scale: The amount of guidance the model should use to adhere to the provided prompt

- Negative Prompt: Text describing what the model should avoid including in the generated image

Outputs

- Image(s): One or more images generated based on the input prompt

Capabilities

portraitplus can generate highly detailed and realistic portraits in a variety of styles, from photorealistic to more stylized or artistic renderings. The model is particularly adept at capturing the nuances of facial features, expressions, and lighting to create compelling and lifelike portraits.

What can I use it for?

portraitplus could be used for a variety of applications, such as digital art, illustration, concept design, and even personalized portrait commissions. The model's ability to generate unique and expressive portraits can make it a valuable tool for creative professionals or hobbyists looking to explore new artistic avenues.

Things to try

One interesting aspect of portraitplus is its ability to generate portraits with a diverse range of subjects and styles. You could experiment with prompts that describe historical figures, fictional characters, or even abstract concepts to see how the model interprets and visualizes them. Additionally, you could try adjusting the input parameters, such as the guidance scale or number of inference steps, to find the optimal settings for your desired output.

dream

dream is a text-to-image generation model created by Replicate user xarty8932. It is similar to other popular text-to-image models like SDXL-Lightning, k-diffusion, and Stable Diffusion, which can generate photorealistic images from textual descriptions. However, the specific capabilities and inner workings of dream are not clearly documented.

Model inputs and outputs

dream takes in a variety of inputs to generate images, including a textual prompt, image dimensions, a seed value, and optional modifiers like guidance scale and refine steps. The model outputs one or more generated images in the form of image URLs.

Inputs

- Prompt: The text description that the model will use to generate the image

- Width/Height: The desired dimensions of the output image

- Seed: A random seed value to control the image generation process

- Refine: The style of refinement to apply to the image

- Scheduler: The scheduler algorithm to use during image generation

- Lora Scale: The additive scale for LoRA (Low-Rank Adaptation) weights

- Num Outputs: The number of images to generate

- Refine Steps: The number of steps to use for refine-based image generation

- Guidance Scale: The scale for classifier-free guidance

- Apply Watermark: Whether to apply a watermark to the generated images

- High Noise Frac: The fraction of noise to use for the expert_ensemble_refiner

- Negative Prompt: A text description for content to avoid in the generated image

- Prompt Strength: The strength of the input prompt when using img2img or inpaint modes

- Replicate Weights: LoRA weights to use for the image generation

Outputs

- One or more generated image URLs

Capabilities

dream is a text-to-image generation model, meaning it can create images based on textual descriptions. It appears to have similar capabilities to other popular models like Stable Diffusion, being able to generate a wide variety of photorealistic images from diverse prompts. However, the specific quality and fidelity of the generated images is not clear from the available information.

What can I use it for?

dream could be used for a variety of creative and artistic applications, such as generating concept art, illustrations, or product visualizations. The ability to create images from text descriptions opens up possibilities for automating image creation, enhancing creative workflows, or even generating custom visuals for things like video games, films, or marketing materials. However, the limitations and potential biases of the model should be carefully considered before deploying it in a production setting.

Things to try

Some ideas for experimenting with dream include:

- Trying out a wide range of prompts to see the diversity of images the model can generate

- Exploring the impact of different hyperparameters like guidance scale, refine steps, and lora scale on the output quality

- Comparing the results of dream to other text-to-image models like Stable Diffusion or SDXL-Lightning to understand its unique capabilities

- Incorporating dream into a creative workflow or production pipeline to assess its practical usefulness and limitations

my_comfyui

my_comfyui is an AI model developed by 135arvin that allows users to run ComfyUI, a popular open-source AI tool, via an API. This model provides a convenient way to integrate ComfyUI functionality into your own applications or workflows without the need to set up and maintain the full ComfyUI environment. It can be particularly useful for those who want to leverage the capabilities of ComfyUI without the overhead of installing and configuring the entire system.

Model inputs and outputs

The my_comfyui model accepts two key inputs: an input file (image, tar, or zip) and a JSON workflow. The input file can be a source image, while the workflow JSON defines the specific image generation or manipulation steps to be performed. The model also allows for optional parameters, such as randomizing seeds and returning temporary files for debugging purposes.

Inputs

- Input File: Input image, tar or zip file. Read guidance on workflows and input files on the ComfyUI GitHub repository.

- Workflow JSON: Your ComfyUI workflow as JSON. You must use the API version of your workflow, which can be obtained from ComfyUI using the "Save (API format)" option.

- Randomise Seeds: Automatically randomize seeds (seed, noise_seed, rand_seed).

- Return Temp Files: Return any temporary files, such as preprocessed controlnet images, which can be useful for debugging.

Outputs

- Output: An array of URIs representing the generated or manipulated images.

Capabilities

The my_comfyui model allows you to leverage the full capabilities of the ComfyUI system, which is a powerful open-source tool for image generation and manipulation. With this model, you can integrate ComfyUI's features, such as text-to-image generation, image-to-image translation, and various image enhancement and post-processing techniques, into your own applications or workflows.

What can I use it for?

The my_comfyui model can be particularly useful for developers and creators who want to incorporate advanced AI-powered image generation and manipulation capabilities into their projects. This could include applications such as generative art, content creation, product visualization, and more. By using the my_comfyui model, you can save time and effort in setting up and maintaining the ComfyUI environment, allowing you to focus on building and integrating the AI functionality into your own solutions.

Things to try

With the my_comfyui model, you can explore a wide range of creative and practical applications. For example, you could use it to generate unique and visually striking images for your digital art projects, or to enhance and refine existing images for use in your design work. Additionally, you could integrate the model into your own applications or services to provide automated image generation or manipulation capabilities to your users.
