piano-transcription

Maintainer: bytedance


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on arXiv |


Model overview

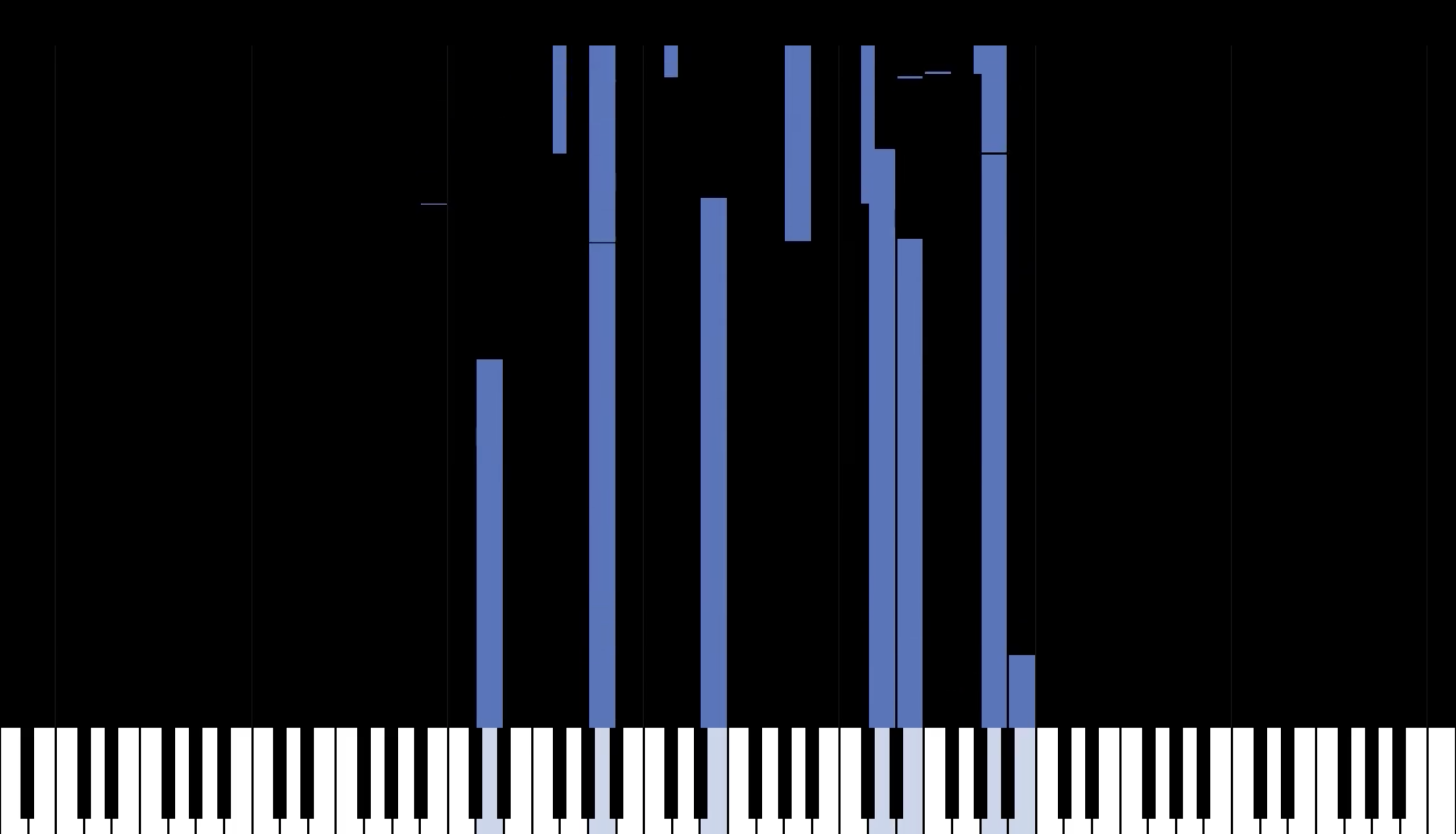

The piano-transcription model is a high-resolution piano transcription system developed by ByteDance that detects piano notes in audio recordings. It converts piano recordings into MIDI files, a compact format that makes performances easy to store, edit, and analyze. Related models on the platform include cantable-diffuguesion for generating and harmonizing Bach chorales, musicgen-fine-tuner for fine-tuning music generation models, whisperx for accelerated speech transcription, and, outside the audio domain, stable-diffusion for generating photorealistic images from text.

Model inputs and outputs

The piano-transcription model takes an audio file as input and outputs a MIDI file representing the transcribed piano performance. It detects piano notes along with their onsets, offsets, and velocities, enabling detailed, high-resolution transcription.

Inputs

- audio_input: The input audio file to be transcribed

Outputs

- Output: The transcribed MIDI file representing the piano performance
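As a rough sketch of how the input and output above might be used from Python, the snippet below calls the model through the `replicate` client package. The `audio_input` field name comes from the inputs list; the versionless model reference, the helper names `save_output` and `transcribe`, and the default output filename are assumptions for illustration. Depending on the client version, a `:version` suffix on the reference may be required, and the returned value may be a file-like object or a URL, so the helper handles both.

```python
from pathlib import Path
from urllib.request import urlopen

# Assumed model reference: the page names the maintainer and model, but no
# version hash. Append ":<version>" if your client requires one.
MODEL_REF = "bytedance/piano-transcription"


def save_output(output, midi_path: str) -> Path:
    """Write the model output to disk, whether it is file-like or a URL."""
    if hasattr(output, "read"):
        data = output.read()
    else:
        # Older client versions return a URL string pointing at the file.
        with urlopen(str(output)) as response:
            data = response.read()
    path = Path(midi_path)
    path.write_bytes(data)
    return path


def transcribe(audio_path: str, midi_path: str = "transcription.mid") -> Path:
    """Send an audio file to the hosted model and save the returned MIDI."""
    # Third-party client (pip install replicate); it reads the API token
    # from the REPLICATE_API_TOKEN environment variable.
    import replicate

    with open(audio_path, "rb") as audio:
        output = replicate.run(MODEL_REF, input={"audio_input": audio})
    return save_output(output, midi_path)
```

Calling `transcribe("recording.mp3")` would then upload the (hypothetical) file `recording.mp3` and write the result to `transcription.mid`.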

Capabilities

The piano-transcription model is capable of accurately detecting and transcribing piano performances, even for complex, virtuosic pieces. It can capture nuanced details like pedal use, note velocity, and precise onset and offset times. This makes it a valuable tool for musicians, composers, and music enthusiasts who want to digitize and analyze piano recordings.
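To make the note-level details above concrete, here is a minimal sketch of how one transcribed note could be represented once the MIDI file has been parsed. The `NoteEvent` class, its field names, and the `notes_sounding_at` helper are hypothetical and are not part of the model's API; the only outside facts used are the standard MIDI conventions that note number 60 is C4 and that velocities range from 0 to 127.

```python
from dataclasses import dataclass

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


@dataclass
class NoteEvent:
    """One transcribed note (hypothetical structure, for illustration only)."""
    onset: float    # start time in seconds
    offset: float   # end time in seconds
    pitch: int      # MIDI note number; an 88-key piano spans 21 (A0) to 108 (C8)
    velocity: int   # MIDI velocity, 0 to 127

    @property
    def duration(self) -> float:
        return self.offset - self.onset

    @property
    def name(self) -> str:
        # MIDI note 60 maps to C4 under the common octave convention.
        return f"{NOTE_NAMES[self.pitch % 12]}{self.pitch // 12 - 1}"


def notes_sounding_at(notes: list[NoteEvent], time: float) -> list[NoteEvent]:
    """Return the notes whose onset/offset interval contains the given time."""
    return [n for n in notes if n.onset <= time < n.offset]
```

A structure like this is enough to ask simple analytical questions, such as which notes form the chord sounding at a given moment or how loud the average note is.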

What can I use it for?

The piano-transcription model can be used for a variety of applications, such as converting legacy analog recordings into digital MIDI files, creating sheet music from live performances, and building large-scale classical piano MIDI datasets like the GiantMIDI-Piano dataset developed by the model's creators. This can enable further research and development in areas like music information retrieval, score-informed source separation, and music generation.

Things to try

Experiment with the piano-transcription model by transcribing a variety of piano performances, from classical masterpieces to modern pop songs. Observe how the model handles different styles, dynamics, and pedal use. You can also try combining the transcribed MIDI files with other music AI tools, such as musicgen, to create new and innovative music compositions.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

sdxl-lightning-4step


sdxl-lightning-4step is a fast text-to-image model developed by ByteDance that can generate high-quality images in just 4 steps. It is similar to other fast diffusion models like AnimateDiff-Lightning and Instant-ID MultiControlNet, which also aim to speed up the image generation process. Unlike the original Stable Diffusion model, these fast models sacrifice some flexibility and control to achieve faster generation times.

Model inputs and outputs

The sdxl-lightning-4step model takes in a text prompt and various parameters to control the output image, such as the width, height, number of images, and guidance scale. The model can output up to 4 images at a time, with a recommended image size of 1024x1024 or 1280x1280 pixels.

Inputs

- Prompt: The text prompt describing the desired image
- Negative prompt: A prompt that describes what the model should not generate
- Width: The width of the output image
- Height: The height of the output image
- Num outputs: The number of images to generate (up to 4)
- Scheduler: The algorithm used to sample the latent space
- Guidance scale: The scale for classifier-free guidance, which controls the trade-off between fidelity to the prompt and sample diversity
- Num inference steps: The number of denoising steps, with 4 recommended for best results
- Seed: A random seed to control the output image

Outputs

- Image(s): One or more images generated based on the input prompt and parameters

Capabilities

The sdxl-lightning-4step model is capable of generating a wide variety of images based on text prompts, from realistic scenes to imaginative and creative compositions. The model's 4-step generation process allows it to produce high-quality results quickly, making it suitable for applications that require fast image generation.

What can I use it for?

The sdxl-lightning-4step model could be useful for applications that need to generate images in real-time, such as video game asset generation, interactive storytelling, or augmented reality experiences. Businesses could also use the model to quickly generate product visualization, marketing imagery, or custom artwork based on client prompts. Creatives may find the model helpful for ideation, concept development, or rapid prototyping.

Things to try

One interesting thing to try with the sdxl-lightning-4step model is to experiment with the guidance scale parameter. By adjusting the guidance scale, you can control the balance between fidelity to the prompt and diversity of the output. Lower guidance scales may result in more unexpected and imaginative images, while higher scales will produce outputs that are closer to the specified prompt.


omnizart

music-and-culture-technology-lab


Omnizart is a Python library developed by the Music and Culture Technology (MCT) Lab that aims to democratize automatic music transcription. It can transcribe various musical elements such as pitched instruments, vocal melody, chords, drum events, and beat from polyphonic audio. Omnizart is powered by research outcomes from the MCT Lab and has been published in the Journal of Open Source Software (JOSS). Similar AI models in this domain include music-classifiers for music classification, piano-transcription for high-resolution piano transcription, mustango for controllable text-to-music generation, and musicgen for music generation from prompts or melodies.

Model inputs and outputs

Omnizart takes an audio file in MP3 or WAV format as input and can output transcriptions for various musical elements.

Inputs

- audio: Path to the input music file in MP3 or WAV format.
- mode: The specific transcription task to perform, such as music-piano, chord, drum, vocal, vocal-contour, or beat.

Outputs

The output is an array of objects, where each object contains:

- file: The path to the input audio file.
- text: The transcription result as text.

Capabilities

Omnizart can transcribe a wide range of musical elements, including pitched instruments, vocal melody, chords, drum events, and beat. This allows users to extract structured musical information from audio recordings, enabling applications such as music analysis, music information retrieval, and computer-assisted music composition.

What can I use it for?

With Omnizart, you can transcribe your favorite songs and explore the underlying musical structure. The transcriptions can be used for various purposes, such as:

- Music analysis: Analyze the harmonic progressions, rhythmic patterns, and melodic lines of a piece of music.
- Music information retrieval: Extract relevant metadata from audio recordings, such as chord changes, drum patterns, and melody, to enable more sophisticated music search and recommendations.
- Computer-assisted music composition: Use the transcribed musical elements as a starting point for creating new compositions or arrangements.

Things to try

Try using Omnizart to transcribe different genres of music and explore the nuances in how it handles various musical elements. You can also experiment with the different transcription modes to see how the results vary and gain insights into the strengths and limitations of the model.


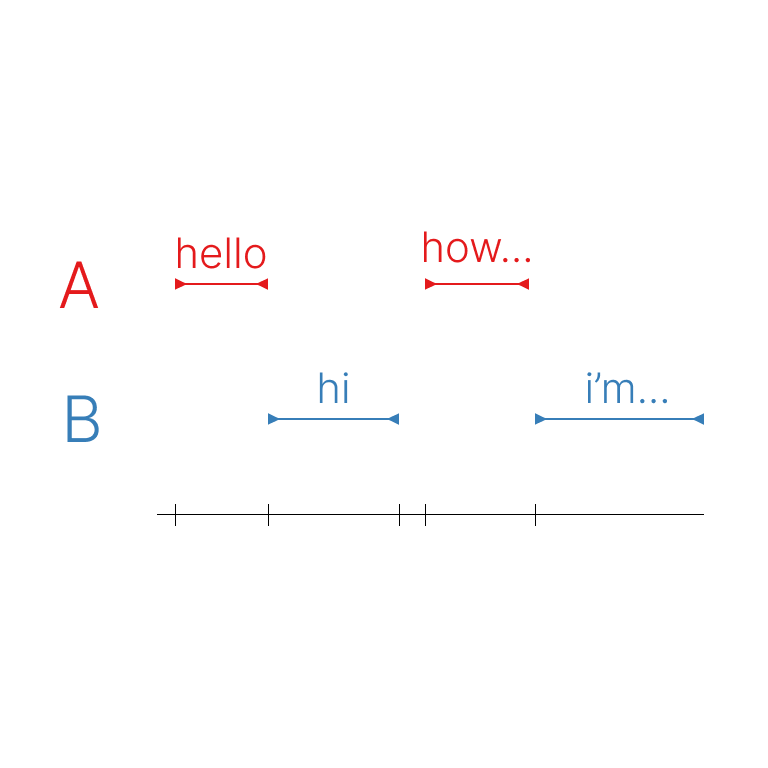

speaker-transcription


The speaker-transcription model is a powerful AI system that combines speaker diarization and speech transcription capabilities. It was developed by Meronym, a creator on the Replicate platform. This model builds upon two main components: the pyannote.audio speaker diarization pipeline and OpenAI's whisper model for general-purpose English speech transcription.

The speaker-transcription model outperforms similar models like whisper-diarization and whisperx by providing more accurate speaker segmentation and identification, as well as high-quality transcription. It can be particularly useful for tasks that require both speaker information and verbatim transcripts, such as interview analysis, podcast processing, or meeting recordings.

Model inputs and outputs

The speaker-transcription model takes an audio file as input and can optionally accept a prompt string to guide the transcription. The model outputs a JSON file containing the transcribed segments, with each segment associated with a speaker label and timestamps.

Inputs

- Audio: An audio file in a supported format, such as MP3, AAC, FLAC, OGG, OPUS, or WAV.
- Prompt (optional): A text prompt that can be used to provide additional context for the transcription.

Outputs

- JSON file: A JSON file with the following structure:
  - segments: A list of transcribed segments, each with a speaker label, start and stop timestamps, and the segment transcript.
  - speakers: Information about the detected speakers, including the total count, labels for each speaker, and embeddings (a vector representation of each speaker's voice).

Capabilities

The speaker-transcription model excels at accurately identifying and labeling different speakers within an audio recording, while also providing high-quality transcripts of the spoken content. This makes it a valuable tool for a variety of applications, such as interview analysis, podcast processing, or meeting recordings.

What can I use it for?

The speaker-transcription model can be used for data augmentation and segmentation tasks, where the speaker information and timestamps can be used to improve the accuracy and effectiveness of transcription and captioning models. Additionally, the speaker embeddings generated by the model can be used for speaker recognition, allowing you to match voice profiles against a database of known speakers.

Things to try

One interesting aspect of the speaker-transcription model is the ability to use a prompt to guide the transcription. By providing additional context about the topic or subject matter, you can potentially improve the accuracy and relevance of the transcripts. Try experimenting with different prompts to see how they affect the output.

Another useful feature is the generation of speaker embeddings, which can be used for speaker recognition and identification tasks. Consider exploring ways to leverage these embeddings, such as building a speaker verification system or clustering speakers in large audio datasets.
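The JSON output of speaker-transcription lends itself to simple post-processing. The sketch below groups segment transcripts by speaker label using only the standard library. The key names `segments`, `speaker`, and `transcript` are assumptions inferred from the description of the output structure, and the function name `transcripts_by_speaker` is hypothetical; check them against a real output file before relying on them.

```python
import json
from collections import defaultdict


def transcripts_by_speaker(raw_json: str) -> dict[str, list[str]]:
    """Group segment transcripts by speaker label, preserving segment order."""
    data = json.loads(raw_json)
    grouped: dict[str, list[str]] = defaultdict(list)
    # Assumed layout: {"segments": [{"speaker": ..., "transcript": ...}, ...]}
    for segment in data["segments"]:
        grouped[segment["speaker"]].append(segment["transcript"])
    return dict(grouped)


# Made-up sample data in the assumed layout; not real model output.
sample = json.dumps({
    "segments": [
        {"speaker": "A", "transcript": "Welcome to the show."},
        {"speaker": "B", "transcript": "Thanks for having me."},
        {"speaker": "A", "transcript": "Let's get started."},
    ]
})
print(transcripts_by_speaker(sample))
```

The same loop could be extended to read the timestamps of each segment, for example to compute speaking time per person, once their format in the real output is known.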


music-inpainting-bert


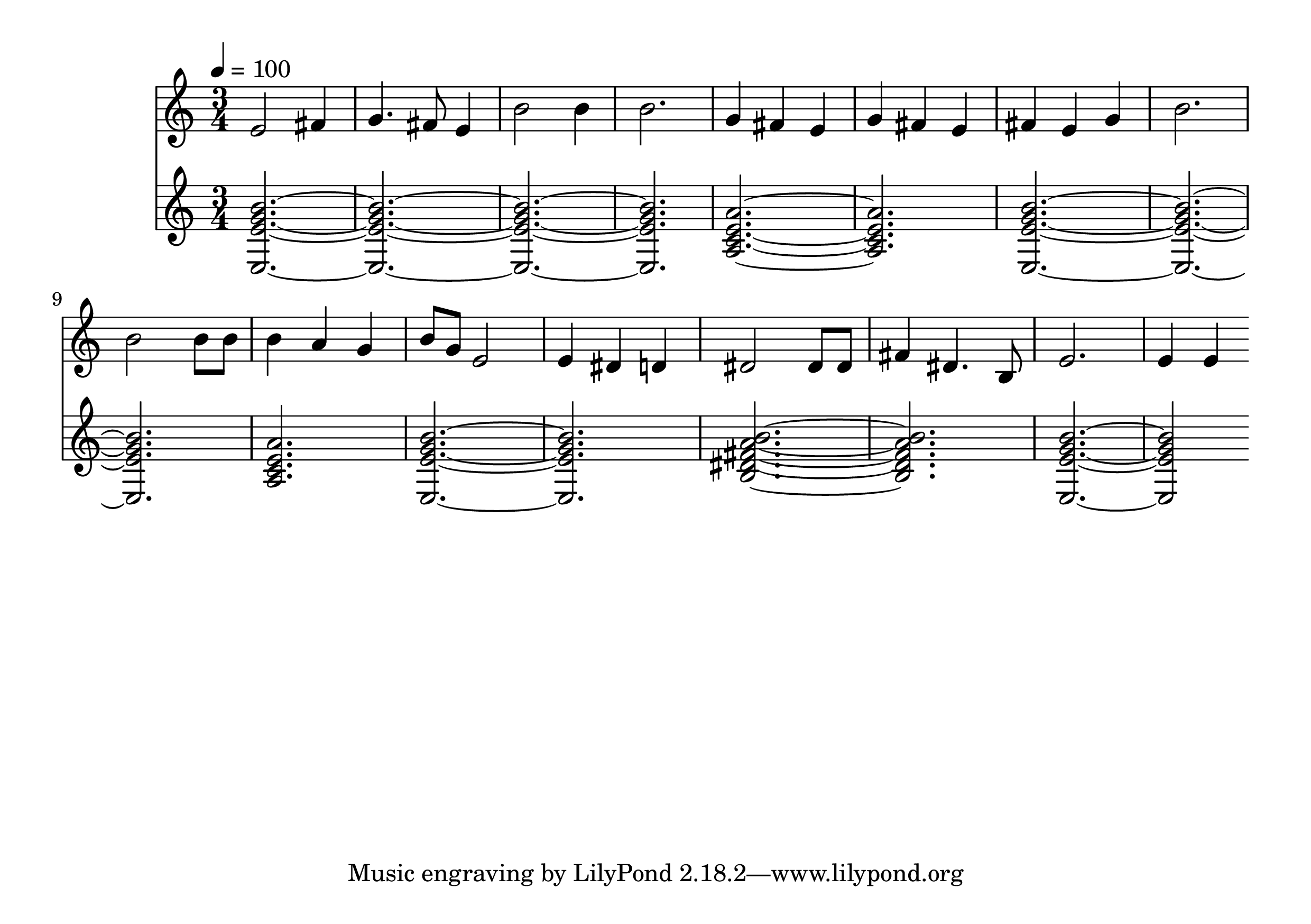

The music-inpainting-bert model is a custom BERT model developed by Andreas Jansson that can jointly inpaint both melody and chords in a piece of music. This model is similar to other models created by Andreas Jansson, such as cantable-diffuguesion for Bach chorale generation and harmonization, stable-diffusion-wip for inpainting in Stable Diffusion, and clip-features for extracting CLIP features.

Model inputs and outputs

The music-inpainting-bert model takes as input beat-quantized chord labels and beat-quantized melodic patterns, and can output a completion of the melody and chords. The inputs are represented using a look-up table, where melodies are split into beat-sized chunks and quantized to 16th notes.

Inputs

- Notes: Notes in tinynotation, with each bar separated by '|'. Use '?' for bars you want in-painted.
- Chords: Chords (one chord per bar), with each bar separated by '|'. Use '?' for bars you want in-painted.
- Tempo: Tempo in beats per minute.
- Time Signature: The time signature.
- Sample Width: The number of potential predictions to sample from. The higher the value, the more chaotic the output.
- Seed: The random seed, with -1 for a random seed.

Outputs

- Mp3: The generated music as an MP3 file.
- Midi: The generated music as a MIDI file.
- Score: The generated music as a score.

Capabilities

The music-inpainting-bert model can be used to jointly inpaint both melody and chords in a piece of music. This can be useful for tasks like music composition, where the model can be used to generate new musical content or complete partial compositions.

What can I use it for?

The music-inpainting-bert model can be used for a variety of music-related projects, such as:

- Generating new musical compositions by providing partial input and letting the model fill in the gaps
- Completing or extending existing musical pieces by providing a starting point and letting the model generate the rest
- Experimenting with different musical styles and genres by providing prompts and exploring the model's outputs

Things to try

One interesting thing to try with the music-inpainting-bert model is to provide partial input with a mix of known and unknown elements, and see how the model fills in the gaps. This can be a great way to spark new musical ideas or explore different compositional possibilities.
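The '|' and '?' conventions for the music-inpainting-bert Notes and Chords inputs can be sketched as a small string-building helper. The function names, the lowercase dictionary keys `notes` and `chords`, the spacing around '|', and the requirement that both inputs have the same number of bars are all assumptions for illustration; the bar contents are made-up examples in tinynotation style.

```python
def mask_bars(bars: list[str], masked: set[int]) -> str:
    """Join bars with '|' and replace the bars at the given indices with '?'."""
    return " | ".join("?" if i in masked else bar for i, bar in enumerate(bars))


def build_inpainting_input(
    note_bars: list[str],
    chord_bars: list[str],
    masked_notes: set[int],
    masked_chords: set[int],
) -> dict[str, str]:
    """Build the two text inputs, marking the bars the model should fill in."""
    # Assumption: one chord per bar implies matching bar counts.
    if len(note_bars) != len(chord_bars):
        raise ValueError("notes and chords must have the same number of bars")
    return {
        "notes": mask_bars(note_bars, masked_notes),
        "chords": mask_bars(chord_bars, masked_chords),
    }


# Illustrative four-bar phrase: ask for bar 2's melody and bar 3's chord.
example = build_inpainting_input(
    note_bars=["c4 e4 g4 e4", "d4 f4 a4 f4", "e4 g4 b4 g4", "c1"],
    chord_bars=["C", "Dm", "Em", "C"],
    masked_notes={1},
    masked_chords={2},
)
print(example["notes"])
print(example["chords"])
```

Masking notes and chords independently mirrors the description above, where each input carries its own '?' markers.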
