Mtg

Models by this creator

effnet-discogs

114

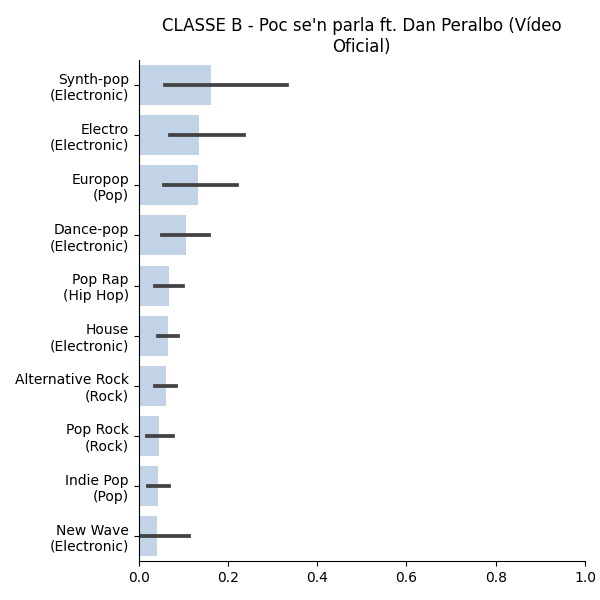

The effnet-discogs model is an EfficientNet model trained for music style classification. It can identify 400 different music styles from the Discogs taxonomy. This model is part of a collection of music classification models developed by the Music Technology Group (MTG) at Universitat Pompeu Fabra. The effnet-discogs model is similar to other music classification models from MTG, such as music-classifiers, which can identify music genres, moods, and instrumentation, and music-approachability-engagement, which classifies music based on approachability and engagement. Model inputs and outputs The effnet-discogs model takes either a YouTube URL or an audio file as input, and outputs a list of the top N most likely music styles from the Discogs taxonomy, along with their probabilities. Inputs Url**: A YouTube URL to process (overrides the audio input) Audio**: An audio file to process Top N**: The number of top music styles to return (default is 10) Output Format**: The output format, which can be either a bar chart visualization or a JSON blob Outputs Output**: A URI (URL) pointing to the model's output, which can be either a visualization or a JSON blob Capabilities The effnet-discogs model can accurately classify music into 400 different styles from the Discogs taxonomy. This can be useful for music discovery, recommendation, and analysis applications. What can I use it for? You can use the effnet-discogs model to build music classification and discovery applications. For example, you could integrate it into a music streaming service to provide detailed genre and style information for the songs in your library. You could also use it to build a music discovery tool that recommends new artists and genres based on a user's listening history. Things to try Try using the effnet-discogs model to classify a diverse set of music, including both popular and obscure genres. This can help you understand the model's capabilities and limitations, and identify potential areas for improvement or fine-tuning.

Updated 9/17/2024

music-classifiers

9

The music-classifiers model is a set of transfer learning models developed by MTG for classifying music by genres, moods, and instrumentation. It is part of the Essentia replicate demos hosted on Replicate.ai's MTG site. The model is built on top of the open-source Essentia library and provides pre-trained models for a variety of music classification tasks. This model can be seen as complementary to other music-related models like music-approachability-engagement, music-arousal-valence, and the MusicGen and MusicGen-melody models from Meta/Facebook, which focus on music generation and analysis. Model inputs and outputs Inputs Url**: YouTube URL to process (overrides audio input) Audio**: Audio file to process model_type**: Model type (embeddings) Outputs Output**: URI representing the processed audio Capabilities The music-classifiers model can be used to classify music along several dimensions, including genre, mood, and instrumentation. This can be useful for applications like music recommendation, audio analysis, and music information retrieval. The model provides pre-trained embeddings that can be used as features for a variety of downstream music-related tasks. What can I use it for? Researchers and developers working on music-related applications can use the music-classifiers model to add music classification capabilities to their projects. For example, the model could be integrated into a music recommendation system to suggest songs based on genre or mood, or used in an audio analysis tool to automatically extract information about the instrumentation in a piece of music. The model's pre-trained embeddings can also be used as input features for custom machine learning models, enabling more sophisticated music analysis and classification tasks. Things to try One interesting thing to try with the music-classifiers model is to experiment with different music genres, moods, and instrumentation combinations to see how the model classifies and represents them. You could also try using the model's embeddings as input features for your own custom music classification models, and see how they perform compared to other approaches. Additionally, you could explore ways to combine the music-classifiers model with other music-related models, such as the MusicGen and MusicGen-melody models, to create more comprehensive music analysis and generation pipelines.

Updated 9/17/2024

📈

music-approachability-engagement

6

The music-approachability-engagement model is a classification model developed by the MTG team that aims to assess the approachability and engagement of music. This model can be useful for research on AI-based music generation, as it provides insights into how humans perceive the "approachability" and "engagement" of generated music. The model is part of a suite of Essentia models hosted on Replicate. Similar models in this space include MusicGen from Meta, which can generate music from text or melody, and Stable Diffusion, a text-to-image diffusion model. These models showcase the capabilities of AI in music and creative domains, although they have different specific use cases compared to the music-approachability-engagement model. Model inputs and outputs Inputs URL**: A YouTube URL to process (overrides audio input) Audio**: An audio file to process Model type**: Specifies the downstream task, such as regression or classification (2-class or 3-class) Outputs Output**: A URI representing the processed output from the model Capabilities The music-approachability-engagement model can classify the "approachability" and "engagement" of music, which can be useful for understanding how humans perceive the accessibility and captivating nature of generated music. This type of insight can inform the development of more user-friendly and engaging AI-generated music. What can I use it for? Researchers in audio, machine learning, and artificial intelligence can use the music-approachability-engagement model to better understand the capabilities and limitations of current generative music models. By analyzing the model's classifications, researchers can gain insights into how to improve the perceptual qualities of AI-generated music. Additionally, amateur users interested in understanding generative music models can experiment with the model to see how it responds to different audio inputs. Things to try One interesting thing to try with the music-approachability-engagement model is to input a variety of music genres and styles, and observe how the model classifies the "approachability" and "engagement" of each. This could reveal patterns or biases in the model's understanding of music perception. Additionally, experimenting with different input audio and comparing the model's classifications could provide valuable feedback for improving the model's capabilities.

Updated 9/17/2024

music-arousal-valence

5

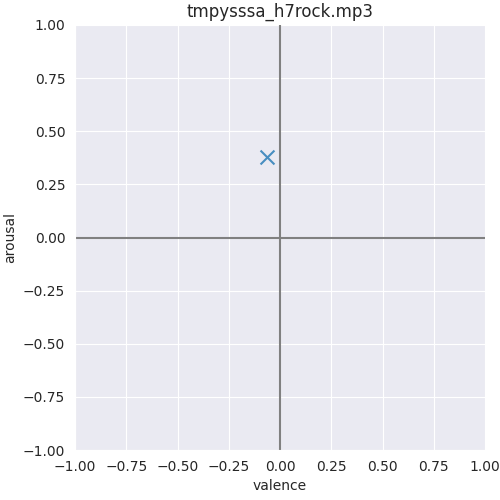

The music-arousal-valence model is a regression model developed by the Music Technology Group (MTG) that can predict the arousal and valence values of music. Arousal refers to the emotional intensity or energy of the music, while valence describes the emotional positivity or negativity. This model can be useful for research into music emotion recognition and generation of music with desired emotional characteristics. Similar models include the music-approachability-engagement model for classifying music approachability and engagement, and the musicgen and musicgen-melody models for generating music from text or melody. Model inputs and outputs The music-arousal-valence model takes either a YouTube URL or an audio file as input, along with a dataset and embedding type. The model outputs a URI representing the predicted arousal and valence values for the input audio. Inputs Url**: The YouTube URL of the audio to process Audio**: An audio file to process Dataset**: The dataset used for training the arousal/valence model, defaulting to "emomusic" Embedding_type**: The type of audio embedding to use, defaulting to "msd-musicnn" Outputs Output**: A URI representing the predicted arousal and valence values for the input audio Capabilities The music-arousal-valence model can accurately predict the arousal and valence values of music, which can be useful for music emotion recognition and generation applications. The model is trained on the "emomusic" dataset and uses the "msd-musicnn" audio embedding by default, but it can be customized to use other datasets and embedding types. What can I use it for? The music-arousal-valence model can be used for a variety of research and applications related to music emotion recognition and generation. For example, it could be used to automatically classify the emotional characteristics of a music library, or to generate music with specific arousal and valence characteristics for use in media or therapeutic applications. The model could also be fine-tuned on specialized datasets to better capture the emotional properties of particular genres or cultures. Things to try One interesting thing to try with the music-arousal-valence model is to experiment with different audio embeddings and datasets to see how they impact the model's performance. You could also try combining the model's outputs with other music analysis tools to gain a more holistic understanding of the emotional qualities of a piece of music.

Updated 9/17/2024

maest

1

MAEST is a family of Transformer models focused on music analysis applications. It is based on the PASST model and developed by the Music Technology Group (MTG) at Universitat Pompeu Fabra. The MAEST models are available for inference through the Essentia library, Hugging Face, and Replicate. Model Inputs and Outputs The MAEST models take audio data as input, either as a 1D audio waveform, 2D mel-spectrogram, or 3D/4D batched mel-spectrogram. The models output both logits and embeddings. The logits can be used for tasks like music style classification, while the embeddings capture high-level musical representations that can be used for downstream applications. Inputs Audio waveform Mel-spectrogram Batched mel-spectrogram Outputs Logits for music analysis tasks Embeddings for downstream applications Capabilities The MAEST models excel at music analysis tasks like genre, mood, and instrumentation classification. They can also be used to extract high-level musical representations for applications like music recommendation or similarity search. What Can I Use It For? The MAEST models are well-suited for a variety of music-related applications. Some potential use cases include: Music classification**: Use the logits to classify audio into genres, moods, or instrumentation categories, similar to the music-classifiers model. Music recommendation**: Use the embeddings to find similar music or build personalized recommendations, similar to the music-approachability-engagement and music-arousal-valence models. Music analysis**: Leverage the models' understanding of musical features to build applications that analyze or understand music, such as mood or emotion detection. Things to Try One interesting aspect of the MAEST models is their support for different input sequence lengths, ranging from 5 to 30 seconds. You can experiment with how the model's performance and capabilities change as you adjust the input length. Additionally, the models are available with different initialization strategies, such as random weights or pre-trained weights from the PASST model. Trying out these different configurations could yield insights into the model's behavior and performance.

Updated 9/17/2024