Tommoore515

Models by this creator

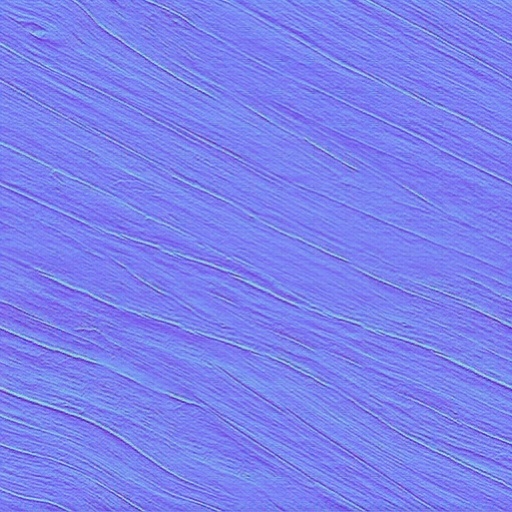

material_stable_diffusion

386

material_stable_diffusion is a fork of the popular Stable Diffusion model, created by tommoore515, that is optimized for generating tileable outputs. This makes it well-suited for use in 3D applications such as Monaverse. Unlike the original stable-diffusion model, which is capable of generating photo-realistic images from any text input, material_stable_diffusion focuses on producing seamless, tileable textures and materials. Other similar models like material-diffusion and material-diffusion-sdxl also share this specialized focus.

Model inputs and outputs

material_stable_diffusion takes in a text prompt, an optional initial image, and several parameters to control the output, including the image size, number of outputs, and guidance scale. The model then generates one or more images that match the provided prompt and initial image (if used).

Inputs

- **Prompt**: The text description of the desired output image
- **Init Image**: An optional initial image to use as a starting point for the generation
- **Mask**: A black and white image used as a mask for inpainting over the init_image
- **Seed**: A random seed value to control the generation
- **Width/Height**: The desired size of the output image(s)
- **Num Outputs**: The number of images to generate
- **Guidance Scale**: The strength of the text guidance during the generation process
- **Prompt Strength**: The strength of the prompt when using an init image
- **Num Inference Steps**: The number of denoising steps to perform during generation

Outputs

- **Output Image(s)**: One or more generated images that match the provided prompt and initial image (if used)

Capabilities

material_stable_diffusion is capable of generating high-quality, tileable textures and materials for use in 3D applications. The model's specialized focus on producing seamless outputs makes it a valuable tool for artists, designers, and 3D creators looking to quickly generate custom assets.

What can I use it for?

You can use material_stable_diffusion to generate a wide variety of tileable textures and materials, such as stone walls, wood patterns, fabrics, and more. These generated assets can be used in 3D modeling, game development, architectural visualization, and other creative applications that require high-quality, repeatable textures.

Things to try

One interesting aspect of material_stable_diffusion is its ability to generate variations on a theme. By adjusting the prompt, seed, and other parameters, you can explore different interpretations of the same general concept and find the perfect texture or material for your project. Additionally, the model's inpainting capabilities allow you to refine or edit the generated outputs, making it a versatile tool for 3D artists and designers.
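The inputs described for material_stable_diffusion can be collected into a single request payload before the model is called. The sketch below is only an illustration of that idea: the helper `build_material_input` is hypothetical, the snake_case key names other than `init_image` are assumed from the displayed input labels, and the default values are typical Stable Diffusion settings rather than documented defaults for this model.

```python
from typing import Any, Dict, Optional


def build_material_input(
    prompt: str,
    width: int = 512,                 # assumed default, not documented
    height: int = 512,                # assumed default, not documented
    num_outputs: int = 1,
    guidance_scale: float = 7.5,      # assumed default, not documented
    num_inference_steps: int = 50,    # assumed default, not documented
    seed: Optional[int] = None,
    init_image: Optional[str] = None,
    mask: Optional[str] = None,
    prompt_strength: float = 0.8,     # assumed default, not documented
) -> Dict[str, Any]:
    """Assemble an input dict for the model (hypothetical helper)."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if num_outputs < 1:
        raise ValueError("num_outputs must be at least 1")
    # The mask is described as inpainting over the init image,
    # so a mask without an init image is rejected here.
    if mask is not None and init_image is None:
        raise ValueError("a mask requires an init_image")

    payload: Dict[str, Any] = {
        "prompt": prompt,
        "width": width,
        "height": height,
        "num_outputs": num_outputs,
        "guidance_scale": guidance_scale,
        "num_inference_steps": num_inference_steps,
    }
    if seed is not None:
        payload["seed"] = seed
    if init_image is not None:
        # prompt_strength only applies when an init image is supplied
        payload["init_image"] = init_image
        payload["prompt_strength"] = prompt_strength
        if mask is not None:
            payload["mask"] = mask
    return payload


example = build_material_input("mossy stone wall, seamless texture", seed=42)
print(example)
```

Fixing the seed while varying only the prompt, or fixing the prompt while varying the seed, is one way to explore the "variations on a theme" mentioned above in a repeatable manner.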

Updated 9/19/2024

pix2pix_tf_albedo2pbrmaps

3

pix2pix_tf_albedo2pbrmaps is a machine learning model that generates PBR (Physically-Based Rendering) texture maps from an input albedo texture. It is an implementation of the pix2pix conditional adversarial network, a general-purpose image-to-image translation model. The model was created by tommoore515 for the Monaverse AI Material Generator. Similar models include stable-diffusion, sdxl-allaprima, gfpgan, instruct-pix2pix, and pixray-text2image.

Model inputs and outputs

The pix2pix_tf_albedo2pbrmaps model takes an input image of an albedo texture and generates a set of PBR texture maps as output, including normal, roughness, and metallic maps.

Inputs

- **Imagepath**: The path to the input albedo texture image.

Outputs

- **Output**: A URI pointing to the generated PBR texture maps.

Capabilities

The pix2pix_tf_albedo2pbrmaps model is capable of generating high-quality PBR texture maps from albedo inputs. The examples provided show the model's ability to accurately predict normal, roughness, and metallic maps that can be used in 3D rendering and game development.

What can I use it for?

The pix2pix_tf_albedo2pbrmaps model can be used to speed up the creation of PBR textures for 3D assets and environments. Instead of manually creating all the necessary maps, you can use this model to generate them from a simple albedo input. This can be particularly useful for game developers, 3D artists, and anyone working on 3D rendering projects.

Things to try

One interesting thing to try with the pix2pix_tf_albedo2pbrmaps model is to experiment with different input albedo textures and observe how the generated PBR maps change. You could also try using the model in a larger 3D asset creation workflow to see how the generated textures perform in a real-world rendering context.
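To experiment with several albedo textures, it can help to prepare one input per image up front. The following is a minimal sketch under stated assumptions: `build_pbr_inputs` is a hypothetical helper, the `imagepath` key is assumed from the displayed "Imagepath" label, and the accepted file extensions are a guess rather than a documented list.

```python
from pathlib import Path
from typing import Dict, List

# Assumed image formats; the model's supported formats are not documented here.
ALBEDO_SUFFIXES = {".png", ".jpg", ".jpeg"}


def build_pbr_inputs(texture_dir: str) -> List[Dict[str, str]]:
    """Return one input dict per albedo image found in texture_dir."""
    root = Path(texture_dir)
    if not root.is_dir():
        raise NotADirectoryError(texture_dir)
    # Sort so that repeated runs process the textures in the same order.
    images = sorted(
        p for p in root.iterdir()
        if p.is_file() and p.suffix.lower() in ALBEDO_SUFFIXES
    )
    # "imagepath" mirrors the "Imagepath" input; the exact key is an assumption.
    return [{"imagepath": str(p)} for p in images]
```

Each dict could then be submitted to the model in turn, with the returned output URI stored next to its source albedo so the generated maps are easy to compare.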

Updated 9/19/2024