pix2pix_tf_albedo2pbrmaps

Maintainer: tommoore515

3

| Property | Value |

|---|---|

| Run this model | Run on Replicate |

| API spec | View on Replicate |

| Github link | View on Github |

| Paper link | No paper link provided |

Create account to get full access

Model overview

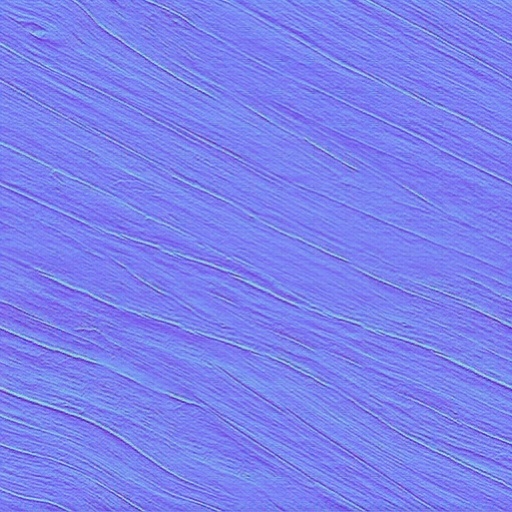

pix2pix_tf_albedo2pbrmaps is a machine learning model that generates PBR (Physically-Based Rendering) texture maps from an input albedo texture. It is an implementation of the pix2pix conditional adversarial network, a general-purpose image-to-image translation model. The model was created by tommoore515 for the Monaverse AI Material Generator. Similar models include stable-diffusion, sdxl-allaprima, gfpgan, instruct-pix2pix, and pixray-text2image.

Model inputs and outputs

The pix2pix_tf_albedo2pbrmaps model takes an input image of an albedo texture and generates a set of PBR texture maps as output, including normal, roughness, and metallic maps.

Inputs

- Imagepath: The path to the input albedo texture image.

Outputs

- Output: A URI pointing to the generated PBR texture maps.

Capabilities

The pix2pix_tf_albedo2pbrmaps model is capable of generating high-quality PBR texture maps from albedo inputs. The examples provided show the model's ability to accurately predict normal, roughness, and metallic maps that can be used in 3D rendering and game development.

What can I use it for?

The pix2pix_tf_albedo2pbrmaps model can be used to speed up the creation of PBR textures for 3D assets and environments. Instead of manually creating all the necessary maps, you can use this model to generate them from a simple albedo input. This can be particularly useful for game developers, 3D artists, and anyone working on 3D rendering projects.

Things to try

One interesting thing to try with the pix2pix_tf_albedo2pbrmaps model is to experiment with different input albedo textures and observe how the generated PBR maps change. You could also try using the model in a larger 3D asset creation workflow to see how the generated textures perform in a real-world rendering context.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

material_stable_diffusion

386

material_stable_diffusion is a fork of the popular Stable Diffusion model, created by tommoore515, that is optimized for generating tileable outputs. This makes it well-suited for use in 3D applications such as Monaverse. Unlike the original stable-diffusion model, which is capable of generating photo-realistic images from any text input, material_stable_diffusion focuses on producing seamless, tileable textures and materials. Other similar models like material-diffusion and material-diffusion-sdxl also share this specialized focus. Model inputs and outputs material_stable_diffusion takes in a text prompt, an optional initial image, and several parameters to control the output, including the image size, number of outputs, and guidance scale. The model then generates one or more images that match the provided prompt and initial image (if used). Inputs Prompt**: The text description of the desired output image Init Image**: An optional initial image to use as a starting point for the generation Mask**: A black and white image used as a mask for inpainting over the init_image Seed**: A random seed value to control the generation Width/Height**: The desired size of the output image(s) Num Outputs**: The number of images to generate Guidance Scale**: The strength of the text guidance during the generation process Prompt Strength**: The strength of the prompt when using an init image Num Inference Steps**: The number of denoising steps to perform during generation Outputs Output Image(s)**: One or more generated images that match the provided prompt and initial image (if used) Capabilities material_stable_diffusion is capable of generating high-quality, tileable textures and materials for use in 3D applications. The model's specialized focus on producing seamless outputs makes it a valuable tool for artists, designers, and 3D creators looking to quickly generate custom assets. What can I use it for? You can use material_stable_diffusion to generate a wide variety of tileable textures and materials, such as stone walls, wood patterns, fabrics, and more. These generated assets can be used in 3D modeling, game development, architectural visualization, and other creative applications that require high-quality, repeatable textures. Things to try One interesting aspect of material_stable_diffusion is its ability to generate variations on a theme. By adjusting the prompt, seed, and other parameters, you can explore different interpretations of the same general concept and find the perfect texture or material for your project. Additionally, the model's inpainting capabilities allow you to refine or edit the generated outputs, making it a versatile tool for 3D artists and designers.

Updated Invalid Date

text2image

1.4K

text2image by pixray is an AI-powered image generation system that can create unique visual outputs from text prompts. It combines various approaches, including perception engines, CLIP-guided GAN imagery, and techniques for navigating latent space. The model is capable of generating diverse and imaginative images that capture the essence of the provided text prompt. Compared to similar models like pixray-text2image, pixray-text2pixel, dreamshaper, prompt-parrot, and majicmix, text2image by pixray offers a unique combination of capabilities that allow for the generation of highly detailed and visually captivating images from textual descriptions. Model Inputs and Outputs The text2image model takes a text prompt as input and generates an image as output. The text prompt can be a description, scene, or concept that the user wants the model to visualize. The output is an image that represents the given prompt. Inputs Prompts**: A text description or concept that the model should use to generate an image. Settings**: Optional additional settings in a name: value format to customize the model's behavior. Drawer**: The rendering engine to use, with the default being "vqgan". Outputs Output Images**: The generated image(s) based on the provided text prompt. Capabilities The text2image model by pixray is capable of generating a wide range of images, from realistic scenes to abstract and surreal compositions. The model can capture various themes, styles, and visual details based on the input prompt, showcasing its versatility and imagination. What Can I Use It For? The text2image model can be useful for a variety of applications, such as: Concept art and visualization: Generate images to illustrate ideas, stories, or designs. Creative exploration: Experiment with different text prompts to discover unique and unexpected visual outputs. Educational and research purposes: Use the model to explore the relationship between language and visual representation. Prototyping and ideation: Quickly generate visual sketches to explore design concepts or product ideas. Things to Try With text2image, you can experiment with different types of text prompts to see how the model responds. Try describing specific scenes, objects, or emotions, and observe how the generated images capture the essence of your prompts. Additionally, you can explore the model's settings and different rendering engines to customize the visual style of the output.

Updated Invalid Date

text2image-future

24

text2image-future is an image generation AI model created by pixray. It combines previous ideas from Perception Engines, CLIP guided GAN imagery, and CLIPDraw. The model can take a text prompt and generate a corresponding image, similar to other pixray models like text2image, pixray-text2image, and pixray-text2pixel. Model inputs and outputs text2image-future takes a text prompt as input and generates one or more corresponding images as output. The model can be run from the command line, within Python code, or using a Colab notebook. Inputs Prompts**: A text prompt describing the desired image Outputs Images**: One or more images generated based on the input prompt Capabilities text2image-future can generate a wide variety of images from text prompts, spanning genres like landscapes, portraits, abstract art, and more. The model leverages techniques like image augmentation, latent space optimization, and CLIP-guided generation to produce high-quality, visually compelling outputs. What can I use it for? You can use text2image-future to generate images for a variety of creative and practical applications, such as: Concept art and visualization for digital art, games, or films Rapid prototyping and ideation for product design Illustration and visual storytelling Social media content and marketing assets Things to try Some interesting things to explore with text2image-future include: Experimenting with different types of prompts, from specific descriptions to more abstract, evocative language Trying out the various rendering engines and settings to see how they affect the output Combining the model with other tools and techniques, such as image editing or 3D modeling Exploring the limits of the model's capabilities and trying to push it to generate unexpected or surreal imagery

Updated Invalid Date

instruct-pix2pix

819

instruct-pix2pix is a powerful image editing model that allows users to edit images based on natural language instructions. Developed by Timothy Brooks, this model is similar to other instructable AI models like InstructPix2Pix and InstructIR, which enable image editing and restoration through textual guidance. It can be considered an extension of the widely-used Stable Diffusion model, adding the ability to edit existing images rather than generating new ones from scratch. Model inputs and outputs The instruct-pix2pix model takes in an image and a textual prompt as inputs, and outputs a new edited image based on the provided instructions. The model is designed to be versatile, allowing users to guide the image editing process through natural language commands. Inputs Image**: An existing image that will be edited according to the provided prompt Prompt**: A textual description of the desired edits to be made to the input image Outputs Edited Image**: The resulting image after applying the specified edits to the input image Capabilities The instruct-pix2pix model excels at a wide range of image editing tasks, from simple modifications like changing the color scheme or adding visual elements, to more complex transformations like turning a person into a cyborg or altering the composition of a scene. The model's ability to understand and interpret natural language instructions allows for a highly intuitive and flexible editing experience. What can I use it for? The instruct-pix2pix model can be utilized in a variety of applications, such as photo editing, digital art creation, and even product visualization. For example, a designer could use the model to quickly experiment with different design ideas by providing textual prompts, or a marketer could create custom product images for their e-commerce platform by instructing the model to make specific changes to stock photos. Things to try One interesting aspect of the instruct-pix2pix model is its potential for creative and unexpected image transformations. Users could try providing prompts that push the boundaries of what the model is capable of, such as combining different artistic styles, merging multiple objects or characters, or exploring surreal and fantastical imagery. The model's versatility and natural language understanding make it a compelling tool for those seeking to unleash their creativity through image editing.

Updated Invalid Date