EchoMimic

Maintainer: BadToBest


| Property | Value |
|---|---|
| Run this model | Run on HuggingFace |
| API spec | View on HuggingFace |
| GitHub link | No GitHub link provided |
| Paper link | No paper link provided |


Model overview

EchoMimic generates lifelike, audio-driven portrait animations. Maintained by BadToBest, it produces realistic facial animation that is synchronized with an input audio track. Unlike similar models that rely on fixed landmark conditioning, EchoMimic supports editable landmark conditioning, which gives users more control over the final output.

Model inputs and outputs

EchoMimic takes two primary inputs: audio data and landmark coordinates. The audio can be speech, singing, or other vocalizations, and the model uses it to drive the facial animation. The landmark coordinates give the model a spatial reference for mapping the audio onto specific facial features, which helps keep the animation realistic and in sync with the sound.

Inputs

- Audio data: Speech, singing, or other vocalizations

- Landmark coordinates: Coordinates defining the position of facial features

Outputs

- Lifelike portrait animations: Highly realistic facial animations that are synchronized with the input audio
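Because the audio drives the animation frame by frame, the audio track and the landmark sequence have to line up in time. The sketch below shows one way to do that bookkeeping. It assumes (none of this comes from the listing) 16 kHz mono audio, 25 fps output video, and landmarks stored as one list of (x, y) points per frame; the helper names are hypothetical and are not part of EchoMimic's interface.

```python
import math
from typing import List, Tuple

# Assumed format (not from the listing): one frame = list of (x, y) landmark points.
Point = Tuple[float, float]
Frame = List[Point]


def num_video_frames(num_samples: int, sample_rate: int, fps: float) -> int:
    """Number of video frames needed to cover an audio clip of num_samples."""
    return math.ceil(num_samples * fps / sample_rate)


def audio_window(frame_idx: int, sample_rate: int, fps: float) -> Tuple[int, int]:
    """Half-open sample range [start, end) of audio that lines up with one frame."""
    start = round(frame_idx * sample_rate / fps)
    end = round((frame_idx + 1) * sample_rate / fps)
    return start, end


def align_landmarks(landmarks: List[Frame], n_frames: int) -> List[Frame]:
    """Trim, or pad by repeating the last frame, so there is exactly one
    landmark set per video frame."""
    if not landmarks:
        raise ValueError("need at least one landmark frame")
    if len(landmarks) >= n_frames:
        return landmarks[:n_frames]
    return landmarks + [landmarks[-1]] * (n_frames - len(landmarks))


# Hypothetical example: 2 s of 16 kHz audio driving a 25 fps animation.
SAMPLE_RATE, FPS = 16_000, 25
n = num_video_frames(32_000, SAMPLE_RATE, FPS)
print(n, audio_window(0, SAMPLE_RATE, FPS), audio_window(n - 1, SAMPLE_RATE, FPS))
```

For a 2-second clip at 16 kHz (32,000 samples), `num_video_frames` gives 50 frames, and each frame lines up with a 640-sample window. Padding by repeating the last landmark frame is only one simple choice; interpolating or looping a reference motion would work too.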

Capabilities

EchoMimic generates realistic portrait animations that capture the nuances and expressiveness of human facial movement. Because the landmark conditioning is editable, users can adjust the animations to their specific needs, which makes the model useful for applications ranging from video production to interactive experiences.
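One way to picture editable landmark conditioning is to modify the landmark sequence before it is handed to the model. The following is a minimal sketch under an assumed format (one list of (x, y) points per frame, same point count in every frame); `smooth_landmarks` and `scale_points` are hypothetical helpers, and which point indices correspond to the mouth or eyes depends on the landmark detector, which the listing does not specify.

```python
from typing import List, Sequence, Tuple

# Assumed format (not from the listing): one frame = list of (x, y) points,
# with the same number of points in every frame.
Point = Tuple[float, float]
Frame = List[Point]


def smooth_landmarks(frames: Sequence[Frame], radius: int = 1) -> List[Frame]:
    """Centered moving average over time for every landmark, to damp jitter.
    Near the start and end the window is simply truncated."""
    out: List[Frame] = []
    n = len(frames)
    for t in range(n):
        lo, hi = max(0, t - radius), min(n, t + radius + 1)
        window = frames[lo:hi]
        out.append([
            (sum(f[i][0] for f in window) / len(window),
             sum(f[i][1] for f in window) / len(window))
            for i in range(len(frames[t]))
        ])
    return out


def scale_points(frame: Frame, indices: Sequence[int], factor: float) -> Frame:
    """Scale the selected landmarks about their own centroid (factor > 1 spreads
    them apart, < 1 pulls them together). Returns a new frame; the input is unchanged."""
    cx = sum(frame[i][0] for i in indices) / len(indices)
    cy = sum(frame[i][1] for i in indices) / len(indices)
    edited = list(frame)
    for i in indices:
        x, y = frame[i]
        edited[i] = (cx + (x - cx) * factor, cy + (y - cy) * factor)
    return edited
```

For example, `smooth_landmarks(frames, radius=1)` averages each frame with its immediate neighbours, and `scale_points(frame, mouth_indices, 1.2)` would widen a hypothetical set of mouth points by 20% around their centroid.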

What can I use it for?

Potential use cases for EchoMimic include:

- Video production: Seamlessly integrate audio-driven facial animations into videos, creating more engaging and lifelike content.

- Virtual assistants: Enhance the realism and responsiveness of virtual assistants by incorporating EchoMimic-generated facial animations.

- Interactive experiences: Develop immersive, audio-driven experiences that leverage the model's capabilities, such as interactive storytelling or virtual performances.

Things to try

One of the key features of EchoMimic is its ability to handle a diverse range of audio inputs, from speech to singing. Experiment with different types of audio to see how the model responds, and use the editable landmark conditioning to fine-tune the resulting animations. You can also explore how the model handles animations in different styles or cultural contexts.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

MuseTalk

MuseTalk is a real-time, high-quality, audio-driven lip-syncing model developed by TMElyralab. It can be applied to input videos, such as those generated by MuseV, to build a complete virtual human solution. The model is trained in the latent space of ft-mse-vae and can modify an unseen face according to the input audio, using a face region of 256 x 256. MuseTalk supports audio in various languages, including Chinese, English, and Japanese, and runs in real time at 30 fps or more on an NVIDIA Tesla V100 GPU.

Model inputs and outputs

Inputs

- Audio: Speech in various languages (e.g., Chinese, English, Japanese)

- Face region: A 256 x 256 region of the face

Outputs

- Modified face region: The face region with lip movements synchronized to the input audio

Capabilities

MuseTalk generates realistic lip-synced animation in real time, which makes it well suited to virtual human experiences. The center point of the face region can be adjusted, and this choice significantly affects the generation results. A checkpoint trained on the HDTF dataset is also available.

What can I use it for?

MuseTalk can bring static images or videos to life by animating the subjects' lips in sync with the audio. This is particularly useful for creating virtual avatars, dubbing videos, or making computer-generated characters more realistic. Its real-time performance makes it suitable for live applications such as virtual presentations or interactive experiences.

Things to try

Experiment with MuseTalk by animating the lips of various subjects, from famous portraits to your own photos. Try adjusting the center point of the face region to see how it affects the generation results. You can also integrate MuseTalk with other virtual human tools, such as MuseV, to build a complete virtual human experience.
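The adjustable center point described for MuseTalk determines which 256 x 256 region of the frame gets edited. As a rough illustration, the sketch below computes such a crop box from a face center plus an optional vertical shift, clamped so the box stays inside the image. `face_crop_box` is a hypothetical helper, not MuseTalk's own preprocessing code, and the real pipeline may detect and crop faces differently.

```python
from typing import Tuple


def face_crop_box(cx: float, cy: float, img_w: int, img_h: int,
                  size: int = 256, shift_y: int = 0) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a size x size crop centred on the
    face centre (cx, cy + shift_y), clamped to stay inside the image.

    Hypothetical helper: shift_y stands in for "moving the center point";
    positive values move the box down (image y grows downward)."""
    if img_w < size or img_h < size:
        raise ValueError("image is smaller than the crop size")
    left = int(round(cx - size / 2))
    top = int(round(cy + shift_y - size / 2))
    # Clamp so the whole box lies within [0, img_w] x [0, img_h].
    left = min(max(left, 0), img_w - size)
    top = min(max(top, 0), img_h - size)
    return left, top, left + size, top + size
```

For a 1280 x 720 frame with the face centered at (640, 360), `face_crop_box(640, 360, 1280, 720)` returns `(512, 232, 768, 488)`; passing `shift_y=10` moves the box 10 pixels down. Clamping (rather than padding) keeps the crop a full 256 x 256 even when the face is near an edge, at the cost of the face no longer being exactly centered.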

Updated Invalid Date

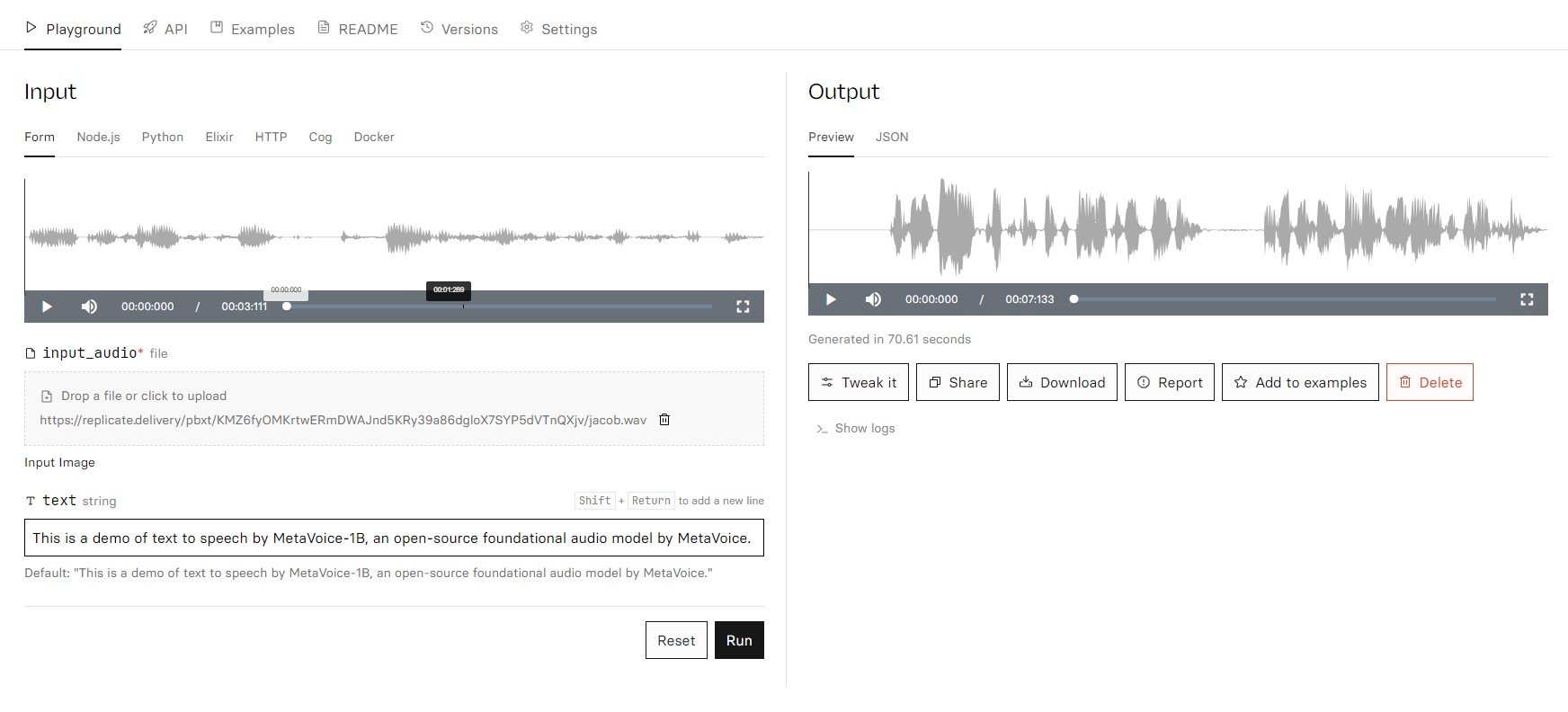

metavoice

MetaVoice-1B is a 1.2-billion-parameter base model trained on 100,000 hours of speech, developed by the MetaVoice team. This large-scale speech model can be used for a variety of text-to-speech and audio generation tasks. Related models listed on this site include ml-mgie, meta-llama-3-8b-instruct, whisperspeech-small, voicecraft, and whisperx.

Model inputs and outputs

MetaVoice-1B takes text as input and generates audio as output.

Inputs

- Text: The text to be converted to speech.

Outputs

- Audio: The generated audio, returned as a URI.

Capabilities

MetaVoice-1B is a foundational audio model that generates high-quality speech from text. It can be used for tasks such as text-to-speech, audio synthesis, and voice cloning.

What can I use it for?

MetaVoice-1B can be used to create audiobooks, podcasts, or voice assistants. It can also generate synthetic voices for video games, movies, or other multimedia projects, and it can be fine-tuned for specific use cases such as language learning or accessibility applications.

Things to try

Experiment with generating speech in different styles, emotions, or languages. You can also explore using the model for audio editing, voice conversion, or multi-speaker audio generation.
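The MetaVoice-1B listing only says that audio comes back "in a URI format", without saying which kind of URI. Assuming it is either a hosted `http(s)` URL or an inline `data:` URI, a client could handle both as in this sketch; `decode_audio_uri` is a hypothetical helper built only on the Python standard library, not part of any MetaVoice API.

```python
import base64
from typing import Optional, Tuple
from urllib.parse import unquote_to_bytes, urlparse


def decode_audio_uri(uri: str) -> Optional[Tuple[str, bytes]]:
    """Hypothetical helper. For a data: URI, return (mime_type, raw_bytes).
    For an http(s) URL, return None so the caller can download the file."""
    scheme = urlparse(uri).scheme
    if scheme in ("http", "https"):
        return None
    if scheme != "data":
        raise ValueError(f"unsupported URI scheme: {scheme!r}")
    # data:[<mediatype>][;base64],<payload>
    header, _, payload = uri[len("data:"):].partition(",")
    params = header.split(";")
    mime = params[0] or "text/plain"  # default media type when none is given
    if "base64" in params[1:]:
        return mime, base64.b64decode(payload)
    # Non-base64 payloads are percent-encoded.
    return mime, unquote_to_bytes(payload)
```

Returning `None` for web URLs leaves the download strategy (streaming, retries, caching) to the caller, while inline audio is decoded straight into bytes that can be written to a file.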
