grounding-dino-base

Maintainer: IDEA-Research



| Property | Value |
|---|---|
| Run this model | Run on HuggingFace |
| API spec | View on HuggingFace |
| Github link | No Github link provided |
| Paper link | No paper link provided |


Model overview

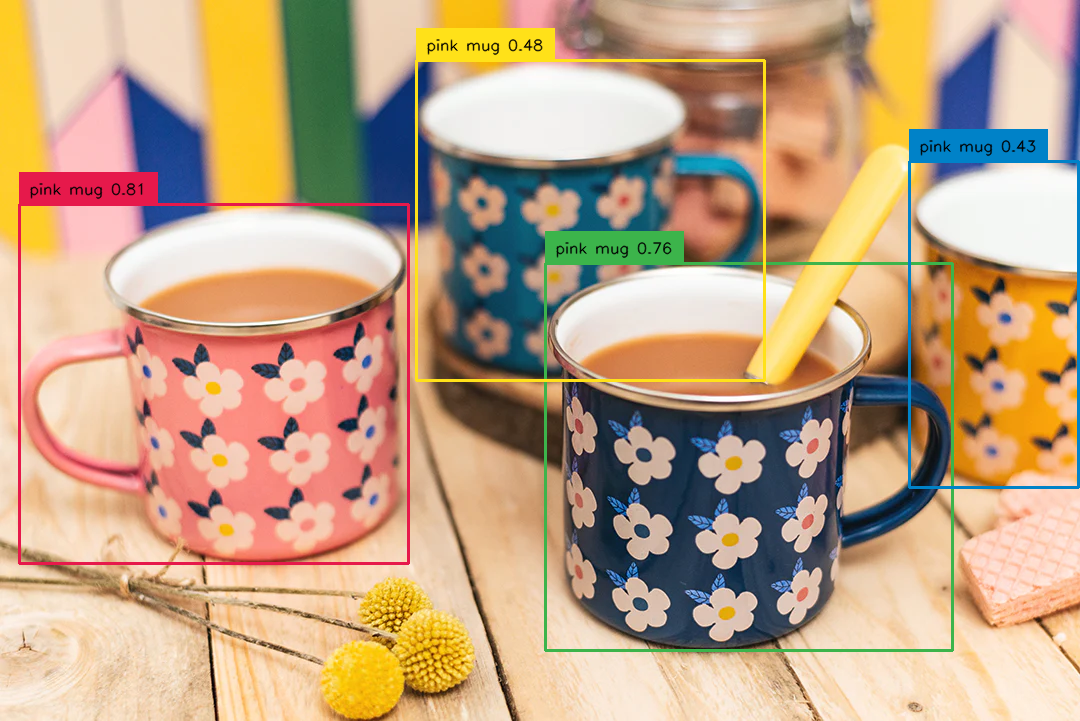

The grounding-dino-base model, developed by IDEA-Research, extends the closed-set object detector DINO (DETR with Improved DeNoising Anchor Boxes) with a text encoder. This enables open-set object detection: the model can localize objects from categories it was never explicitly trained to detect, as long as they are described in a text prompt. According to the original paper, Grounding DINO reaches 52.5 AP in zero-shot transfer to COCO, that is, without using any COCO training data.

Similar models include GroundingDINO and grounding-dino, which also target zero-shot object detection. The dino-vitb16 and dinov2-base models are related mainly by name: they are Vision Transformers trained with the self-supervised DINO and DINOv2 methods, which are distinct from the DINO detector that Grounding DINO builds on.

Model inputs and outputs

Inputs

- Images: The model takes in an image for which it will perform zero-shot object detection.

- Text: The model also takes in a text prompt that specifies the objects to detect in the image, such as "a cat. a remote control."
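The Hugging Face usage notes for this checkpoint ask for prompts shaped like the example above: lowercase object phrases, each ending with a period. A small helper like the one below can normalize a plain list of object names into that form. `build_prompt` is a hypothetical function for illustration, not part of any library.

```python
def build_prompt(queries):
    """Join object names into a Grounding DINO-style text prompt.

    Assumes the convention shown above: each phrase is lowercased and
    terminated with a period, e.g. "a cat. a remote control."
    """
    phrases = []
    for query in queries:
        # Normalize case and drop any trailing periods the caller already added.
        phrase = query.strip().lower().rstrip(".").strip()
        if phrase:  # skip empty entries, e.g. from a trailing comma
            phrases.append(phrase + ".")
    return " ".join(phrases)


# A comma-separated query string can be split first.
print(build_prompt("A Cat, a remote control".split(",")))  # a cat. a remote control.
```

Keeping prompt construction in one place makes it easy to compare phrasings consistently when experimenting.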

Outputs

- Bounding boxes: The model outputs bounding boxes around the detected objects in the image, along with corresponding confidence scores.

- Object labels: For each bounding box, the model also outputs the phrase from the text prompt that the box was matched to.
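DETR-style detectors, a family that includes Grounding DINO, typically predict boxes as normalized center coordinates plus width and height, which post-processing then converts to pixel corners. The sketch below shows that conversion under this assumption; `cxcywh_to_xyxy` is a hypothetical helper, and a library's own post-processing may differ in details such as clamping.

```python
def cxcywh_to_xyxy(box, image_width, image_height):
    """Convert a normalized (cx, cy, w, h) box into pixel corners (x0, y0, x1, y1)."""
    cx, cy, w, h = box
    x0 = (cx - w / 2) * image_width
    y0 = (cy - h / 2) * image_height
    x1 = (cx + w / 2) * image_width
    y1 = (cy + h / 2) * image_height
    # Clamp so that boxes touching the border stay inside the image.
    return (
        max(0.0, x0),
        max(0.0, y0),
        min(float(image_width), x1),
        min(float(image_height), y1),
    )


# A box centered in a 640x480 image, 20% as wide and 40% as tall as the image.
print(cxcywh_to_xyxy((0.5, 0.5, 0.2, 0.4), 640, 480))
```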

Capabilities

The grounding-dino-base model excels at zero-shot object detection: it can detect objects from categories for which it saw no labeled examples during training. It does this by grounding the text prompt in the image, matching phrases in the prompt to image regions. Because categories are given as free-form text rather than drawn from a fixed label set, the model can be asked to find everyday household items as well as less common objects.

What can I use it for?

You can use the grounding-dino-base model in applications that require open-set object detection, such as robotic assistants, autonomous vehicles, and image analysis tools. Given a simple text prompt, the model identifies and localizes the named objects in an image without requiring you to collect and label training data for them.

Things to try

One interesting thing to try with the grounding-dino-base model is to experiment with different text prompts. The model's performance can be influenced by the specific wording and phrasing of the prompt, so you can explore how to craft prompts that elicit the desired object detection results. Additionally, you can try combining the model with other computer vision techniques, such as image segmentation or instance recognition, to create more advanced applications.
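When you try several phrasings of the same object (for example "a cat." and "a kitten."), the runs often return near-duplicate boxes. One common way to merge them, swapped in here as a general technique rather than anything built into the model, is class-agnostic greedy non-maximum suppression (NMS). The sketch assumes detections are `(box, score, label)` tuples with pixel `(x0, y0, x1, y1)` boxes; the detections themselves are made up.

```python
def iou(a, b):
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def merge_detections(detections, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop others that overlap it too much.

    Labels are ignored when comparing boxes, so "a cat" and "a kitten"
    boxes on the same animal are treated as duplicates.
    """
    kept = []
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(det[0], k[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept


# Hypothetical detections pooled from two prompt variants.
detections = [
    ((10, 10, 110, 110), 0.62, "a cat"),
    ((12, 8, 108, 112), 0.48, "a kitten"),
    ((200, 50, 260, 90), 0.41, "a remote control"),
]
print([label for _, _, label in merge_detections(detections)])
# ['a cat', 'a remote control']
```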

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models


GroundingDINO


GroundingDINO is an open-set object detection model developed by Shilong Liu and colleagues. It combines DINO, a Transformer-based (DETR-style) object detector, with grounded pre-training on detection, grounding, and image-text data, enabling open-set, zero-shot object detection. Traditional detectors can only find the fixed set of categories they were trained on; GroundingDINO can detect objects described in natural language, including categories for which it has seen no bounding box labels. Grounding visual concepts in their linguistic descriptions lets it recognize a far more diverse set of object categories than a standard closed-set detector. The paper reports strong results on a variety of benchmarks, outperforming prior zero-shot and few-shot object detectors.

Model inputs and outputs

Inputs

- Image: The image in which to detect objects.
- Natural language text: A free-form text description of the objects to be detected.

Outputs

- Bounding boxes: A set of bounding boxes for the detected objects, along with their labels.
- Confidence scores: A score for each detected object indicating the model's certainty in the detection.

Capabilities

GroundingDINO exhibits strong zero-shot object detection capabilities: it can identify a wide variety of objects using only text descriptions, without bounding box annotations for those categories. It reported state-of-the-art results on several open-set detection benchmarks, demonstrating its ability to generalize beyond a fixed set of categories. Compositional, unconventional descriptions such as "a person riding a unicycle" or "a dog wearing sunglasses" illustrate the kind of flexible queries this design is meant to support.

What can I use it for?

GroundingDINO has many potential applications in computer vision and multimedia understanding. Its zero-shot capabilities make it well suited for tasks such as:

- Image and video annotation: Automatically generating bounding box annotations for images and video frames from text prompts, reducing the need for manual labeling.
- Robotic perception: Allowing robots to recognize and interact with a wide range of objects in unstructured environments, using natural language commands.
- Intelligent assistants: Powering AI assistants that can answer queries about the visual world using grounded language understanding.

The maintainer, ShilongLiu, also provides a Colab demo showcasing the model's capabilities.

Things to try

One interesting aspect of GroundingDINO is its ability to detect objects from complex, compositional descriptions. Try prompts that combine multiple attributes or describe unusual object configurations, such as "a person riding a unicycle" or "a dog wearing sunglasses", and compare the results with those for simpler descriptions. You could also probe how well the model generalizes to unseen categories by prompting for objects that are absent from standard object detection datasets, which helps reveal its true open-set capabilities. Overall, GroundingDINO is a notable advance in object detection, showing the potential of language-guided vision models for open-ended visual understanding tasks.
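As we understand the reference GroundingDINO inference code, raw outputs are turned into labeled detections with two thresholds: a predicted box is kept if its highest text-token score exceeds a box threshold, and its label is assembled from the prompt tokens whose scores exceed a text threshold. The sketch below mimics that logic in plain Python. The whitespace tokens, the logits, and the default values 0.35 and 0.25 are illustrative assumptions, and `filter_predictions` is a hypothetical helper.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def filter_predictions(boxes, token_logits, tokens, box_threshold=0.35, text_threshold=0.25):
    """Keep confident boxes and label them with the prompt tokens they match.

    boxes: one box per predicted query.
    token_logits: for each query, one raw logit per prompt token.
    tokens: the prompt tokens (a simplified tokenization for illustration).
    """
    results = []
    for box, logits in zip(boxes, token_logits):
        probs = [sigmoid(v) for v in logits]
        score = max(probs)
        if score <= box_threshold:
            continue  # no prompt token matches this query strongly enough
        label = " ".join(t for t, p in zip(tokens, probs) if p > text_threshold)
        results.append((box, round(score, 3), label))
    return results


tokens = ["cat", "remote", "control"]
boxes = [(10, 10, 110, 110), (200, 50, 260, 90), (300, 300, 320, 330)]
token_logits = [[2.0, -3.0, -3.0], [-3.0, 0.5, 0.0], [-2.0, -1.5, -2.5]]
for detection in filter_predictions(boxes, token_logits, tokens):
    print(detection)
# ((10, 10, 110, 110), 0.881, 'cat')
# ((200, 50, 260, 90), 0.622, 'remote control')
```

Raising `box_threshold` removes weak boxes entirely, while raising `text_threshold` shortens the labels attached to the boxes that remain.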


grounding-dino


grounding-dino is an AI model that detects arbitrary objects in images from human text inputs such as category names or referring expressions. It combines DINO, a Transformer-based detector, with grounded pre-training to achieve open-vocabulary, text-guided object detection. The model was developed by IDEA Research and is available as a Cog model on Replicate. A similar model is GroundingDINO, which uses the same Grounding DINO approach; other models sometimes listed alongside it, such as stable-diffusion and text-extract-ocr, address different tasks (image generation and text extraction, respectively).

Model inputs and outputs

grounding-dino takes an image and a comma-separated list of text queries describing the objects to detect, and outputs the detected objects with bounding boxes and predicted labels. You can also adjust the confidence thresholds for the box and text predictions.

Inputs

- image: The input image to query
- query: Comma-separated text queries describing the objects to detect
- box_threshold: Confidence threshold for object detection
- text_threshold: Confidence threshold for predicted labels
- show_visualisation: Whether to draw the bounding boxes on the image

Outputs

- Detected objects with bounding boxes and predicted labels

Capabilities

grounding-dino can detect a wide variety of objects in images from natural language descriptions alone, which makes it useful for tasks like content moderation, image retrieval, and visual analysis. It is particularly suited to open-vocabulary detection, letting you query for any object rather than a predefined set.

What can I use it for?

You can use grounding-dino in applications that require object detection, such as:

- Visual search: Find specific objects in large image databases using text queries.
- Automated content moderation: Detect inappropriate or harmful objects in user-generated content.
- Augmented reality: Overlay relevant information on real-world objects located with text-guided detection.
- Robotic perception: Enable robots to understand and interact with their environment using language-guided object detection.

Things to try

Experiment with different types of text queries to see how the model handles various object descriptions. You can also adjust the confidence thresholds to balance precision and recall: higher thresholds keep fewer but more reliable detections, while lower thresholds find more objects at the cost of more false positives. Finally, consider integrating grounding-dino into your own applications to add open-vocabulary object detection.
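The threshold trade-off between precision and recall can be measured on an image where you know the correct boxes. A minimal sketch, assuming detections are `(box, score)` pairs with pixel `(x0, y0, x1, y1)` boxes and that a detection counts as correct when its IoU with a not-yet-matched ground-truth box is at least 0.5. That matching rule is a common evaluation convention, not something grounding-dino itself specifies, and all boxes below are made up.

```python
def iou(a, b):
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections, ground_truth, score_threshold, iou_threshold=0.5):
    """Precision and recall of the detections scoring at least score_threshold."""
    kept = sorted(
        (d for d in detections if d[1] >= score_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    matched = set()
    true_positives = 0
    for box, _score in kept:
        best_index, best_overlap = None, iou_threshold
        for index, gt_box in enumerate(ground_truth):
            if index in matched:
                continue  # each ground-truth box can be matched only once
            overlap = iou(box, gt_box)
            if overlap >= best_overlap:
                best_index, best_overlap = index, overlap
        if best_index is not None:
            matched.add(best_index)
            true_positives += 1
    # Conventions: no kept detections means no false positives (precision 1.0);
    # no ground truth means nothing can be missed (recall 1.0).
    precision = true_positives / len(kept) if kept else 1.0
    recall = true_positives / len(ground_truth) if ground_truth else 1.0
    return precision, recall


ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [((0, 0, 10, 10), 0.9), ((20, 20, 30, 31), 0.4), ((50, 50, 60, 60), 0.3)]
for threshold in (0.5, 0.25):
    p, r = precision_recall(detections, ground_truth, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
# threshold=0.5: precision=1.00, recall=0.50
# threshold=0.25: precision=0.67, recall=1.00
```

Lowering the threshold from 0.5 to 0.25 recovers the second object (higher recall) but also admits a spurious box (lower precision).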



dino-vitb16


The dino-vitb16 model is a Vision Transformer (ViT) trained with the DINO self-supervised learning method. Like other ViT models, it divides an input image into a sequence of fixed-size patches, which are linearly embedded and processed by Transformer encoder layers. DINO training lets the model learn an effective internal representation of images without labeled data, making it a versatile foundation for a variety of downstream tasks.

In contrast to the vit-base-patch16-224-in21k and vit-base-patch16-224 models, which were pre-trained on ImageNet-21k in a supervised manner, dino-vitb16 was trained with the self-supervised DINO approach on a large collection of images (ImageNet-1k) without using their labels. This allows it to learn visual features in a more general, open-ended way, without being constrained to the specific classes and labels of ImageNet.

The nsfw_image_detection model is another ViT-based model, fine-tuned for the specialized task of classifying images as "normal" or "NSFW" (not safe for work). It shows how the general capabilities of ViT models can be adapted to specific use cases through further training.

Model inputs and outputs

Inputs

- Images: The model takes images as input, which are divided into a sequence of 16x16 pixel patches and linearly embedded.

Outputs

- Image features: The model outputs feature representations of the input image, which can be used for downstream tasks such as image classification and object detection.

Capabilities

The dino-vitb16 model is a general-purpose image feature extractor that captures rich visual representations. Unlike models trained solely on labeled datasets like ImageNet, DINO training lets it learn more versatile and transferable visual features. This makes it suitable for a wide range of computer vision tasks, from image classification and object detection to image retrieval and visual reasoning. Its representations can be fine-tuned or used directly as features for more specialized models.

What can I use it for?

You can use dino-vitb16 as a pre-trained feature extractor in your own image-based machine learning projects. Building on its general-purpose representations lets you train computer vision systems with less labeled data and compute. For example, you could fine-tune it on a small labeled dataset for image classification, feed its features into an object detection or segmentation model, or use them for image retrieval, where you need to find similar images in a large database.

Things to try

Because dino-vitb16 learns visual features without labeled data, it may generalize well to visual domains and tasks beyond those seen during pre-training. To explore this, try fine-tuning it on datasets very different from its pre-training data, such as medical images, satellite imagery, or artwork, and observe how its performance and representations transfer to these domains. You could also use it as a feature extractor for multimodal tasks such as image-text retrieval or visual question answering, where its visual representations can complement text-based features.
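The patch arithmetic behind "16x16 pixel patches" is easy to check. Assuming the standard 224 x 224 input resolution, an image splits into a 14 x 14 grid of 196 patches, and ViT models of this kind prepend one [CLS] token, giving 197 tokens. The sketch uses a toy single-channel image stored as nested lists; the real model works on 3-channel tensors and also applies the linear embedding, which is omitted here. `patchify` is a hypothetical helper.

```python
def patchify(image, patch_size=16):
    """Split an H x W image (nested lists of pixels) into non-overlapping
    patch_size x patch_size patches, in row-major order."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [row[left:left + patch_size] for row in image[top:top + patch_size]]
            patches.append(patch)
    return patches


# Toy 224 x 224 grayscale image with synthetic pixel values.
image = [[(r * 224 + c) % 256 for c in range(224)] for r in range(224)]
patches = patchify(image)
num_tokens = len(patches) + 1  # one extra [CLS] token
print(len(patches), num_tokens)  # 196 197
```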



dinov2-base


The dinov2-base model is a Vision Transformer (ViT) trained with the DINOv2 self-supervised learning method, developed by researchers at Facebook (now Meta). DINOv2 lets the model learn robust visual features without direct supervision by pre-training on a large collection of images. This contrasts with vit-base-patch16-224-in21k, which was trained in a supervised fashion on ImageNet-21k. dino-vitb16 is also self-supervised, but uses the original DINO method.

Model inputs and outputs

The dinov2-base model takes images as input and outputs a sequence of hidden feature representations. These features can be used for downstream computer vision tasks such as image classification, object detection, or visual question answering.

Inputs

- Images: The model accepts images, which are divided into a sequence of fixed-size patches and linearly embedded.

Outputs

- Image feature representations: The final output is a sequence of hidden states, one per image patch plus a [CLS] token that summarizes the whole image. These features can be passed on to downstream tasks.

Capabilities

The dinov2-base model is a pre-trained vision model that serves as a feature extractor for a wide range of computer vision applications. Because it was trained in a self-supervised manner on a large image dataset, it has learned robust visual representations that transfer effectively to various tasks, even with limited labeled data.

What can I use it for?

You can use dinov2-base for feature extraction in your computer vision projects: feed your images through the model and use the final hidden representations as input for tasks such as image classification, object detection, and visual question answering. This is particularly useful when you have a small dataset and want to benefit from the model's pre-trained knowledge.

Things to try

One interesting aspect of dinov2-base is its self-supervised pre-training, which lets it learn visual features without expensive manual labeling. You could fine-tune the model on your own dataset or use its pre-trained features as input to a custom downstream model. You could also compare dinov2-base with other self-supervised and supervised vision models, such as dino-vitb16 and vit-base-patch16-224-in21k, to see how the different pre-training approaches affect performance on your specific task.
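A common way to use such features for image retrieval is nearest-neighbor search by cosine similarity. The sketch below assumes each image has already been reduced to one feature vector, for example the [CLS] output of dinov2-base (768-dimensional for this model size). Short 3-dimensional vectors stand in for real features, and the file names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_neighbors(query, gallery, k=2):
    """Return the names of the k gallery vectors most similar to query."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in gallery.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Toy "feature vectors" standing in for real model embeddings.
gallery = {
    "cat_1.jpg": [0.9, 0.1, 0.0],
    "cat_2.jpg": [0.8, 0.2, 0.1],
    "car_1.jpg": [0.0, 0.1, 0.9],
}
print(nearest_neighbors([1.0, 0.15, 0.05], gallery))  # ['cat_1.jpg', 'cat_2.jpg']
```

For a large gallery you would normally normalize the vectors once and use a vectorized or indexed search instead of this linear scan.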
