arc2face

Maintainer: camenduru

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on arXiv |

Model overview

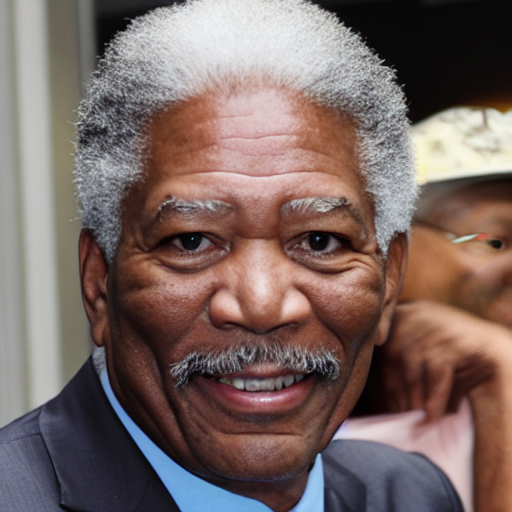

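arc2face is an AI model, maintained on Replicate by camenduru, that is billed as a "Foundation Model of Human Faces". It is often listed alongside models such as real-esrgan, instantmesh, and face-to-many, although those tools do different jobs: real-esrgan upscales images, instantmesh reconstructs a 3D mesh from a single image, and face-to-many restyles a face into formats such as 3D, emoji, or pixel art. arc2face instead conditions generation on identity: it derives an ArcFace face-recognition embedding from the input face and generates new images of that same person from it.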

Model inputs and outputs

arc2face takes an input image, a guidance scale, a seed, a number of diffusion steps, and the number of images to generate, and returns a set of generated face images.

Inputs

- Input Image: The face image whose identity the generated images should preserve.

- Guidance Scale: The classifier-free guidance strength, which controls how closely generation follows the identity encoding derived from the input face.

- Seed: A value used to initialize the random number generator for reproducible results.

- Num Steps: The number of steps to run the diffusion process.

- Num Images: The number of output images to generate.
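
The inputs above map onto a single prediction request. The sketch below uses the official `replicate` Python client and assumes the deployment exposes the fields as `input_image`, `guidance_scale`, `seed`, `num_steps`, and `num_images`. Those snake_case names, the `camenduru/arc2face` slug, and the example values are assumptions, so confirm them on the API spec page; the version id is left as a placeholder rather than guessed.

```python
from typing import Any, Dict

# Placeholder reference (ASSUMED slug): copy the real "owner/name:version"
# string from the model's API spec page. The version id is not filled in.
MODEL_REF = "camenduru/arc2face:<version-id>"


def build_arc2face_input(
    image: Any,
    guidance_scale: float = 3.0,
    seed: int = 42,
    num_steps: int = 25,
    num_images: int = 2,
) -> Dict[str, Any]:
    """Assemble the input for one arc2face prediction.

    Field names are ASSUMED snake_case forms of the documented inputs
    (Input Image, Guidance Scale, Seed, Num Steps, Num Images). The default
    values are illustrative, not the deployment's own defaults.
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    if num_images < 1:
        raise ValueError("num_images must be at least 1")
    return {
        "input_image": image,            # face whose identity should be kept
        "guidance_scale": guidance_scale,
        "seed": seed,                    # same seed + same inputs -> repeatable runs
        "num_steps": num_steps,          # number of diffusion steps
        "num_images": num_images,        # how many images to return
    }


def run_arc2face(image_path: str, **settings: Any) -> Any:
    """Send one prediction through the replicate client and return its output."""
    # Imported here so build_arc2face_input works without the client installed.
    import replicate  # pip install replicate; reads REPLICATE_API_TOKEN

    with open(image_path, "rb") as image:
        return replicate.run(MODEL_REF, input=build_arc2face_input(image, **settings))
```

For example, `run_arc2face("face.jpg", seed=1234, num_images=4)`, with `face.jpg` standing in for your own image, requests four images of that face. Keeping `seed` fixed while changing one other setting is the simplest way to see what that setting does.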

Outputs

- Output Images: A set of generated face images based on the provided inputs.

Capabilities

arc2face generates high-quality, photorealistic images of human faces. Because it is conditioned on the identity of the input face rather than on its pixels, each seed yields a different image of the same person, which makes it useful both for creating diverse face images and for producing new variations of an existing face. Its training on human faces lets it capture a wide range of facial features, expressions, and characteristics.

What can I use it for?

arc2face could be used for a variety of applications, such as:

- Generating synthetic faces for use in media, art, or training datasets

- Generating new images of an existing person with different expressions and poses

- Experimenting with facial features and characteristics

- Potentially aiding in tasks like facial recognition or animation

Things to try

Some interesting things to try with arc2face include:

- Generating a set of diverse faces and exploring the range of expressions and characteristics

- Providing an existing face as input and seeing how the model transforms it

- Experimenting with different guidance scale and step values to see their impact on the generated faces

- Trying to recreate specific individuals or characters using the model
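
To compare guidance scale and step values systematically, it helps to hold the seed fixed and vary only those two settings. This hypothetical helper builds one input per combination. It reuses the assumed arc2face field names (verify them on the API spec page) and takes the face as a URL string, on the assumption that the same image is sent to every run; an open file handle would need rewinding between runs.

```python
from itertools import product
from typing import Any, Dict, Iterable, List


def sweep_inputs(
    image_url: str,
    guidance_scales: Iterable[float],
    step_counts: Iterable[int],
    seed: int = 42,
) -> List[Dict[str, Any]]:
    """Return one arc2face input per (guidance_scale, num_steps) pair.

    The seed is held fixed so differences between outputs come from the two
    swept settings. Field names are ASSUMED; check the model's API spec.
    """
    return [
        {
            "input_image": image_url,   # a URL string can be reused for every run
            "guidance_scale": scale,
            "num_steps": steps,
            "seed": seed,               # identical across the whole grid
            "num_images": 1,            # one image per setting keeps the grid readable
        }
        for scale, steps in product(guidance_scales, step_counts)
    ]
```

Each payload can then be passed as `input=` to `replicate.run` with the model's version reference. A three-by-three grid such as guidance scales `[1.5, 3.0, 5.0]` against step counts `[15, 25, 40]` means nine separate predictions, so small grids are a good place to start.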

Overall, arc2face is a focused tool for generating identity-consistent face images, with uses in both creative and technical applications.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

one-shot-talking-face

one-shot-talking-face is an AI model that creates realistic talking-face animations from a single input image. It is published on Replicate by camenduru. It is related to other animation models such as AniPortrait: Audio-Driven Synthesis of Photorealistic Portrait Animation, Make any Image Talk, and AnimateLCM Cartoon3D Model, which also aim to bring static images to life.

Model inputs and outputs

one-shot-talking-face takes two input files, a WAV audio file and an image file, and generates a video in which the face in the image is animated to match the audio.

Inputs

- Wav File: The audio file that drives the facial animation.

- Image File: The input image containing the face to be animated.

Outputs

- Output: A video file showing the face from the input image animated to match the audio.

Capabilities

one-shot-talking-face can create realistic, expressive talking-face animations from a single input image. It captures subtle facial movements and expressions, so the resulting animations look natural and lifelike.

What can I use it for?

one-shot-talking-face can be used to create engaging video content, build virtual assistants or digital avatars, or enhance existing videos by animating static images. Because it needs only a single image, it is an accessible tool for creators and developers.

Things to try

One interesting use of one-shot-talking-face is bringing historical or artistic figures to life. Given a portrait image and suitable audio, the model animates the subject's face so the figure appears to speak. This could be a captivating way to bring the past into the present or to explore the expressive qualities of iconic artworks.

aniportrait-vid2vid

aniportrait-vid2vid is an AI model published on Replicate by camenduru. It is based on AniPortrait, a method for audio-driven synthesis of photorealistic portrait animation; this vid2vid variant drives the animation with a source video instead of audio. It is related to models like Champ, AnimateLCM Cartoon3D Model, and Arc2Face, which focus on controllable and consistent human image animation, creating cartoon-style 3D models, and generating human faces, respectively.

Model inputs and outputs

aniportrait-vid2vid takes a reference image and a source video, and generates a series of output images that animate the portrait in the reference image to match the movements and expressions in the source video.

Inputs

- Ref Image Path: The reference image of the portrait to animate.

- Source Video Path: The video that supplies the movement and expressions for the animation.

Outputs

- Output: An array of URIs of the generated images that depict the animated portrait.

Capabilities

aniportrait-vid2vid synthesizes photorealistic portrait animations that follow the motion and expressions of a source video. This enables expressive, dynamic portrait animations for applications such as digital avatars, virtual communication, and multimedia productions.

What can I use it for?

aniportrait-vid2vid can be used to create engaging, lifelike portrait animations for virtual conferencing, interactive media, and digital marketing. Because it animates portraits photorealistically, it can produce content that captures the nuances of human expression and movement.

Things to try

One interesting aspect of aniportrait-vid2vid is its potential for personalized and interactive content. By combining its portrait animation with other AI technologies, such as natural language processing or generative text, users could build conversational digital assistants or interactive storytelling experiences that feature realistic, animated portraits.

champ

champ is a model developed by Fudan University that enables controllable and consistent human image animation with 3D parametric guidance. It lets users animate human images by specifying 3D motion parameters, producing realistic and coherent animations. This makes it useful for applications such as video game character animation, virtual avatar creation, and visual effects in films and videos. Unlike models such as InstantMesh, Arc2Face, and Real-ESRGAN, which target 3D mesh generation, face generation, and image upscaling respectively, champ focuses specifically on human image animation with detailed 3D control.

Model inputs and outputs

champ takes two main inputs: a reference image and 3D motion guidance data. The reference image is the basis for the animated character, while the guidance data specifies the desired movement. The model then generates an output image that depicts the animated human figure.

Inputs

- Ref Image Path: The path to the reference image used as the basis for the animated character.

- Guidance Data: The 3D motion data that specifies the desired movement and animation of the character.

Outputs

- Output: The generated image depicting the animated human figure based on the provided inputs.

Capabilities

champ generates realistic, coherent human image animations by leveraging 3D parametric guidance. Its animations are both controllable and consistent, letting users fine-tune the movement and expression of the animated character. This is useful for applications that need precise control over character animation, such as video games, virtual reality experiences, and visual effects.

What can I use it for?

The champ model can be used for a variety of applications that involve human image animation, such as:

- Video game character animation: Developers can use champ to create realistic and expressive character animations for their games.

- Virtual avatar creation: Businesses and individuals can use champ to generate animated avatars for virtual meetings, social media, and other online interactions.

- Visual effects in films and videos: Filmmakers and video creators can use champ to enhance the realism and expressiveness of human characters in their productions.

Things to try

With champ, users can experiment with different 3D motion guidance data to create a wide range of human animations, from subtle gestures to complex dance routines. Users can also explore how well the model keeps the character's appearance and movements consistent, which is what makes animations look seamless and natural.

modelscope-facefusion

modelscope-facefusion is a Cog model that automatically fuses a user's face onto a template image, producing an image with the user's face on the template body. It is published on Replicate by lucataco, who also publishes models such as demofusion-enhance, ip_adapter-face-inpaint, and codeformer.

Model inputs and outputs

The model takes two inputs: a template image, which is the body image the user's face will be fused onto, and a user image, which supplies the face.

Inputs

- user_image: The input face image.

- template_image: The input body image.

Outputs

- Output: The resulting image with the user's face fused onto the template body.

Capabilities

modelscope-facefusion automatically blends a user's face onto a template image, aiming for a seamless fusion that looks natural and realistic.

What can I use it for?

This model could be used to create personalized product images, generate virtual avatars, or for fun, creative projects. For example, an e-commerce company could use modelscope-facefusion to generate product images with the customer's face, letting customers visualize how a product would look on them. A social media platform could offer a feature that lets users fuse their face onto various templates to create unique, engaging content.

Things to try

One interesting experiment is to vary the template images and see how the fusion results change. Try a variety of body types, poses, and backgrounds to see how the model handles different scenarios. You could also combine modelscope-facefusion with other models, such as stable-diffusion, to create even more unique and creative content.
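
The two documented fields, `user_image` and `template_image`, are easy to swap by accident, so a small helper that names them explicitly can help. This is a minimal sketch using the `replicate` Python client; the `lucataco/modelscope-facefusion` slug is assumed from the model name and publisher, and the version id is a placeholder to copy from the model page.

```python
from typing import Any, Dict

# Placeholder reference (ASSUMED slug): copy the real "owner/name:version"
# string from the model page. The version id is not filled in.
MODEL_REF = "lucataco/modelscope-facefusion:<version-id>"


def build_facefusion_input(user_image: Any, template_image: Any) -> Dict[str, Any]:
    """Map the two images onto the documented input fields."""
    return {
        "user_image": user_image,          # the face to transfer
        "template_image": template_image,  # the body image that receives the face
    }


def fuse_faces(user_path: str, template_path: str) -> Any:
    """Run one fusion through the replicate client and return its output."""
    # Imported here so build_facefusion_input works without the client installed.
    import replicate  # pip install replicate; reads REPLICATE_API_TOKEN

    with open(user_path, "rb") as user, open(template_path, "rb") as template:
        return replicate.run(MODEL_REF, input=build_facefusion_input(user, template))
```

Trying several template images against the same `user_image` is then a loop over `fuse_faces` with different `template_path` values.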