aura-sr

Maintainer: zsxkib

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on arXiv |

Model overview

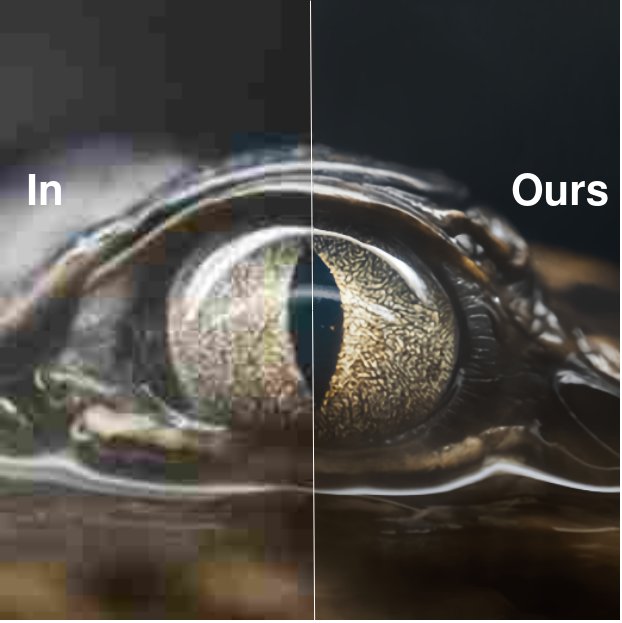

aura-sr is a GAN-based super-resolution model designed to upscale real-world images. It is based on the GigaGAN approach and gives its best results on clean, lightly compressed inputs. The model is maintained by zsxkib and is available through the Replicate platform. Similar models such as SeeSR, ArbSR, ESRGAN, and Real-ESRGAN take other approaches to image super-resolution.

Model inputs and outputs

The aura-sr model takes an input image file and a scale factor as its inputs. The scale factor determines how much the image will be upscaled, with options for 2, 4, 8, 16, or 32 times the original size. The model outputs a higher-resolution version of the input image.

Inputs

- image: The input image file to be upscaled.

- scale_factor: The factor by which to upscale the image (2, 4, 8, 16, or 32).

- max_batch_size: Controls the number of image tiles processed simultaneously. Higher values may increase speed but require more GPU memory.

Outputs

- Output: The upscaled image file.
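
As a rough illustration of the inputs listed above, the sketch below validates a request payload and computes the expected output dimensions. The helper names (`build_input`, `output_size`), the default values, and the assumption that the scale factor applies equally to width and height are illustrative only; they are not taken from the model's API spec.

```python
# Scale factors listed in the model description.
ALLOWED_SCALE_FACTORS = (2, 4, 8, 16, 32)


def build_input(image, scale_factor=4, max_batch_size=1):
    """Build an input dict using the three documented parameter names.

    `image` is whatever the client accepts for a file input (for example a
    URL string or an open file handle); this helper does not inspect it.
    The defaults here are assumptions chosen for illustration.
    """
    if scale_factor not in ALLOWED_SCALE_FACTORS:
        raise ValueError(f"scale_factor must be one of {ALLOWED_SCALE_FACTORS}")
    if not isinstance(max_batch_size, int) or max_batch_size < 1:
        raise ValueError("max_batch_size must be a positive integer")
    return {
        "image": image,
        "scale_factor": scale_factor,
        "max_batch_size": max_batch_size,
    }


def output_size(width, height, scale_factor):
    """Expected output size, assuming both sides scale by the same factor."""
    if scale_factor not in ALLOWED_SCALE_FACTORS:
        raise ValueError(f"scale_factor must be one of {ALLOWED_SCALE_FACTORS}")
    return width * scale_factor, height * scale_factor
```

With the `replicate` Python client, a payload like this would typically be passed as `replicate.run("<owner>/<model>", input=payload)`. Take the exact model identifier and parameter defaults from the model's API spec on Replicate rather than from this sketch.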

Capabilities

aura-sr is particularly effective at upscaling PNG, lossless WebP, and high-quality JPEG XL images. It can handle jobs of different sizes and runs quickly, which makes it useful for enlarging images while preserving quality.
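
Because results depend on the input format, it can help to check what kind of file you have before submitting it. The sketch below classifies an image from its first bytes using the standard PNG, WebP, JPEG XL, and JPEG signatures. The function name `sniff_format` and its return labels are hypothetical, and a header check cannot tell how heavily a lossy file was compressed.

```python
def sniff_format(header: bytes) -> str:
    """Classify an image by its leading bytes (pass at least the first 16)."""
    if header.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    # WebP is a RIFF container: "RIFF", 4-byte size, "WEBP", then a chunk tag.
    if header[:4] == b"RIFF" and header[8:12] == b"WEBP":
        chunk = header[12:16]
        if chunk == b"VP8L":
            return "webp-lossless"
        if chunk == b"VP8 ":
            return "webp-lossy"
        # Other tags (e.g. the extended "VP8X" layout) can wrap either encoding.
        return "webp-extended"
    # JPEG XL: bare codestream, or the ISO BMFF-style container signature.
    if header.startswith(b"\xff\x0a"):
        return "jpegxl"
    if header.startswith(b"\x00\x00\x00\x0cJXL \r\n\x87\n"):
        return "jpegxl"
    if header.startswith(b"\xff\xd8\xff"):
        return "jpeg"
    return "unknown"
```

A typical use would be `sniff_format(open(path, "rb").read(16))`, converting or re-exporting the image when the result is a lossy format.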

What can I use it for?

The aura-sr model can be used to upscale AI-generated images or high-quality photographs, making them larger and clearer without losing important details. This is useful for creating larger promotional materials, improving image quality for websites or social media, and sharpening charts and other visuals in data presentations.

Things to try

While aura-sr is a powerful tool, it does have some limitations. It works best with certain image formats and may not perform well on heavily compressed or low-quality images. Experimenting with different input images and scale factors can help you find the optimal use cases for this model.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

seesr

seesr is an AI model developed by cswry that aims to perform semantics-aware real-world image super-resolution. It builds upon the Stable Diffusion model and incorporates additional components to enhance the quality of real-world image upscaling. Unlike similar models like supir, supir-v0q, supir-v0f, real-esrgan, and gfpgan, seesr focuses on leveraging semantic information to improve the fidelity and perception of the upscaled images.

Model inputs and outputs

seesr takes in a low-resolution real-world image and generates a high-resolution version of the same image, aiming to preserve the semantic content and visual quality. The model can handle a variety of input images, from natural scenes to portraits and close-up shots.

Inputs

- Image: The input low-resolution real-world image.

Outputs

- Output image: The high-resolution version of the input image, with improved fidelity and perception.

Capabilities

seesr demonstrates the ability to perform semantics-aware real-world image super-resolution, preserving the semantic content and visual quality of the input images. It can handle a diverse range of real-world scenes, from buildings and landscapes to people and animals, and produces high-quality upscaled results.

What can I use it for?

seesr can be used for a variety of applications that require high-resolution real-world images, such as photo editing, digital art, and content creation. Its semantic awareness allows for more faithful and visually pleasing upscaling, making it a valuable tool for professionals and enthusiasts alike. Additionally, the model can be utilized in applications where high-quality image assets are needed, such as virtual reality, gaming, and architectural visualization.

Things to try

One interesting aspect of seesr is its ability to balance the trade-off between fidelity and perception in the upscaled images. Users can experiment with the various parameters, such as the number of inference steps and the guidance scale, to find the right balance for their specific use cases. Additionally, users can try manually specifying prompts to further enhance the quality of the results, as the automatic prompt extraction by the DAPE component may not always be perfect.

srrescgan

srrescgan is an intelligent image scaling model developed by raoumer. It is designed to upscale low-resolution images to a 4x higher resolution, while preserving details and reducing artifacts. The model is based on a Super-Resolution Residual Convolutional Generative Adversarial Network (SRResCGAN) architecture, which aims to handle real-world image degradations like sensor noise and JPEG compression. Similar models like real-esrgan, seesr, and rvision-inp-slow also focus on enhancing real-world images, but with different approaches and capabilities. Unlike these models, srrescgan specifically targets 4x super-resolution while maintaining image quality.

Model inputs and outputs

The srrescgan model takes a low-resolution image as input and outputs a 4x higher resolution image. This can be useful for upscaling images from mobile devices, low-quality scans, or other sources where the original high-resolution image is not available.

Inputs

- Image: The input image to be upscaled, in a standard image format (e.g. JPEG, PNG).

Outputs

- Output Image: The 4x higher resolution image, in the same format as the input.

Capabilities

The srrescgan model is designed to handle real-world image degradations such as sensor noise and JPEG compression, which can significantly reduce the performance of traditional super-resolution methods. By leveraging a residual convolutional network and generative adversarial training, the model is able to produce high-quality 4x upscaled images even in the presence of these challenging artifacts.

What can I use it for?

The srrescgan model can be useful in a variety of applications that require high-resolution images from low-quality inputs, such as:

- Enhancing low-resolution photos from mobile devices or older cameras

- Improving the quality of scanned documents or historical images

- Upscaling images for use in web or print media

- Super-resolving frames from video footage

By providing a robust super-resolution solution that can handle real-world image degradations, srrescgan can help to improve the visual quality of images in these and other applications.

Things to try

One interesting aspect of srrescgan is its use of residual learning and adversarial training to produce high-quality super-resolved images. You might try experimenting with the model on a variety of input images, from different sources and with different types of degradations, to see how it performs. Additionally, you could investigate how the model's performance compares to other super-resolution approaches, both in terms of quantitative metrics and visual quality.

swin2sr

swin2sr is a state-of-the-art AI model for photorealistic image super-resolution and restoration, developed by the mv-lab research team. It builds upon the success of the SwinIR model by incorporating the novel Swin Transformer V2 architecture, which improves training convergence and performance, especially for compressed image super-resolution tasks. The model outperforms other leading solutions in classical, lightweight, and real-world image super-resolution, JPEG compression artifact reduction, and compressed input super-resolution. It was a top-5 solution in the "AIM 2022 Challenge on Super-Resolution of Compressed Image and Video". Similar models in the image restoration and enhancement space include supir, stable-diffusion, instructir, gfpgan, and seesr.

Model inputs and outputs

swin2sr takes low-quality, low-resolution JPEG compressed images as input and generates high-quality, high-resolution images as output. The model can upscale the input by a factor of 2, 4, or other scales, depending on the task.

Inputs

- Low-quality, low-resolution JPEG compressed images

Outputs

- High-quality, high-resolution images with reduced compression artifacts and enhanced visual details

Capabilities

swin2sr can effectively tackle various image restoration and enhancement tasks, including:

- Classical image super-resolution

- Lightweight image super-resolution

- Real-world image super-resolution

- JPEG compression artifact reduction

- Compressed input super-resolution

The model's excellent performance is achieved through the use of the Swin Transformer V2 architecture, which improves training stability and data efficiency compared to previous transformer-based approaches like SwinIR.

What can I use it for?

swin2sr can be particularly useful in applications where image quality and resolution are crucial, such as:

- Enhancing images for high-resolution displays and printing

- Improving image quality for streaming services and video conferencing

- Restoring old or damaged photos

- Generating high-quality images for virtual reality and gaming

The model's ability to handle compressed input super-resolution makes it a valuable tool for efficient image and video transmission and storage in bandwidth-limited systems.

Things to try

One interesting aspect of swin2sr is its potential to be used in combination with other image processing and generation models, such as instructir or stable-diffusion. By integrating swin2sr into a workflow that starts with text-to-image generation or semantic-aware image manipulation, users can achieve even more impressive and realistic results. Additionally, the model's versatility in handling various image restoration tasks makes it a valuable tool for researchers and developers working on computational photography, low-level vision, and image signal processing applications.

arbsr

The arbsr model, developed by Longguang Wang, is a plug-in module that extends a baseline super-resolution (SR) network to a scale-arbitrary SR network with a small additional cost. This allows the model to perform non-integer and asymmetric scale factor SR, while maintaining state-of-the-art performance for integer scale factors. This is useful for real-world applications where arbitrary zoom levels are required, beyond the typical integer scale factors. The arbsr model is related to other SR models like GFPGAN, ESRGAN, SuPeR, and HCFlow-SR, which focus on various aspects of image restoration and enhancement.

Model inputs and outputs

Inputs

- image: The input image to be super-resolved.

- target_width: The desired width of the output image, which can be 1-4 times the input width.

- target_height: The desired height of the output image, which can be 1-4 times the input height.

Outputs

- Output: The super-resolved image at the desired target size.

Capabilities

The arbsr model is capable of performing scale-arbitrary super-resolution, including non-integer and asymmetric scale factors. This allows for more flexible and customizable image enlargement compared to typical integer-only scale factors.

What can I use it for?

The arbsr model can be useful for a variety of real-world applications where arbitrary zoom levels are required, such as image editing, content creation, and digital asset management. By enabling non-integer and asymmetric scale factor SR, the model provides more flexibility and control over the final image resolution, allowing users to zoom in on specific details or adapt the image size to their specific needs.

Things to try

One interesting aspect of the arbsr model is its ability to handle continuous scale factors, which can be explored using the interactive viewer provided by the maintainer. This allows you to experiment with different zoom levels and observe the model's performance in real-time.
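
To make the non-integer, asymmetric scaling concrete, here is a minimal sketch that turns independent horizontal and vertical scale factors into `target_width` and `target_height` values. The helper name `arbsr_target_size` is hypothetical, and the sketch assumes the 1-4x bound applies to each axis separately and that targets are rounded to whole pixels.

```python
def arbsr_target_size(width, height, scale_x, scale_y):
    """Compute (target_width, target_height) for per-axis scale factors.

    Assumes each scale must lie in the 1-4x range described for the model
    and that the result is rounded to the nearest whole pixel.
    """
    for scale in (scale_x, scale_y):
        if not 1 <= scale <= 4:
            raise ValueError("each scale factor must be between 1 and 4")
    return round(width * scale_x), round(height * scale_y)
```

For example, `arbsr_target_size(100, 80, 1.5, 3.2)` stretches the image 1.5x horizontally and 3.2x vertically, which an integer-only upscaler could not express.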
