chat-tts

Maintainer: thlz998

29

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on arXiv |


Model overview

chat-tts packages the ChatTTS text-to-speech model as a Cog model; this Cog implementation is maintained by thlz998. It is similar to other text-to-speech models like bel-tts, neon-tts, and xtts-v2, which also convert text into human-like speech.

Model inputs and outputs

chat-tts takes text and synthesizes it into speech. It also exposes parameters such as voice, temperature, and top-k sampling that control the generated audio.

Inputs

- text: The text to be synthesized into speech.

- voice: A number that determines the voice tone, with options like 2222, 7869, 6653, 4099, 5099.

- prompt: Sets laughter, pauses, and other audio cues.

- temperature: Adjusts the sampling temperature.

- top_p: Sets the nucleus sampling top-p value.

- top_k: Sets the top-k sampling value.

- skip_refine: Determines whether to skip the text refinement step.

- custom_voice: Allows specifying a seed value for custom voice tone generation.
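The temperature, top_p, and top_k inputs are standard sampling controls. As a generic illustration (not taken from the ChatTTS source, whose internals may differ), the sketch below shows how these knobs typically reshape a model's next-token distribution: temperature rescales the logits before the softmax, top-k keeps the k most likely tokens, and top-p keeps the smallest set of tokens whose cumulative probability reaches p. The function name `apply_sampling_filters` is hypothetical.

```python
import math


def apply_sampling_filters(logits, temperature=1.0, top_k=0, top_p=1.0):
    """Hypothetical helper: return renormalized token probabilities after
    temperature scaling, top-k filtering and top-p (nucleus) filtering.
    Tokens that are filtered out get probability 0.0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")

    # Temperature: divide logits before the softmax (<1 sharpens, >1 flattens).
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Rank token indices from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # Top-k: keep only the k most likely tokens (0 disables the filter).
    if top_k > 0:
        order = order[:top_k]

    # Top-p: keep the shortest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Renormalize so the surviving probabilities sum to 1.
    keep = set(kept)
    mass = sum(probs[i] for i in keep)
    return [probs[i] / mass if i in keep else 0.0 for i in range(len(probs))]


if __name__ == "__main__":
    print(apply_sampling_filters([2.0, 1.0, 0.0, -1.0], temperature=0.7, top_k=3, top_p=0.9))
```

Lower temperature, smaller top_k, or smaller top_p all concentrate probability on fewer tokens, which generally trades variety for stability.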

Outputs

- The generated speech audio based on the provided text and parameters.
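Putting the documented inputs together, a request can be thought of as a dictionary keyed by the input names listed above. The sketch below assembles and sanity-checks such a payload. The helper name, the range checks, the example value `top_k=20`, and the Replicate model reference in the closing comment are assumptions rather than values from the model's API spec, which should be checked before use.

```python
# Optional input names as listed in the Inputs section above.
CHAT_TTS_OPTIONS = {"voice", "prompt", "temperature", "top_p", "top_k",
                    "skip_refine", "custom_voice"}


def build_chat_tts_input(text: str, **options) -> dict:
    """Hypothetical helper: assemble a chat-tts input payload.

    Only the options the caller passes are included, so the model's own
    defaults apply to everything else. The range checks are generic
    assumptions about sampling parameters, not limits from the API spec.
    """
    if not text.strip():
        raise ValueError("text must not be empty")
    unknown = set(options) - CHAT_TTS_OPTIONS
    if unknown:
        raise ValueError(f"unknown inputs: {sorted(unknown)}")
    if "temperature" in options and options["temperature"] <= 0:
        raise ValueError("temperature must be positive")
    if "top_p" in options and not 0 < options["top_p"] <= 1:
        raise ValueError("top_p must be in (0, 1]")
    if "top_k" in options and options["top_k"] < 1:
        raise ValueError("top_k must be at least 1")
    return {"text": text, **options}


payload = build_chat_tts_input("Hello from chat-tts.", voice=2222, top_k=20)

# Sending the payload with the Replicate Python client might look like this;
# the model reference is assumed and "<version>" must be a real version ID:
# import replicate
# output = replicate.run("thlz998/chat-tts:<version>", input=payload)
```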

Capabilities

chat-tts can generate human-like speech from text, allowing for customization of the voice, tone, and other audio characteristics. It can be useful for applications that require text-to-speech functionality, such as audiobooks, virtual assistants, or multimedia content.

What can I use it for?

chat-tts could be used in projects that require text-to-speech capabilities, such as:

- Creating audiobooks or audiobook samples

- Developing virtual assistants or chatbots with voice output

- Generating spoken content for educational materials or podcasts

- Enhancing multimedia presentations or videos with narration

Things to try

With chat-tts, you can experiment with different voice settings, prompts, and sampling parameters to create unique speech outputs. For example, you could try generating speech with different emotional tones or accents by adjusting the voice and prompt inputs. Additionally, you could explore using the custom voice feature to generate more personalized speech outputs.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

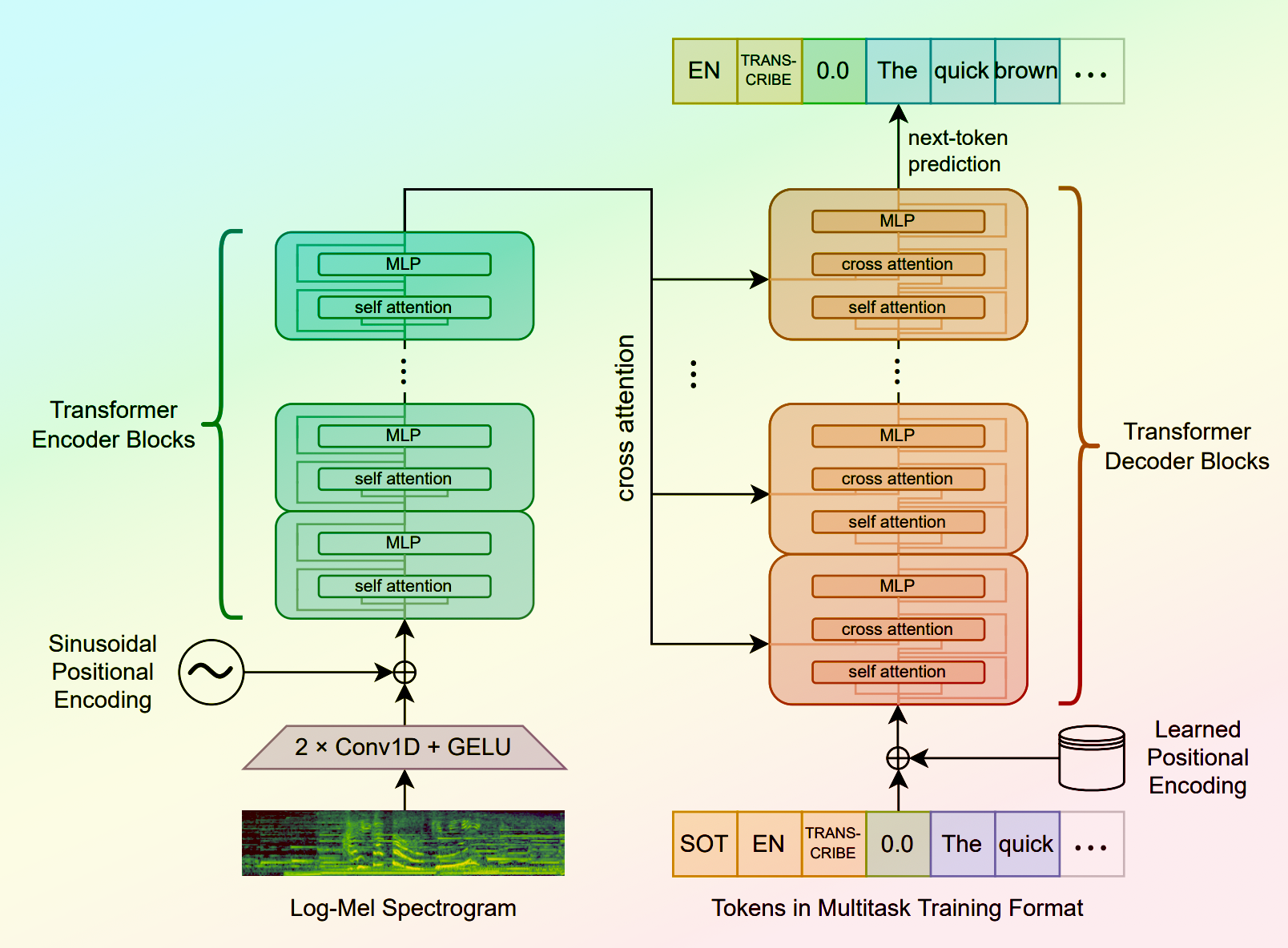

whisper

31.3K

Whisper is a general-purpose speech recognition model developed by OpenAI. It converts speech in audio to text and can optionally translate the text into English. Whisper is based on a large Transformer model trained on a diverse dataset of multilingual and multitask speech recognition data, which lets it handle a wide range of accents, background noise, and languages. Similar models like whisper-large-v3, incredibly-fast-whisper, and whisper-diarization offer various optimizations and additional features built on top of the core Whisper model.

Model inputs and outputs

Whisper takes an audio file as input and outputs a text transcription, optionally translated into English. The input audio can be in various formats, and the model supports a range of parameters to fine-tune the transcription, such as temperature, patience, and language.

Inputs

- Audio: The audio file to be transcribed.

- Model: The specific version of the Whisper model to use; currently only large-v3 is supported.

- Language: The language spoken in the audio, or None to perform language detection.

- Translate: A boolean flag to translate the transcription into English.

- Transcription: The format for the transcription output, such as "plain text".

- Initial Prompt: An optional initial text prompt to provide to the model.

- Suppress Tokens: A list of token IDs to suppress during sampling.

- Logprob Threshold: The minimum average log probability for a transcription to count as successful.

- No Speech Threshold: The threshold for considering a segment as silence.

- Condition on Previous Text: Whether to provide the previous output as a prompt for the next window.

- Compression Ratio Threshold: The maximum compression ratio for a transcription to count as successful.

- Temperature Increment on Fallback: The amount by which the temperature is increased when decoding fails to meet the specified thresholds.

Outputs

- Transcription: The text transcription of the input audio.

- Language: The detected language of the audio (if the language input is None).

- Tokens: The token IDs corresponding to the transcription.

- Timestamp: The start and end timestamps for each word in the transcription.

- Confidence: The confidence score for each word in the transcription.

Capabilities

Whisper can handle a wide range of accents, background noise, and languages. It transcribes audio and can optionally translate the transcription into English, which makes it useful for applications such as real-time captioning, meeting transcription, and audio-to-text conversion.

What can I use it for?

Whisper can be used in various applications that require speech-to-text conversion, such as:

- Captioning and subtitling: Automatically generate captions or subtitles for videos, improving accessibility for viewers.

- Meeting transcription: Transcribe audio recordings of meetings, interviews, or conferences for easy review and sharing.

- Podcast transcription: Convert audio podcasts to text, making the content more searchable and accessible.

- Language translation: Transcribe audio in one language and translate the text into English, enabling cross-language communication.

- Voice interfaces: Integrate Whisper into voice-controlled applications, such as virtual assistants or smart home devices.

Things to try

One interesting aspect of Whisper is its ability to handle a wide range of languages and accents. You can test the model on audio samples in different languages or with various kinds of background noise to see how it handles real-world scenarios. You can also explore how the input parameters, such as temperature, patience, and language detection, affect transcription quality and accuracy.
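The threshold and fallback inputs above work together: a decode that looks too repetitive or too unlikely is retried at a higher temperature. The sketch below is a simplified model of the temperature-fallback loop in OpenAI's open-source whisper package; the Replicate wrapper may implement it differently. The `decode` callable is a hypothetical stand-in for a real decoder, and the defaults (compression ratio 2.4, log probability -1.0, no-speech 0.6, increment 0.2) are assumed to mirror the open-source whisper defaults.

```python
import zlib
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class DecodeResult:
    text: str
    avg_logprob: float     # mean log probability of the decoded tokens
    no_speech_prob: float  # model's probability that the window is silence
    temperature: float


def compression_ratio(text: str) -> float:
    # Repetitive output (e.g. a decoding loop) compresses well: high ratio.
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def fallback_temperatures(increment: float) -> List[float]:
    # 0.0 first, then step up by `increment` until 1.0.
    if increment <= 0:
        raise ValueError("increment must be positive")
    steps = int(1.0 / increment + 1e-9)
    return [round(i * increment, 6) for i in range(steps + 1)]


def decode_with_fallback(
    decode: Callable[[float], DecodeResult],
    temperature_increment_on_fallback: float = 0.2,
    compression_ratio_threshold: float = 2.4,
    logprob_threshold: float = -1.0,
    no_speech_threshold: float = 0.6,
) -> DecodeResult:
    result: Optional[DecodeResult] = None
    for t in fallback_temperatures(temperature_increment_on_fallback):
        result = decode(t)
        needs_fallback = (
            compression_ratio(result.text) > compression_ratio_threshold  # too repetitive
            or result.avg_logprob < logprob_threshold                     # too unlikely
        )
        # Confident "no speech" plus low log probability is accepted as silence.
        if (result.no_speech_prob > no_speech_threshold
                and result.avg_logprob < logprob_threshold):
            needs_fallback = False
        if not needs_fallback:
            break
    assert result is not None
    return result  # if every temperature failed, this is the last attempt
```

Starting at temperature 0 keeps decoding deterministic in the common case and only adds randomness when the output looks degenerate.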


parler-tts

4.2K

parler-tts is a lightweight text-to-speech (TTS) model published on Replicate by cjwbw. It is trained on 10.5K hours of audio data and can generate high-quality, natural-sounding speech with controllable features like gender, background noise, speaking rate, pitch, and reverberation. parler-tts is related to models like voicecraft, whisper, and sabuhi-model, which also focus on speech-related tasks. Additionally, the parler_tts_mini_v0.1 model provides a lightweight version of the parler-tts system.

Model inputs and outputs

The parler-tts model takes two main inputs: a text prompt and a text description. The prompt is the text to be converted into speech, while the description controls the characteristics of the generated audio, such as the speaker's gender, pitch, speaking rate, and environmental factors.

Inputs

- Prompt: The text to be converted into speech.

- Description: A text description of the desired characteristics of the generated audio, such as the speaker's gender, pitch, speaking rate, and environmental factors.

Outputs

- Audio: The generated audio file in WAV format, which can be played back or further processed as needed.

Capabilities

The parler-tts model can generate high-quality, natural-sounding speech with a range of customizable features. Users control the gender, pitch, speaking rate, and environmental factors of the generated audio by crafting the text description. This flexibility makes it useful for applications such as audio production, virtual assistants, and language learning.

What can I use it for?

The parler-tts model can be used in a variety of applications that require text-to-speech functionality. Some potential use cases include:

- Audio production: The model can generate natural-sounding voice-overs, narrations, or audio content for videos, podcasts, or other multimedia projects.

- Virtual assistants: The model's ability to generate speech with customizable characteristics can be used to create more personalized and engaging virtual assistants.

- Language learning: The model can generate sample audio for language learning materials, giving learners high-quality examples of pronunciation and intonation.

- Accessibility: The model can generate audio versions of text content, improving accessibility for individuals with visual impairments or reading difficulties.

Things to try

One interesting aspect of the parler-tts model is the degree of control it offers over the output characteristics. Users can experiment with different text descriptions to explore the range of speech styles and environmental factors the model can produce. For example, try different descriptors for the speaker's gender, pitch, and speaking rate, or add details about the recording environment, such as the level of background noise or reverberation. By refining the text description, users can create a wide variety of speech samples for different applications.
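Because parler-tts is steered through free-form text, it can help to assemble the description from a few explicit attributes so that one can be varied at a time. The helper below is a hypothetical sketch: its name, attribute names, and sentence template are illustrative assumptions rather than a format the model requires, and the lowercase `prompt`/`description` payload keys are assumed from the input names above.

```python
def build_parler_input(prompt: str, gender: str = "female", pitch: str = "moderate",
                       pace: str = "moderate", noise: str = "very little",
                       room: str = "close-sounding") -> dict:
    """Hypothetical helper: turn a few speaker and recording attributes into a
    parler-tts description and pair it with the text to speak."""
    description = (
        f"A {gender} speaker with a {pitch} pitch speaks at a {pace} pace. "
        f"The recording has {noise} background noise and sounds {room}."
    )
    return {"prompt": prompt, "description": description}


# Change one attribute at a time to hear what each part of the description controls.
request = build_parler_input("Welcome to the show.", gender="male", pace="slightly fast",
                             noise="some", room="quite reverberant")
```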


xtts-v2

314

The xtts-v2 model is a multilingual text-to-speech voice cloning system from the Coqui TTS project, an open-source text-to-speech library; this Cog implementation is maintained by lucataco. It is similar to other text-to-speech models like whisperspeech-small and styletts2, which also generate speech from text.

Model inputs and outputs

The xtts-v2 model takes three main inputs: the text to synthesize, a speaker audio file, and the output language. It produces an audio file of the input text spoken in the voice of the provided speaker.

Inputs

- Text: The text to be synthesized.

- Speaker: The original speaker audio file (wav, mp3, m4a, ogg, or flv).

- Language: The output language for the synthesized speech.

Outputs

- Output: The synthesized audio file.

Capabilities

The xtts-v2 model can generate high-quality multilingual speech by cloning the voice of a provided speaker. This is useful for applications such as creating personalized audio content, improving accessibility, or enhancing virtual assistants.

What can I use it for?

The xtts-v2 model can be used to create personalized audio content, such as audiobooks, podcasts, or video narrations. It could also improve accessibility by generating audio versions of written content for users with visual impairments or other disabilities. Additionally, the model could be integrated into virtual assistants or chatbots to provide a more natural, human-like voice interface.

Things to try

One interesting thing to try with the xtts-v2 model is experimenting with different speaker audio files to hear how the synthesized voice changes. You could also generate audio in various languages and compare the results, or explore ways to integrate the model into your own applications to enhance the user experience.
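A small client-side check can catch unsupported speaker files before a request is sent. The sketch below validates the speaker file extension against the formats listed above and assembles an input dictionary. The helper name and the lowercase `text`/`speaker`/`language` keys are assumptions, and the `"fr"` language code in the example is illustrative; the accepted language values should be checked in the API spec.

```python
from pathlib import Path

# Speaker audio formats listed in the xtts-v2 Inputs above.
SUPPORTED_SPEAKER_FORMATS = {".wav", ".mp3", ".m4a", ".ogg", ".flv"}


def build_xtts_input(text: str, speaker_path: str, language: str) -> dict:
    """Hypothetical helper: validate and assemble an xtts-v2 request."""
    if not text.strip():
        raise ValueError("text must not be empty")
    suffix = Path(speaker_path).suffix.lower()
    if suffix not in SUPPORTED_SPEAKER_FORMATS:
        raise ValueError(f"unsupported speaker format: {suffix or '(none)'}")
    # A real client would typically upload the file (for example by passing an
    # open file handle); the path stays a string here so the sketch runs offline.
    return {"text": text, "speaker": speaker_path, "language": language}


request = build_xtts_input("Bonjour tout le monde.", "samples/voice.WAV", "fr")
```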


neon-tts

72

The neon-tts model is a Mycroft-compatible text-to-speech (TTS) plugin published on Replicate by awerks. It uses the Coqui AI Text-to-Speech library to support a wide range of languages, including all major European Union languages. According to the maintainer awerks, real-time factors (RTF) range from 0.05 on high-end AMD/Intel machines to 0.5 on a Raspberry Pi 4, which makes neon-tts suitable for applications ranging from desktop assistants to embedded systems.

Model inputs and outputs

The neon-tts model takes two inputs: a text string and a language code. The text is converted to speech, and the language code specifies the language of the input text. The model outputs a URI pointing to the generated audio file.

Inputs

- text: The text to be converted to speech.

- language: The language of the input text; defaults to "en" (English).

Outputs

- Output: A URI pointing to the generated audio file.

Capabilities

The neon-tts model generates speech from text in a wide range of languages, making it useful for applications targeting international audiences. Its low real-time factors, as low as 0.05, allow it to be integrated into systems from desktop assistants to embedded devices.

What can I use it for?

The neon-tts model can be used in a variety of applications that require text-to-speech functionality. Some potential use cases include:

- Virtual assistants: Integrate the neon-tts model into a virtual assistant to provide natural-sounding speech output.

- Accessibility tools: Use the model to convert written content to speech, making it more accessible for users with visual impairments or reading difficulties.

- Multimedia applications: Incorporate the neon-tts model into video, audio, or gaming applications to add voice narration or spoken dialogue.

- Educational resources: Create interactive learning materials that use the neon-tts model to read text aloud or provide audio instructions.

Things to try

One interesting aspect of the neon-tts model is its support for a wide range of languages, including less common ones like Irish and Maltese. This makes it a versatile tool for multilingual applications or content; you could generate speech in various languages to see how the model handles different linguistic structures and phonologies. Another notable feature is its low resource requirements, which let it run efficiently on devices like the Raspberry Pi. This makes it a compelling choice for embedded systems or edge computing applications where performance and portability matter.
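The real-time factor quoted above is the ratio of synthesis time to the duration of the audio produced, so multiplying RTF by the audio duration estimates how long synthesis takes; values below 1 mean faster than real time. A minimal sketch (the function names are hypothetical) that applies the two quoted figures to a 10-second clip:

```python
def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """RTF = time spent synthesizing / duration of the audio produced."""
    if audio_seconds <= 0:
        raise ValueError("audio duration must be positive")
    return synthesis_seconds / audio_seconds


def estimated_synthesis_seconds(audio_seconds: float, rtf: float) -> float:
    """Invert the definition: synthesis time = RTF * audio duration."""
    return rtf * audio_seconds


# The two RTF figures quoted for neon-tts, applied to a 10-second clip.
desktop = estimated_synthesis_seconds(10.0, 0.05)      # high-end AMD/Intel machine
raspberry_pi = estimated_synthesis_seconds(10.0, 0.5)  # Raspberry Pi 4
```

Under those figures, a 10-second clip would take roughly 0.5 seconds on a fast desktop and about 5 seconds on a Raspberry Pi 4, both still faster than real time.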
