demucs

Maintainer: cjwbw


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on Arxiv |


Model overview

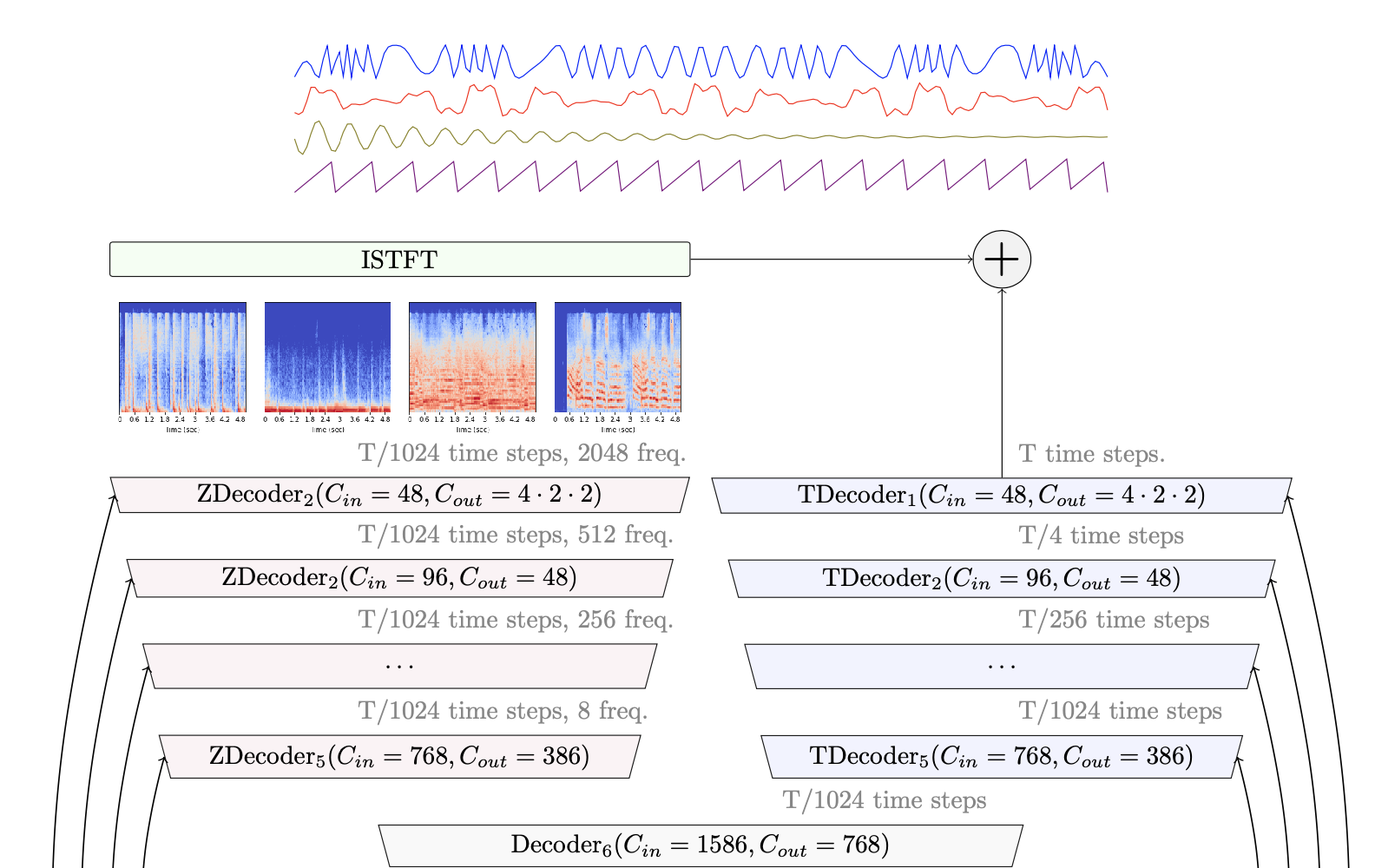

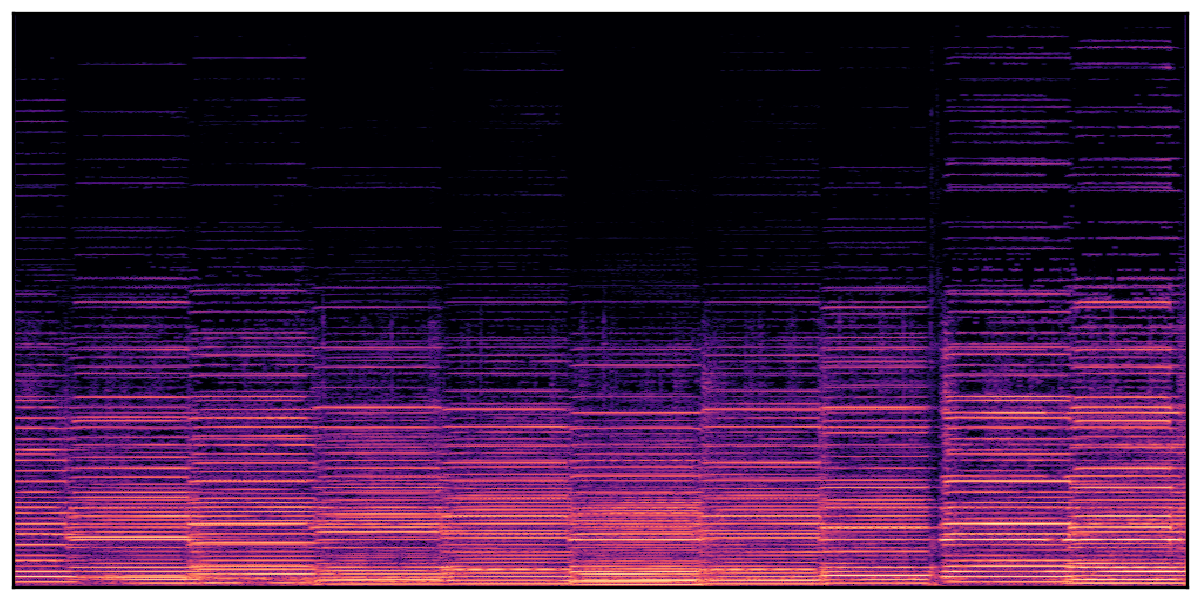

demucs is a state-of-the-art music source separation model developed by researchers at Facebook AI Research. It separates a mixed track into drums, bass, vocals, and other accompaniment. The latest version, Hybrid Transformer Demucs (v4), combines a spectrogram branch and a waveform branch, and replaces the innermost layers of the earlier hybrid architecture with a cross-domain Transformer encoder that uses self-attention within each domain and cross-attention between them. It builds on Hybrid Demucs (v3), which won the Sony MDX challenge. demucs belongs to the same family of source separation models as Wave-U-Net, Open-Unmix, and D3Net, and outperforms them on standard benchmarks.

Model inputs and outputs

demucs takes as input an audio file in a variety of formats including WAV, MP3, FLAC, and more. It outputs the separated audio stems for drums, bass, vocals, and other accompaniment as individual stereo WAV or MP3 files. Users can also choose to output just the vocals or other specific stems.

Inputs

- audio: The input audio file to be separated

- stem: The specific stem to separate (e.g. vocals, drums, bass) or "no_stem" to separate all stems

- model_name: The pre-trained model to use for separation, such as htdemucs, htdemucs_ft, or mdx_extra

- shifts: The number of random shifts to use for equivariant stabilization (the "shift trick": averaging predictions over randomly time-shifted copies of the input), which improves quality but increases inference time

- overlap: The amount of overlap between prediction windows

- clip_mode: The strategy for avoiding clipping in the output, either "rescale" or "clamp"

- float32: Whether to output the audio as 32-bit float instead of 16-bit integer

- mp3_bitrate: The bitrate to use when outputting the audio as MP3
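To make the parameter list concrete, here is a minimal Python sketch that assembles an input payload for this model. The helper name build_demucs_input, the sets of allowed values, and the defaults (picked to resemble the demucs command-line defaults) are assumptions for illustration, not the published API spec; the API spec page is the authoritative schema.

```python
# Hypothetical helper: builds and sanity-checks an input dict for the demucs
# model on Replicate. Field names follow the input list above; the allowed
# values and defaults are assumptions, not the official schema.
ALLOWED_MODELS = {"htdemucs", "htdemucs_ft", "mdx_extra"}
ALLOWED_STEMS = {"no_stem", "vocals", "drums", "bass", "other"}
ALLOWED_CLIP_MODES = {"rescale", "clamp"}


def build_demucs_input(audio_url, stem="no_stem", model_name="htdemucs",
                       shifts=1, overlap=0.25, clip_mode="rescale",
                       float32=False, mp3_bitrate=320):
    """Return an input dict, raising ValueError on obviously bad values."""
    if model_name not in ALLOWED_MODELS:
        raise ValueError(f"unknown model_name: {model_name!r}")
    if stem not in ALLOWED_STEMS:
        raise ValueError(f"unknown stem: {stem!r}")
    if clip_mode not in ALLOWED_CLIP_MODES:
        raise ValueError(f"unknown clip_mode: {clip_mode!r}")
    if shifts < 1:
        raise ValueError("shifts must be >= 1")
    # Assumption: overlap is a fraction of the prediction window.
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return {
        "audio": audio_url,
        "stem": stem,
        "model_name": model_name,
        "shifts": shifts,
        "overlap": overlap,
        "clip_mode": clip_mode,
        "float32": float32,
        "mp3_bitrate": mp3_bitrate,
    }


payload = build_demucs_input("https://example.com/song.mp3", stem="vocals")
print(payload["stem"], payload["model_name"])
```

With the official replicate Python client, a dict like this would be passed as the input argument of replicate.run, together with the model version listed on the API spec page.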

Outputs

- drums.wav: The separated drums stem

- bass.wav: The separated bass stem

- vocals.wav: The separated vocals stem

- other.wav: The separated other/accompaniment stem
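When scripting around the model it can help to know which files to expect. The sketch below assumes that "no_stem" returns the four stems listed above and that choosing a single stem follows the demucs command-line --two-stems convention (the chosen stem plus a no_<stem> remainder). The helper expected_outputs is hypothetical, and the Replicate wrapper may name its outputs differently.

```python
# Hypothetical helper: predicts output file names for a given `stem` setting.
# Assumption: a single stem mirrors the demucs CLI two-stem naming
# ("vocals" + "no_vocals"); this is not confirmed for the Replicate wrapper.
FOUR_STEMS = ("drums", "bass", "vocals", "other")


def expected_outputs(stem="no_stem", ext="wav"):
    """Return the list of file names we expect for this stem choice."""
    if stem == "no_stem":
        names = FOUR_STEMS
    elif stem in FOUR_STEMS:
        names = (stem, f"no_{stem}")
    else:
        raise ValueError(f"unknown stem: {stem!r}")
    return [f"{name}.{ext}" for name in names]


print(expected_outputs())
print(expected_outputs("vocals", "mp3"))
```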

Capabilities

demucs is a highly capable music source separation model that extracts individual instrument and vocal tracks from complex mixes with high accuracy. It outperforms many earlier state-of-the-art models on standard benchmarks such as MUSDB18. The latest model, Hybrid Transformer Demucs (v4), reaches 9.0 dB SDR on the MUSDB18-HQ test set, an improvement over Hybrid Demucs (v3) and other leading approaches.
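SDR (signal-to-distortion ratio) measures, in decibels, how close an estimated stem is to the true stem; higher is better. The minimal Python sketch below implements the basic definition, 10 * log10(sum(s^2) / sum((s - s_hat)^2)). Benchmark figures such as the 9.0 dB above come from the benchmark's own evaluation protocol, which aggregates over tracks and sources and may differ in detail, so treat this as an illustration of the metric rather than a way to reproduce that number.

```python
import math


def sdr_db(reference, estimate):
    """Basic signal-to-distortion ratio in dB for two equal-length signals."""
    if len(reference) != len(estimate):
        raise ValueError("signals must have the same length")
    signal = sum(s * s for s in reference)
    error = sum((s - e) ** 2 for s, e in zip(reference, estimate))
    if error == 0:
        return math.inf   # perfect reconstruction
    if signal == 0:
        return -math.inf  # silent reference but non-zero error
    return 10 * math.log10(signal / error)


print(sdr_db([1.0, -1.0, 1.0, -1.0], [0.9, -0.9, 0.9, -0.9]))
```

For example, an estimate that is uniformly 10% too quiet scores about 20 dB, because its error energy is 1/100 of the signal energy.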

What can I use it for?

demucs can be used for a variety of music production and audio engineering tasks. It enables users to isolate individual elements of a song, which is useful for tasks like:

- Karaoke or vocal removal - Removing the vocals to create a karaoke (instrumental) track, or isolating them for an a cappella version

- Remixing or mash-ups - Separating the drums, bass, and other elements to remix a song

- Audio post-production - Cleaning up or enhancing specific elements of a mix

- Music education - Isolating instrument tracks for practicing or study

- Music information retrieval - Analyzing the individual components of a song

The model's state-of-the-art performance and flexible interface make it a powerful tool for both professionals and hobbyists working with audio.

Things to try

Some interesting things to try with demucs include:

- Experimenting with the different pre-trained models to find the best fit for your audio

- Setting stem to a single source, such as vocals, to get a two-stem split (that source plus everything else), similar to the "two-stems" mode of the demucs command-line tool

- Utilizing the "shifts" option to improve separation quality, especially for complex mixes

- Applying the model to a diverse range of musical genres and styles to see how it performs
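The shifts option relies on what the demucs authors call the shift trick: run the model on several randomly time-shifted copies of the input, shift each prediction back, and average. The Python sketch below illustrates the idea on a plain list of mono samples with a stand-in separate function. The function name, the max_shift value in samples, and the fixed seed are assumptions for illustration; the real implementation works on multi-channel tensors and uses a much larger maximum shift.

```python
import random


def apply_with_shifts(separate, mix, shifts=1, max_shift=4, seed=0):
    """Average a separator's output over randomly time-shifted inputs.

    `separate` stands in for the real model: any callable mapping a list of
    samples to an equally long list. `max_shift` is in samples and is an
    assumption for this sketch, not a documented model parameter.
    """
    if shifts < 1:
        raise ValueError("shifts must be >= 1")
    rng = random.Random(seed)
    n = len(mix)
    # Pad with silence so every shifted window still contains the whole track.
    padded = [0.0] * max_shift + list(mix) + [0.0] * max_shift
    total = [0.0] * n
    for _ in range(shifts):
        offset = rng.randint(0, max_shift)
        # Start the window `offset` samples into the padded signal; mix[i]
        # lands at index max_shift - offset + i within the window.
        window = padded[offset:n + max_shift]
        estimate = separate(window)
        start = max_shift - offset
        # Undo the shift and accumulate the aligned estimate.
        for i in range(n):
            total[i] += estimate[start + i]
    return [value / shifts for value in total]


# A toy "model" that halves every sample, averaged over 4 shifts.
print(apply_with_shifts(lambda x: [0.5 * v for v in x], [1.0, 2.0, 3.0], shifts=4))
```

Each extra shift costs one more full pass of the model, which is why inference time grows with shifts.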

The maintainer, cjwbw, has also published several other audio and video models, such as audiosep, video-retalking, and voicecraft, that may be worth exploring.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

demucs


Demucs is an audio source separator created by Facebook Research. It can separate a mixed recording into its individual components, such as vocals, drums, and other instruments. Comparable models include Demucs Music Source Separation, Zero shot Sound separation by arbitrary query samples, and Separate Anything You Describe; all of them aim to extract individual sources from a mixed recording so that users can isolate and manipulate specific elements.

Model inputs and outputs

The Demucs model takes an audio file and lets the user customize several parameters: the number of parallel jobs, the stem to isolate, the Demucs model to use, and options for splitting, shifting, overlapping, and clipping. It outputs the processed audio in the user's chosen format, such as MP3 or WAV.

Inputs

- Audio: The file to be processed

- Model: The specific Demucs model to use for separation

- Stem: The audio stem to isolate (e.g., vocals, drums, bass)

- Jobs: The number of parallel jobs to use for separation

- Split: Whether to split the audio into chunks

- Shifts: The number of random shifts for equivariant stabilization

- Overlap: The amount of overlap between prediction windows

- Segment: The segment length to use for separation

- Clip mode: The strategy for avoiding clipping

- MP3 preset: The preset for the MP3 output

- WAV format: The format for the WAV output

- MP3 bitrate: The bitrate for the MP3 output

Outputs

- The processed audio file in the user's chosen format

Capabilities

Demucs separates audio into its individual components with high accuracy. This is useful for applications such as music production, sound design, and audio restoration: once specific elements of a mix are isolated, they are much easier to manipulate and enhance.

What can I use it for?

The Demucs model can be used in a wide range of projects, from music production and audio editing to sound design and post-production. For example, a musician could use Demucs to isolate the vocals from a recorded song and then adjust their volume or apply effects without affecting the other instruments. Similarly, a sound designer could split a music track into stems to reuse individual parts, such as the drums, in a video game or film.

Things to try

One interesting thing to try with Demucs is experimenting with the model options, such as the number of shifts and the overlap between prediction windows. These parameters can noticeably affect both separation quality and processing time, so adjusting them lets users find the right balance for their needs. Users could also combine Demucs with other audio processing tools, such as EQ or reverb, to further enhance the separated elements.
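The overlap parameter controls how a track is cut into prediction windows. The Python sketch below, a simplification on a mono list of samples, steps through the signal in strides of (1 - overlap) * segment, runs a stand-in separate callable on each window, and blends overlapping predictions with triangular weights. The names window_starts and overlap_add, the triangular weighting, and treating overlap as a fraction are assumptions for illustration; the sketch mainly shows why a larger overlap means more windows and therefore longer processing time.

```python
def window_starts(num_samples, segment, overlap=0.25):
    """Start indices of windows of length `segment` with fractional overlap."""
    if segment < 1:
        raise ValueError("segment must be >= 1")
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    stride = max(1, int((1 - overlap) * segment))
    return list(range(0, num_samples, stride))


def overlap_add(separate, mix, segment, overlap=0.25):
    """Run `separate` on overlapping windows and blend the results."""
    n = len(mix)
    out = [0.0] * n
    weight_sum = [0.0] * n
    # Triangular weights: largest mid-window, smallest at the edges, so each
    # sample leans on the windows where it sits farthest from a boundary.
    weights = [min(i + 1, segment - i) for i in range(segment)]
    for start in window_starts(n, segment, overlap):
        chunk = mix[start:start + segment]  # the last chunk may be shorter
        prediction = separate(chunk)
        for j, value in enumerate(prediction):
            out[start + j] += weights[j] * value
            weight_sum[start + j] += weights[j]
    # Every sample is covered by at least one window, so weight_sum > 0.
    return [o / w for o, w in zip(out, weight_sum)]


print(window_starts(10, 4, 0.25))
print(window_starts(10, 4, 0.5))
```

Raising overlap from 0.25 to 0.5 in this toy case increases the number of windows from four to five, and each window is one model call.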


audiosep


audiosep is a foundation model for open-domain sound separation with natural language queries, maintained on Replicate by cjwbw. It shows strong separation performance and impressive zero-shot generalization on tasks such as audio event separation, musical instrument separation, and speech enhancement. Other models from the same maintainer include video-retalking, openvoice, voicecraft, whisper-diarization, and depth-anything.

Model inputs and outputs

audiosep takes an audio file and a text description as inputs, and outputs the audio that matches the description, separated from the rest of the mix. The model processes audio at a 32 kHz sampling rate.

Inputs

- Audio File: The input audio file to be separated.

- Text: The textual description of the audio content to be separated.

Outputs

- Separated Audio: The output audio file containing the requested components.

Capabilities

audiosep can separate a wide range of audio content, from musical instruments to speech and environmental sounds, based on natural language descriptions. Its zero-shot generalization lets users separate sounds beyond those seen in the training data.

What can I use it for?

You can use audiosep for audio processing tasks such as music production, audio editing, speech enhancement, and audio analytics. Because the target is described in natural language, the separation is highly customizable. For example, you could isolate a specific instrument in a music recording, remove background noise from a speech recording, or extract environmental sounds from a complex audio scene.

Things to try

Try using audiosep in novel ways, such as isolating a specific sound effect from a movie soundtrack, extracting individual vocals from a choir recording, or separating a specific bird call from a nature recording. The model's flexibility and zero-shot capabilities allow for a wide range of creative and practical applications.


video-retalking


video-retalking is a system developed by researchers at Tencent AI Lab and Xidian University that performs audio-based lip synchronization and expression editing for talking-head videos. It builds on prior work such as Wav2Lip, PIRenderer, and GFP-GAN to form a pipeline that produces high-quality, lip-synced videos from talking-head footage and an audio track. Unlike voicecraft, which focuses on speech editing, or tokenflow, which aims at consistent video editing, video-retalking is designed specifically to synchronize lip movements with audio.

Model inputs and outputs

video-retalking takes two main inputs: a talking-head video and an audio file. It generates a new video whose facial expressions and lip movements are synchronized to the provided audio, so an existing talking-head video can be edited to match a new soundtrack.

Inputs

- Face: Input video file of a talking head.

- Input Audio: Input audio file to synchronize with the video.

Outputs

- Output: The generated video with synchronized lip movements and expressions.

Capabilities

video-retalking can generate high-quality, lip-synced videos in the wild, meaning it handles real-world footage without extensive pre-processing or manual alignment. It breaks the task into three sequential steps: generating a face with a canonical expression, synchronizing the lip movements to the audio, and enhancing the photo-realism of the final output.

What can I use it for?

video-retalking can be a powerful tool for content creators, video editors, and anyone looking to edit talking-head videos. Because it re-syncs the visuals to whatever audio it is given, it enables applications such as:

- Dubbing or re-voicing videos in different languages

- Adjusting the emotion or expression of a speaker

- Repairing or improving the lip sync in existing footage

- Creating animated avatars or virtual presenters

Things to try

One interesting aspect of video-retalking is its ability to control the expression of the upper face using pre-defined templates such as "smile" or "surprise", which allows more nuanced editing than lip sync alone. In addition, because the pipeline is sequential, each step can be examined and potentially fine-tuned for a specific use case.



openvoice


The openvoice model, developed by the team at MyShell, is a versatile instant voice-cloning model that can accurately clone a speaker's tone color and generate speech in multiple languages and accents. It offers flexible control over voice style, such as emotion and accent, as well as rhythm, pauses, and intonation. It also supports zero-shot cross-lingual voice cloning, generating speech in languages not present in its training dataset. openvoice builds on several open-source projects, including TTS, VITS, and VITS2. It has powered the instant voice-cloning feature of myshell.ai since May 2023 and has been used tens of millions of times by users worldwide.

Model inputs and outputs

Inputs

- Audio: The reference audio used to clone the tone color.

- Text: The text to be spoken by the cloned voice.

- Speed: The speed scale of the output audio.

- Language: The language of the audio to be generated.

Outputs

- Output: The generated audio in the cloned voice.

Capabilities

The openvoice model excels at accurate tone-color cloning, flexible voice-style control, and zero-shot cross-lingual voice cloning. It can generate speech in multiple languages and accents while giving granular control over voice style, including emotion and accent, as well as rhythm, pauses, and intonation.

What can I use it for?

The openvoice model can be used for applications such as:

- Instant voice cloning for audio, video, or gaming content

- Customized text-to-speech for assistants, chatbots, or audiobooks

- Multilingual voice acting and dubbing

- Voice conversion and style transfer

Things to try

With the openvoice model, you can experiment with different reference recordings to clone a wide range of voices and accents, and adjust the style parameters to create unique, expressive speech. You can also explore its cross-lingual capabilities by generating speech in languages not present in the training data.
