style-your-hair

Maintainer: cjwbw

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on arXiv |

Model overview

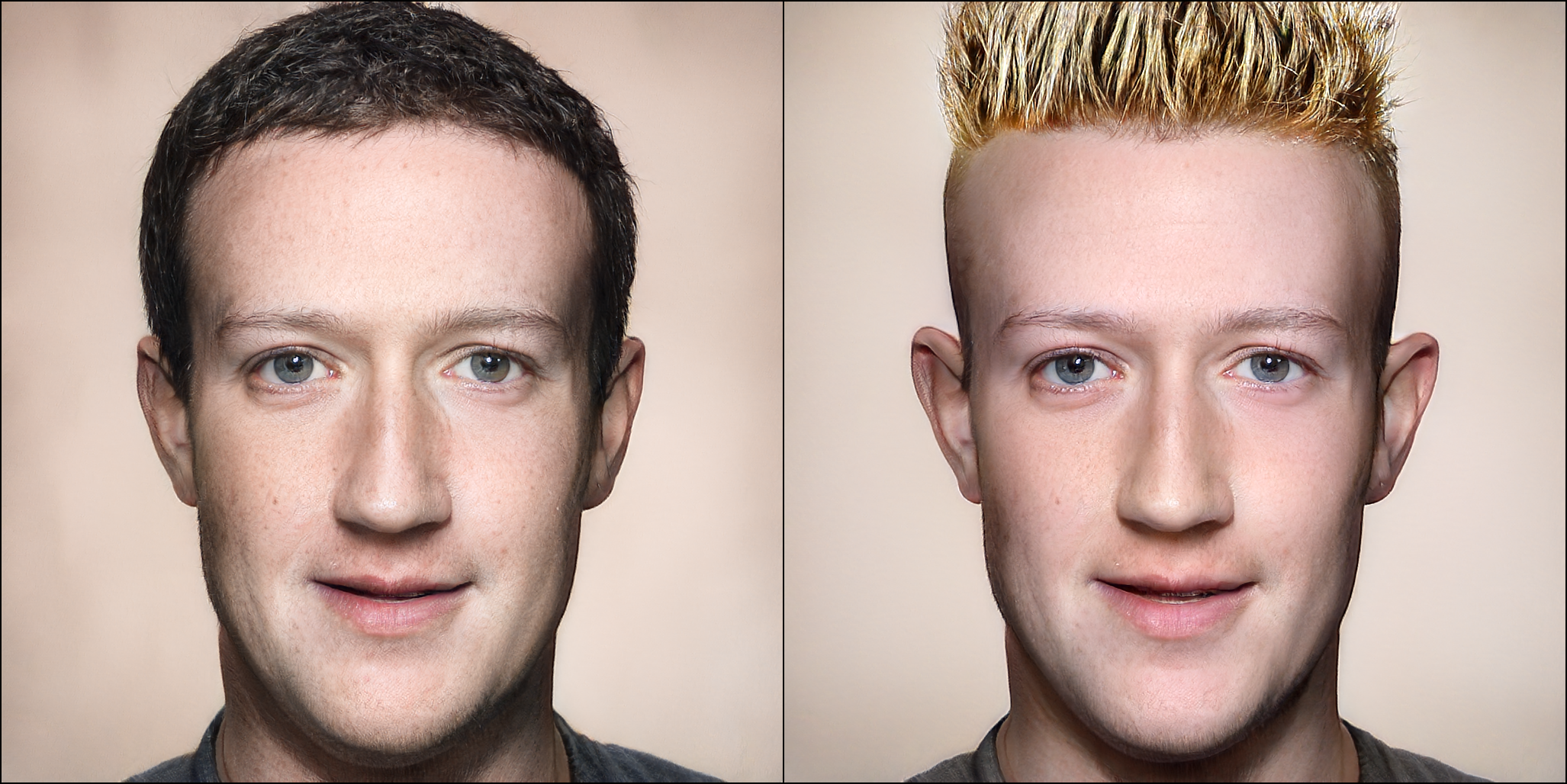

The style-your-hair model, maintained on Replicate by cjwbw, is a pose-invariant hairstyle transfer model: it transfers a hairstyle from one image onto the face in another, even when the two faces are in different poses. Unlike previous approaches, which assumed that the source and target images were aligned, this model uses latent optimization together with a local-style-matching loss to preserve the detailed texture of the target hairstyle under significant pose differences. It builds on StyleGAN-based hair editing methods such as Barbershop, optimizing in the generator's latent space rather than using a text-to-image diffusion model. Other models published by cjwbw include herge-style, anything-v4.0, and stable-diffusion-v2-inpainting.

Model inputs and outputs

The style-your-hair model takes two images as input: a source image containing a face and a target image containing the desired hairstyle. The model then seamlessly transfers the target hairstyle onto the source face, preserving the detailed texture and appearance of the target hairstyle even under significant pose differences.

Inputs

- Source Image: The image containing the face onto which the hairstyle will be transferred.

- Target Image: The image containing the desired hairstyle to be transferred.

Outputs

- Transferred Hairstyle Image: The output image with the target hairstyle applied to the source face.
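Calling the model programmatically goes through Replicate's predictions API. The sketch below only builds the HTTP request with Python's standard library. It assumes the input fields are named `source_image` and `target_image` (hypothetical names; check the model's API spec for the real ones) and that you supply the model version ID and your API token yourself.

```python
import json
import urllib.request

API_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(token, version, source_image_url, target_image_url):
    """Build (but do not send) a POST request that creates a prediction."""
    payload = {
        "version": version,  # model version ID, supplied by the caller
        "input": {
            # Hypothetical field names: verify them against the API spec.
            "source_image": source_image_url,  # face to restyle
            "target_image": target_image_url,  # image with the desired hairstyle
        },
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(req)` should return a JSON prediction object whose status you then poll until the transfer finishes.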

Capabilities

The style-your-hair model excels at transferring hairstyles between images with significant pose differences, a task that has historically been challenging. By leveraging a latent optimization technique and a local-style-matching loss, the model is able to preserve the detailed textures and appearance of the target hairstyle, resulting in high-quality, natural-looking transfers.
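To make the idea of a local-style-matching loss concrete, the sketch below compares Gram-matrix style statistics patch by patch inside a hair mask instead of once over the whole hair region, so local texture differences are not averaged away. This is a generic illustration, not the paper's loss: the function names, the square-patch scheme, and the use of raw feature maps (rather than the specific network features the authors use) are assumptions, and both feature maps are assumed to be spatially aligned already.

```python
import numpy as np


def gram(feat):
    """Gram matrix of a (C, N) feature matrix, normalized by N."""
    return feat @ feat.T / feat.shape[1]


def patch_style_loss(f_a, f_b, mask, patch=4):
    """Mean squared Gram difference over square patches that touch the mask.

    f_a, f_b: (C, H, W) feature maps, assumed spatially aligned.
    mask: (H, W) boolean hair mask.
    """
    _, h, w = f_a.shape
    total, count = 0.0, 0
    for i in range(0, h - patch + 1, patch):
        for j in range(0, w - patch + 1, patch):
            m = mask[i:i + patch, j:j + patch]
            if not m.any():
                continue  # skip patches with no hair pixels
            # Keep only masked pixels in this patch: shape (C, k).
            a = f_a[:, i:i + patch, j:j + patch][:, m]
            b = f_b[:, i:i + patch, j:j + patch][:, m]
            total += np.mean((gram(a) - gram(b)) ** 2)
            count += 1
    return total / count if count else 0.0
```

A single global Gram matrix would treat two hairstyles with the same overall color and texture statistics as identical even if, say, the curls sit in different places; comparing per patch keeps that spatial detail.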

What can I use it for?

The style-your-hair model can be used in a variety of applications, such as virtual hair styling, entertainment, and fashion. For example, users could experiment with different hairstyles on their own photos or create unique hairstyles for virtual avatars. Businesses in the beauty and fashion industries could also leverage the model to offer personalized hair styling services or incorporate hairstyle transfer features into their products.

Things to try

One interesting aspect of the style-your-hair model is its ability to preserve the local-style details of the target hairstyle, even under significant pose differences. Users could experiment with transferring hairstyles between images with varying facial poses and angles, and observe how the model maintains the intricate textures and structure of the target hairstyle. Additionally, users could try combining the style-your-hair model with other Replicate models, such as anything-v3.0 or portraitplus, to explore more creative and personalized hair styling possibilities.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

face-align-cog

The face-align-cog model is a Cog implementation of the face-alignment code from the stylegan-encoder project. It is designed to preprocess input images by aligning and cropping faces, which is often a necessary step before using them with other models. The model is similar to other face-processing tools like GFPGAN and style-your-hair, which focus on face restoration and hairstyle transfer, respectively.

Model inputs and outputs

The face-align-cog model takes a single input, an image URI, and outputs a new image URI with the face aligned and cropped.

Inputs

- Image: The input source image.

Outputs

- Output: The image with the face aligned and cropped.

Capabilities

The face-align-cog model can be used to preprocess input images by aligning and cropping the face. This can be useful when working with models that require well-aligned faces, such as face recognition or face generation models.

What can I use it for?

The face-align-cog model can be used as a preprocessing step for a variety of computer vision tasks that involve faces, such as face recognition, face generation, or facial analysis. It could be integrated into a larger pipeline or used as a standalone tool to prepare images for use with other models.

Things to try

You could try using the face-align-cog model to preprocess your own images before using them with other face-related models, such as the GFPGAN model for face restoration or the style-your-hair model for hairstyle transfer. This can help ensure that your input images are properly aligned and cropped, which can improve the performance of those downstream models.
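The alignment step in stylegan-encoder follows the FFHQ dataset recipe, which, as we understand it, derives an oriented square crop from the eye and mouth positions. Below is a minimal NumPy sketch of that geometry, assuming you already have the two eye centers and two mouth corners in image coordinates. The function name is hypothetical, and this is not face-align-cog's actual code.

```python
import numpy as np


def ffhq_crop_quad(eye_left, eye_right, mouth_left, mouth_right):
    """Return the 4 corners of an FFHQ-style oriented crop and its side length.

    Points are (x, y) image coordinates; "left" means the image's left side.
    """
    eye_left, eye_right = np.asarray(eye_left, float), np.asarray(eye_right, float)
    mouth_left, mouth_right = np.asarray(mouth_left, float), np.asarray(mouth_right, float)

    eye_avg = (eye_left + eye_right) * 0.5
    eye_to_eye = eye_right - eye_left
    mouth_avg = (mouth_left + mouth_right) * 0.5
    eye_to_mouth = mouth_avg - eye_avg

    # Horizontal crop axis: blend the eye line with the perpendicular
    # of the eye-to-mouth vector, then scale it to the face size.
    x = eye_to_eye - np.flipud(eye_to_mouth) * [-1, 1]
    x /= np.hypot(*x)
    x *= max(np.hypot(*eye_to_eye) * 2.0, np.hypot(*eye_to_mouth) * 1.8)
    y = np.flipud(x) * [-1, 1]  # vertical axis, perpendicular to x

    center = eye_avg + eye_to_mouth * 0.1
    quad = np.stack([center - x - y, center - x + y, center + x + y, center + x - y])
    side = np.hypot(*x) * 2
    return quad, side
```

For an upright face, the quad is an axis-aligned square; a tilted face yields a rotated quad. The image would then be resampled from this quad into a square output (1024×1024 for FFHQ). Landmark detection itself (dlib in the original project) is outside this sketch.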

anything-v4.0

anything-v4.0 is a high-quality, highly detailed anime-style Stable Diffusion model published on Replicate by cjwbw. It is part of a collection of similar models from cjwbw, including eimis_anime_diffusion, stable-diffusion-2-1-unclip, anything-v3-better-vae, and pastel-mix. These models are designed to generate detailed, anime-inspired images with high visual fidelity.

Model inputs and outputs

The anything-v4.0 model takes a text prompt as input and generates one or more images as output. The prompt can describe the desired scene, characters, or artistic style, and the model attempts to create a corresponding image. The model also accepts optional parameters such as seed, image size, number of outputs, and guidance scale to further control the generation process.

Inputs

- Prompt: The text prompt describing the desired image

- Seed: The random seed to use for generation (leave blank to randomize)

- Width: The width of the output image (maximum 1024x768 or 768x1024)

- Height: The height of the output image (maximum 1024x768 or 768x1024)

- Scheduler: The denoising scheduler to use for generation

- Num Outputs: The number of images to generate

- Guidance Scale: The scale for classifier-free guidance

- Negative Prompt: The prompt or prompts that should not guide the image generation

Outputs

- Image(s): One or more generated images matching the input prompt

Capabilities

The anything-v4.0 model can generate high-quality, detailed anime-style images from text prompts. It can create a wide range of scenes, characters, and artistic styles, from realistic to fantastical. Its outputs are known for their visual fidelity and attention to detail, making it a valuable tool for artists, designers, and creators working in the anime and manga genres.

What can I use it for?

The anything-v4.0 model can be used for a variety of creative and commercial applications, such as generating concept art, character designs, storyboards, and illustrations for anime, manga, and other media. It can also be used to create custom assets for games, animations, and other digital content. In addition, its ability to generate unique, detailed images from text prompts can support marketing and advertising work such as product visualization and personalized content creation.

Things to try

Experiment with a wide range of text prompts to see the diversity of images the model can generate. Try describing specific characters, scenes, or artistic styles, and observe how the model interprets and renders them. You can also adjust input parameters such as seed, image size, and guidance scale to fine-tune the generated outputs.
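The Guidance Scale input refers to classifier-free guidance. In the usual formulation, the sampler combines an unconditional noise prediction with a prompt-conditioned one at every denoising step; in diffusers-style pipelines, the Negative Prompt's embedding takes the place of the empty prompt in the unconditional branch. Below is a minimal sketch of that combination, assuming anything-v4.0 follows this standard scheme (the function name is illustrative).

```python
import numpy as np


def guided_noise(eps_uncond, eps_cond, guidance_scale):
    """Classifier-free guidance: extrapolate from the unconditional
    (or negative-prompt) prediction toward the prompt-conditioned one."""
    eps_uncond = np.asarray(eps_uncond, float)
    eps_cond = np.asarray(eps_cond, float)
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)
```

A scale of 1 reproduces the conditional prediction, while larger values push each step further away from the unconditional (or negative-prompt) prediction, which typically tightens prompt adherence at the cost of variety.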

hairclip

The hairclip model, developed by wty-ustc, designs hair using both text and reference image inputs. It supports editing hairstyle, hair color, or both, making it a versatile tool for hair styling and customization. It builds on earlier work such as StyleCLIP, which demonstrated the power of combining CLIP and StyleGAN for text-driven image manipulation, and was later followed by HairCLIPv2.

Model inputs and outputs

The hairclip model takes two main inputs: an image and a text description. The image can be of any face, which the model uses as a reference for editing the hairstyle and/or color. The text description can specify the desired hairstyle, hair color, or both.

Inputs

- Image: The input image, which can be of any face. The model uses it as a reference for editing the hairstyle and/or color.

- Editing Type: Whether to edit the hairstyle, the hair color, or both.

- Hairstyle Description: A text prompt describing the desired hairstyle.

- Color Description: A text prompt describing the desired hair color.

Outputs

- Edited Image: The output image with the hair edited according to the provided inputs.

Capabilities

The hairclip model combines text-based and image-based hair editing. It can manipulate hairstyles, hair colors, or both, allowing users to change a person's appearance in a natural and realistic way. The model relies on CLIP and StyleGAN to produce high-quality, photorealistic results.

What can I use it for?

The hairclip model can be used for a variety of creative and practical applications. For example, you could experiment with different hairstyles and colors on yourself or your friends, or create unique, personalized avatars and characters for games, art projects, or social media. Businesses in the beauty and fashion industries could also use the model to offer virtual hair styling services or to generate product visualization imagery.

Things to try

Experiment with different combinations of text prompts and reference images. Start with a simple hairstyle description to see how the model interprets it, then add a reference image to see how the two inputs are combined. You could also try more complex or creative text prompts, or edit both the hairstyle and the color to see the full range of the model's capabilities.
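HairCLIP can accept either a text description or a reference image because CLIP embeds both into a shared space. A common way to steer an edited image toward such a condition, used in StyleCLIP-style methods, is a cosine-distance loss between CLIP embeddings. The sketch below shows only that loss, with plain vectors standing in for real CLIP embeddings; HairCLIP's actual training objectives include other terms and may differ in detail.

```python
import numpy as np


def clip_loss(image_emb, cond_emb):
    """1 - cosine similarity between an edited image's embedding and a
    condition embedding (from either a text prompt or a reference image)."""
    a = np.asarray(image_emb, float)
    b = np.asarray(cond_emb, float)
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    return 1.0 - float(a @ b)
```

The loss ranges from 0 (embeddings point the same way) to 2 (opposite directions), and the same function works whether the condition came from a hairstyle description or a reference photo.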

wavyfusion

wavyfusion is a Dreambooth-trained AI model that can generate diverse images, ranging from photographs to paintings. It is published on Replicate by cjwbw, who has also published similar models such as analog-diffusion and portraitplus, as well as the dreambooth and dreambooth-batch models. These models demonstrate the versatility of Dreambooth, a technique for fine-tuning a custom Stable Diffusion model on a small set of images.

Model inputs and outputs

wavyfusion is a text-to-image model that generates images from a prompt. It takes a variety of inputs, including the prompt, seed, image size, and number of outputs, and returns a set of generated images that match the prompt.

Inputs

- Prompt: The text description of the desired image

- Seed: A random seed value to control image generation

- Width/Height: The desired dimensions of the output image

- Num Outputs: The number of images to generate

- Scheduler: The algorithm used to generate the images

- Guidance Scale: The scale for classifier-free guidance

- Negative Prompt: Things you do not want to see in the output

- Prompt Strength: The strength of the prompt when using an initial image

- Num Inference Steps: The number of denoising steps to take

Outputs

- A set of generated images that match the provided prompt

Capabilities

wavyfusion can generate a wide variety of images, from realistic photographs to creative and artistic renderings. Its diverse training data lets it produce images in many different styles and genres, making it a versatile tool for creative applications.

What can I use it for?

You can use wavyfusion for creative projects such as:

- Generating concept art or illustrations for stories, games, or other media

- Producing unique, personalized images for marketing, branding, or social media

- Experimenting with different art styles and techniques

- Visualizing ideas or concepts that are difficult to express through traditional means

Things to try

One interesting aspect of wavyfusion is its ability to blend different artistic styles and techniques. Try prompts that combine elements from various genres, such as "a surrealist portrait of a person in the style of impressionist painting". This can lead to unexpected and visually striking results.
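The Prompt Strength input is described only as the strength of the prompt when an initial image is used. Assuming wavyfusion follows the common diffusers img2img convention, this value determines what fraction of the Num Inference Steps actually runs: the initial image is noised to an intermediate timestep, and only the remaining steps are denoised. A sketch of that bookkeeping (the function name is hypothetical):

```python
def img2img_schedule(num_inference_steps, strength):
    """Return (skipped_steps, steps_run) for an img2img denoising schedule,
    following the diffusers-style strength convention (an assumption here)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    # Number of steps whose noise level the initial image is pushed to.
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    # The schedule starts partway through, skipping the noisiest steps.
    t_start = max(num_inference_steps - init_timestep, 0)
    return t_start, num_inference_steps - t_start
```

For example, with 50 steps and a strength of 0.75, 37 steps run. A strength of 1.0 discards the initial image entirely and runs the full schedule, while values near 0 leave it almost unchanged.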
