wsrglow

Maintainer: zkx06111


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| Github link | View on Github |
| Paper link | View on Arxiv |


Model overview

wsrglow is a Glow-based waveform generative model for audio super-resolution, maintained on Replicate by zkx06111. It upsamples audio to 2x the input sample rate, the same task that AudioSR addresses for audio and that ARBSR addresses for images. The model is based on the Interspeech 2021 paper "WSRGlow: A Glow-based Waveform Generative Model for Audio Super-Resolution".

Model inputs and outputs

wsrglow takes a low-sample-rate audio file in WAV format as input and generates a higher-resolution version of the same audio, also as a WAV file.

Inputs

- input: Low-sample rate input file in .wav format

Outputs

- file: High-resolution output file in .wav format

- text: (not used)
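
To make the input/output relationship concrete, the sketch below builds a small mono 16-bit WAV file in memory and doubles its sample rate by plain linear interpolation. This is a naive baseline for illustration only, not WSRGlow and not the Replicate API: the helper names `make_sine_wav` and `naive_upsample_2x` are hypothetical, and the mono 16-bit PCM format is an assumption. A generative model aims to reconstruct high-frequency content that interpolation like this cannot recover.

```python
import io
import math
import struct
import wave


def make_sine_wav(sample_rate: int, millis: int = 100, freq: float = 440.0) -> bytes:
    """Return a mono 16-bit PCM WAV file (as bytes) containing a sine tone."""
    n = sample_rate * millis // 1000
    samples = [
        int(0.5 * 32767 * math.sin(2 * math.pi * freq * i / sample_rate))
        for i in range(n)
    ]
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        w.setframerate(sample_rate)
        w.writeframes(struct.pack(f"<{n}h", *samples))
    return buf.getvalue()


def naive_upsample_2x(wav_bytes: bytes) -> bytes:
    """Double the sample rate by linear interpolation (baseline, NOT WSRGlow)."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as r:
        if r.getnchannels() != 1 or r.getsampwidth() != 2:
            raise ValueError("this sketch only handles mono 16-bit PCM")
        rate = r.getframerate()
        n = r.getnframes()
        x = struct.unpack(f"<{n}h", r.readframes(n))

    y = []
    for i, s in enumerate(x):
        nxt = x[i + 1] if i + 1 < n else s  # repeat the last sample at the end
        y.append(s)
        y.append((s + nxt) // 2)  # midpoint between neighbouring samples

    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(rate * 2)  # output runs at twice the input rate
        w.writeframes(struct.pack(f"<{len(y)}h", *y))
    return buf.getvalue()


low = make_sine_wav(8000)
high = naive_upsample_2x(low)
with wave.open(io.BytesIO(high), "rb") as r:
    print(r.getframerate(), r.getnframes())
```

The output file has twice the frame rate and twice the number of frames, so its duration is unchanged.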

Capabilities

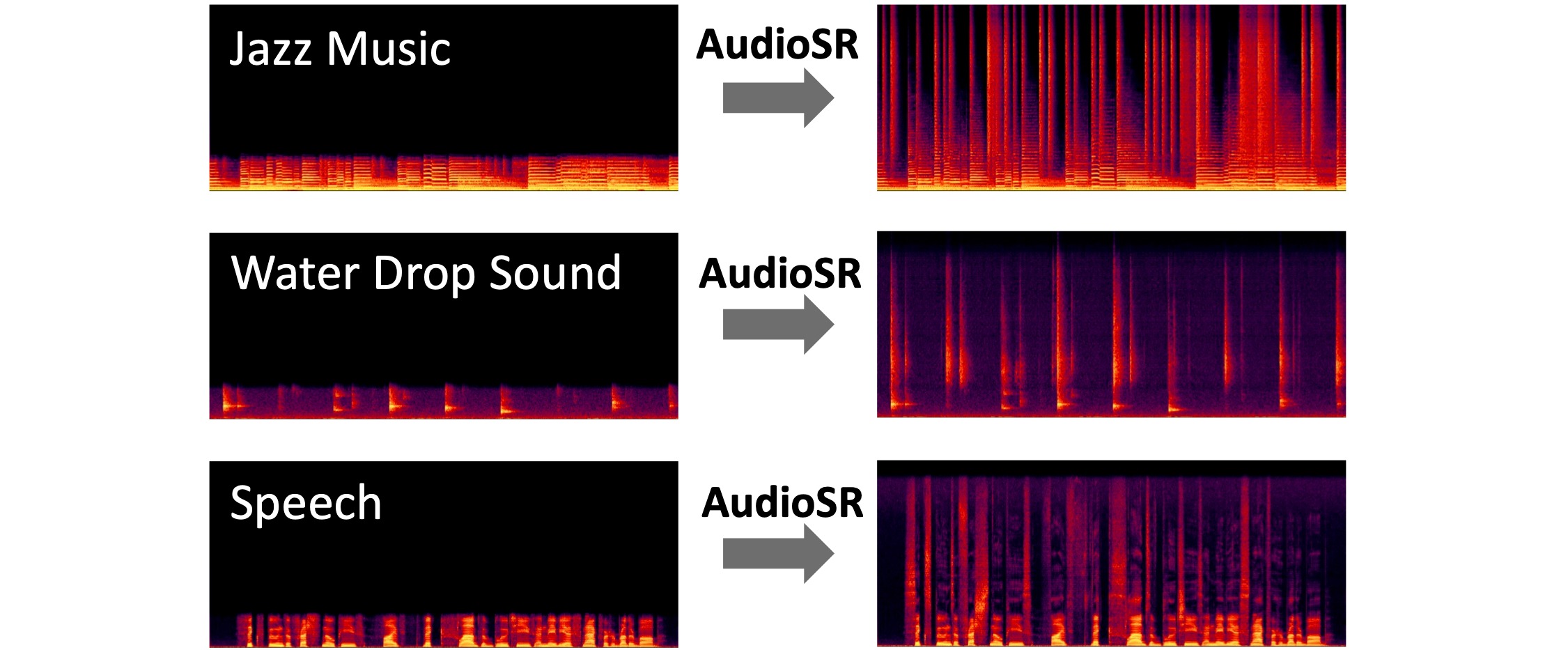

wsrglow upsamples audio by 2x, aiming to restore the high-frequency detail that a low-sample-rate recording lacks while keeping the existing content intact. It is built on Glow, a flow-based generative model. The model is described as handling a variety of audio content, from speech to music.

What can I use it for?

The wsrglow model can be useful for a range of audio processing applications that require high-quality upsampling, such as enhancing the resolution of audio recordings, improving the fidelity of music tracks, or processing low-quality speech samples. It could be particularly valuable in scenarios where audio quality is important, like content production, audio engineering, or multimedia applications.

Things to try

Experiment with different types of audio input, from speech to music, to see how wsrglow performs. You can also vary the input sample rate to observe how the upsampling quality changes. Additionally, you could explore ways to integrate wsrglow into your own audio processing pipelines or workflows.
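
One way to prepare inputs at several sample rates is to decimate a higher-rate recording yourself. The sketch below is a minimal illustration under stated assumptions: `decimate` and `make_variants` are hypothetical helpers, the 16 kHz base rate is arbitrary, and block averaging is only a crude low-pass filter (a real pipeline would use a proper anti-aliasing filter before downsampling).

```python
def decimate(samples, factor):
    """Reduce the sample count by `factor`, averaging each block of samples.

    Averaging acts as a crude low-pass filter; trailing samples that do not
    fill a whole block are dropped.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    usable = len(samples) - len(samples) % factor
    return [
        sum(samples[i:i + factor]) // factor
        for i in range(0, usable, factor)
    ]


def make_variants(samples, base_rate, factors=(1, 2, 4)):
    """Return {sample_rate: samples} for each decimation factor."""
    return {base_rate // f: decimate(samples, f) for f in factors}


# Toy signal standing in for one second of 16 kHz audio.
signal = [i % 100 for i in range(16000)]
variants = make_variants(signal, base_rate=16000)
for rate, data in sorted(variants.items()):
    print(rate, len(data))
```

Each variant can then be written to a WAV file and passed to the model, so that outputs from different input rates can be compared by ear.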

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

audio-super-resolution

audio-super-resolution is a versatile audio super-resolution model developed by Replicate creator nateraw. It is capable of upscaling various types of audio, including music, speech, and environmental sounds, to higher fidelity across different sampling rates. This model can be seen as complementary to other audio-focused models like whisper-large-v3, which focuses on speech recognition, and salmonn, which handles a broader range of audio tasks.

Model inputs and outputs

audio-super-resolution takes in an audio file and generates an upscaled version of the input. The model supports both single file processing and batch processing of multiple audio files.

Inputs

- Input Audio File: The audio file to be upscaled, which can be in various formats.

- Input File List: A file containing a list of audio files to be processed in batch.

Outputs

- Upscaled Audio File: The super-resolved version of the input audio, saved in the specified output directory.

Capabilities

audio-super-resolution can handle a wide variety of audio types, from music and speech to environmental sounds, and it can work with different sampling rates. The model is capable of enhancing the fidelity and quality of the input audio, making it a useful tool for tasks such as audio restoration, content creation, and audio post-processing.

What can I use it for?

The audio-super-resolution model can be leveraged in various applications where high-quality audio is required, such as music production, podcast editing, sound design, and audio archiving. By upscaling lower-quality audio files, users can create more polished and professional-sounding audio content. Additionally, the model's versatility makes it suitable for use in creative projects, content creation workflows, and audio-related research and development.

Things to try

To get started with audio-super-resolution, you can experiment with processing both individual audio files and batches of files. Try using the model on a variety of audio types, such as music, speech, and environmental sounds, to see how it performs. Additionally, you can adjust the model's parameters, such as the DDIM steps and guidance scale, to explore the trade-offs between audio quality and processing time.

audiosr-long-audio

audiosr-long-audio is a versatile audio super-resolution model created by Sakemin. It can upsample audio files to 48kHz, with the capability to handle longer audio inputs compared to other models. This model is part of Sakemin's suite of audio-related models, which includes the audio-super-resolution model, the musicgen-fine-tuner model, and the musicgen-remixer model.

Model inputs and outputs

The audiosr-long-audio model accepts several key inputs, including an audio file to be upsampled, a random seed, the number of DDIM (Denoising Diffusion Implicit Models) inference steps, and a guidance scale value. The model outputs a URI pointing to the upsampled audio file.

Inputs

- Input File: The audio file to be upsampled, provided as a URI.

- Seed: A random seed value, which can be left blank to randomize the seed.

- Ddim Steps: The number of DDIM inference steps, with a default of 50 and a range of 10 to 500.

- Guidance Scale: The scale for classifier-free guidance, with a default of 3.5 and a range of 1 to 20.

- Truncated Batches: A boolean flag to enable truncating batches to 5.12 seconds, which is essential for handling long audio files due to memory constraints.

Outputs

- Output: The upsampled audio file, provided as a URI.

Capabilities

The audiosr-long-audio model can effectively upsample audio files to a higher 48kHz sample rate, preserving the quality and fidelity of the original audio. This makes it a useful tool for enhancing the listening experience of various audio content, such as music, podcasts, or voice recordings.

What can I use it for?

The audiosr-long-audio model can be employed in a variety of audio-related projects and applications. For example, musicians and audio engineers could use it to upscale their recorded tracks, improving the overall sound quality. Content creators, such as podcasters or video producers, could also leverage this model to enhance the audio in their productions. Additionally, the model's ability to handle longer audio inputs makes it suitable for processing larger audio files, such as full-length albums or long-form interviews.

Things to try

One interesting aspect of the audiosr-long-audio model is its flexibility in handling different audio file formats and lengths. Experiment with various types of audio content, from music to speech, to see how the model performs. Additionally, try adjusting the DDIM steps and guidance scale parameters to find the optimal settings for your specific use case.
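
The parameter ranges and the 5.12-second batch length described for audiosr-long-audio can be sketched as a small local validation step. The field names (`input_file`, `ddim_steps`, and so on) are assumptions derived from the labels in the description, not a confirmed API schema, and the default for `truncated_batches` is not stated in the source, so the value used here is a placeholder.

```python
from dataclasses import dataclass
from typing import Optional

CHUNK_MS = 5120            # 5.12-second batches, as stated in the description
OUTPUT_RATE_HZ = 48_000    # the model upsamples to 48kHz


@dataclass
class UpsampleRequest:
    """Hypothetical container for the inputs listed in the description."""
    input_file: str                  # URI of the audio to upsample
    seed: Optional[int] = None       # None means "randomize the seed"
    ddim_steps: int = 50             # documented default, range 10 to 500
    guidance_scale: float = 3.5      # documented default, range 1 to 20
    truncated_batches: bool = False  # default is an assumption

    def __post_init__(self) -> None:
        if not 10 <= self.ddim_steps <= 500:
            raise ValueError("ddim_steps must be between 10 and 500")
        if not 1 <= self.guidance_scale <= 20:
            raise ValueError("guidance_scale must be between 1 and 20")


def num_chunks(duration_ms: int) -> int:
    """Number of 5.12-second chunks needed to cover the audio (ceiling)."""
    return -(-duration_ms // CHUNK_MS)


def samples_per_chunk(rate_hz: int = OUTPUT_RATE_HZ) -> int:
    """Samples in one 5.12-second chunk at the given rate."""
    return rate_hz * CHUNK_MS // 1000


request = UpsampleRequest(input_file="https://example.com/interview.wav",
                          truncated_batches=True)
print(num_chunks(60_000), samples_per_chunk())
```

A one-minute file therefore needs 12 chunks, and each chunk holds 245,760 samples at 48kHz, which gives a sense of why long inputs are split to limit memory use.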

arbsr

The arbsr model, developed by Longguang Wang, is a plug-in module that extends a baseline super-resolution (SR) network to a scale-arbitrary SR network with a small additional cost. This allows the model to perform non-integer and asymmetric scale factor SR, while maintaining state-of-the-art performance for integer scale factors. This is useful for real-world applications where arbitrary zoom levels are required, beyond the typical integer scale factors. The arbsr model is related to other SR models like GFPGAN, ESRGAN, SuPeR, and HCFlow-SR, which focus on various aspects of image restoration and enhancement.

Model inputs and outputs

Inputs

- image: The input image to be super-resolved

- target_width: The desired width of the output image, which can be 1-4 times the input width

- target_height: The desired height of the output image, which can be 1-4 times the input height

Outputs

- Output: The super-resolved image at the desired target size

Capabilities

The arbsr model is capable of performing scale-arbitrary super-resolution, including non-integer and asymmetric scale factors. This allows for more flexible and customizable image enlargement compared to typical integer-only scale factors.

What can I use it for?

The arbsr model can be useful for a variety of real-world applications where arbitrary zoom levels are required, such as image editing, content creation, and digital asset management. By enabling non-integer and asymmetric scale factor SR, the model provides more flexibility and control over the final image resolution, allowing users to zoom in on specific details or adapt the image size to their specific needs.

Things to try

One interesting aspect of the arbsr model is its ability to handle continuous scale factors, which can be explored using the interactive viewer provided by the maintainer. This allows you to experiment with different zoom levels and observe the model's performance in real-time.
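
The 1-4x limits on `target_width` and `target_height` imply a pair of independent scale factors. A minimal sketch of that arithmetic follows; the function `scale_factors` is a hypothetical helper for checking a request before sending it, not part of arbsr itself.

```python
def scale_factors(width, height, target_width, target_height):
    """Return (horizontal, vertical) scale factors, checking the 1-4x limits."""
    sx = target_width / width
    sy = target_height / height
    for name, s in (("width", sx), ("height", sy)):
        if not 1 <= s <= 4:
            raise ValueError(f"target {name} must be 1-4 times the input {name}")
    return sx, sy


# A non-integer, asymmetric request: 2.5x horizontally, 1.5x vertically.
print(scale_factors(100, 80, 250, 120))
```

Here the two factors differ and neither is an integer, which is the case that a fixed 2x or 4x SR network cannot serve directly.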

resshift

The resshift model is an efficient diffusion model for image super-resolution, developed by the Replicate team member cjwbw. It is designed to upscale and enhance the quality of low-resolution images by leveraging a residual shifting technique. This model can be particularly useful for tasks that require generating high-quality, detailed images from their lower-resolution counterparts. Related models include real-esrgan, analog-diffusion, and clip-guided-diffusion.

Model inputs and outputs

The resshift model accepts a grayscale input image, a scaling factor, and an optional random seed. It then generates a higher-resolution version of the input image, preserving the original content and details while enhancing the overall quality.

Inputs

- Image: A grayscale input image

- Scale: The factor to scale the image by (default is 4)

- Seed: A random seed (leave blank to randomize)

Outputs

- Output: A high-resolution version of the input image

Capabilities

The resshift model is capable of generating detailed, upscaled images from low-resolution inputs. It leverages a residual shifting technique to efficiently improve the resolution and quality of the output, without introducing significant artifacts or distortions. Models that address similar tasks include stable-diffusion-high-resolution and supir.

What can I use it for?

The resshift model can be used for a variety of applications that require generating high-quality images from low-resolution inputs. This includes tasks such as photo restoration, image upscaling for digital displays, and enhancing the visual quality of low-resolution media. The model's efficient and effective upscaling capabilities make it a valuable tool for content creators, designers, and anyone working with images that need to be displayed at higher resolutions.

Things to try

Experiment with the resshift model by providing a range of input images with varying levels of resolution and detail. Observe how the model upscales the output while preserving the original content and features. Additionally, try adjusting the scaling factor to see how it affects the level of detail and sharpness in the final image.
