Sakemin

Models by this creator

musicgen-fine-tuner

38

musicgen-fine-tuner is a Cog implementation of MusicGen, a simple and controllable music generation model developed by Meta. Unlike MusicLM, MusicGen can generate diverse music without requiring a self-supervised semantic representation. musicgen-fine-tuner lets users fine-tune MusicGen on their own datasets so that the generated music fits their specific needs.

Model inputs and outputs

The musicgen-fine-tuner model takes a prompt describing the desired music, an optional input audio file to influence the melody, and configuration parameters such as duration, temperature, and continuation options. It outputs a WAV or MP3 audio file containing the generated music.

Inputs

- **Prompt**: A description of the music you want to generate.
- **Input Audio**: An audio file that will influence the generated music. The model can either continue the melody of the input audio or mimic its overall style.
- **Duration**: The duration of the generated audio in seconds.
- **Continuation**: Whether the generated music should continue the input audio's melody or mimic its overall style.
- **Continuation Start/End**: The start and end times of the input audio to use for continuation.
- **Multi-Band Diffusion**: Whether to use multi-band diffusion when decoding the EnCodec tokens (only works with non-stereo models).
- **Normalization Strategy**: The strategy for normalizing the output audio.
- **Temperature**: Controls the "conservativeness" of the sampling process, with higher values producing more diverse outputs.
- **Classifier Free Guidance**: Increases the influence of inputs on the output, producing lower-variance outputs that adhere more closely to the inputs.

Outputs

- **Audio File**: A WAV or MP3 audio file containing the generated music.

Capabilities

The musicgen-fine-tuner model can generate diverse and customizable music based on user prompts and input audio. It can produce a wide range of musical styles and genres, from classical to electronic, and can be fine-tuned to specialize in specific styles or themes. Unlike more complex models such as MusicLM, the underlying MusicGen model is a single-stage, auto-regressive Transformer that generates all the necessary audio components in a single pass, which makes generation faster and more efficient.

What can I use it for?

The musicgen-fine-tuner model can be used for a variety of applications, such as:

- **Soundtrack and background music generation**: Generate custom music for videos, games, or other multimedia projects.
- **Music composition assistance**: Generate musical ideas or inspiration for human composers and musicians.
- **Audio content creation**: Create custom audio content for podcasts, radio, or other audio-based platforms.
- **Music exploration and experimentation**: Fine-tune the model on your own musical datasets to explore new styles and genres.

Things to try

To get the most out of the musicgen-fine-tuner model, experiment with different prompts, input audio, and configuration settings. Try generating music in a variety of styles and genres, and explore the effects of adjusting parameters like temperature and classifier free guidance. You can also fine-tune the model on your own datasets to see how it performs on specific types of music or audio content.
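
The Temperature and Classifier Free Guidance inputs described above can be illustrated with a small numeric sketch. This is not the model's own code: it assumes the standard formulations (dividing logits by the temperature before the softmax, and extrapolating from unconditional toward conditional logits for guidance), and the function names `softmax` and `apply_guidance` are made up for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def apply_guidance(cond_logits, uncond_logits, scale):
    """Standard classifier-free guidance (assumed form, not taken from the model).

    A scale of 1 returns the conditional logits unchanged; larger scales
    push the result further from the unconditional prediction.
    """
    return [u + scale * (c - u) for c, u in zip(cond_logits, uncond_logits)]


logits = [2.0, 1.0, 0.0]
conservative = softmax(logits, temperature=0.5)  # sharper distribution
diverse = softmax(logits, temperature=2.0)       # flatter distribution
guided = apply_guidance([1.0, 2.0], [0.5, 0.5], scale=3.0)
```

A lower temperature concentrates probability on the most likely token, while a higher guidance scale amplifies whatever the conditioning (prompt or input audio) changed relative to the unconditional prediction.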

Updated 9/19/2024

musicgen-stereo-chord

37

musicgen-stereo-chord is a Cog implementation of Meta's MusicGen Melody model, created by sakemin. It generates music conditioned on audio-based or text-based chord progressions. Unlike the original MusicGen model, which generates music from a prompt or melody, it constrains the output to a given chord sequence and tempo.

Model inputs and outputs

The musicgen-stereo-chord model takes a variety of inputs to condition the generated music, including a text-based prompt, chord progression, tempo, and time signature. It outputs a generated audio file in either WAV or MP3 format.

Inputs

- **Prompt**: A description of the music you want to generate.
- **Text Chords**: A text-based chord progression condition, with each chord specified by a root note and optional chord type.
- **BPM**: The tempo of the generated music, in beats per minute.
- **Time Signature**: The time signature of the generated music, in the format "numerator/denominator".
- **Audio Chords**: An optional audio file that will be used to condition the chord progression.
- **Audio Start/End**: The start and end times within the audio file to use for chord conditioning.
- **Duration**: The length of the generated audio, in seconds.
- **Continuation**: Whether to continue the music from the provided audio file, or to generate new music based on the chord conditions.
- **Multi-Band Diffusion**: Whether to use the Multi-Band Diffusion technique to decode the generated audio.
- **Normalization Strategy**: The strategy to use for normalizing the output audio.
- **Sampling Parameters**: Various parameters to control the sampling process, such as temperature, top-k, and top-p.

Outputs

- **Generated Audio**: The generated music in WAV or MP3 format.

Capabilities

musicgen-stereo-chord can generate coherent and musically plausible chord-based music, conditioned on either text-based or audio-based chord progressions. It also supports continuation, where the generated music builds upon a provided audio file, and multi-band diffusion, which can improve the quality of the output audio.

What can I use it for?

The musicgen-stereo-chord model could be used for a variety of music-related applications, such as:

- Generating background music for videos, games, or other multimedia projects.
- Composing chord-based musical pieces for various genres, such as pop, rock, or electronic music.
- Experimenting with different chord progressions and tempos to inspire new musical ideas.
- Exploring the use of audio-based chord conditioning to create more authentic-sounding music.

Things to try

One interesting aspect of musicgen-stereo-chord is its ability to continue generating music from a provided audio file. This could be used to create seamless loops or extended compositions by iteratively generating new sections that flow naturally from the previous ones.

Another feature worth exploring is multi-band diffusion, which can improve the overall quality of the generated audio. Comparing its results with the standard decoding approach could reveal the trade-offs between audio quality and generation time.
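
When choosing values for the BPM, Time Signature, and Duration inputs, it can help to work out how many bars a clip will contain. The arithmetic below is a sketch that assumes BPM counts quarter-note beats (a common convention; the model may interpret it differently), and the helper names are hypothetical.

```python
def seconds_per_bar(bpm, time_signature):
    """Length of one bar in seconds for a "numerator/denominator" signature."""
    numerator_text, denominator_text = time_signature.split("/")
    numerator, denominator = int(numerator_text), int(denominator_text)
    if bpm <= 0 or numerator <= 0 or denominator <= 0:
        raise ValueError("bpm, numerator, and denominator must be positive")
    # Assumption: one BPM beat is a quarter note, so a 1/denominator note
    # lasts (4 / denominator) beats.
    note_seconds = 60.0 / bpm * (4.0 / denominator)
    return numerator * note_seconds


def bars_in_duration(duration_seconds, bpm, time_signature):
    """How many bars fit into a clip of the given length."""
    return duration_seconds / seconds_per_bar(bpm, time_signature)


# A 30-second clip at 120 BPM in 4/4 spans 15 bars of 2 seconds each.
bars = bars_in_duration(30, 120, "4/4")
```

Under this assumption, a four-chord progression with one chord per bar at 120 BPM in 4/4 repeats every 8 seconds, which gives a rough guide for picking a Duration that ends on a bar boundary.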

Updated 9/19/2024

musicgen-remixer

7

musicgen-remixer is a Cog implementation of the MusicGen Chord model, a modified version of Meta's MusicGen Melody model. It generates music by remixing an input audio file into a different style based on a text prompt. The model was created by sakemin, who has also developed similar models such as musicgen-fine-tuner and musicgen.

Model inputs and outputs

The musicgen-remixer model takes an audio file and a text prompt describing the desired musical style, then generates a remix of the input audio in that style. It supports various configuration options, such as adjusting the sampling temperature, controlling the influence of the input, and selecting the output format.

Inputs

- prompt: A text description of the desired musical style for the remix.
- music_input: An audio file to be remixed.

Outputs

- The remixed audio file in the requested style.

Capabilities

The musicgen-remixer model can transform input audio into a variety of musical styles based on a text prompt. For example, you could input a rock song and a prompt like "bossa nova" to generate a bossa nova-style remix of the original track.

What can I use it for?

The musicgen-remixer model could be useful for musicians, producers, or creators who want to experiment with remixing and transforming existing audio content. It could be used to create new, unique musical compositions, add variety to playlists, or generate backing tracks for live performances.

Things to try

Try inputting different types of audio, from vocals to full-band recordings, and see how the model handles the transformation. Experiment with various prompts, from specific genres to more abstract descriptors, to see the range of styles the model can produce.

Updated 9/19/2024

all-in-one-music-structure-analyzer

4

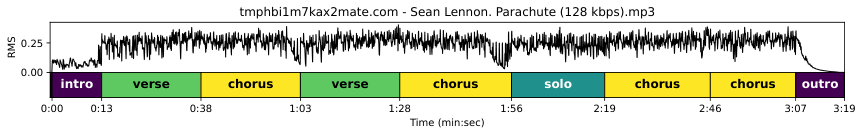

all-in-one-music-structure-analyzer is a Cog implementation of the "All-In-One Music Structure Analyzer" model developed by Taejun Kim. The model provides a comprehensive analysis of music structure, including tempo (BPM), beats, downbeats, functional segment boundaries, and functional segment labels (e.g., intro, verse, chorus, bridge, outro). It is trained on the Harmonix Set dataset and uses neighborhood attention on demixed audio to achieve high performance. The same maintainer, sakemin, has also published music generation models such as musicgen-fine-tuner and musicgen-remixer.

Model inputs and outputs

The all-in-one-music-structure-analyzer model takes an audio file as input and outputs a detailed analysis of its structure: tempo (BPM), beat positions, downbeat positions, and functional segment boundaries and labels.

Inputs

- **Music Input**: An audio file to analyze.

Outputs

- **Tempo (BPM)**: The estimated tempo of the input audio in beats per minute.
- **Beats**: The time positions of the detected beats in the audio.
- **Downbeats**: The time positions of the detected downbeats in the audio.
- **Functional Segment Boundaries**: The start and end times of the detected functional segments in the audio.
- **Functional Segment Labels**: The labels of the detected functional segments, such as intro, verse, chorus, bridge, and outro.

Capabilities

The all-in-one-music-structure-analyzer model can provide a comprehensive analysis of the musical structure of an audio file, including tempo, beats, downbeats, and functional segment information. This information can be useful for applications such as music information retrieval, automatic music transcription, and music production.

What can I use it for?

The all-in-one-music-structure-analyzer model can be used for a variety of music-related applications, such as:

- **Music analysis and understanding**: The detailed structural analysis can help you understand the composition and arrangement of a musical piece.
- **Music editing and production**: The beat, downbeat, and segment information can aid tasks like tempo matching, time stretching, and sound editing.
- **Automatic music transcription**: The model's output can be used as a starting point for automatic music transcription systems.
- **Music information retrieval**: The structural information can be used to improve the performance of music search and recommendation systems.

Things to try

One interesting thing to try with the all-in-one-music-structure-analyzer model is to use the segment boundary and label information to create visualizations of the music structure. This can provide a quick and intuitive way to understand the overall composition of a piece.

Another experiment is to use the model's output as a starting point for further analysis or processing tasks, such as chord detection, melody extraction, or automatic music summarization.
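
The visualization idea above can be sketched in a few lines. The example assumes the analysis has already been loaded into plain Python values: a list of beat times in seconds, and a list of segment dictionaries with `start`, `end`, and `label` keys. That layout is an assumption made for illustration, not a confirmed output schema, and both helper functions are hypothetical.

```python
from statistics import median


def estimate_bpm(beats):
    """Estimate tempo from beat times using the median inter-beat interval."""
    if len(beats) < 2:
        raise ValueError("need at least two beats")
    intervals = [later - earlier for earlier, later in zip(beats, beats[1:])]
    return 60.0 / median(intervals)


def render_timeline(segments, width=40):
    """Draw one text row per segment, with bar length proportional to duration."""
    total = segments[-1]["end"] - segments[0]["start"]
    rows = []
    for segment in segments:
        length = segment["end"] - segment["start"]
        bar = "#" * max(1, round(width * length / total))
        rows.append(f"{segment['label']:<8} {segment['start']:7.2f}s {bar}")
    return "\n".join(rows)


# Hypothetical analysis values for a short track.
beats = [0.0, 0.5, 1.0, 1.5, 2.0]
segments = [
    {"start": 0.0, "end": 10.0, "label": "intro"},
    {"start": 10.0, "end": 30.0, "label": "verse"},
    {"start": 30.0, "end": 50.0, "label": "chorus"},
]
print(estimate_bpm(beats))
print(render_timeline(segments))
```

Using the median interval rather than the mean keeps the tempo estimate stable when a few beats are detected slightly early or late.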

Updated 9/19/2024

musicgen-chord

2

musicgen-chord is a modified version of Meta's MusicGen Melody model, created by sakemin. It can generate music based on either audio-based or text-based chord conditions, and it specializes in music restricted to specific chord sequences and tempos. The model is similar to other models in the MusicGen family, such as musicgen-stereo-chord, which generates music in stereo with chord and tempo restrictions, and musicgen-remixer, which can remix music into different styles using MusicGen Chord. Additionally, the musicgen-fine-tuner model allows users to fine-tune the MusicGen small, medium, and melody models, including the stereo versions.

Model inputs and outputs

musicgen-chord takes a variety of inputs to control the generated music, including text-based chord conditions, audio-based chord conditions, tempo, time signature, and more. The model can output audio in either WAV or MP3 format.

Inputs

- **Prompt**: A description of the music you want to generate.
- **Text Chords**: A text-based chord progression condition, where chords are specified using a specific format.
- **BPM**: The desired tempo for the generated music.
- **Time Signature**: The time signature for the generated music.
- **Audio Chords**: An audio file that will condition the chord progression.
- **Audio Start/End**: The start and end times in the audio file to use for chord conditioning.
- **Duration**: The duration of the generated audio in seconds.
- **Continuation**: Whether the generated music should continue from the provided audio chords.
- **Multi-Band Diffusion**: Whether to use Multi-Band Diffusion for decoding the EnCodec tokens.
- **Normalization Strategy**: The strategy for normalizing the output audio.
- **Sampling Parameters**: Controls like top-k, top-p, temperature, and classifier-free guidance.

Outputs

- **Generated Audio**: The output audio file in either WAV or MP3 format.

Capabilities

musicgen-chord can generate music with specific chord progressions and tempos. This allows users to create music that fits within certain musical constraints, such as a particular genre or style. The model can also continue generating music from an existing audio input, allowing for more seamless and coherent compositions.

What can I use it for?

The musicgen-chord model can be useful for a variety of music-related applications, such as:

- **Music Composition**: Generate new musical compositions with specific chord progressions and tempos, suitable for various genres or styles.
- **Film/Game Scoring**: Create background music for films, TV shows, or video games that fits the desired mood and musical characteristics.
- **Music Remixing**: Rework existing music by generating new variations based on the original chord progressions and tempo.
- **Music Education**: Create practice exercises or educational materials focused on chord progressions and music theory.

Things to try

Some interesting things to try with musicgen-chord include:

- Experiment with different text-based chord conditions to see how they impact the generated music.
- Explore audio-based chord conditioning and compare the results to text-based conditioning.
- Try generating longer, more complex compositions by using the continuation feature.
- Adjust the sampling parameters, such as temperature and classifier-free guidance, to see how they affect the creativity and diversity of the generated music.
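
Before experimenting with text-based chord conditions, it can be useful to check a progression locally. The description above only says that chords follow "a specific format", so the sketch below assumes a hypothetical syntax: whitespace-separated chords, each written as a root note with an optional `:type` suffix (for example `A:min`), with a bare root treated as major. Check the model's own documentation for the real syntax before relying on it.

```python
import re

# Assumed syntax: root A-G, optional sharp (#) or flat (b), optional ":type".
CHORD_PATTERN = re.compile(r"^([A-G][#b]?)(?::(\w+))?$")


def parse_chords(text):
    """Split a progression into (root, chord_type) pairs, defaulting to major."""
    chords = []
    for token in text.split():
        match = CHORD_PATTERN.match(token)
        if match is None:
            raise ValueError(f"unrecognized chord: {token!r}")
        root = match.group(1)
        chord_type = match.group(2) or "maj"
        chords.append((root, chord_type))
    return chords


progression = parse_chords("C G:min A:min7 F#")
```

Validating the progression up front catches typos such as an invalid root note before a generation run is spent on them.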

Updated 9/19/2024

audiosr-long-audio

1

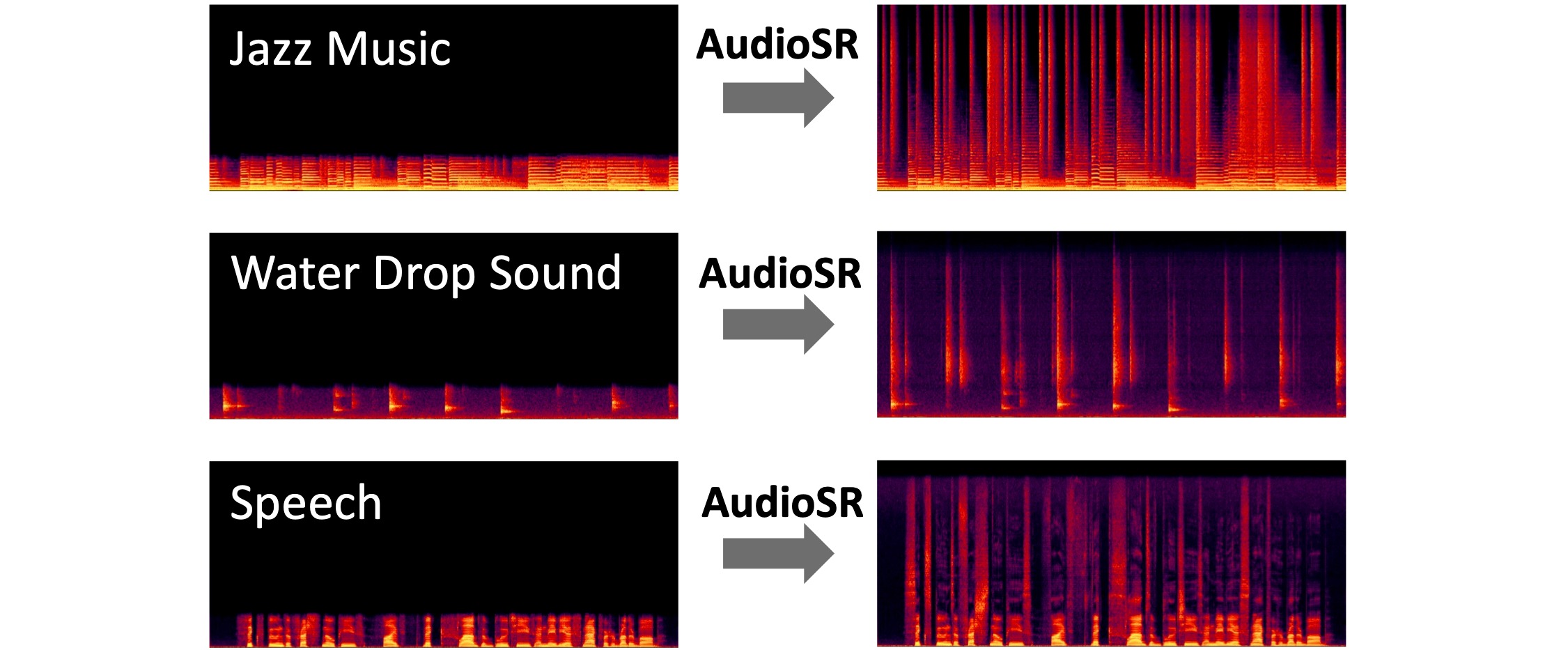

audiosr-long-audio is a versatile audio super-resolution model created by Sakemin. It can upsample audio files to 48kHz and can handle longer audio inputs than other models. It is part of Sakemin's suite of audio-related models, which includes the audio-super-resolution model, the musicgen-fine-tuner model, and the musicgen-remixer model.

Model inputs and outputs

The audiosr-long-audio model accepts an audio file to be upsampled, a random seed, the number of DDIM (Denoising Diffusion Implicit Models) inference steps, and a guidance scale value. It outputs a URI pointing to the upsampled audio file.

Inputs

- **Input File**: The audio file to be upsampled, provided as a URI.
- **Seed**: A random seed value, which can be left blank to randomize the seed.
- **Ddim Steps**: The number of DDIM inference steps, with a default of 50 and a range of 10 to 500.
- **Guidance Scale**: The scale for classifier-free guidance, with a default of 3.5 and a range of 1 to 20.
- **Truncated Batches**: A boolean flag to enable truncating batches to 5.12 seconds, which is essential for handling long audio files due to memory constraints.

Outputs

- **Output**: The upsampled audio file, provided as a URI.

Capabilities

The audiosr-long-audio model can upsample audio files to a higher 48kHz sample rate while preserving the quality and fidelity of the original audio. This makes it a useful tool for enhancing the listening experience of audio content such as music, podcasts, or voice recordings.

What can I use it for?

The audiosr-long-audio model can be employed in a variety of audio-related projects. For example, musicians and audio engineers could use it to upscale their recorded tracks, improving the overall sound quality. Content creators, such as podcasters or video producers, could use it to enhance the audio in their productions. Additionally, the model's ability to handle longer audio inputs makes it suitable for processing larger audio files, such as full-length albums or long-form interviews.

Things to try

One interesting aspect of the audiosr-long-audio model is its flexibility in handling different audio file formats and lengths. Experiment with various types of audio content, from music to speech, to see how the model performs. Additionally, try adjusting the DDIM steps and guidance scale parameters to find the optimal settings for your specific use case.
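
When sweeping DDIM steps and guidance scale, a small helper can keep each combination inside the documented ranges. The sketch below makes several assumptions: the snake_case key names are guesses derived from the input labels above, the `truncated_batches=True` default is chosen here for illustration (no default is stated), and `count_windows` assumes long audio is handled as consecutive 5.12-second windows.

```python
import math

WINDOW_SECONDS = 5.12  # window length quoted in the Truncated Batches description


def build_audiosr_input(input_file, seed=None, ddim_steps=50,
                        guidance_scale=3.5, truncated_batches=True):
    """Validate settings against the documented ranges and build an input dict."""
    if not 10 <= ddim_steps <= 500:
        raise ValueError("ddim_steps must be between 10 and 500")
    if not 1 <= guidance_scale <= 20:
        raise ValueError("guidance_scale must be between 1 and 20")
    payload = {
        "input_file": input_file,  # key names are assumptions, see lead-in
        "ddim_steps": ddim_steps,
        "guidance_scale": guidance_scale,
        "truncated_batches": truncated_batches,
    }
    if seed is not None:  # omitting the seed leaves it to be randomized
        payload["seed"] = seed
    return payload


def count_windows(duration_seconds, window=WINDOW_SECONDS):
    """Number of windows needed to cover a clip, assuming consecutive windows."""
    return math.ceil(duration_seconds / window)


settings = build_audiosr_input("https://example.com/track.wav", ddim_steps=100)
windows = count_windows(60)  # a one-minute clip needs 12 windows of 5.12 s
```

Rejecting out-of-range values locally avoids submitting a run that the model would refuse, and the window count gives a rough sense of how processing time scales with clip length.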

Updated 9/19/2024