zerodim

Maintainer: avivga


| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| Github link | View on Github |
| Paper link | View on Arxiv |


Model overview

The zerodim model, developed by Aviv Gabbay, performs disentangled face manipulation. It uses CLIP-based annotations to manipulate facial attributes such as age, gender, ethnicity, hair color, beard, and glasses in a zero-shot manner. This sets it apart from StyleCLIP, which requires a textual description for each manipulation, and from GFPGAN, which focuses on face restoration.

Model inputs and outputs

The zerodim model takes a facial image as input and allows manipulation of specific attributes. The available attributes include age, gender, hair color, beard, and glasses. The model outputs the manipulated image, seamlessly incorporating the desired changes.

Inputs

- image: The input facial image, which will be aligned and resized to 256x256 pixels.

- factor: The attribute of interest to manipulate, such as age, gender, hair color, beard, or glasses.

Outputs

- file: The manipulated image with the specified attribute change.

- text: A brief description of the manipulation performed.
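The inputs listed above can be checked before a request is sent. The following is a minimal sketch, not the model's actual API: the helper name `build_zerodim_input`, the accepted file extensions, and the exact factor strings (for example `hair_color` rather than `hair color`) are assumptions made for illustration.

```python
from pathlib import Path

# Factor names taken from the Inputs list above. The exact strings the
# hosted model accepts are an assumption, not confirmed by the source.
ALLOWED_FACTORS = ("age", "gender", "hair_color", "beard", "glasses")

# Image types accepted by this sketch (assumed, for illustration only).
ALLOWED_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_zerodim_input(image_path: str, factor: str) -> dict:
    """Validate the arguments and return a hypothetical input payload."""
    normalized = factor.strip().lower().replace(" ", "_")
    if normalized not in ALLOWED_FACTORS:
        raise ValueError(
            f"unknown factor {factor!r}; expected one of {ALLOWED_FACTORS}"
        )
    suffix = Path(image_path).suffix.lower()
    if suffix not in ALLOWED_SUFFIXES:
        raise ValueError(f"unsupported image type: {suffix or 'none'}")
    return {"image": image_path, "factor": normalized}


if __name__ == "__main__":
    print(build_zerodim_input("portrait.png", "Hair Color"))
```

Validating the factor locally fails fast on a typo instead of spending a model run on a request that would be rejected. The resulting dictionary could then be passed to whichever client is used to call the hosted model.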

Capabilities

The zerodim model excels at disentangled face manipulation, allowing users to independently modify facial attributes without affecting other aspects of the image. This capability is particularly useful for applications such as photo editing, virtual try-on, and character design. The model's ability to leverage CLIP-based annotations sets it apart from traditional face manipulation approaches, enabling a more intuitive and user-friendly experience.

What can I use it for?

The zerodim model can be employed in a variety of applications, including:

- Photo editing: Easily manipulate facial attributes in existing photos to explore different looks or create desired effects.

- Virtual try-on: Visualize how a person would appear with different hairstyles, glasses, or other facial features.

- Character design: Quickly experiment with different facial characteristics when designing characters for games, movies, or other creative projects.

Things to try

One interesting aspect of the zerodim model is its ability to separate the manipulation of specific facial attributes from the overall image. This allows users to explore subtle changes or exaggerated effects, unlocking a wide range of creative possibilities. For example, you could try manipulating the gender of a face while keeping other features unchanged, or experiment with dramatic changes in hair color or the presence of facial hair.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

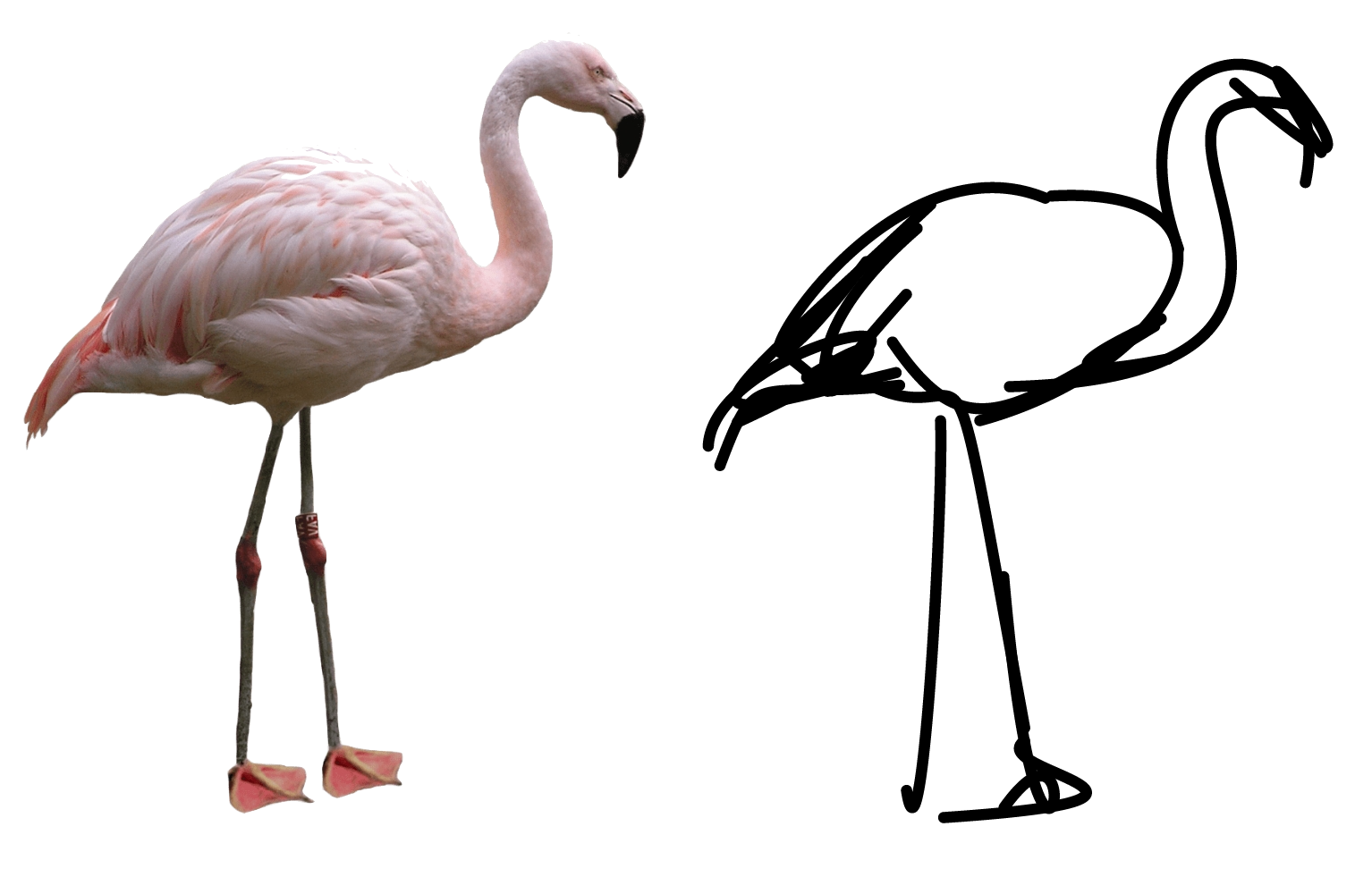

clipasso


clipasso is a method for converting an image of an object into a sketch, allowing for varying levels of abstraction. Developed by researchers at Replicate, clipasso uses a differentiable vector graphics rasterizer to optimize the parameters of Bézier curves directly with respect to a CLIP-based perceptual loss. This combines the final and intermediate activations of a pre-trained CLIP model to achieve both geometric and semantic simplifications. The level of abstraction is controlled by varying the number of strokes used to create the sketch. clipasso can be compared to similar models like CLIPDraw, which explores text-to-drawing synthesis through language-image encoders, and Diffvg, a differentiable vector graphics rasterization technique.

Model inputs and outputs

clipasso takes an image as input and generates a sketch of the object in the image. The sketch is represented as a set of Bézier curves, which can be adjusted to control the level of abstraction.

Inputs

- Target Image: The input image, which should be square-shaped and without a background. If the image has a background, it can be masked out using the mask_object parameter.

Outputs

- Output Sketch: The generated sketch, saved in SVG format. The level of abstraction can be controlled by adjusting the num_strokes parameter.

Capabilities

clipasso can generate abstract sketches of objects that capture the key geometric and semantic features. By varying the number of strokes, the model can produce sketches at different levels of abstraction, from simple outlines to more detailed renderings. The sketches maintain a strong resemblance to the original object while simplifying the visual information.

What can I use it for?

clipasso could be useful in various creative and design-oriented applications, such as concept art, storyboarding, and product design. The ability to quickly generate sketches at different levels of abstraction can help artists and designers explore ideas and iterate on visual concepts. Additionally, the semantically-aware nature of the sketches could make clipasso useful for tasks like visual reasoning or image-based information retrieval.

Things to try

One interesting aspect of clipasso is the ability to control the level of abstraction by adjusting the number of strokes. Experimenting with different stroke counts can lead to a range of sketch styles, from simple outlines to more detailed renderings. Additionally, using clipasso to sketch objects from different angles or in different contexts could yield interesting results and help users understand the model's capabilities and limitations.
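To make the stroke representation concrete, here is a small sketch of how a set of cubic Bézier strokes can be evaluated and written out as SVG. It is not clipasso's code and involves no CLIP loss or optimization. The function names, the canvas size of 224, and the choice of cubic (four control point) curves are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Stroke = Sequence[Point]  # four control points: p0, p1, p2, p3


def cubic_bezier(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Evaluate a cubic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    x = u**3 * p0[0] + 3 * u**2 * t * p1[0] + 3 * u * t**2 * p2[0] + t**3 * p3[0]
    y = u**3 * p0[1] + 3 * u**2 * t * p1[1] + 3 * u * t**2 * p2[1] + t**3 * p3[1]
    return (x, y)


def strokes_to_svg(strokes: List[Stroke], size: int = 224) -> str:
    """Serialize strokes as SVG paths using the cubic 'C' path command."""
    paths = []
    for p0, p1, p2, p3 in strokes:
        d = (
            f"M {p0[0]} {p0[1]} "
            f"C {p1[0]} {p1[1]}, {p2[0]} {p2[1]}, {p3[0]} {p3[1]}"
        )
        paths.append(f'<path d="{d}" fill="none" stroke="black"/>')
    body = "\n".join(paths)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{size}" height="{size}">\n{body}\n</svg>'
    )


if __name__ == "__main__":
    demo = [((10, 200), (60, 20), (160, 20), (210, 200))]
    print(strokes_to_svg(demo))
```

In this representation, a sketch with fewer strokes is simply a shorter list, which is why the stroke count acts as the abstraction control.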


styleclip


styleclip is a text-driven image manipulation model developed by Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski, as described in their ICCV 2021 paper. The model leverages the generative power of the StyleGAN generator and the visual-language capabilities of CLIP to enable intuitive text-based manipulation of images.

The styleclip model offers three main approaches for text-driven image manipulation:

- Latent Vector Optimization: This method uses a CLIP-based loss to directly modify the input latent vector in response to a user-provided text prompt.

- Latent Mapper: This model is trained to infer a text-guided latent manipulation step for a given input image, enabling faster and more stable text-based editing.

- Global Directions: This technique maps text prompts to input-agnostic directions in the StyleGAN's style space, allowing for interactive text-driven image manipulation.

Similar models like clip-features, stylemc, stable-diffusion, gfpgan, and upscaler also explore text-guided image generation and manipulation, but styleclip is unique in its use of CLIP and StyleGAN to enable intuitive, high-quality edits.

Model inputs and outputs

Inputs

- Input: An input image to be manipulated

- Target: A text description of the desired output image

- Neutral: A text description of the input image

- Manipulation Strength: A value controlling the degree of manipulation towards the target description

- Disentanglement Threshold: A value controlling how specific the changes are to the target attribute

Outputs

- Output: The manipulated image generated based on the input and text prompts

Capabilities

The styleclip model is capable of generating highly realistic image edits based on natural language descriptions. For example, it can take an image of a person and modify their hairstyle, gender, expression, or other attributes by simply providing a target text prompt like "a face with a bowlcut" or "a smiling face". The model is able to make these changes while preserving the overall fidelity and identity of the original image.

What can I use it for?

The styleclip model can be used for a variety of creative and practical applications. Content creators and designers could leverage the model to quickly generate variations of existing images or produce new images based on text descriptions. Businesses could use it to create custom product visuals or personalized content. Researchers may find it useful for studying text-to-image generation and latent space manipulation.

Things to try

One interesting aspect of the styleclip model is its ability to perform "disentangled" edits, where the changes are specific to the target attribute described in the text prompt. By adjusting the disentanglement threshold, you can control how localized the edits are - a higher threshold leads to more targeted changes, while a lower threshold results in broader modifications across the image. Try experimenting with different text prompts and threshold values to see the range of edits the model can produce.
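The interplay between manipulation strength and disentanglement threshold can be illustrated with a toy sketch. This is a simplified picture under stated assumptions, not styleclip's implementation: the style code and edit direction are plain lists of floats here, the function name `apply_direction` is hypothetical, and the thresholding rule (drop direction channels whose magnitude is below the threshold) is assumed for illustration.

```python
from typing import List


def apply_direction(
    style: List[float],
    direction: List[float],
    strength: float,
    threshold: float,
) -> List[float]:
    """Move a style code along a direction, ignoring weak channels.

    Channels whose direction magnitude is below `threshold` are left
    untouched, so a higher threshold edits fewer channels.
    """
    if len(style) != len(direction):
        raise ValueError("style and direction must have the same length")
    edited = []
    for s, d in zip(style, direction):
        step = d if abs(d) >= threshold else 0.0
        edited.append(s + strength * step)
    return edited


if __name__ == "__main__":
    style = [0.0, 1.0, 2.0]
    direction = [0.5, -0.05, 0.25]
    # Low threshold: all three channels move.
    print(apply_direction(style, direction, strength=2.0, threshold=0.01))
    # Higher threshold: the weak middle channel is left unchanged.
    print(apply_direction(style, direction, strength=2.0, threshold=0.1))
```

Under this assumption, raising the threshold confines the edit to the channels most strongly associated with the target attribute, which matches the behaviour described above.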


sdxl-lightning-4step


sdxl-lightning-4step is a fast text-to-image model developed by ByteDance that can generate high-quality images in just 4 steps. It is similar to other fast diffusion models like AnimateDiff-Lightning and Instant-ID MultiControlNet, which also aim to speed up the image generation process. Unlike the original Stable Diffusion model, these fast models sacrifice some flexibility and control to achieve faster generation times.

Model inputs and outputs

The sdxl-lightning-4step model takes in a text prompt and various parameters to control the output image, such as the width, height, number of images, and guidance scale. The model can output up to 4 images at a time, with a recommended image size of 1024x1024 or 1280x1280 pixels.

Inputs

- Prompt: The text prompt describing the desired image

- Negative prompt: A prompt that describes what the model should not generate

- Width: The width of the output image

- Height: The height of the output image

- Num outputs: The number of images to generate (up to 4)

- Scheduler: The algorithm used to sample the latent space

- Guidance scale: The scale for classifier-free guidance, which controls the trade-off between fidelity to the prompt and sample diversity

- Num inference steps: The number of denoising steps, with 4 recommended for best results

- Seed: A random seed to control the output image

Outputs

- Image(s): One or more images generated based on the input prompt and parameters

Capabilities

The sdxl-lightning-4step model is capable of generating a wide variety of images based on text prompts, from realistic scenes to imaginative and creative compositions. The model's 4-step generation process allows it to produce high-quality results quickly, making it suitable for applications that require fast image generation.

What can I use it for?

The sdxl-lightning-4step model could be useful for applications that need to generate images in real-time, such as video game asset generation, interactive storytelling, or augmented reality experiences. Businesses could also use the model to quickly generate product visualization, marketing imagery, or custom artwork based on client prompts. Creatives may find the model helpful for ideation, concept development, or rapid prototyping.

Things to try

One interesting thing to try with the sdxl-lightning-4step model is to experiment with the guidance scale parameter. By adjusting the guidance scale, you can control the balance between fidelity to the prompt and diversity of the output. Lower guidance scales may result in more unexpected and imaginative images, while higher scales will produce outputs that are closer to the specified prompt.
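The effect of the guidance scale can be sketched with the standard classifier-free guidance combination, in which the prompt-conditioned prediction is extrapolated away from the unconditioned one. This is a toy illustration on plain lists of floats, not code from sdxl-lightning-4step; the function name is hypothetical, and the source does not show how this model applies the parameter internally.

```python
from typing import List


def classifier_free_guidance(
    uncond: List[float],
    cond: List[float],
    guidance_scale: float,
) -> List[float]:
    """Combine predictions as uncond + scale * (cond - uncond).

    A scale of 0 ignores the prompt, 1 returns the conditioned
    prediction unchanged, and larger values push further toward it.
    """
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    uncond = [0.0, 1.0]
    cond = [1.0, 3.0]
    for scale in (0.0, 1.0, 2.0):
        print(scale, classifier_free_guidance(uncond, cond, scale))
```

In this formulation, a larger scale amplifies the difference between the prompted and unprompted predictions, which is why higher values tend to track the prompt more closely at the cost of diversity.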


clipit


clipit is a text-to-image generation model developed by Replicate user dribnet. It utilizes the CLIP and VQGAN/PixelDraw models to create images based on text prompts. This model is related to other pixray models created by dribnet, such as 8bidoug, pixray-text2pixel, pixray, and pixray-text2image. These models all utilize the CLIP and VQGAN/PixelDraw techniques in various ways to generate images.

Model inputs and outputs

The clipit model takes in a text prompt, aspect ratio, quality, and display frequency as inputs. The outputs are an array of generated images along with the text prompt used to create them.

Inputs

- Prompts: The text prompt that describes the image you want to generate.

- Aspect: The aspect ratio of the output image, either "widescreen" or "square".

- Quality: The quality of the generated image, with options ranging from "draft" to "best".

- Display every: The frequency at which images are displayed during the generation process.

Outputs

- File: The generated image file.

- Text: The text prompt used to create the image.

Capabilities

The clipit model can generate a wide variety of images based on text prompts, leveraging the capabilities of the CLIP and VQGAN/PixelDraw models. It can create images of scenes, objects, and abstract concepts, with a range of styles and qualities depending on the input parameters.

What can I use it for?

You can use clipit to create custom images for a variety of applications, such as illustrations, graphics, or visual art. The model's ability to generate images from text prompts makes it a useful tool for designers, artists, and content creators who want to quickly and easily produce visuals to accompany their work.

Things to try

With clipit, you can experiment with different text prompts, aspect ratios, and quality settings to see how they affect the generated images. You can also try combining clipit with other pixray models to create more complex or specialized image generation workflows.
