Camenduru

Models by this creator

dynami-crafter

1.7K

DynamiCrafter is a generative AI model that animates open-domain still images based on a text prompt. It leverages pre-trained video diffusion priors to bring static images to life. The model is related to other video generation models such as VideoCrafter1, ScaleCrafter, and TaleCrafter, all of which are part of the "Crafter Family" of AI models developed by the same team.

Model inputs and outputs

DynamiCrafter takes a text prompt and an optional input image and generates a short animated video in response. The model can produce videos at various resolutions, the highest being 576x1024 pixels.

Inputs

- **i2v_input_text**: A text prompt describing the desired scene or animation.
- **i2v_input_image**: An optional input image that provides visual guidance for the animation.
- **i2v_seed**: A random seed value that makes the generated animation reproducible.
- **i2v_steps**: The number of sampling steps used to generate the animation.
- **i2v_cfg_scale**: The guidance scale, which controls how strongly the text prompt influences the generated animation.
- **i2v_motion**: A parameter that controls the magnitude of motion in the generated animation.
- **i2v_eta**: A hyperparameter that controls the amount of noise added during the sampling process.

Outputs

- **Output**: A short animated video file that brings the input text prompt or image to life.

Capabilities

DynamiCrafter can generate a wide variety of animated scenes, from dynamic natural landscapes to whimsical, fantastical scenarios. The model is particularly adept at capturing motion and creating a sense of liveliness. For example, it can animate a "fireworks display" or a "robot walking through a destroyed city".

What can I use it for?

DynamiCrafter can be used for storytelling, video creation, and visual effects. Its ability to turn static images into animations is particularly useful for content creators, animators, and visual effects artists. Businesses can also use it to create marketing materials or product demonstrations.

Things to try

One interesting aspect of DynamiCrafter is its support for frame interpolation and looping videos. Given a starting and an ending frame, it can generate a seamless animation that transitions between the two. This is useful for creating smooth, looping animations or for generating in-between frames for existing video content.

Another possible application is interactive storytelling. By combining the model's animation capabilities with narrative elements, creators could develop visual experiences that adapt to user input or evolve over time.
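The inputs listed for DynamiCrafter map naturally onto a single request payload. The following is a minimal Python sketch rather than the model's official client: the helper name `build_dynamicrafter_input` and every default value are assumptions chosen for illustration, and only the `i2v_*` key names come from the listing.

```python
from typing import Any, Dict, Optional


def build_dynamicrafter_input(
    text: str,
    image: Optional[str] = None,
    seed: int = 123,         # assumed default, not taken from the listing
    steps: int = 50,         # assumed default
    cfg_scale: float = 7.5,  # assumed default
    motion: int = 4,         # assumed default
    eta: float = 1.0,        # assumed default
) -> Dict[str, Any]:
    """Assemble the i2v_* inputs into one dictionary (hypothetical helper)."""
    if not text.strip():
        raise ValueError("i2v_input_text must not be empty")
    if steps < 1:
        raise ValueError("i2v_steps must be at least 1")

    payload: Dict[str, Any] = {
        "i2v_input_text": text,
        "i2v_seed": seed,
        "i2v_steps": steps,
        "i2v_cfg_scale": cfg_scale,
        "i2v_motion": motion,
        "i2v_eta": eta,
    }
    # The listing describes the image as optional, so include it only when given.
    if image is not None:
        payload["i2v_input_image"] = image
    return payload


payload = build_dynamicrafter_input("fireworks display", seed=7)
print(sorted(payload))
```

Fixing `i2v_seed` while changing one other value at a time keeps runs comparable, since the seed is what makes a generation reproducible.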

Updated 9/19/2024

moe-llava

1.4K

MoE-LLaVA is a large vision-language model developed by the PKU-YuanGroup that combines a Mixture of Experts (MoE) architecture with LLaVA (Large Language and Vision Assistant) to generate high-quality multimodal responses. Other models listed alongside it include ml-mgie, lgm, animate-lcm, cog-a1111-ui, and animagine-xl-3.1, which apply deep learning to related language and image generation tasks.

Model inputs and outputs

MoE-LLaVA takes two inputs: a text prompt and an image URL. The text prompt is a natural language description of the desired output, and the image URL provides a visual reference for the model to use in its response. The model then generates a text output that addresses the prompt and draws on relevant information from the input image.

Inputs

- **Input Text**: A natural language description of the desired output
- **Input Image**: A URL pointing to an image that the model should incorporate into its response

Outputs

- **Output Text**: A generated response that addresses the input prompt and incorporates relevant information from the input image

Capabilities

MoE-LLaVA can generate coherent and informative responses that combine natural language and visual information. It can be used for tasks such as image captioning, visual question answering, and image-guided text generation.

What can I use it for?

You can use MoE-LLaVA for projects that require the integration of text and visual data. For example, you could use it to create image-guided tutorials, generate product descriptions from product images, or build chatbots that answer user questions about images.

Things to try

Experiment with different types of input images and text prompts. Try a wide range of images, from landscapes and cityscapes to portraits and abstract art, and observe how the model's responses change. Similarly, vary the text prompts, from simple factual queries to more open-ended creative prompts. Exploring the model's behavior across a variety of inputs gives a clearer picture of its capabilities and potential applications.
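The experiment suggested for MoE-LLaVA, crossing several prompts with several images, can be organized as a small batch of requests. This is a sketch under stated assumptions: the key names `input_text` and `input_image` are hypothetical spellings of the "Input Text" and "Input Image" fields, the URLs are placeholders, and no call to the model is made.

```python
from itertools import product
from typing import Dict, List


def build_requests(prompts: List[str], image_urls: List[str]) -> List[Dict[str, str]]:
    """Pair every prompt with every image URL.

    The keys "input_text" and "input_image" are assumed names for the
    "Input Text" and "Input Image" fields in the listing.
    """
    return [
        {"input_text": prompt, "input_image": url}
        for prompt, url in product(prompts, image_urls)
    ]


prompts = [
    "What is shown in this image?",           # simple factual query
    "Write a short story about this scene.",  # open-ended creative prompt
]
image_urls = [
    "https://example.com/landscape.jpg",  # placeholder URL
    "https://example.com/portrait.jpg",   # placeholder URL
]

batch = build_requests(prompts, image_urls)
print(len(batch))  # 4
```

Keeping the prompts and images in separate lists makes it easy to see which axis caused a change in the model's response.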

Updated 9/19/2024


potat1

153

The potat1 model is an open-source 1024x576 text-to-video model developed by camenduru. It is a prototype trained on 2,197 clips and 68,388 frames tagged using the Salesforce/blip2-opt-6.7b-coco model. It has been released in several versions that differ in the number of training steps, including potat1-5000, potat1-10000, potat1-10000-base-text-encoder, and others. Related models include SUPIR, aniportrait-vid2vid, and modelscope-damo-text-to-video-synthesis, the last of which also generates video from text.

Model inputs and outputs

Inputs

- Text descriptions that the model uses to generate corresponding videos.

Outputs

- 1024x576 videos that match the input text descriptions.

Capabilities

The potat1 model generates 1024x576 videos that correspond to the provided text descriptions. This can be useful for creating visual content for presentations, social media, or educational materials.

What can I use it for?

The potat1 model can be used for text-to-video generation tasks such as creating promotional videos, educational content, or animated shorts. Content creators, marketers, and educators can use it to produce visual content more efficiently.

Things to try

One interesting aspect of the potat1 model is its relatively high output resolution of 1024x576, which suits online platforms and presentations. Comparing different versions of the model, such as potat1-10000 or potat1-50000, can also show how the number of training steps affects output quality.

Updated 5/28/2024


SUPIR

69

The SUPIR model is listed as a text-to-image AI model. The platform did not provide a description for this specific model, but it groups it with other models in the text-to-image domain such as sd-webui-models and photorealistic-fuen-v1. These models use machine learning techniques to generate images from textual descriptions.

Model inputs and outputs

The SUPIR model takes textual inputs and generates corresponding images as outputs, allowing users to create visualizations from written descriptions.

Inputs

- Textual prompts that describe the desired image

Outputs

- Generated images that match the input textual prompts

Capabilities

The SUPIR model can generate a wide variety of images based on the provided textual descriptions, spanning different genres, styles, and subject matter.

What can I use it for?

The SUPIR model can be used for applications that involve generating images from text, including creative projects, product visualizations, and educational materials. Users can follow the links to the maintainer's profile to explore the model's capabilities further and to consider using it commercially within their own companies.

Things to try

Experiment with different types of textual prompts. Generating images across diverse themes, styles, and levels of abstraction shows how versatile the model is.

Updated 5/28/2024


Wav2Lip

50

The Wav2Lip model is a video-to-video AI model developed by camenduru. Similar models include SUPIR, stable-video-diffusion-img2vid-fp16, streaming-t2v, vcclient000, and metavoice, which also focus on video generation and manipulation tasks.

Model inputs and outputs

The Wav2Lip model takes audio and video inputs and generates a synchronized video output in which the subject's lip movements match the provided audio.

Inputs

- Audio file
- Video file

Outputs

- Synchronized video output with lip movements matched to the input audio

Capabilities

The Wav2Lip model generates realistic lip-synced videos from existing video and audio files. This can be useful for dubbing foreign language content, creating animated characters, or improving the production value of video recordings.

What can I use it for?

The Wav2Lip model can enhance video content by synchronizing the subject's lip movements with the audio track. Typical uses include dubbing foreign language films, giving animated characters realistic mouth movements, and improving the quality of video calls and presentations. It can also replace manual lip-movement adjustment in video production workflows.

Things to try

Try the Wav2Lip model on different types of video and audio content and see how well it synchronizes lip movements across a range of subjects, accents, and audio qualities. You could also integrate the model into your video editing or content creation pipeline.

Updated 9/6/2024

instantmesh

37

InstantMesh is an efficient 3D mesh generation model that creates realistic 3D models from a single input image. Developed by researchers at Tencent ARC, InstantMesh leverages sparse-view large reconstruction models to rapidly generate 3D meshes without requiring multiple input views. This sets it apart from models like real-esrgan, instant-id, idm-vton, and face-to-many, which target different image generation and enhancement tasks.

Model inputs and outputs

InstantMesh takes a single input image and generates a 3D mesh model. The model can optionally export a texture map and a video of the generated mesh.

Inputs

- **Image Path**: The input image to use for 3D mesh generation
- **Seed**: A random seed value to use for the mesh generation process
- **Remove Background**: A boolean flag to remove the background from the input image
- **Export Texmap**: A boolean flag to export a texture map along with the 3D mesh
- **Export Video**: A boolean flag to export a video of the generated 3D mesh

Outputs

- **Array of URIs**: The generated 3D mesh models and the optional texture map and video

Capabilities

InstantMesh can efficiently generate high-quality 3D mesh models from a single input image, without requiring multiple views or a complex reconstruction pipeline. This makes it useful for rapid 3D content creation in applications ranging from game development to product visualization.

What can I use it for?

The InstantMesh model can be used to quickly create 3D assets for a wide range of applications, such as:

- Game development: Generate 3D models of characters, environments, and props to use in game engines.
- Product visualization: Create 3D models of products for e-commerce, marketing, or design purposes.
- Architectural visualization: Generate 3D models of buildings, landscapes, and interiors for design and planning.
- Visual effects: Use the generated 3D meshes as a starting point for further modeling, texturing, and animation.

The model's efficient and robust reconstruction makes it valuable for anyone working with 3D content, especially in fields that require rapid prototyping or content creation.

Things to try

One interesting aspect of InstantMesh is its ability to remove the background from the input image and generate a 3D mesh that focuses solely on the subject. This is useful for creating 3D assets that can be composited into different environments or scenes. Try different input images with background removal on and off, and observe how the generated meshes change.

Another option is to export a texture map along with the 3D mesh. This lets you further customize and refine the appearance of the generated model in 3D modeling software or a game engine. Try different surface materials and details and see how the final models look.
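Because InstantMesh returns a flat array of URIs that may mix meshes, a texture map, and a video, a caller usually needs to sort them by type. The sketch below shows one way to do that. The snake_case input keys, the default values, and the extension-to-type mapping are all assumptions made for illustration; the listing names the fields but does not specify key spellings or file formats.

```python
from pathlib import PurePosixPath
from typing import Any, Dict, List
from urllib.parse import urlparse

# Assumed mapping from file extension to artifact type (not from the listing).
KIND_BY_SUFFIX = {".obj": "mesh", ".glb": "mesh", ".png": "texture", ".mp4": "video"}


def build_instantmesh_input(
    image_path: str,
    seed: int = 42,                  # assumed default
    remove_background: bool = True,  # assumed default
    export_texmap: bool = False,     # assumed default
    export_video: bool = False,      # assumed default
) -> Dict[str, Any]:
    """Collect the five listed inputs under hypothetical snake_case keys."""
    return {
        "image_path": image_path,
        "seed": seed,
        "remove_background": remove_background,
        "export_texmap": export_texmap,
        "export_video": export_video,
    }


def group_outputs(uris: List[str]) -> Dict[str, List[str]]:
    """Group output URIs by artifact type based on their file extension."""
    groups: Dict[str, List[str]] = {}
    for uri in uris:
        # Use only the URL path so query strings do not hide the extension.
        suffix = PurePosixPath(urlparse(uri).path).suffix.lower()
        kind = KIND_BY_SUFFIX.get(suffix, "other")
        groups.setdefault(kind, []).append(uri)
    return groups


example = group_outputs([
    "https://example.com/out/model.obj",   # placeholder URIs
    "https://example.com/out/texture.png",
    "https://example.com/out/turntable.mp4",
])
print(sorted(example))  # ['mesh', 'texture', 'video']
```

Grouping by extension keeps the caller independent of the order in which the URIs arrive, which the listing does not specify.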

Updated 9/19/2024

animatediff-lightning-4-step

34

animatediff-lightning-4-step is an AI model developed by camenduru that performs cross-model diffusion distillation. It is similar to other AI models like champ, which focuses on controllable and consistent human image animation, and kandinsky-2.2, a multilingual text-to-image latent diffusion model.

Model inputs and outputs

The animatediff-lightning-4-step model takes a text prompt as input and generates an image as output. The prompt describes the desired image, and the model uses diffusion techniques to create the corresponding visual representation.

Inputs

- **Prompt**: A text description of the desired image.
- **Guidance Scale**: A numerical value that controls the strength of the guidance during the diffusion process.

Outputs

- **Output Image**: The generated image that corresponds to the provided prompt.

Capabilities

The animatediff-lightning-4-step model generates high-quality images from text prompts, using cross-model diffusion distillation to produce diverse results.

What can I use it for?

The animatediff-lightning-4-step model can be used for creative and artistic projects such as generating illustrations, concept art, or surreal imagery. Individuals, artists, and companies can use it to experiment with AI-generated visuals.

Things to try

Provide diverse and imaginative prompts. Try different styles, genres, or conceptual themes to see the range of outputs the model can produce.

Updated 9/19/2024

dynami-crafter-576x1024

14

The dynami-crafter-576x1024 model, developed by camenduru, creates videos from a single input image. It is part of a collection of similar models created by camenduru, including champ, animate-lcm, ml-mgie, tripo-sr, and instantmesh, all of which focus on image-to-video and 3D reconstruction tasks.

Model inputs and outputs

The dynami-crafter-576x1024 model takes an input image and generates a video output. Users can adjust the eta value, random seed, sampling steps, motion magnitude, and CFG scale to fine-tune the video output.

Inputs

- **i2v_input_image**: The input image used to generate the video
- **i2v_input_text**: The input text used to generate the video
- **i2v_seed**: The random seed used to generate the video
- **i2v_steps**: The number of sampling steps used to generate the video
- **i2v_motion**: The motion magnitude used to generate the video
- **i2v_cfg_scale**: The CFG scale used to generate the video
- **i2v_eta**: The eta value used to generate the video

Outputs

- **Output**: The generated video output

Capabilities

The dynami-crafter-576x1024 model creates dynamic videos from a single input image. It can generate videos with a range of motion and visual styles, and its adjustable parameters let users tune the output to their needs.

What can I use it for?

The dynami-crafter-576x1024 model can be used for video content creation, social media marketing, and visual storytelling. Artists and creators can use it to generate videos that showcase their work or promote their brand, and businesses can use it to create video content for marketing campaigns.

Things to try

Experiment with different input images and text prompts to see the range of video outputs the model can generate. Vary parameters such as the random seed, sampling steps, and motion magnitude to see how each change affects the final video. You can also compare the outputs of this model with those of other similar models created by camenduru.
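The parameter experiments suggested for dynami-crafter-576x1024 are easier to interpret when only one value changes per run. The sketch below builds such a one-at-a-time sweep. The function name, the baseline values, and the placeholder image URL are assumptions for illustration; only the `i2v_*` key names come from the listing, and no call to the model is made.

```python
from typing import Any, Dict, Iterable, List


def one_at_a_time_sweep(
    base: Dict[str, Any], variations: Dict[str, Iterable[Any]]
) -> List[Dict[str, Any]]:
    """Return one config per varied value, changing a single key at a time."""
    configs: List[Dict[str, Any]] = []
    for key, values in variations.items():
        if key not in base:
            raise KeyError(f"unknown parameter: {key}")
        for value in values:
            if value == base[key]:
                continue  # identical to the baseline, nothing new to compare
            config = dict(base)  # copy so the baseline stays untouched
            config[key] = value
            configs.append(config)
    return configs


# Baseline values are assumed, not documented defaults.
base = {
    "i2v_input_image": "https://example.com/still.png",  # placeholder URL
    "i2v_input_text": "a robot walking through a destroyed city",
    "i2v_seed": 123,
    "i2v_steps": 50,
    "i2v_motion": 4,
    "i2v_cfg_scale": 7.5,
    "i2v_eta": 1.0,
}

sweep = one_at_a_time_sweep(
    base,
    {"i2v_seed": [123, 456], "i2v_steps": [25, 50], "i2v_motion": [2, 4, 6]},
)
print(len(sweep))  # 4
```

Each resulting config differs from the baseline in exactly one key, so any visible difference between two videos can be attributed to that parameter.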

Updated 9/19/2024

champ

13

champ is a model developed by Fudan University that enables controllable and consistent human image animation with 3D parametric guidance. Users animate human images by specifying 3D motion parameters, which yields realistic and coherent animations. This is particularly useful for video game character animation, virtual avatar creation, and visual effects in films and videos. In contrast to models like InstantMesh, Arc2Face, and Real-ESRGAN, champ focuses specifically on human image animation with detailed 3D control.

Model inputs and outputs

champ takes two main inputs: a reference image and 3D motion guidance data. The reference image is the basis for the animated character, while the 3D motion guidance data specifies the desired movement. The model then generates an output image that depicts the animated human figure based on the provided inputs.

Inputs

- **Ref Image Path**: The path to the reference image used as the basis for the animated character.
- **Guidance Data**: The 3D motion data that specifies the desired movement and animation of the character.

Outputs

- **Output**: The generated image depicting the animated human figure based on the provided inputs.

Capabilities

champ generates realistic and coherent human image animations by leveraging 3D parametric guidance. The animations are both controllable and consistent, so users can fine-tune the movement and expression of the animated character. This is useful for applications that require precise control over character animation, such as video games, virtual reality experiences, and visual effects.

What can I use it for?

The champ model can be used for applications that involve human image animation, such as:

- Video game character animation: Developers can use champ to create realistic and expressive character animations for their games.
- Virtual avatar creation: Businesses and individuals can use champ to generate animated avatars for virtual meetings, social media, and other online interactions.
- Visual effects in films and videos: Filmmakers and video content creators can use champ to enhance the realism and expressiveness of human characters in their productions.

Things to try

Experiment with different 3D motion guidance data to create a wide range of human animations, from subtle gestures to complex dance routines. You can also test how well the model keeps the animated character's appearance and movements consistent, which matters for seamless, natural-looking animations.

Updated 9/19/2024

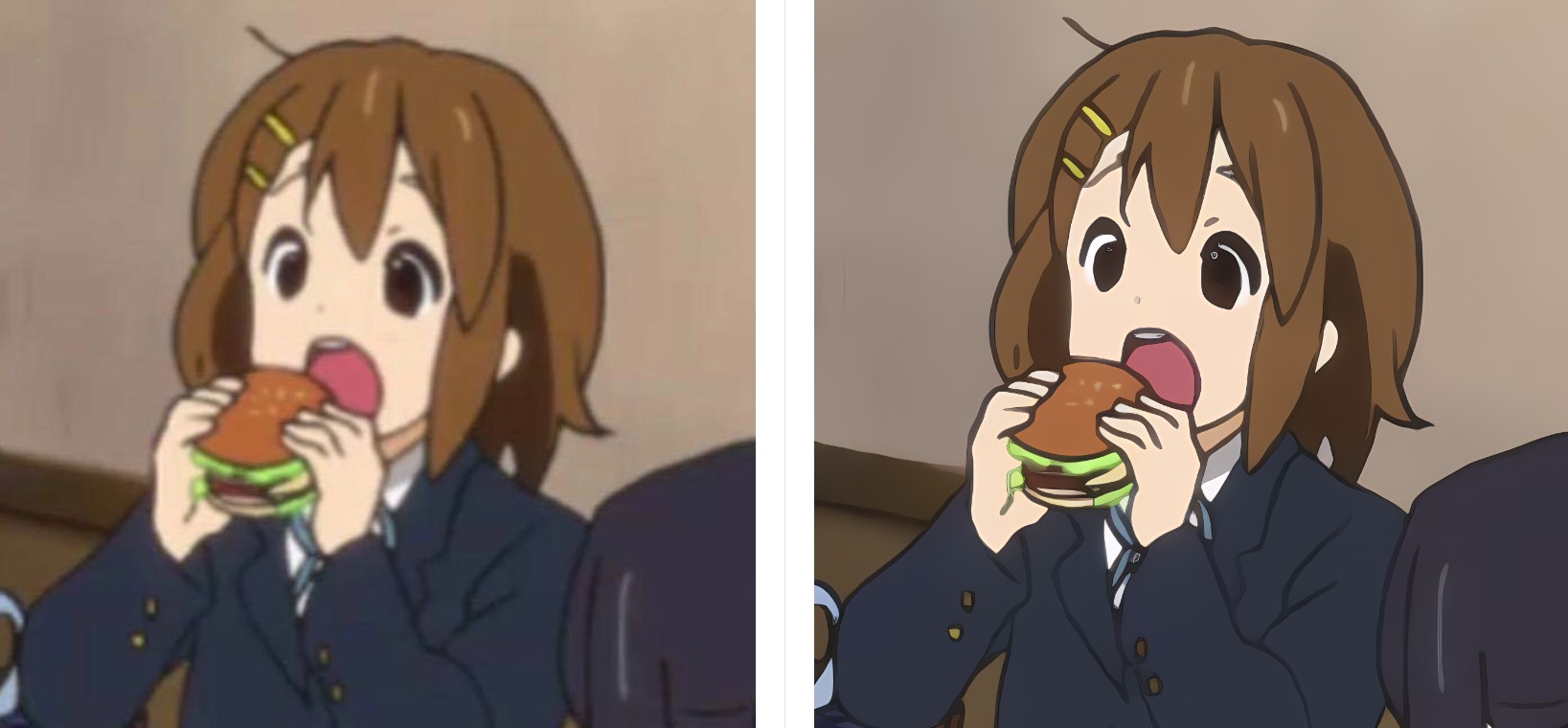

apisr

10

APISR is an AI model developed by Camenduru that generates high-quality super-resolution anime-style images from real-world photos. It is inspired by the "anime production" process and applies techniques used in the anime industry to enhance images. APISR can be compared to similar models like animesr, which also focuses on real-world to anime-style super-resolution, and aniportrait-vid2vid, which generates photorealistic animated portraits.

Model inputs and outputs

APISR takes an input image and generates a high-quality, anime-style super-resolution version of that image. The input can be any real-world photo, and the output is a stylized, upscaled anime-inspired image.

Inputs

- **img_path**: The path to the input image file

Outputs

- **Output**: A URI pointing to the generated anime-style super-resolution image

Capabilities

APISR transforms real-world photos into high-quality, anime-inspired artwork. It enhances both the resolution and the visual style of images, making them appear hand-drawn by anime artists. The model draws on techniques used in the anime production process to achieve this aesthetic.

What can I use it for?

You can use APISR to create anime-style art from your own photos or other real-world images. This can be useful for applications such as:

- Enhancing personal photos with an anime-inspired look
- Generating custom artwork for social media, websites, or other creative projects
- Exploring the intersection of real-world and anime-style aesthetics

Camenduru, the maintainer of APISR, has a Patreon community where you can learn more about the model and get support for using it.

Things to try

Experiment with different types of input images, such as landscapes, portraits, or even abstract art. The model's transformation of real-world visuals into anime-inspired styles can lead to unexpected and visually striking results. You could also combine APISR with other AI models, like ml-mgie or colorize-line-art, to further enhance the anime-like qualities of your images.

Updated 9/19/2024