apisr

Maintainer: camenduru

10

| Property | Value |

|---|---|

| Run this model | Run on Replicate |

| API spec | View on Replicate |

| Github link | View on Github |

| Paper link | View on Arxiv |

Create account to get full access

Model overview

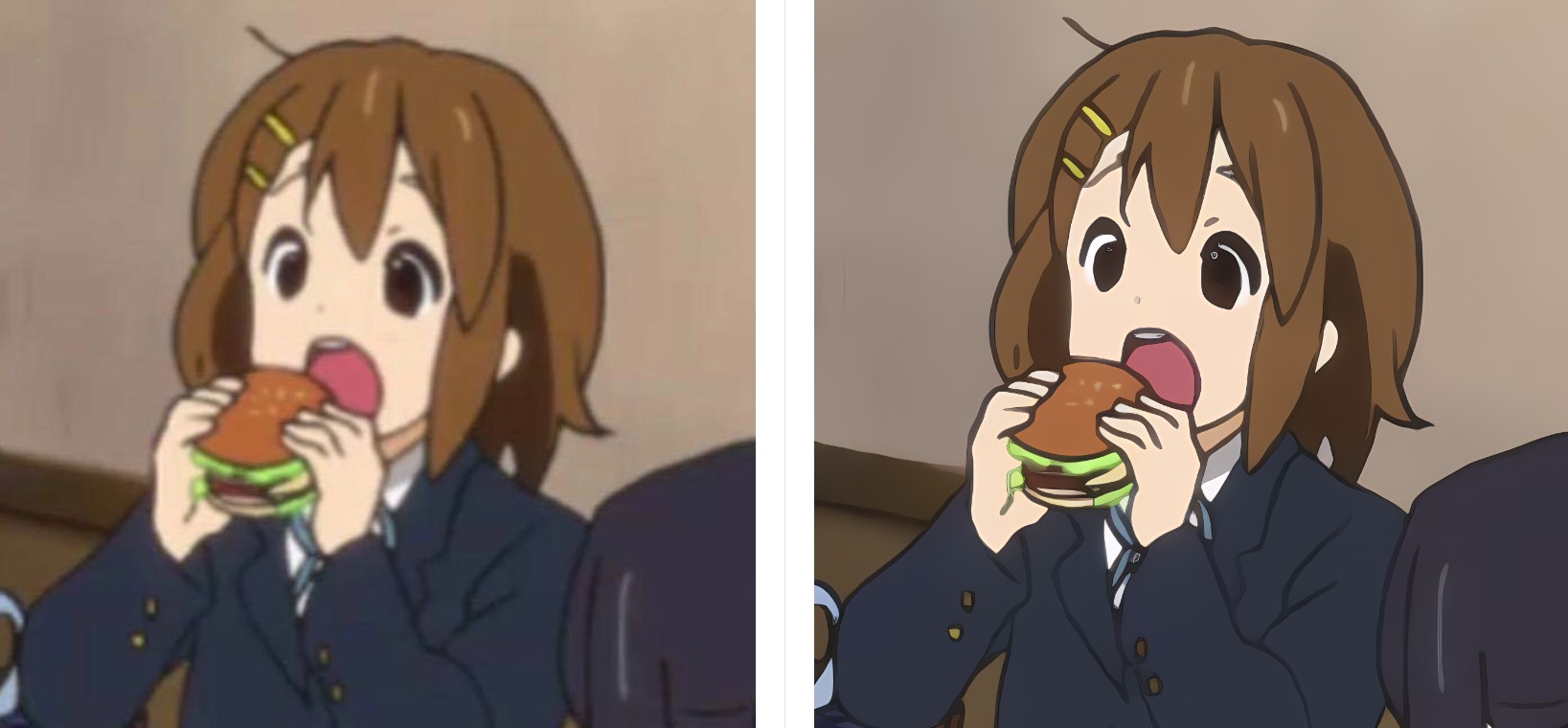

APISR is an AI model developed by Camenduru that generates high-quality super-resolution anime-style images from real-world photos. It is inspired by the "anime production" process, leveraging techniques used in the anime industry to enhance images. APISR can be compared to similar models like animesr, which also focuses on real-world to anime-style super-resolution, and aniportrait-vid2vid, which generates photorealistic animated portraits.

Model inputs and outputs

APISR takes an input image and generates a high-quality, anime-style super-resolution version of that image. The input can be any real-world photo, and the output is a stylized, upscaled anime-inspired image.

Inputs

- img_path: The path to the input image file

Outputs

- Output: A URI pointing to the generated anime-style super-resolution image

Capabilities

APISR is capable of transforming real-world photos into high-quality, anime-inspired artworks. It can enhance the resolution and visual style of images, making them appear as if they were hand-drawn by anime artists. The model leverages techniques used in the anime production process to achieve this unique aesthetic.

What can I use it for?

You can use APISR to create anime-style art from your own photos or other real-world images. This can be useful for a variety of applications, such as:

- Enhancing personal photos with an anime-inspired look

- Generating custom artwork for social media, websites, or other creative projects

- Exploring the intersection of real-world and anime-style aesthetics

Camenduru, the maintainer of APISR, has a Patreon community where you can learn more about the model and get support for using it.

Things to try

One interesting thing to try with APISR is experimenting with different types of input images, such as landscapes, portraits, or even abstract art. The model's ability to transform real-world visuals into anime-inspired styles can lead to some unexpected and visually striking results. Additionally, you could try combining APISR with other AI models, like ml-mgie or colorize-line-art, to further enhance the anime-esque qualities of your images.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

📶

animesr

10

animesr is a real-world super-resolution model for animation videos developed by the Tencent ARC Lab. It can effectively enhance the resolution and quality of low-quality animation videos, producing clear and high-quality results. Compared to similar models like GFPGAN, Real-ESRGAN, RealESRGAN, and PhotoMaker Style, animesr is specifically designed for animation videos, providing superior performance on this type of content. Model inputs and outputs animesr takes either a video file or a zip file of image frames as input, and outputs a high-quality, upscaled video. The model is capable of 4x super-resolution, meaning it can quadruple the resolution of the input video while preserving details and reducing artifacts. Inputs Video**: Input video file Frames**: Zip file of frames from a video Outputs Video**: High-quality, upscaled video Capabilities animesr excels at enhancing the resolution and visual quality of animation videos, producing clear and detailed results with fewer artifacts compared to traditional upscaling methods. The model has been trained on a large dataset of animation videos, allowing it to effectively handle a wide range of animation styles and content. What can I use it for? The animesr model can be used to improve the quality of low-resolution animation videos, making them more suitable for larger displays or video streaming platforms. This can be particularly useful for restoring old or degraded animation content, or for upscaling lower-quality animation created for mobile devices. Additionally, the model could be leveraged by animation studios or video editors to efficiently enhance the production value of their animated projects. Things to try One interesting aspect of animesr is its ability to handle a diverse range of animation styles and content. Try experimenting with the model on different types of animation, from classic cartoons to modern anime, to see how it performs across various visual styles and genres. Additionally, you can explore the impact of adjusting the output scale, as the model supports arbitrary scaling factors beyond the default 4x resolution.

Updated Invalid Date

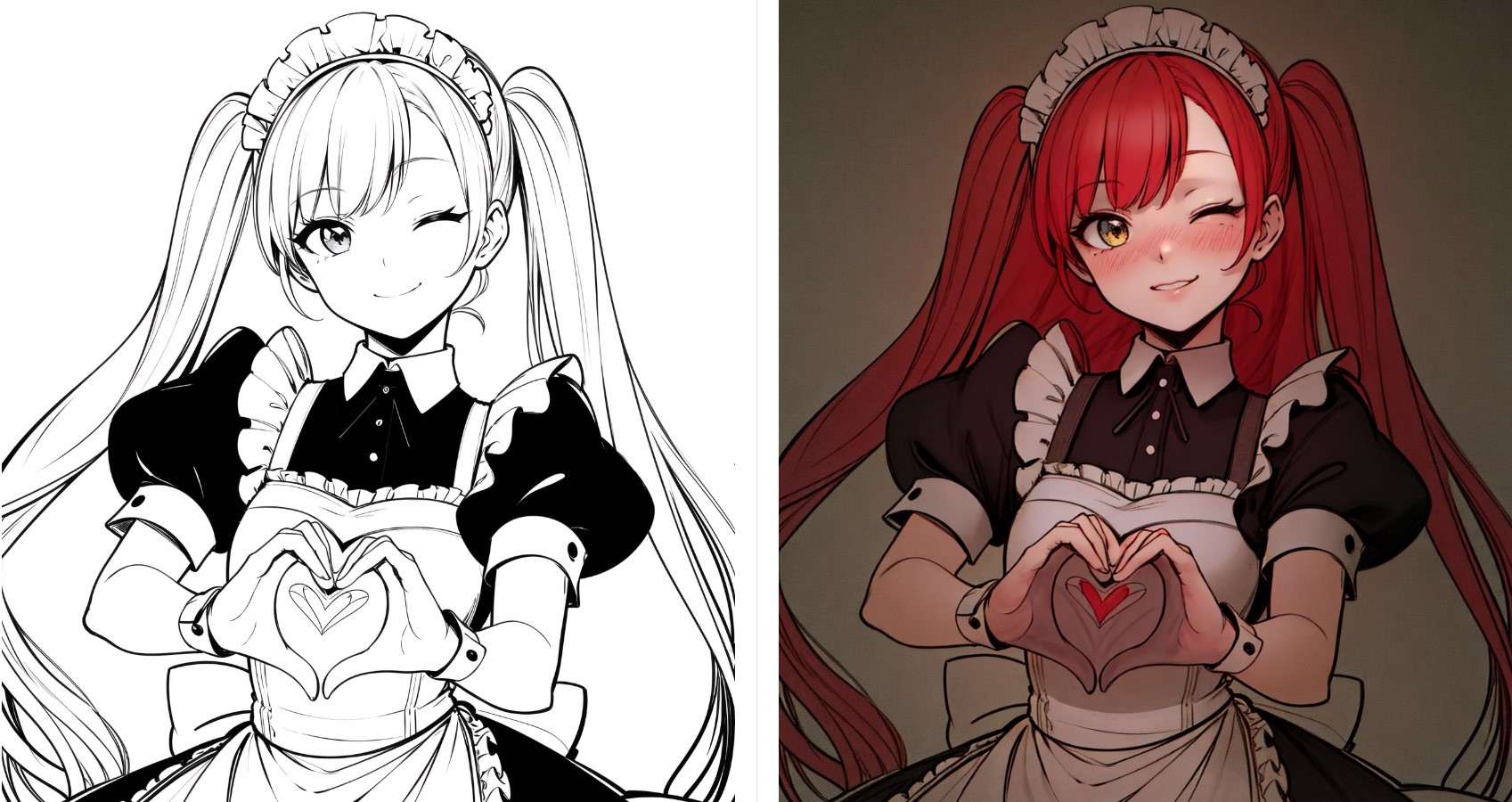

colorize-line-art

7

The colorize-line-art model is a powerful AI tool that can take a simple line art image and transform it into a fully colored, detailed illustration. This model is part of the ControlNet family, a collection of AI models developed by the researcher Lvmin Zhang. The colorize-line-art model specifically leverages the ControlNet architecture to generate high-quality, anime-style illustrations from line art inputs. It can be particularly useful for artists, animators, and designers who want to quickly and easily add color to their sketches and drawings. Similar models like controlnet-scribble, bandw-manga, and controlnet-hough also offer unique capabilities for image generation and editing, each with their own strengths and use cases. Model inputs and outputs The colorize-line-art model takes a single input - an image of line art. The model then processes this input and generates a fully colored, detailed illustration as the output. The input image can be in any standard image format, and the output is also a standard image file. Inputs Input Image**: The line art image to be colorized. Outputs Output Image**: The fully colored, detailed illustration generated from the input line art. Capabilities The colorize-line-art model is capable of generating highly detailed, anime-style illustrations from simple line art inputs. It can capture intricate details, vibrant colors, and a range of artistic styles, allowing users to quickly transform their sketches into professional-looking artwork. What can I use it for? The colorize-line-art model can be a valuable tool for a variety of creative projects, including: Animating 2D illustrations and cartoons Enhancing manga and comic book art Developing concept art and character designs Creating digital paintings and illustrations Prototyping and visualizing product designs The model's ability to generate high-quality, anime-style artwork makes it particularly useful for creators and artists working in the anime and manga genres, as well as those looking to add a touch of whimsy and style to their digital artwork. Things to try One interesting aspect of the colorize-line-art model is its ability to capture a wide range of artistic styles and techniques. Users can experiment with different input prompts, settings, and techniques to explore the model's capabilities and find unique ways to apply it to their creative projects. For example, users might try varying the level of detail in their line art inputs, or adjusting the strength and scale parameters to achieve different visual effects.

Updated Invalid Date

ml-mgie

5

ml-mgie is a model developed by Replicate's Camenduru that aims to provide guidance for instruction-based image editing using multimodal large language models. This model can be seen as an extension of similar efforts like llava-13b and champ, which also explore the intersection of language and visual AI. The model's capabilities include making targeted edits to images based on natural language instructions. Model inputs and outputs ml-mgie takes in an input image and a text prompt, and generates an edited image along with a textual description of the changes made. The input image can be any valid image, and the text prompt should describe the desired edits in natural language. Inputs Input Image**: The image to be edited Prompt**: A natural language description of the desired edits Outputs Edited Image**: The resulting image after applying the specified edits Text**: A textual description of the edits made to the input image Capabilities ml-mgie demonstrates the ability to make targeted visual edits to images based on natural language instructions. This includes changes to the color, composition, or other visual aspects of the image. The model can be used to enhance or modify existing images in creative ways. What can I use it for? ml-mgie could be used in various creative and professional applications, such as photo editing, graphic design, and even product visualization. By allowing users to describe their desired edits in natural language, the model can streamline the image editing process and make it more accessible to a wider audience. Additionally, the model's capabilities could potentially be leveraged for tasks like virtual prototyping or product customization. Things to try One interesting thing to try with ml-mgie is providing more detailed or nuanced prompts to see how the model responds. For example, you could experiment with prompts that include specific color references, spatial relationships, or other visual characteristics to see how the model interprets and applies those edits. Additionally, you could try providing the model with a series of prompts to see if it can maintain coherence and consistency across multiple editing steps.

Updated Invalid Date

aura-sr

5

aura-sr is a GAN-based super-resolution model designed to upscale real-world images. It is based on the GigaGAN approach and can produce impressive results for certain types of images. The model is developed by zsxkib and is available through the Replicate platform. Similar models like SeeSR, ArbSR, ESRGAN, and Real-ESRGAN also aim to improve image super-resolution in various ways. Model inputs and outputs The aura-sr model takes an input image file and a scale factor as its inputs. The scale factor determines how much the image will be upscaled, with options for 2, 4, 8, 16, or 32 times the original size. The model outputs a higher-resolution version of the input image. Inputs image**: The input image file to be upscaled. scale_factor**: The factor by which to upscale the image (2, 4, 8, 16, or 32). max_batch_size**: Controls the number of image tiles processed simultaneously. Higher values may increase speed but require more GPU memory. Outputs Output**: The upscaled image file. Capabilities aura-sr is particularly effective at upscaling PNG, lossless WebP, and high-quality JPEG XL images. It can handle different sized jobs and work quickly, making it a useful tool for tasks that require enlarging images while preserving quality. What can I use it for? The aura-sr model can be used to upscale AI-generated images or high-quality photographs, making them larger and clearer without losing important details. This can be useful for a variety of applications, such as creating larger promotional materials, improving image quality for websites or social media, or enhancing the visual impact of visualizations and data presentations. Things to try While aura-sr is a powerful tool, it does have some limitations. It works best with certain image formats and may not perform well on heavily compressed or low-quality images. Experimenting with different input images and scale factors can help you find the optimal use cases for this model.

Updated Invalid Date