Okaris

Models by this creator

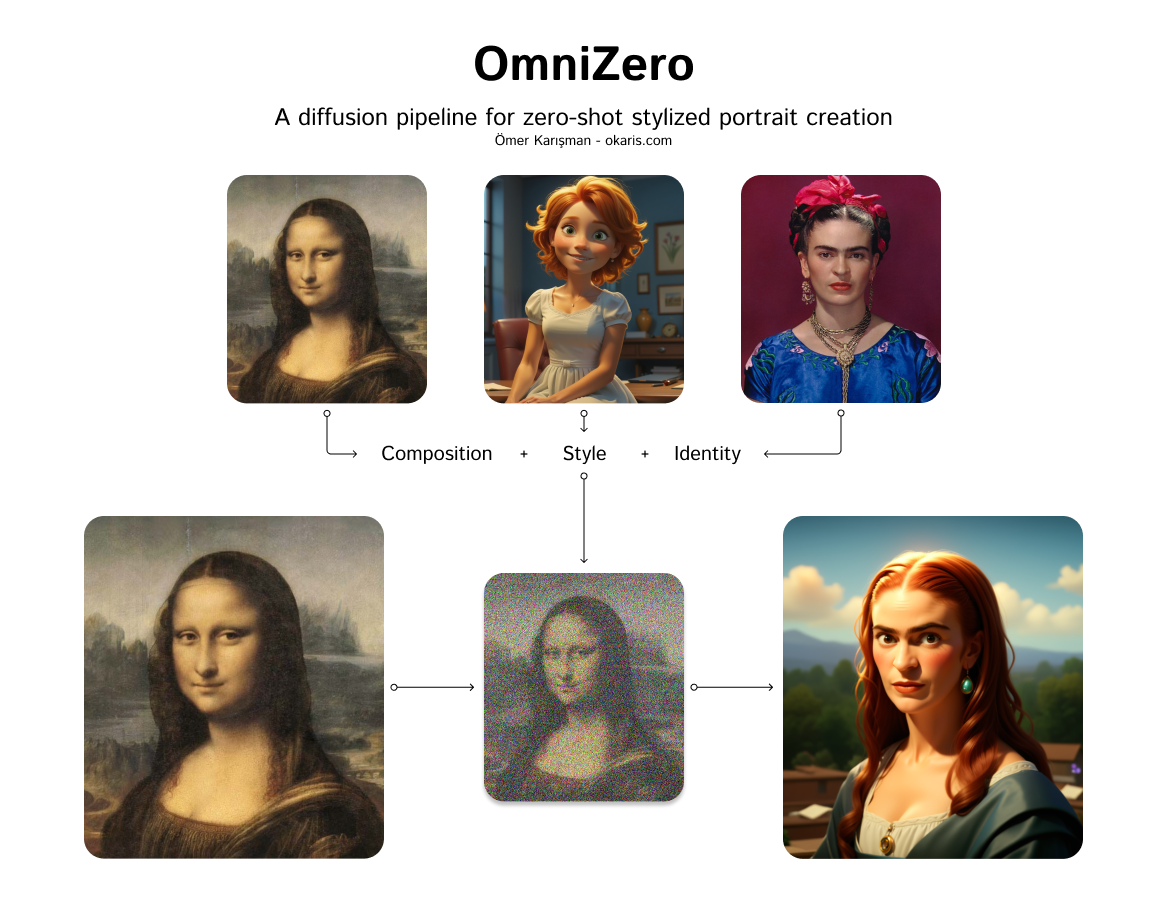

omni-zero

458

Omni-Zero is a diffusion pipeline model created by okaris that enables zero-shot stylized portrait creation. It leverages the power of diffusion models, similar to Stable Diffusion, to generate photo-realistic images from text prompts. However, Omni-Zero adds the ability to apply various styles and effects to the generated portraits, allowing for a high degree of customization and creativity.

Model inputs and outputs

Omni-Zero takes in a variety of inputs that allow for fine-tuned control over the generated portraits. These include a text prompt, a seed value for reproducibility, a guidance scale, and the number of steps and images to generate. Users can also provide optional input images, such as a base image, style image, identity image, and composition image, to further influence the output.

Inputs

- **Seed**: A random seed value for reproducibility
- **Prompt**: The text prompt describing the desired portrait
- **Negative Prompt**: Optional text to exclude from the generated image
- **Number of Images**: The number of images to generate
- **Number of Steps**: The number of steps to use in the diffusion process
- **Guidance Scale**: The strength of the text guidance during the diffusion process
- **Base Image**: An optional base image to use as a starting point
- **Style Image**: An optional image to use as a style reference
- **Identity Image**: An optional image to use as an identity reference
- **Composition Image**: An optional image to use as a composition reference
- **Depth Image**: An optional depth image to use for depth-aware generation

Outputs

- An array of generated portrait images in the form of image URLs

Capabilities

Omni-Zero excels at generating highly stylized and personalized portraits from text prompts. It can capture a wide range of artistic styles, from photorealistic to more abstract and impressionistic renderings. The model's ability to incorporate various input images, such as style and identity references, allows for a high degree of customization and creative expression.

What can I use it for?

Omni-Zero can be a powerful tool for artists, designers, and content creators who want to quickly generate unique and visually striking portrait images. It could be used to create custom avatars, character designs, or even personalized art pieces. The model's versatility also makes it suitable for various applications, such as social media content, illustrations, and even product design.

Things to try

One interesting aspect of Omni-Zero is its ability to blend multiple styles and identities in a single generated portrait. By providing a diverse set of input images, users can explore the interplay of different visual elements and create unique portraits. Additionally, experimenting with the depth image and composition inputs can lead to depth-aware and spatially aware generations.
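The Omni-Zero inputs described above can be gathered into a single request payload before being sent to whatever service hosts the model. The following is a minimal sketch under stated assumptions: the class name `OmniZeroInput`, the snake_case field names, and every default value are hypothetical, derived only from the input labels in the description, and may not match the model's actual API schema.

```python
from dataclasses import dataclass, asdict
from typing import Any, Dict, Optional


@dataclass
class OmniZeroInput:
    """Hypothetical container for the Omni-Zero inputs listed in the description.

    Field names and defaults are assumptions for illustration only,
    not the model's documented schema.
    """

    prompt: str
    seed: int = 42
    negative_prompt: str = ""
    number_of_images: int = 1
    number_of_steps: int = 10
    guidance_scale: float = 3.0
    # Optional reference images, given here as URLs or file paths.
    base_image: Optional[str] = None
    style_image: Optional[str] = None
    identity_image: Optional[str] = None
    composition_image: Optional[str] = None
    depth_image: Optional[str] = None

    def to_payload(self) -> Dict[str, Any]:
        """Validate basic ranges and drop optional images that were not set."""
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.number_of_images < 1:
            raise ValueError("number_of_images must be at least 1")
        if self.number_of_steps < 1:
            raise ValueError("number_of_steps must be at least 1")
        if self.guidance_scale < 0:
            raise ValueError("guidance_scale must be non-negative")
        return {k: v for k, v in asdict(self).items() if v is not None}


# Example: a prompt combined with a style reference and an identity reference.
example_payload = OmniZeroInput(
    prompt="a watercolor portrait of a violinist",
    style_image="https://example.com/style.png",
    identity_image="https://example.com/face.png",
).to_payload()
```

Fixing the seed while changing only one reference image at a time is one way to see how each image input influences the result. If the model is called through a client library, a dictionary like `example_payload` would typically be passed as the input argument, after checking the field names against the model's published schema.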

Updated 9/20/2024

live-portrait

16

The live-portrait model is an AI-powered portrait animation tool that can generate realistic and dynamic animations from a source image or video. Developed by Replicate creator okaris, this model builds upon similar work such as Efficient Portrait Animation with Stitching and Retargeting Control, live portrait with audio, and Portrait animation using a driving video source. The model aims to create lifelike animations that can be used for a variety of applications, from social media content to virtual avatars.

Model inputs and outputs

The live-portrait model takes in a source image or video, a driving video, and various configuration parameters to control the animation. The outputs are a series of images or a video that brings the source portrait to life, with the movement and expressions matched to the driving video.

Inputs

- **Source Image**: The source image to be animated
- **Source Video**: The source video to be animated
- **Driving Video**: The video that will drive the animation
- **Scale, Vx Ratio, Vy Ratio, Flag Remap, Flag Do Crop, Flag Relative, Flag Crop Driving Video, Scale Crop Driving Video, Vx Ratio Crop Driving Video, Vy Ratio Crop Driving Video, Flag Video Editing Head Rotation, Driving Smooth Observation Variance**: Various configuration parameters to control the animation

Outputs

- **Output**: A series of images or a video that animates the source portrait

Capabilities

The live-portrait model is capable of generating realistic and dynamic animations from a variety of input sources. By leveraging the driving video, the model can animate the source portrait so that it closely follows the movements and expressions in the driving video, resulting in a lifelike and engaging experience. The model's ability to control various parameters, such as cropping, scaling, and relative motion, allows for a high degree of customization and fine-tuning of the animation.

What can I use it for?

The live-portrait model can be used for a wide range of applications, from social media content creation to virtual avatars and virtual reality experiences. Content creators could use the model to generate animated portraits for their videos or social media posts. Developers could integrate the model into their applications to create virtual assistants or avatars with realistic facial expressions and movements. Businesses could also leverage the model to create personalized video content or virtual experiences for their customers.

Things to try

One interesting aspect of the live-portrait model is its ability to work with both source images and videos. Users can experiment with mixing and matching different source materials and driving videos to create unique and unexpected animations. Additionally, the model's configurable parameters allow for a high degree of control over the animation, enabling users to fine-tune the results to their specific needs or artistic vision.
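The live-portrait inputs described above (a source image or video, a driving video, and a set of configuration parameters) can be assembled and sanity-checked before a request is made. This is a rough sketch, not the model's actual interface: the function name `build_live_portrait_input` and the snake_case option names are hypothetical, derived from the parameter labels in the description, and the rule that exactly one of the two source inputs is supplied is an assumption.

```python
from typing import Any, Dict, Optional

# Hypothetical option names, derived from the parameter labels in the description.
ALLOWED_OPTIONS = {
    "scale", "vx_ratio", "vy_ratio",
    "flag_remap", "flag_do_crop", "flag_relative",
    "flag_crop_driving_video", "scale_crop_driving_video",
    "vx_ratio_crop_driving_video", "vy_ratio_crop_driving_video",
    "flag_video_editing_head_rotation",
    "driving_smooth_observation_variance",
}


def build_live_portrait_input(
    driving_video: str,
    source_image: Optional[str] = None,
    source_video: Optional[str] = None,
    **options: Any,
) -> Dict[str, Any]:
    """Assemble an input dictionary for a portrait-animation request.

    Assumption: exactly one of source_image / source_video is provided.
    """
    if (source_image is None) == (source_video is None):
        raise ValueError("provide exactly one of source_image or source_video")

    unknown = set(options) - ALLOWED_OPTIONS
    if unknown:
        raise ValueError(f"unknown options: {sorted(unknown)}")

    payload: Dict[str, Any] = {"driving_video": driving_video}
    if source_image is not None:
        payload["source_image"] = source_image
    else:
        payload["source_video"] = source_video
    payload.update(options)
    return payload


# Example: animate a still image, cropping both the source and the driving video.
example_input = build_live_portrait_input(
    driving_video="https://example.com/driving.mp4",
    source_image="https://example.com/portrait.png",
    flag_do_crop=True,
    flag_crop_driving_video=True,
)
```

Rejecting unknown option names early makes typos in the long parameter list easier to catch than a failed remote request would.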

Updated 9/20/2024