metavoice

Maintainer: camenduru

9

| Property | Value |

|---|---|

| Run this model | Run on Replicate |

| API spec | View on Replicate |

| Github link | View on Github |

| Paper link | No paper link provided |

Create account to get full access

Model overview

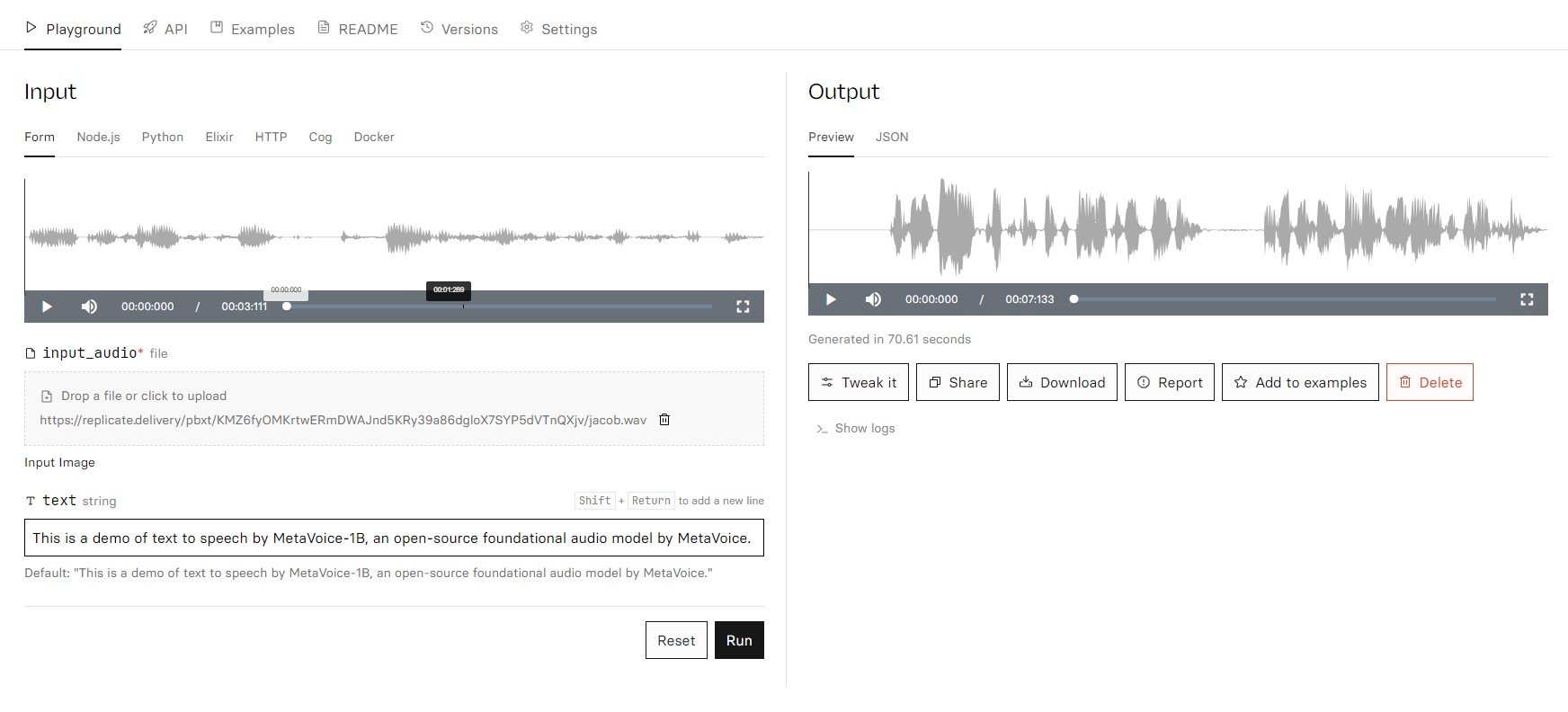

MetaVoice-1B is a 1.2 billion parameter base model trained on 100,000 hours of speech, developed by the MetaVoice team. This large-scale speech model can be used for a variety of text-to-speech and audio generation tasks, similar to models like ml-mgie, meta-llama-3-8b-instruct, whisperspeech-small, voicecraft, and whisperx.

Model inputs and outputs

MetaVoice-1B takes in text as input and generates audio as output. The model can be used for a wide range of text-to-speech and audio generation tasks.

Inputs

- Text: The text to be converted to speech.

Outputs

- Audio: The generated audio in a URI format.

Capabilities

MetaVoice-1B is a powerful foundational audio model capable of generating high-quality speech from text inputs. It can be used for tasks like text-to-speech, audio synthesis, and voice cloning.

What can I use it for?

The MetaVoice-1B model can be used for a variety of applications, such as creating audiobooks, podcasts, or voice assistants. It can also be used to generate synthetic voices for video games, movies, or other multimedia projects. Additionally, the model can be fine-tuned for specific use cases, such as language learning or accessibility applications.

Things to try

With MetaVoice-1B, you can experiment with generating speech in different styles, emotions, or languages. You can also explore using the model for tasks like audio editing, voice conversion, or multi-speaker audio generation.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

one-shot-talking-face

1

one-shot-talking-face is an AI model that enables the creation of realistic talking face animations from a single input image. It was developed by Camenduru, an AI model creator. This model is similar to other talking face animation models like AniPortrait: Audio-Driven Synthesis of Photorealistic Portrait Animation, Make any Image Talk, and AnimateLCM Cartoon3D Model. These models aim to bring static images to life by animating the subject's face in response to audio input. Model inputs and outputs one-shot-talking-face takes two input files: a WAV audio file and an image file. The model then generates an output video file that animates the face in the input image to match the audio. Inputs Wav File**: The audio file that will drive the facial animation. Image File**: The input image containing the face to be animated. Outputs Output**: A video file that shows the face in the input image animated to match the audio. Capabilities one-shot-talking-face can create highly realistic and expressive talking face animations from a single input image. The model is able to capture subtle facial movements and expressions, resulting in animations that appear natural and lifelike. What can I use it for? one-shot-talking-face can be a powerful tool for a variety of applications, such as creating engaging video content, developing virtual assistants or digital avatars, or even enhancing existing videos by animating static images. The model's ability to generate realistic talking face animations from a single image makes it a versatile and accessible tool for creators and developers. Things to try One interesting aspect of one-shot-talking-face is its potential to bring historical or artistic figures to life. By providing a portrait image and appropriate audio, the model can animate the subject's face, allowing users to hear the figure speak in a lifelike manner. This could be a captivating way to bring the past into the present or to explore the expressive qualities of iconic artworks.

Updated Invalid Date

rvc-v2

42

The rvc-v2 model is a speech-to-speech tool that allows you to apply voice conversion to any audio input using any RVC v2 trained AI voice model. It is developed and maintained by pseudoram. Similar models include the realistic-voice-cloning model for creating song covers, the create-rvc-dataset model for building your own RVC v2 dataset, the free-vc model for changing voice for spoken text, the vqmivc model for one-shot voice conversion, and the metavoice model for a large-scale base model for voice conversion. Model inputs and outputs The rvc-v2 model takes an audio file as input and allows you to convert the voice to any RVC v2 trained AI voice model. The output is the audio file with the converted voice. Inputs Input Audio**: The audio file to be converted. RVC Model**: The specific RVC v2 trained AI voice model to use for the voice conversion. Pitch Change**: Adjust the pitch of the AI vocals in semitones. F0 Method**: The pitch detection algorithm to use, either 'rmvpe' for clarity or 'mangio-crepe' for smoother vocals. Index Rate**: Control how much of the AI's accent to leave in the vocals. Filter Radius**: Apply median filtering to the harvested pitch results. RMS Mix Rate**: Control how much to use the original vocal's loudness or a fixed loudness. Protect**: Control how much of the original vocals' breath and voiceless consonants to leave in the AI vocals. Output Format**: Choose between WAV for best quality or MP3 for smaller file size. Outputs Converted Audio**: The input audio file with the voice converted to the selected RVC v2 model. Capabilities The rvc-v2 model can effectively change the voice in any audio input to a specific RVC v2 trained AI voice. This can be useful for tasks like creating song covers, changing the voice in videos or recordings, or even generating novel voices for various applications. What can I use it for? The rvc-v2 model can be used for a variety of projects and applications. For example, you could use it to create song covers with a unique AI-generated voice, or to change the voice in videos or audio recordings to a different persona. It could also be used to generate novel voices for audiobooks, video game characters, or other voice-based applications. The model's flexibility and the wide range of available voice models make it a powerful tool for voice conversion and generation tasks. Things to try One interesting thing to try with the rvc-v2 model is to experiment with the different pitch, index rate, and filtering options to find the right balance of clarity, smoothness, and authenticity in the converted voice. You could also try combining the rvc-v2 model with other audio processing tools to create more complex voice transformations, such as adding effects or mixing the converted voice with the original. Additionally, you could explore training your own custom RVC v2 voice models and using them with the rvc-v2 tool to create unique, personalized voice conversions.

Updated Invalid Date

meta-llama-3-70b-instruct

117.2K

meta-llama-3-70b-instruct is a 70 billion parameter language model from Meta that has been fine-tuned for chat completions. It is part of Meta's Llama series of language models, which also includes the meta-llama-3-8b-instruct, codellama-70b-instruct, meta-llama-3-70b, codellama-13b-instruct, and codellama-7b-instruct models. Model inputs and outputs meta-llama-3-70b-instruct is a text-based model, taking in a prompt as input and generating text as output. The model has been specifically fine-tuned for chat completions, meaning it is well-suited for engaging in open-ended dialogue and responding to prompts in a conversational manner. Inputs Prompt**: The text that is provided as input to the model, which it will use to generate a response. Outputs Generated text**: The text that the model outputs in response to the input prompt. Capabilities meta-llama-3-70b-instruct can engage in a wide range of conversational tasks, from open-ended discussion to task-oriented dialog. It has been trained on a vast amount of text data, allowing it to draw upon a deep knowledge base to provide informative and coherent responses. The model can also generate creative and imaginative text, making it well-suited for applications such as story writing and idea generation. What can I use it for? With its strong conversational abilities, meta-llama-3-70b-instruct can be used for a variety of applications, such as building chatbots, virtual assistants, and interactive educational tools. Businesses could leverage the model to provide customer service, while writers and content creators could use it to generate new ideas and narrative content. Researchers may also find the model useful for exploring topics in natural language processing and exploring the capabilities of large language models. Things to try One interesting aspect of meta-llama-3-70b-instruct is its ability to engage in multi-turn dialogues and maintain context over the course of a conversation. You could try prompting the model with an initial query and then continuing the dialog, observing how it builds upon the previous context. Another interesting experiment would be to provide the model with prompts that require reasoning or problem-solving, and see how it responds.

Updated Invalid Date

free-vc

65

The free-vc model is a tool developed by jagilley that allows you to change the voice of spoken text. It can be used to convert the audio of one person's voice to sound like another person's voice. This can be useful for applications like voice over, dubbing, or text-to-speech. The free-vc model is similar in capabilities to other voice conversion models like VoiceConversionWebUI, incredibly-fast-whisper, voicecraft, and styletts2. Model inputs and outputs The free-vc model takes two inputs: a source audio file containing the words that should be spoken, and a reference audio file containing the voice that the resulting audio should have. The model then outputs a new audio file with the source text spoken in the voice of the reference audio. Inputs Source Audio**: The audio file containing the words that should be spoken Reference Audio**: The audio file containing the voice that the resulting audio should have Outputs Output Audio**: The new audio file with the source text spoken in the voice of the reference audio Capabilities The free-vc model can be used to change the voice of any spoken audio, allowing you to convert one person's voice to sound like another. This can be useful for a variety of applications, such as voice over, dubbing, or text-to-speech. What can I use it for? The free-vc model can be used for a variety of applications, such as: Voice Over**: Convert the voice in a video or audio recording to sound like a different person. Dubbing**: Change the voice in a foreign language film or video to match the local language. Text-to-Speech**: Generate audio of text spoken in a specific voice. Things to try Some ideas for things to try with the free-vc model include: Experiment with different source and reference audio files to see how the resulting audio sounds. Try using the model to create a voice over or dub for a short video or audio clip. See if you can use the model to generate text-to-speech audio in a specific voice.

Updated Invalid Date