xtts-v1

Maintainer: pagebrain

4

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| GitHub link | View on GitHub |
| Paper link | View on arXiv |


Model overview

The xtts-v1 model from maintainer pagebrain offers voice cloning from a reference audio clip as short as 3 seconds. It is similar to other voice cloning models that aim to provide versatile, instant voice cloning.

Model inputs and outputs

The xtts-v1 model takes three inputs: a text prompt, a target language, and a reference audio clip. It returns synthesized speech of the prompt in the voice of the reference speaker.

Inputs

- Prompt: The text that will be converted to speech

- Language: The output language for the synthesized speech

- Speaker Wav: A reference audio clip used for voice cloning

Outputs

- Output: A URI pointing to the generated audio file
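To make these inputs concrete, here is a minimal Python sketch that assembles a request payload and checks the reference clip against the 3-second guideline from the overview. The field names `prompt`, `language`, and `speaker_wav` are assumptions inferred from the input labels above, not taken from the API spec, and the duration check uses the standard-library `wave` module, so it only handles uncompressed WAV files.

```python
import io
import wave

# The overview says a clip of about 3 seconds is enough for cloning.
MIN_REFERENCE_SECONDS = 3.0


def wav_duration_seconds(wav_bytes: bytes) -> float:
    """Return the length in seconds of an uncompressed WAV clip held in memory."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as clip:
        return clip.getnframes() / clip.getframerate()


def build_xtts_input(prompt: str, language: str, speaker_wav: bytes) -> dict:
    """Assemble a request payload for xtts-v1.

    The keys "prompt", "language", and "speaker_wav" are assumptions based on
    the input labels on this page, not on the published API spec.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    duration = wav_duration_seconds(speaker_wav)
    if duration < MIN_REFERENCE_SECONDS:
        raise ValueError(
            f"reference clip is {duration:.1f}s; use at least {MIN_REFERENCE_SECONDS:.0f}s"
        )
    return {"prompt": prompt, "language": language, "speaker_wav": speaker_wav}
```

With the official `replicate` Python client, a dict like this would be passed as the `input` argument to `replicate.run`, with the clip typically supplied as an open file handle or a URL rather than raw bytes; the model version identifier is left out here.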

Capabilities

The xtts-v1 model can quickly clone a voice from just a short audio clip. This enables applications like audiobook narration, voice-over work, language learning tools, and accessibility solutions that require personalized text-to-speech.

What can I use it for?

The xtts-v1 model's voice cloning capabilities open up a wide range of potential use cases. Content creators could use it to generate custom voiceovers for their videos and podcasts. Educators could leverage it to create personalized learning materials. Companies could utilize it to provide more natural-sounding text-to-speech for customer service, product demos, and other applications.

Things to try

One interesting aspect of the xtts-v1 model is its ability to generate speech that closely matches the intonation and timbre of a reference audio clip. You could experiment with using different speaker voices as inputs to create a diverse range of synthetic voices. Additionally, you could try combining the model's output with other tools for audio editing or video lip-synchronization to create more polished multimedia content.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

xtts-v2

311

The xtts-v2 model is a multilingual text-to-speech voice cloning system, packaged as a Cog implementation maintained by lucataco. It is part of the Coqui TTS project, an open-source text-to-speech library. It is similar to other text-to-speech models such as whisperspeech-small and styletts2, which also generate speech from text.

Model inputs and outputs

The xtts-v2 model takes three main inputs: the text to synthesize, a speaker audio file, and the output language. It produces an audio file of the input text spoken in the voice of the provided speaker.

Inputs

- Text: The text to be synthesized

- Speaker: The original speaker audio file (wav, mp3, m4a, ogg, or flv)

- Language: The output language for the synthesized speech

Outputs

- Output: The synthesized audio file

Capabilities

The xtts-v2 model can generate high-quality multilingual text-to-speech audio by cloning the voice of a provided speaker. This is useful for creating personalized audio content, improving accessibility, or enhancing virtual assistants.

What can I use it for?

The xtts-v2 model can be used to create personalized audio content such as audiobooks, podcasts, or video narration. It can also improve accessibility by generating audio versions of written content for users with visual impairments or other disabilities, or give virtual assistants and chatbots a more natural, human-like voice.

Things to try

Experiment with different speaker audio files to hear how the synthesized voice changes, or generate audio in several languages and compare the results. You could also integrate the model into your own applications or projects to enhance the user experience.
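Since xtts-v2 lists the speaker-file formats it accepts, a client can reject unsupported files before uploading them. This Python sketch checks the file extension against that list; the payload keys `text`, `speaker`, and `language` are assumptions that mirror the input labels above, not names confirmed by the API spec.

```python
from pathlib import Path

# Speaker-file formats listed for xtts-v2 on this page.
SPEAKER_FORMATS = frozenset({".wav", ".mp3", ".m4a", ".ogg", ".flv"})


def build_xtts_v2_input(text: str, speaker_path: str, language: str) -> dict:
    """Validate inputs and return a payload; the key names are assumptions.

    Only the file extension is checked; the audio content itself is not inspected.
    """
    suffix = Path(speaker_path).suffix.lower()
    if suffix not in SPEAKER_FORMATS:
        supported = ", ".join(sorted(SPEAKER_FORMATS))
        raise ValueError(f"unsupported speaker file {speaker_path!r}; expected one of {supported}")
    if not text.strip():
        raise ValueError("text must not be empty")
    return {"text": text, "speaker": speaker_path, "language": language}
```

An extension check is cheap and catches obvious mistakes, but a renamed or corrupt file will still pass it.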


whisper

30.6K

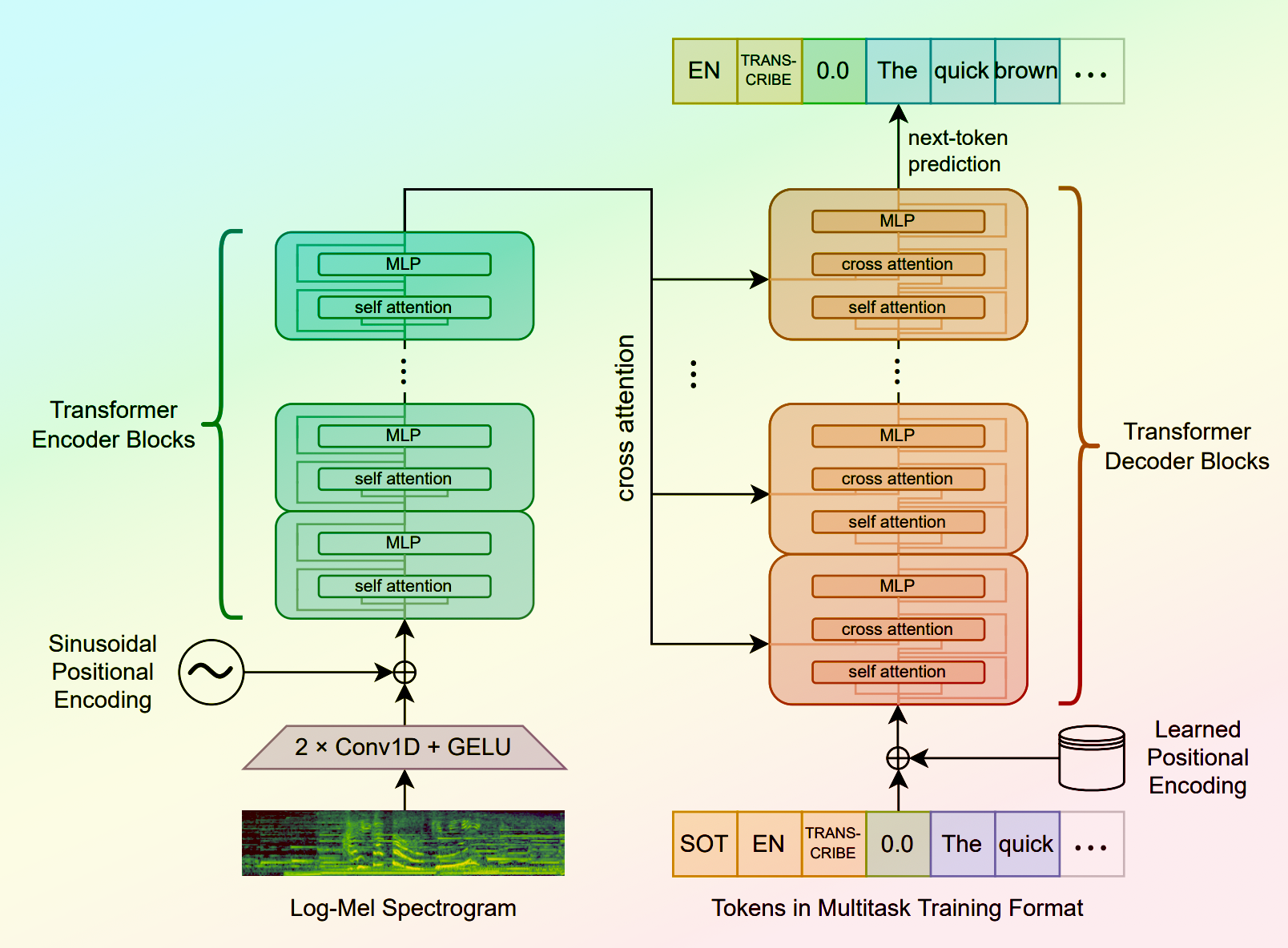

Whisper is a general-purpose speech recognition model developed by OpenAI. It converts speech in audio to text and can optionally translate that text into English. Whisper is a large Transformer model trained on a diverse multilingual, multitask speech recognition dataset, which lets it handle a wide range of accents, background noise, and languages. Similar models such as whisper-large-v3, incredibly-fast-whisper, and whisper-diarization add optimizations and extra features on top of the core Whisper model.

Model inputs and outputs

Whisper takes an audio file as input and outputs a text transcription, optionally translated into English. The input audio can be in various formats, and a range of parameters, such as temperature, patience, and language, let you tune the transcription.

Inputs

- Audio: The audio file to be transcribed

- Model: The Whisper model version to use; currently only large-v3 is supported

- Language: The language spoken in the audio, or None to perform language detection

- Translate: A boolean flag to translate the transcription to English

- Transcription: The format for the transcription output, such as "plain text"

- Initial Prompt: An optional initial text prompt to provide to the model

- Suppress Tokens: A list of token IDs to suppress during sampling

- Logprob Threshold: The minimum average log probability for a transcription to count as successful

- No Speech Threshold: The threshold for considering a segment as silence

- Condition on Previous Text: Whether to provide the previous output as a prompt for the next window

- Compression Ratio Threshold: The maximum compression ratio for a transcription to count as successful

- Temperature Increment on Fallback: The amount the temperature increases when decoding fails to meet the specified thresholds

Outputs

- Transcription: The text transcription of the input audio

- Language: The detected language of the audio (if the language input is None)

- Tokens: The token IDs corresponding to the transcription

- Timestamp: The start and end timestamps for each word in the transcription

- Confidence: The confidence score for each word in the transcription

Capabilities

Whisper handles a wide range of accents, background noise, and languages. It can transcribe audio accurately and optionally translate the transcription to English, which makes it useful for real-time captioning, meeting transcription, and general audio-to-text conversion.

What can I use it for?

Whisper can be used in any application that requires speech-to-text conversion, such as:

- Captioning and Subtitling: Automatically generate captions or subtitles for videos, improving accessibility for viewers.

- Meeting Transcription: Transcribe recordings of meetings, interviews, or conferences for easy review and sharing.

- Podcast Transcription: Convert audio podcasts to text, making the content more searchable and accessible.

- Language Translation: Transcribe audio in one language and translate the text into English, enabling cross-language communication.

- Voice Interfaces: Integrate Whisper into voice-controlled applications, such as virtual assistants or smart home devices.

Things to try

Because Whisper handles many languages and accents, try it on audio samples in different languages or with various background noises to see how it copes with real-world conditions. You can also explore how input parameters such as temperature, patience, and language detection affect transcription quality and accuracy.
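The threshold and fallback parameters above work together during decoding. The Python sketch below is a simplified version of the temperature-fallback loop in the open-source openai/whisper package: decode greedily first, retry at the next temperature whenever the output compresses too well (a sign of repetitive looping) or its average log probability is too low, and treat the window as silence when the no-speech probability is high and confidence is low. `DecodeAttempt` and the `decode_fn` callback are hypothetical stand-ins for a real decoder, and the default values are illustrative, modeled on the open-source command-line tool; this Replicate deployment may use different ones.

```python
import zlib
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class DecodeAttempt:
    """Hypothetical result of decoding one audio window."""
    text: str
    avg_logprob: float     # mean log probability of the sampled tokens
    no_speech_prob: float  # model's probability that the window has no speech
    temperature: float = 0.0


def compression_ratio(text: str) -> float:
    """Looping, repetitive text compresses well and so yields a high ratio."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def fallback_temperatures(increment: float) -> List[float]:
    """Temperatures 0.0, increment, 2*increment, ... not exceeding 1.0."""
    if increment <= 0:
        return [0.0]
    steps = int(1.0 / increment + 1e-6)
    return [round(i * increment, 6) for i in range(steps + 1)]


def decode_with_fallback(
    decode_fn: Callable[[float], DecodeAttempt],
    compression_ratio_threshold: float = 2.4,
    logprob_threshold: float = -1.0,
    no_speech_threshold: float = 0.6,
    temperature_increment_on_fallback: float = 0.2,
) -> Optional[DecodeAttempt]:
    """Decode at rising temperatures until the output passes both quality checks.

    Returns None when the window looks like silence.
    """
    attempt: Optional[DecodeAttempt] = None
    for temperature in fallback_temperatures(temperature_increment_on_fallback):
        attempt = decode_fn(temperature)
        attempt.temperature = temperature
        # Silence: the model expects no speech and is not confident in its text.
        if attempt.no_speech_prob > no_speech_threshold and attempt.avg_logprob < logprob_threshold:
            return None
        too_repetitive = compression_ratio(attempt.text) > compression_ratio_threshold
        too_unlikely = attempt.avg_logprob < logprob_threshold
        if not (too_repetitive or too_unlikely):
            return attempt
    # Every temperature failed the checks; keep the last (highest-temperature) attempt.
    return attempt
```

Starting at temperature 0 keeps the common case deterministic; sampling randomness is only introduced when the greedy output looks degenerate.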


parler-tts

4.2K

parler-tts is a lightweight text-to-speech (TTS) model published on Replicate by cjwbw. It is trained on 10.5K hours of audio data and generates high-quality, natural-sounding speech with controllable features such as gender, background noise, speaking rate, pitch, and reverberation. It is related to models like voicecraft, whisper, and sabuhi-model, which also focus on speech tasks, and the parler_tts_mini_v0.1 model provides a lightweight version of the parler-tts system.

Model inputs and outputs

The parler-tts model takes two main inputs: a text prompt and a text description. The prompt is the text to be converted into speech, while the description controls the characteristics of the generated audio, such as the speaker's gender, pitch, speaking rate, and environmental factors.

Inputs

- Prompt: The text to be converted into speech.

- Description: A text description of the desired characteristics of the generated audio, such as the speaker's gender, pitch, speaking rate, and environmental factors.

Outputs

- Audio: The generated audio file in WAV format, which can be played back or further processed as needed.

Capabilities

The parler-tts model generates high-quality, natural-sounding speech with a range of customizable features. By crafting the text description, users can control the gender, pitch, speaking rate, and environmental factors of the generated audio. This flexibility makes it useful for audio production, virtual assistants, and language learning.

What can I use it for?

The parler-tts model suits a variety of applications that require text-to-speech functionality. Some potential use cases include:

- Audio production: Generate natural-sounding voice-overs, narrations, or audio content for videos, podcasts, or other multimedia projects.

- Virtual assistants: Use speech with customizable characteristics to create more personalized and engaging virtual assistants.

- Language learning: Generate sample audio for language learning materials, giving learners high-quality examples of pronunciation and intonation.

- Accessibility: Generate audio versions of text content, improving accessibility for individuals with visual impairments or reading difficulties.

Things to try

The parler-tts model offers a high degree of control over its output. Experiment with different text descriptions to explore the range of speech styles and environments the model can produce: vary the descriptors for the speaker's gender, pitch, and speaking rate, or add details about the recording environment, such as the level of background noise or reverberation. By fine-tuning the description, you can create a wide variety of speech samples for different applications.
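Because the parler-tts description is free text, one way to explore its controls systematically is to build the description from the attributes the summary lists. This Python helper is hypothetical: its template wording is only an example, and the payload keys `prompt` and `description` mirror the input labels above rather than a confirmed API spec.

```python
def build_parler_input(
    prompt: str,
    gender: str = "female",
    pitch: str = "moderate",
    rate: str = "slightly slow",
    noise: str = "very little",
    reverb: str = "close-sounding",
) -> dict:
    """Compose a natural-language description from individual voice attributes.

    The template wording is illustrative; any descriptive sentence can be used.
    The returned keys are assumptions based on the input labels on this page.
    """
    description = (
        f"A {gender} speaker with a {pitch} pitch speaks at a {rate} pace "
        f"with {noise} background noise in a {reverb} recording."
    )
    return {"prompt": prompt, "description": description}
```

Varying one argument at a time while holding the others fixed makes it easier to hear what each descriptor changes.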


realistic-voice-cloning

307

The realistic-voice-cloning model, created by zsxkib, creates song covers by cloning a specific voice from audio files. It builds on Retrieval-based Voice Conversion (RVC v2), letting users generate vocals in the style of any RVC v2 trained voice. It is an alternative to similar voice cloning models such as create-rvc-dataset, openvoice, free-vc, train-rvc-model, and voicecraft, each with its own features and capabilities.

Model inputs and outputs

The realistic-voice-cloning model takes a variety of inputs for fine-tuning the generated vocals, including the RVC model to use, pitch changes, reverb settings, and more. The output is an audio file in MP3 or WAV format in which the original song's vocals are replaced with the cloned voice.

Inputs

- Song Input: The audio file to use as the source for the song

- RVC Model: The specific RVC v2 model to use for the voice cloning

- Pitch Change: Adjust the pitch of the AI-generated vocals

- Index Rate: Control the balance between the AI's accent and the original vocals

- RMS Mix Rate: Adjust the balance between the original vocals' loudness and a fixed loudness

- Filter Radius: Apply median filtering to the harvested pitch results

- Pitch Detection Algorithm: Choose between different pitch detection algorithms

- Protect: Control how much of the original vocals' breath and voiceless consonants to keep in the AI vocals

- Reverb Size, Damping, Dryness, and Wetness: Adjust the reverb settings

- Pitch Change All: Change the pitch/key of the background music, backup vocals, and AI vocals

- Volume Changes: Adjust the volume of the main AI vocals, backup vocals, and background music

Outputs

- The generated audio file in MP3 or WAV format, with the original vocals replaced by the cloned voice

Capabilities

The realistic-voice-cloning model can create high-quality song covers by replacing the original vocals with a cloned voice. Users can fine-tune the generated vocals by adjusting parameters like pitch, reverb, and volume. This is particularly useful for musicians, content creators, and audio engineers who want to create unique vocal covers or experiment with different voice styles.

What can I use it for?

The realistic-voice-cloning model can be used for song covers, remixes, and other audio projects where you want to replace the original vocals with a different voice. Musicians can experiment with different vocal styles, content creators can produce unique covers, and audio engineers can modify existing vocal tracks. The fine-grained control over the generated vocals also makes it suitable for professional audio production work.

Things to try

Try creating song covers by cloning the voice of your favorite singers or your own voice. Experiment with different RVC models, pitch changes, and reverb settings to achieve the desired sound, or use the model to create custom vocal samples and background vocals for your music productions.
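Key changes such as Pitch Change All are commonly expressed in semitones, where each semitone multiplies frequency by the twelfth root of 2. The summary does not state the unit this model uses, so the Python sketch below assumes semitones; it only computes the frequency ratio and does not perform any pitch shifting itself.

```python
def semitone_ratio(semitones: float) -> float:
    """Frequency multiplier for a shift of `semitones` in 12-tone equal temperament."""
    return 2.0 ** (semitones / 12.0)


def shifted_frequency(freq_hz: float, semitones: float) -> float:
    """Frequency after shifting `freq_hz` by `semitones` (negative shifts go down)."""
    return freq_hz * semitone_ratio(semitones)
```

For example, shifting A4 (440 Hz) up 3 semitones gives about 523.25 Hz (C5), and a shift of 12 or -12 semitones doubles or halves the frequency, which is one octave.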
