rankiqa

Maintainer: rossjillian

1

| Property | Value |

|---|---|

| Run this model | Run on Replicate |

| API spec | View on Replicate |

| Github link | View on Github |

| Paper link | View on Arxiv |

Create account to get full access

Model overview

The rankiqa model is a machine learning model developed by Jillian Ross that is used to get image quality scores. This model is similar to other AI models like GFPGAN, which is used for face restoration, and SDXL-HiroshiNagai, which is a Stable Diffusion XL model trained on Hiroshi Nagai's illustrations.

Model inputs and outputs

The rankiqa model takes a single input, which is an image. The output of the model is a single number representing the quality score of the input image.

Inputs

- Image: The image to be assessed for quality.

Outputs

- Output: A numeric score representing the quality of the input image.

Capabilities

The rankiqa model is capable of assessing the quality of input images and providing a numerical score. This can be useful for a variety of applications, such as evaluating the quality of AI-generated images or selecting the best images from a set.

What can I use it for?

The rankiqa model can be used to assess the quality of images for a variety of purposes, such as selecting the best images for a marketing campaign or evaluating the performance of an AI image generation model. For example, you could use the rankiqa model to automatically select the highest-quality images from a large set of images generated by a model like Real-ESRGAN-XXL-Images or Img2Paint_ControlNet.

Things to try

One interesting thing to try with the rankiqa model is to use it to evaluate the quality of images generated by different AI models or techniques. You could compare the quality scores of images generated by different models or with different hyperparameters to understand how the quality of the output varies. This could be particularly useful for projects that involve generating or manipulating images, such as QR2AI's AI-generated QR codes.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

sdxl-lightning-4step

453.2K

sdxl-lightning-4step is a fast text-to-image model developed by ByteDance that can generate high-quality images in just 4 steps. It is similar to other fast diffusion models like AnimateDiff-Lightning and Instant-ID MultiControlNet, which also aim to speed up the image generation process. Unlike the original Stable Diffusion model, these fast models sacrifice some flexibility and control to achieve faster generation times. Model inputs and outputs The sdxl-lightning-4step model takes in a text prompt and various parameters to control the output image, such as the width, height, number of images, and guidance scale. The model can output up to 4 images at a time, with a recommended image size of 1024x1024 or 1280x1280 pixels. Inputs Prompt**: The text prompt describing the desired image Negative prompt**: A prompt that describes what the model should not generate Width**: The width of the output image Height**: The height of the output image Num outputs**: The number of images to generate (up to 4) Scheduler**: The algorithm used to sample the latent space Guidance scale**: The scale for classifier-free guidance, which controls the trade-off between fidelity to the prompt and sample diversity Num inference steps**: The number of denoising steps, with 4 recommended for best results Seed**: A random seed to control the output image Outputs Image(s)**: One or more images generated based on the input prompt and parameters Capabilities The sdxl-lightning-4step model is capable of generating a wide variety of images based on text prompts, from realistic scenes to imaginative and creative compositions. The model's 4-step generation process allows it to produce high-quality results quickly, making it suitable for applications that require fast image generation. What can I use it for? The sdxl-lightning-4step model could be useful for applications that need to generate images in real-time, such as video game asset generation, interactive storytelling, or augmented reality experiences. Businesses could also use the model to quickly generate product visualization, marketing imagery, or custom artwork based on client prompts. Creatives may find the model helpful for ideation, concept development, or rapid prototyping. Things to try One interesting thing to try with the sdxl-lightning-4step model is to experiment with the guidance scale parameter. By adjusting the guidance scale, you can control the balance between fidelity to the prompt and diversity of the output. Lower guidance scales may result in more unexpected and imaginative images, while higher scales will produce outputs that are closer to the specified prompt.

Updated Invalid Date

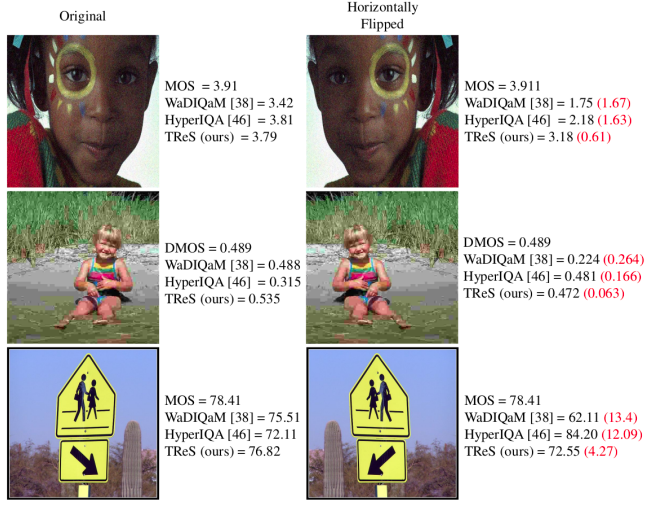

tres_iqa

146

tres_iqa is an AI model for assessing the quality of an image. It can be used to get a numerical score representing the perceived quality of an image. Similar AI models that can be used for image quality assessment include rankiqa, which also provides image quality scores, and stable-diffusion, gfpgan, real-esrgan, and realesrgan, which can be used for image restoration and enhancement. Model inputs and outputs tres_iqa takes a single input - an image file. It outputs a numerical score representing the perceived quality of the input image. Inputs input_image**: The image file to run quality assessment on. Outputs Output**: A numerical score representing the perceived quality of the input image. Capabilities tres_iqa can be used to assess the quality of an image. This could be useful for applications like selecting the best photos from a batch, filtering out low-quality images, or automatically enhancing images. What can I use it for? tres_iqa could be used in a variety of applications that require assessing image quality, such as photo editing, content curation, or image optimization for web or mobile. For example, you could use it to automatically filter out low-quality images from a batch, or to select the best photos from a photo shoot. You could also use it to enhance images by identifying areas that need improvement and applying appropriate editing techniques. Things to try You could try using tres_iqa to assess the quality of a set of images and then use that information to select the best ones or to enhance the lower-quality ones. You could also experiment with different types of images, such as portraits, landscapes, or product shots, to see how the model performs in different contexts.

Updated Invalid Date

ar

1

The ar model, created by qr2ai, is a text-to-image prompt model that can generate images based on user input. It shares capabilities with similar models like outline, gfpgan, edge-of-realism-v2.0, blip-2, and rpg-v4, all of which can generate, manipulate, or analyze images based on textual input. Model inputs and outputs The ar model takes in a variety of inputs to generate an image, including a prompt, negative prompt, seed, and various settings for text and image styling. The outputs are image files in a URI format. Inputs Prompt**: The text that describes the desired image Negative Prompt**: The text that describes what should not be included in the image Seed**: A random number that initializes the image generation D Text**: Text for the first design T Text**: Text for the second design D Image**: An image for the first design T Image**: An image for the second design F Style 1**: The font style for the first text F Style 2**: The font style for the second text Blend Mode**: The blending mode for overlaying text Image Size**: The size of the generated image Final Color**: The color of the final text Design Color**: The color of the design Condition Scale**: The scale for the image generation conditioning Name Position 1**: The position of the first text Name Position 2**: The position of the second text Padding Option 1**: The padding percentage for the first text Padding Option 2**: The padding percentage for the second text Num Inference Steps**: The number of denoising steps in the image generation process Outputs Output**: An image file in URI format Capabilities The ar model can generate unique, AI-created images based on text prompts. It can combine text and visual elements in creative ways, and the various input settings allow for a high degree of customization and control over the final output. What can I use it for? The ar model could be used for a variety of creative projects, such as generating custom artwork, social media graphics, or even product designs. Its ability to blend text and images makes it a versatile tool for designers, marketers, and artists looking to create distinctive visual content. Things to try One interesting thing to try with the ar model is experimenting with different combinations of text and visual elements. For example, you could try using abstract or surreal prompts to see how the model interprets them, or play around with the various styling options to achieve unique and unexpected results.

Updated Invalid Date

realesrgan

16

realesrgan is an AI model for image restoration and face enhancement. It was created by Xintao Wang, Liangbin Xie, Chao Dong, and Ying Shan from the Tencent ARC Lab and Shenzhen Institutes of Advanced Technology. realesrgan extends the powerful ESRGAN model to a practical restoration application, training on pure synthetic data. It aims to develop algorithms for general image and video restoration. realesrgan can be contrasted with similar models like GFPGAN, which focuses on restoring real-world faces, and real-esrgan, which adds optional face correction and adjustable upscaling to the base realesrgan model. Model inputs and outputs realesrgan takes an input image and can output an upscaled and enhanced version of that image. The model supports arbitrary upscaling factors using the --outscale argument. It can also optionally perform face enhancement using the --face_enhance flag, which integrates the GFPGAN model for improved facial details. Inputs img**: The input image to be processed tile**: The tile size to use for processing. Setting this to a non-zero value can help with GPU memory issues, but may introduce some artifacts. scale**: The upscaling factor to apply to the input image. version**: The specific version of the realesrgan model to use, such as the general "RealESRGAN_x4plus" or the anime-optimized "RealESRGAN_x4plus_anime_6B". face_enhance**: A boolean flag to enable face enhancement using the GFPGAN model. Outputs The upscaled and enhanced output image. Capabilities realesrgan can effectively restore and enhance a variety of image types, including natural scenes, anime illustrations, and faces. It is particularly adept at upscaling low-resolution images while preserving details and reducing artifacts. The model's face enhancement capabilities can also improve the appearance of faces in images, making them appear sharper and more natural. What can I use it for? realesrgan can be a valuable tool for a wide range of image processing and enhancement tasks. For example, it could be used to upscale low-resolution images for use in presentations, publications, or social media. The face enhancement capabilities could also be leveraged to improve the appearance of portraits or AI-generated faces. Additionally, realesrgan could be integrated into content creation workflows, such as anime or video game development, to enhance the quality of in-game assets or animated scenes. Things to try One interesting aspect of realesrgan is its ability to handle a wide range of input image types, including those with alpha channels or grayscale. Experimenting with different input formats and the --outscale parameter can help you find the best configuration for your specific needs. Additionally, the model's performance can be tuned by adjusting the --tile size, which can be particularly useful when dealing with high-resolution or memory-intensive images.

Updated Invalid Date