sabuhi-model

Maintainer: sabuhigr

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| Github link | No Github link provided |
| Paper link | No paper link provided |

Model overview

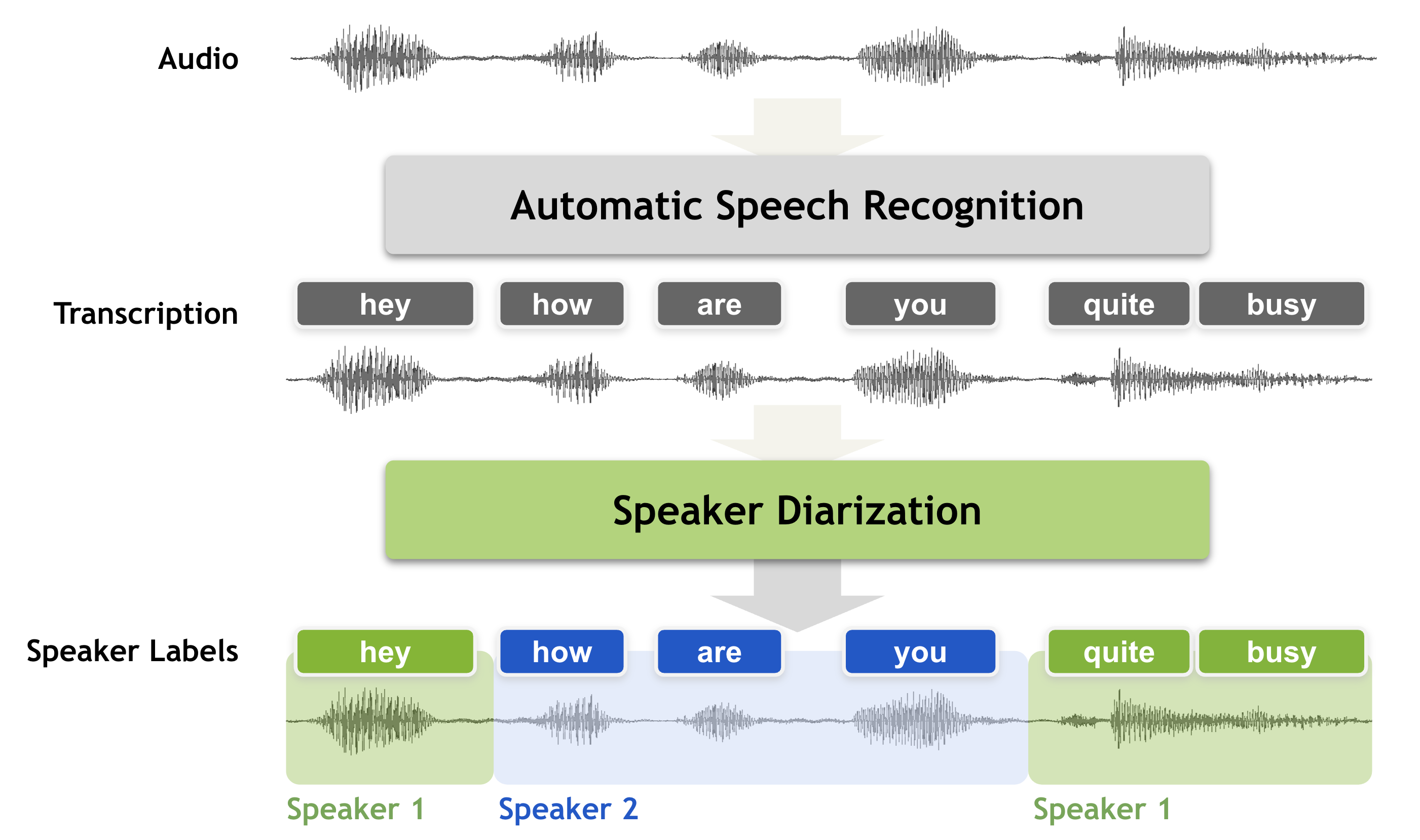

The sabuhi-model is a speech-to-text model developed by sabuhigr that builds on the Whisper model. It adds channel separation and speaker diarization, so it can transcribe audio with multiple speakers and distinguish between them.

The sabuhi-model can be seen as an extension of similar Whisper-based models like whisper-subtitles, whisper-large-v3, and the original whisper model. It offers additional capabilities for handling multi-speaker audio, making it a useful tool for transcribing interviews, meetings, and other scenarios with multiple participants.

Model inputs and outputs

The sabuhi-model takes in an audio file, along with several optional parameters to customize the transcription process. These include the choice of Whisper model, a Hugging Face token for speaker diarization, language settings, and various decoding options.

Inputs

- audio: The audio file to be transcribed

- model: The Whisper model to use, with "large-v2" as the default

- hf_token: Your Hugging Face token for speaker diarization

- language: The language spoken in the audio (can be left as "None" for language detection)

- translate: Whether to translate the transcription to English

- temperature: The temperature to use for sampling

- max_speakers: The maximum number of speakers to detect (default is 1)

- min_speakers: The minimum number of speakers to detect (default is 1)

- transcription: The format for the transcription (e.g., "plain text")

- initial_prompt: Optional text to provide as a prompt for the first window

- suppress_tokens: Comma-separated list of token IDs to suppress during sampling

- logprob_threshold: If the average log probability of the decoded tokens falls below this value, the decoding is treated as failed

- no_speech_threshold: If the no-speech probability of a segment is above this value, the segment is treated as silence

- condition_on_previous_text: Whether to provide the previous output as a prompt for the next window

- compression_ratio_threshold: If the gzip compression ratio of the decoded text exceeds this value, the decoding is treated as failed (a high ratio usually indicates repetitive output)

- temperature_increment_on_fallback: How much to raise the temperature on each retry when a decoding fails one of the thresholds above

Outputs

- The transcribed text, with speaker diarization and other formatting options as specified in the inputs
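The inputs listed above can be assembled into a request payload before calling the model. The following is a minimal sketch, not the maintainer's code: `build_input` is a hypothetical helper, the example URL and token are placeholders, and the commented-out call assumes the `replicate` Python client with a model identifier and version that you would need to look up yourself.

```python
from typing import Any, Dict, Optional


def build_input(
    audio_url: str,
    hf_token: str,
    min_speakers: int = 1,
    max_speakers: int = 1,
    language: Optional[str] = None,
    translate: bool = False,
) -> Dict[str, Any]:
    """Assemble a payload using the parameter names from the Inputs list."""
    if min_speakers < 1:
        raise ValueError("min_speakers must be at least 1")
    if max_speakers < min_speakers:
        raise ValueError("max_speakers must be >= min_speakers")

    payload: Dict[str, Any] = {
        "audio": audio_url,
        "model": "large-v2",  # documented default
        "hf_token": hf_token,
        "translate": translate,
        "min_speakers": min_speakers,
        "max_speakers": max_speakers,
        "transcription": "plain text",
    }
    # Assumption: leaving the language out lets the model detect it.
    if language is not None:
        payload["language"] = language
    return payload


payload = build_input("https://example.com/meeting.wav", "hf_placeholder", 2, 4)
print(sorted(payload))

# Hypothetical call; needs the `replicate` package, an API token,
# and the real version ID in place of <version>:
# import replicate
# output = replicate.run("sabuhigr/sabuhi-model:<version>", input=payload)
```

Validating the speaker bounds locally avoids spending a paid prediction on a request that cannot succeed.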

Capabilities

The sabuhi-model inherits the core speech recognition capabilities of the Whisper model, but also adds the ability to separate and identify multiple speakers within the audio. This makes it a useful tool for transcribing meetings, interviews, and other scenarios where multiple people are speaking.

What can I use it for?

The sabuhi-model can be used for a variety of applications that involve transcribing audio with multiple speakers, such as:

- Generating transcripts for interviews, meetings, or conference calls

- Creating subtitles or captions for videos with multiple speakers

- Improving the accessibility of audio-based content by providing text-based alternatives

- Enabling better search and indexing of audio-based content by generating transcripts

Companies working on voice assistants, video conferencing tools, or media production workflows may find the sabuhi-model particularly useful for their needs.

Things to try

One interesting aspect of the sabuhi-model is its ability to handle audio with multiple speakers and identify who is speaking at any given time. This could be particularly useful for analyzing the dynamics of a conversation, tracking who speaks the most, or identifying the main speakers in a meeting or interview.
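As a rough illustration of tracking who speaks the most, the sketch below totals speaking time per speaker. The page does not document the exact output structure, so the segment shape used here (`speaker`, `start`, `end`, `text`) and the `SPEAKER_00` style labels are assumptions; adapt the keys to whatever the model actually returns.

```python
from collections import defaultdict
from typing import Dict, List, TypedDict


class Segment(TypedDict):
    # Hypothetical diarized segment; not a documented output schema.
    speaker: str
    start: float  # seconds
    end: float    # seconds
    text: str


def speaking_time(segments: List[Segment]) -> Dict[str, float]:
    """Sum the duration of all segments attributed to each speaker."""
    totals: Dict[str, float] = defaultdict(float)
    for seg in segments:
        # Guard against malformed segments where end precedes start.
        totals[seg["speaker"]] += max(0.0, seg["end"] - seg["start"])
    return dict(totals)


segments: List[Segment] = [
    {"speaker": "SPEAKER_00", "start": 0.0, "end": 4.0, "text": "Welcome, everyone."},
    {"speaker": "SPEAKER_01", "start": 4.0, "end": 6.5, "text": "Thanks for having me."},
    {"speaker": "SPEAKER_00", "start": 6.5, "end": 10.0, "text": "Let's get started."},
]

totals = speaking_time(segments)
# Rank speakers from most to least talk time.
ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
for speaker, seconds in ranked:
    print(f"{speaker}: {seconds:.1f}s")
```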

Additionally, the model's various decoding options, such as the ability to suppress certain tokens or adjust the temperature, provide opportunities to experiment and fine-tune the transcription output to better suit specific use cases or preferences.
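To make the interaction between the decoding options concrete, here is a simplified sketch of a temperature fallback loop in the style of upstream Whisper. It is an illustration under assumptions, not this model's implementation: the default values are the upstream Whisper defaults rather than anything stated on this page, the function names are invented for the example, and `zlib` is used to measure the compression ratio.

```python
import zlib
from typing import List


def temperature_schedule(start: float = 0.0, increment: float = 0.2) -> List[float]:
    """Temperatures to try in order, stepping from `start` up to 1.0."""
    if increment <= 0:
        return [start]
    temps: List[float] = []
    t = start
    while t <= 1.0 + 1e-6:
        temps.append(round(t, 6))
        t += increment
    return temps


def compression_ratio(text: str) -> float:
    """Raw size divided by compressed size; repetitive text scores high."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def needs_fallback(
    text: str,
    avg_logprob: float,
    logprob_threshold: float = -1.0,
    compression_ratio_threshold: float = 2.4,
) -> bool:
    """True when a decoding should be retried at the next temperature."""
    too_repetitive = compression_ratio(text) > compression_ratio_threshold
    too_uncertain = avg_logprob < logprob_threshold
    return too_repetitive or too_uncertain


print(temperature_schedule())
print(needs_fallback("la " * 200, avg_logprob=-0.3))
```

In this sketch a decoder would walk the schedule in order and keep the first result for which `needs_fallback` is false, which is why a larger temperature_increment_on_fallback means fewer, more aggressive retries.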

Related Models

sabuhi-model-v2

The sabuhi-model-v2 is a Whisper-based model developed by sabuhigr that accepts domain-specific words to improve speech recognition accuracy. It is an extension of the sabuhi-model, which also includes channel separation and speaker diarization capabilities. This model can be compared to other Whisper-based models like whisper-subtitles, whisper-large-v3, the original whisper model, and the incredibly-fast-whisper model.

Model inputs and outputs

The sabuhi-model-v2 takes in an audio file and various optional parameters to customize the speech recognition process. The model can output the transcribed text in a variety of formats, including plain text, with support for translation to English. It also allows for the inclusion of domain-specific words to improve accuracy.

Inputs

- audio: The audio file to be transcribed

- model: The Whisper model to use, with the default being large-v2

- hf_token: A Hugging Face token for speaker diarization

- language: The language spoken in the audio, or None to perform language detection

- translate: A boolean flag to translate the text to English

- temperature: The temperature to use for sampling

- max_speakers: The maximum number of speakers to detect

- min_speakers: The minimum number of speakers to detect

- transcription: The format for the transcription output

- suppress_tokens: A comma-separated list of token IDs to suppress during sampling

- logprob_threshold: The minimum average log probability for the decoding to be considered successful

- no_speech_threshold: The probability threshold for considering a segment as silence

- domain_specific_words: A comma-separated list of domain-specific words to include

Outputs

- The transcribed text in the specified format

Capabilities

The sabuhi-model-v2 extends the capabilities of the original sabuhi-model by allowing for the inclusion of domain-specific words. This can improve the accuracy of the speech recognition, particularly in specialized domains. The model also supports speaker diarization and channel separation, which can be useful for transcribing multi-speaker audio.

What can I use it for?

The sabuhi-model-v2 can be used for a variety of applications that require accurate speech recognition, such as transcription, subtitling, or voice-controlled interfaces. By incorporating domain-specific words, the model can be particularly useful in industries or applications that require specialized vocabulary, such as medical, legal, or technical fields.

Things to try

One interesting thing to try with the sabuhi-model-v2 is to experiment with the domain-specific words feature. By providing a list of domain-specific terms, you can see how the model's accuracy and performance change compared to using the default Whisper model. Additionally, you can try adjusting the various input parameters, such as the temperature, suppressed tokens, and speaker detection thresholds, to see how they impact the transcription results.

whisper

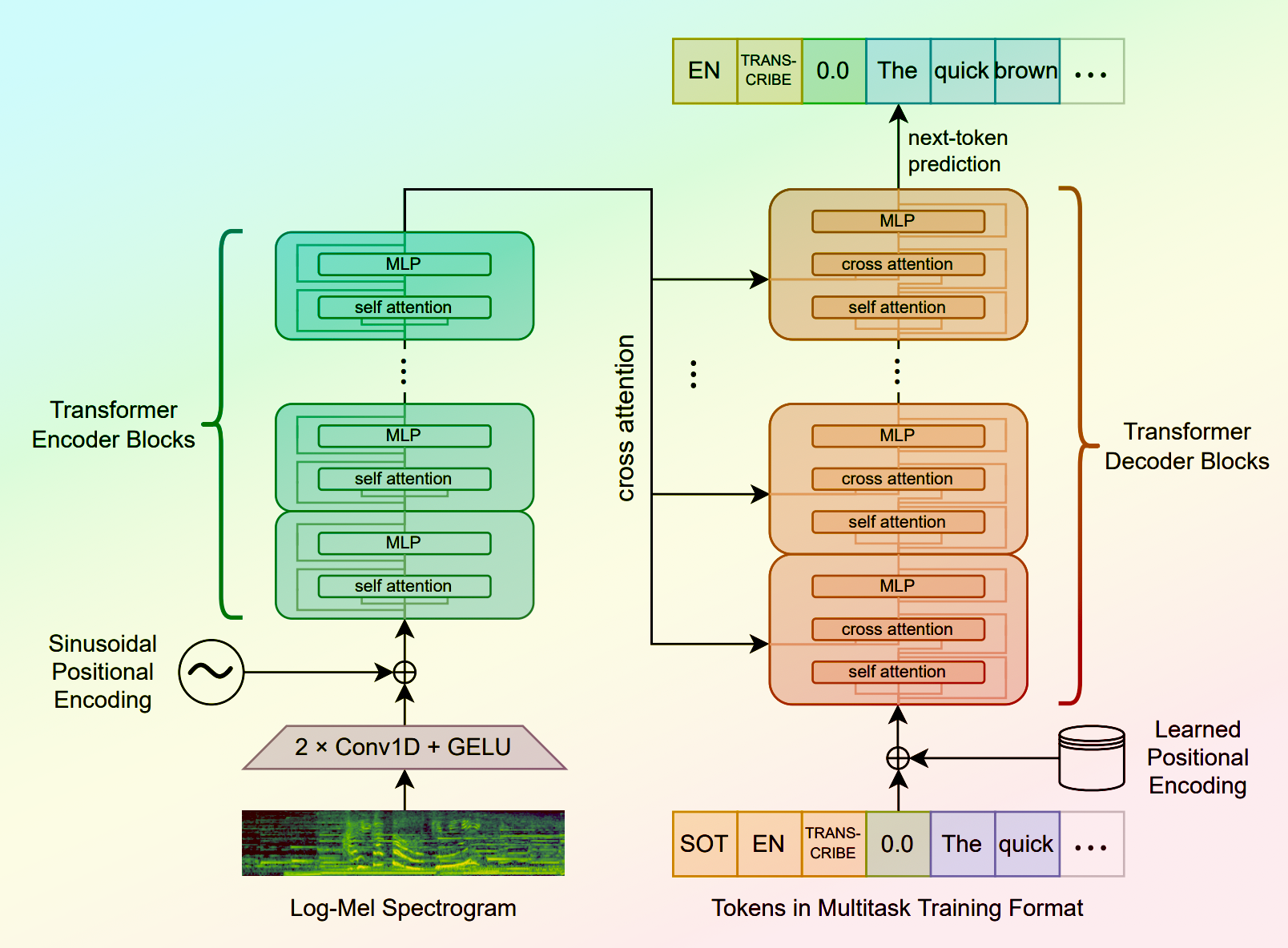

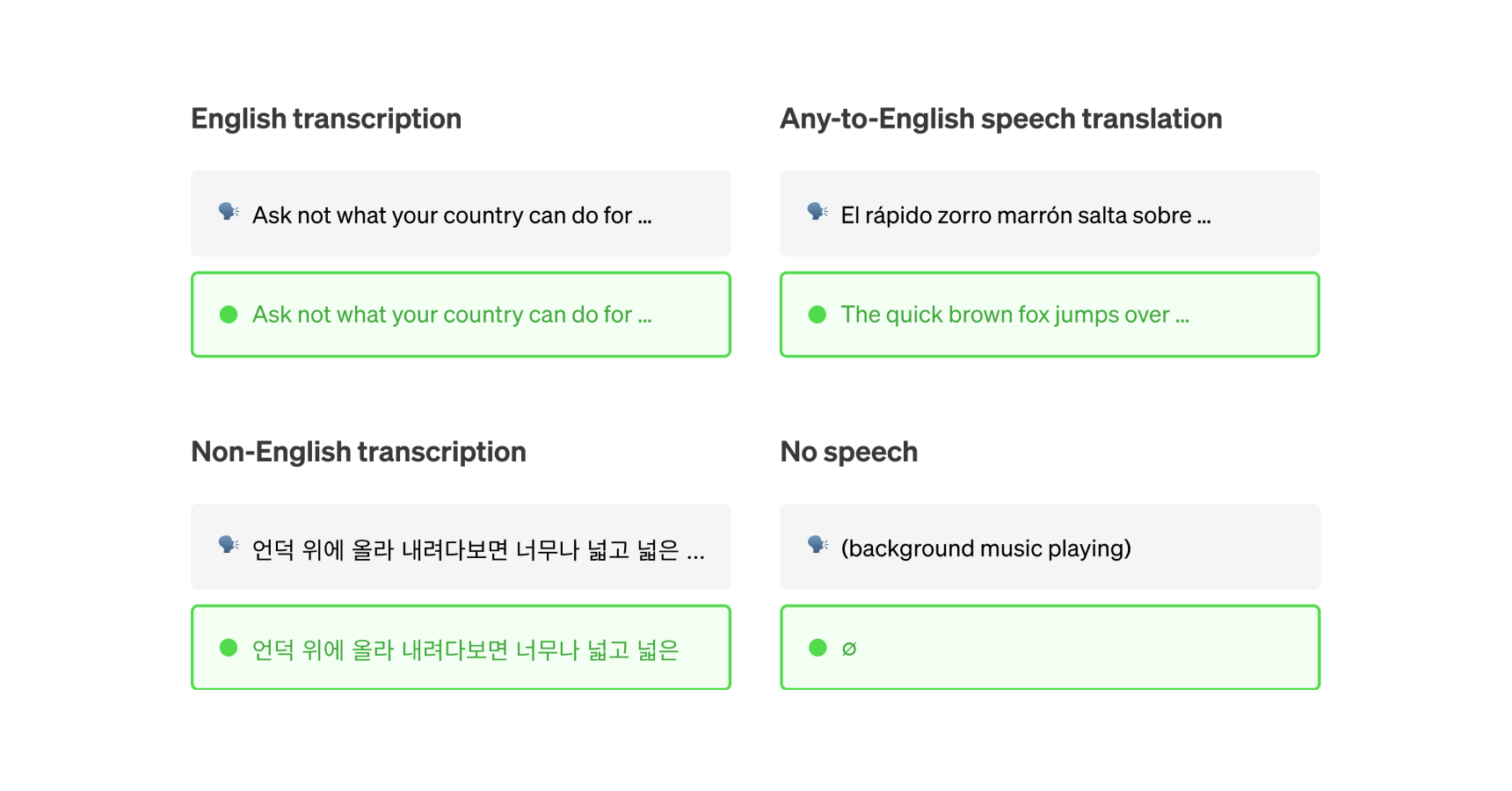

Whisper is a general-purpose speech recognition model developed by OpenAI. It is capable of converting speech in audio to text, with the ability to translate the text to English if desired. Whisper is based on a large Transformer model trained on a diverse dataset of multilingual and multitask speech recognition data. This allows the model to handle a wide range of accents, background noises, and languages. Similar models like whisper-large-v3, incredibly-fast-whisper, and whisper-diarization offer various optimizations and additional features built on top of the core Whisper model.

Model inputs and outputs

Whisper takes an audio file as input and outputs a text transcription. The model can also translate the transcription to English if desired. The input audio can be in various formats, and the model supports a range of parameters to fine-tune the transcription, such as temperature, patience, and language.

Inputs

- Audio: The audio file to be transcribed

- Model: The specific version of the Whisper model to use, currently only large-v3 is supported

- Language: The language spoken in the audio, or None to perform language detection

- Translate: A boolean flag to translate the transcription to English

- Transcription: The format for the transcription output, such as "plain text"

- Initial Prompt: An optional initial text prompt to provide to the model

- Suppress Tokens: A list of token IDs to suppress during sampling

- Logprob Threshold: The minimum average log probability threshold for a successful transcription

- No Speech Threshold: The threshold for considering a segment as silence

- Condition on Previous Text: Whether to provide the previous output as a prompt for the next window

- Compression Ratio Threshold: The maximum compression ratio threshold for a successful transcription

- Temperature Increment on Fallback: The temperature increase when the decoding fails to meet the specified thresholds

Outputs

- Transcription: The text transcription of the input audio

- Language: The detected language of the audio (if language input is None)

- Tokens: The token IDs corresponding to the transcription

- Timestamp: The start and end timestamps for each word in the transcription

- Confidence: The confidence score for each word in the transcription

Capabilities

Whisper is a powerful speech recognition model that can handle a wide range of accents, background noises, and languages. The model is capable of accurately transcribing audio and optionally translating the transcription to English. This makes Whisper useful for a variety of applications, such as real-time captioning, meeting transcription, and audio-to-text conversion.

What can I use it for?

Whisper can be used in various applications that require speech-to-text conversion, such as:

- Captioning and Subtitling: Automatically generate captions or subtitles for videos, improving accessibility for viewers.

- Meeting Transcription: Transcribe audio recordings of meetings, interviews, or conferences for easy review and sharing.

- Podcast Transcription: Convert audio podcasts to text, making the content more searchable and accessible.

- Language Translation: Transcribe audio in one language and translate the text to another, enabling cross-language communication.

- Voice Interfaces: Integrate Whisper into voice-controlled applications, such as virtual assistants or smart home devices.

Things to try

One interesting aspect of Whisper is its ability to handle a wide range of languages and accents. You can experiment with the model's performance on audio samples in different languages or with various background noises to see how it handles different real-world scenarios. Additionally, you can explore the impact of the different input parameters, such as temperature, patience, and language detection, on the transcription quality and accuracy.

whisper-subtitles

The whisper-subtitles model is a variation of OpenAI's Whisper, a general-purpose speech recognition model. Like the original Whisper model, this model is capable of transcribing speech in audio files, with support for multiple languages. The key difference is that whisper-subtitles is specifically designed to generate subtitles in either SRT or VTT format, making it a convenient tool for creating captions or subtitles for audio and video content.

Model inputs and outputs

The whisper-subtitles model takes the path to an audio file, the name of the Whisper model to use, and a subtitle format. It outputs a JSON object containing the transcribed text, with timestamps for each subtitle segment. This output can be easily converted to SRT or VTT subtitle formats.

Inputs

- audio_path: The path to the audio file to be transcribed

- model_name: The name of the Whisper model to use, such as tiny, base, small, medium, or large

- format: The subtitle format to generate, either srt or vtt

Outputs

- text: The transcribed text

- segments: A list of dictionaries, each containing the start and end times (in seconds) and the transcribed text for a subtitle segment

Capabilities

The whisper-subtitles model inherits the powerful speech recognition capabilities of the original Whisper model, including support for multilingual speech, language identification, and speech translation. By generating subtitles in standardized formats like SRT and VTT, this model makes it easier to incorporate high-quality transcriptions into video and audio content.

What can I use it for?

The whisper-subtitles model can be useful for a variety of applications that require generating subtitles or captions for audio and video content. This could include:

- Automatically adding subtitles to YouTube videos, podcasts, or other multimedia content

- Improving accessibility by providing captions for hearing-impaired viewers

- Enabling multilingual content by generating subtitles in different languages

- Streamlining the video production process by automating the subtitle generation task

Things to try

One interesting aspect of the whisper-subtitles model is its ability to handle a wide range of audio file formats and quality levels. Try experimenting with different types of audio, such as low-quality recordings, noisy environments, or accented speech, to see how the model performs. You can also compare the output of the various Whisper model sizes to find the best balance of accuracy and speed for your specific use case.
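The segments output described for whisper-subtitles (start and end times in seconds plus the transcribed text) maps directly onto SRT cues. The sketch below shows one way to do that conversion by hand. The dictionary keys `start`, `end`, and `text` are assumptions based on the description above, and `srt_timestamp` and `to_srt` are illustrative helpers, not part of the model.

```python
from typing import Dict, List, Union

# Assumed segment shape: {"start": seconds, "end": seconds, "text": str}
Segment = Dict[str, Union[float, str]]


def srt_timestamp(seconds: float) -> str:
    """Format seconds as the HH:MM:SS,mmm timestamp used by SRT."""
    millis = int(round(seconds * 1000))
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments: List[Segment]) -> str:
    """Render segments as numbered SRT cues separated by blank lines."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = srt_timestamp(float(seg["start"]))
        end = srt_timestamp(float(seg["end"]))
        text = str(seg["text"]).strip()
        blocks.append(f"{index}\n{start} --> {end}\n{text}\n")
    return "\n".join(blocks)


example: List[Segment] = [
    {"start": 0.0, "end": 2.5, "text": " Hello."},
    {"start": 2.5, "end": 5.0, "text": " Hi there."},
]
print(to_srt(example))
```

VTT output would differ mainly in the header line and in using a period instead of a comma before the milliseconds.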

whisper-large-v3

The whisper-large-v3 model is a general-purpose speech recognition model developed by OpenAI. It is a large Transformer-based model trained on a diverse dataset of audio data, allowing it to perform multilingual speech recognition, speech translation, and language identification. The model can transcribe speech across a wide range of languages, although its performance varies by language. Similar models like incredibly-fast-whisper, whisper-diarization, and whisperx-a40-large offer various optimizations and additional features built on top of the base whisper-large-v3 model.

Model inputs and outputs

The whisper-large-v3 model takes in audio files and can perform speech recognition, transcription, and translation tasks. It supports a wide range of input audio formats, including common formats like FLAC, MP3, and WAV. The model can identify the source language of the audio and optionally translate the transcribed text into English.

Inputs

- Filepath: Path to the audio file to transcribe

- Language: The source language of the audio, if known (e.g., "English", "French")

- Translate: Whether to translate the transcribed text to English

Outputs

- The transcribed text from the input audio file

Capabilities

The whisper-large-v3 model can handle a diverse range of audio data. It demonstrates strong performance across many languages, with the ability to identify the source language and optionally translate the transcribed text to English. Speaker diarization and word-level timestamps are not listed among this model's outputs; they are showcased by similar models like whisper-diarization and whisperx-a40-large.

What can I use it for?

The whisper-large-v3 model can be used for a variety of applications that involve transcribing speech, such as live captioning, audio-to-text conversion, and language learning. It can be particularly useful for transcribing multilingual audio, as it can identify the source language and provide accurate transcriptions. Additionally, the model's ability to translate the transcribed text to English opens up opportunities for cross-lingual communication and accessibility.

Things to try

One interesting aspect of the whisper-large-v3 model is its ability to handle a wide range of audio data, from high-quality studio recordings to low-quality field recordings. You can experiment with different types of audio input and observe how the model's performance varies. Additionally, you can try using the model's language identification capabilities to transcribe audio in unfamiliar languages and explore its translation functionality to bridge language barriers.
