sd15-consistency-decoder-vae

Maintainer: anotherjesse

2

| Property | Value |

|---|---|

| Run this model | Run on Replicate |

| API spec | View on Replicate |

| Github link | View on Github |

| Paper link | No paper link provided |

Create account to get full access

Model overview

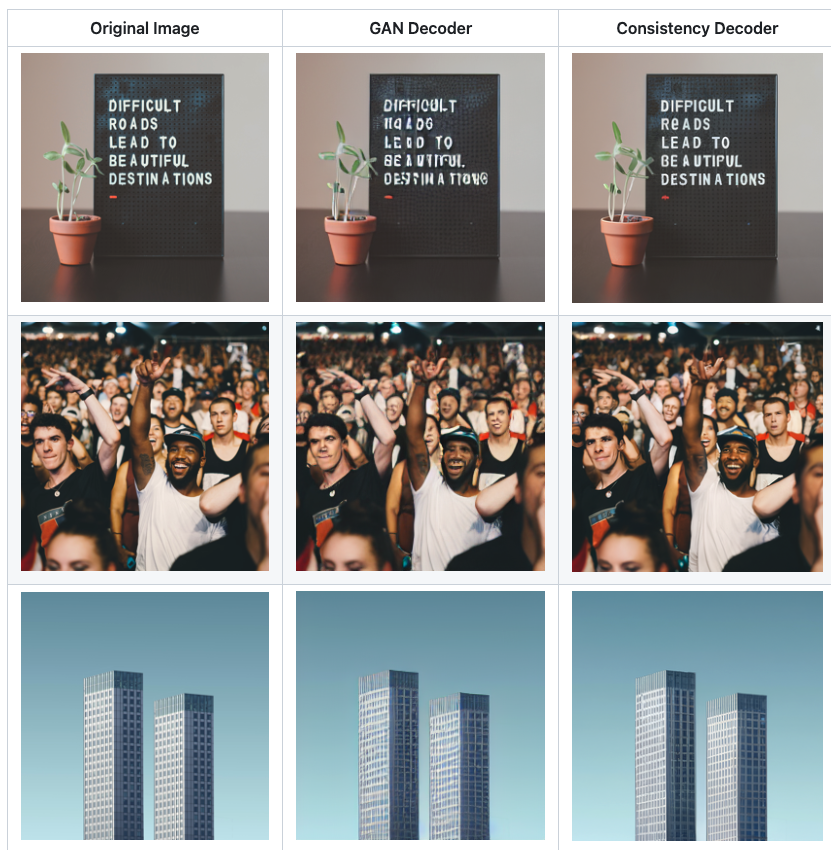

sd15-consistency-decoder-vae is an AI model developed by anotherjesse that builds upon the Stable Diffusion 1.5 and OpenAI's Consistency Decoder models. It aims to generate photo-realistic images from text prompts, similar to other popular text-to-image models like Stable Diffusion and SDv2 Preview.

Model inputs and outputs

sd15-consistency-decoder-vae takes in a text prompt as the main input, along with optional parameters like seed, image size, number of outputs, and more. The model then generates one or more images based on the provided prompt and settings.

Inputs

- Prompt: The text prompt that describes the desired image

- Seed: A random seed value to control image generation

- Width/Height: The desired width and height of the output image

- Num Outputs: The number of images to generate

- Guidance Scale: The scale for classifier-free guidance

- Negative Prompt: Specify things to not see in the output

- Consistency Decoder: Enable or disable the consistency decoder

- Num Inference Steps: The number of denoising steps

Outputs

- One or more generated images, returned as an array of image URLs

Capabilities

sd15-consistency-decoder-vae is capable of generating a wide variety of photorealistic images from text prompts, including scenes, portraits, and more. The consistency decoder feature aims to improve the coherence and stability of the generated images.

What can I use it for?

sd15-consistency-decoder-vae can be used for various creative and practical applications, such as generating concept art, product visualizations, and even personalized content. The model's flexibility allows users to experiment with different prompts and settings to create unique and compelling images. As with any text-to-image model, it can be a powerful tool for artists, designers, and content creators.

Things to try

Try experimenting with different prompts, including specific details, adjectives, and contextual information to see how the model responds. You can also play with the various input parameters, such as adjusting the guidance scale or number of inference steps, to find the settings that work best for your desired output.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

sdv2-preview

28

sdv2-preview is a preview of Stable Diffusion 2.0, a latent diffusion model capable of generating photorealistic images from text prompts. It was created by anotherjesse and builds upon the original Stable Diffusion model. The sdv2-preview model uses a downsampling-factor 8 autoencoder with an 865M UNet and OpenCLIP ViT-H/14 text encoder, producing 768x768 px outputs. It is trained from scratch and can be sampled with higher guidance scales than the original Stable Diffusion. Model inputs and outputs The sdv2-preview model takes a text prompt as input and generates one or more corresponding images as output. The text prompt can describe any scene, object, or concept, and the model will attempt to create a photorealistic visualization of it. Inputs Prompt**: A text description of the desired image content. Seed**: An optional random seed to control the stochastic generation process. Width/Height**: The desired dimensions of the output image, up to 1024x768 or 768x1024. Num Outputs**: The number of images to generate (up to 10). Guidance Scale**: A value that controls the trade-off between fidelity to the prompt and creativity in the generation process. Num Inference Steps**: The number of denoising steps used in the diffusion process. Outputs Images**: One or more photorealistic images corresponding to the input prompt. Capabilities The sdv2-preview model is capable of generating a wide variety of photorealistic images from text prompts, including landscapes, portraits, abstract concepts, and fantastical scenes. It has been trained on a large, diverse dataset and can handle complex prompts with multiple elements. What can I use it for? The sdv2-preview model can be used for a variety of creative and practical applications, such as: Generating concept art or illustrations for creative projects. Prototyping product designs or visualizing ideas. Creating unique and personalized images for marketing or social media. Exploring creative prompts and ideas without the need for traditional artistic skills. Things to try Some interesting things to try with the sdv2-preview model include: Experimenting with different types of prompts, from the specific to the abstract. Combining the model with other tools, such as image editing software or 3D modeling tools, to create more complex and integrated visuals. Exploring the model's capabilities for specific use cases, such as product design, character creation, or scientific visualization. Comparing the output of sdv2-preview to similar models, such as the original Stable Diffusion or the Stable Diffusion 2-1-unclip model, to understand the model's unique strengths and characteristics.

Updated Invalid Date

sdxl-recur

1

The sdxl-recur model is an exploration of image-to-image zooming and recursive generation of images, built on top of the SDXL model. This model allows for the generation of images through a process of progressive zooming and refinement, starting from an initial image or prompt. It is similar to other SDXL-based models like image-merge-sdxl, sdxl-custom-model, masactrl-sdxl, and sdxl, all of which build upon the core SDXL architecture. Model inputs and outputs The sdxl-recur model accepts a variety of inputs, including a prompt, an optional starting image, zoom factor, number of steps, and number of frames. The model then generates a series of images that progressively zoom in on the initial prompt or image. The outputs are an array of generated image URLs. Inputs Prompt**: The input text prompt that describes the desired image. Image**: An optional starting image that the model can use as a reference. Zoom**: The zoom factor to apply to the image during the recursive generation process. Steps**: The number of denoising steps to perform per image. Frames**: The number of frames to generate in the recursive process. Width/Height**: The desired width and height of the output images. Scheduler**: The scheduler algorithm to use for the diffusion process. Guidance Scale**: The scale for classifier-free guidance, which controls the balance between the prompt and the model's own generation. Prompt Strength**: The strength of the input prompt when using image-to-image or inpainting. Outputs The model generates an array of image URLs representing the recursively zoomed and refined images. Capabilities The sdxl-recur model is capable of generating images based on a text prompt, or starting from an existing image and recursively zooming and refining the output. This allows for the exploration of increasingly detailed and complex visual concepts, starting from a high-level prompt or initial image. What can I use it for? The sdxl-recur model could be useful for a variety of creative and artistic applications, such as generating concept art, visual storytelling, or exploring abstract and surreal imagery. The recursive zooming and refinement process could also be applied to tasks like product visualization, architectural design, or scientific visualization, where the ability to generate increasingly detailed and focused images could be valuable. Things to try One interesting aspect of the sdxl-recur model is the ability to start with an existing image and recursively zoom in, generating increasingly detailed and refined versions of the original. This could be useful for tasks like image enhancement, object detection, or content-aware image editing. Additionally, experimenting with different prompts, zoom factors, and other input parameters could lead to the discovery of unexpected and unique visual outputs.

Updated Invalid Date

sd15-cog

1

sd15-cog is a Stable Diffusion 1.5 inference model created by dazaleas. It includes several models and is designed for generating high-quality images. This model is similar to other Stable Diffusion models like ssd-lora-inference, cog-a1111-ui, and turbo-enigma, which also focus on text-to-image generation. Model inputs and outputs The sd15-cog model accepts a variety of inputs to customize the image generation process. These include the prompt, seed, steps, width, height, cfg scale, and more. The model outputs an array of image URLs. Inputs vae**: The vae to use seed**: The seed used when generating, set to -1 for random seed model**: The model to use steps**: The steps when generating width**: The width of the image height**: The height of the image prompt**: The prompt hr_scale**: The scale to resize cfg_scale**: CFG Scale defines how much attention the model pays to the prompt when generating enable_hr**: Generate high resoultion version batch_size**: Number of images to generate (1-4) hr_upscaler**: The upscaler to use when performing second pass sampler_name**: The sampler used when generating negative_prompt**: The negative prompt (For things you don't want) denoising_strength**: The strength when applying denoising hr_second_pass_steps**: The steps when performing second pass Outputs An array of image URLs Capabilities The sd15-cog model can generate high-quality, photorealistic images from text prompts. It supports a variety of customization options to fine-tune the output, such as adjusting the resolution, sampling method, and denoising strength. What can I use it for? You can use sd15-cog to create custom illustrations, portraits, and other images for a variety of applications, such as marketing materials, product designs, and social media content. The model's ability to generate diverse and realistic images makes it a powerful tool for creative professionals and hobbyists alike. Things to try Try experimenting with different prompts, sampling methods, and other settings to see how they affect the output. You can also explore the model's ability to generate images with specific styles or themes by adjusting the prompt and other parameters.

Updated Invalid Date

realistic-vision-v3.0

4

The realistic-vision-v3.0 is a Cog model based on the SG161222/Realistic_Vision_V3.0_VAE model, created by lucataco. It is a variation of the Realistic Vision family of models, which also includes realistic-vision-v5, realistic-vision-v5.1, realistic-vision-v4.0, realistic-vision-v5-img2img, and realistic-vision-v5-inpainting. Model inputs and outputs The realistic-vision-v3.0 model takes a text prompt, seed, number of inference steps, width, height, and guidance scale as inputs, and generates a high-quality, photorealistic image as output. The inputs and outputs are summarized as follows: Inputs Prompt**: A text prompt describing the desired image Seed**: A seed value for the random number generator (0 = random, max: 2147483647) Steps**: The number of inference steps (0-100) Width**: The width of the generated image (0-1920) Height**: The height of the generated image (0-1920) Guidance**: The guidance scale, which controls the balance between the text prompt and the model's learned representations (3.5-7) Outputs Output image**: A high-quality, photorealistic image generated based on the input prompt and parameters Capabilities The realistic-vision-v3.0 model is capable of generating highly realistic images from text prompts, with a focus on portraiture and natural scenes. The model is able to capture subtle details and textures, resulting in visually stunning outputs. What can I use it for? The realistic-vision-v3.0 model can be used for a variety of creative and artistic applications, such as generating concept art, product visualizations, or photorealistic portraits. It could also be used in commercial applications, such as creating marketing materials or visualizing product designs. Additionally, the model's capabilities could be leveraged in educational or research contexts, such as creating visual aids or exploring the intersection of language and visual representation. Things to try One interesting aspect of the realistic-vision-v3.0 model is its ability to capture a sense of photographic realism, even when working with fantastical or surreal prompts. For example, you could try generating images of imaginary creatures or scenes that blend the realistic and the imaginary. Additionally, experimenting with different guidance scale values could result in a range of stylistic variations, from more abstract to more detailed and photorealistic.

Updated Invalid Date