flux-360

Maintainer: igorriti

| Property | Value |
|---|---|
| Run this model | Run on Replicate |
| API spec | View on Replicate |
| Github link | View on Github |
| Paper link | No paper link provided |

Model overview

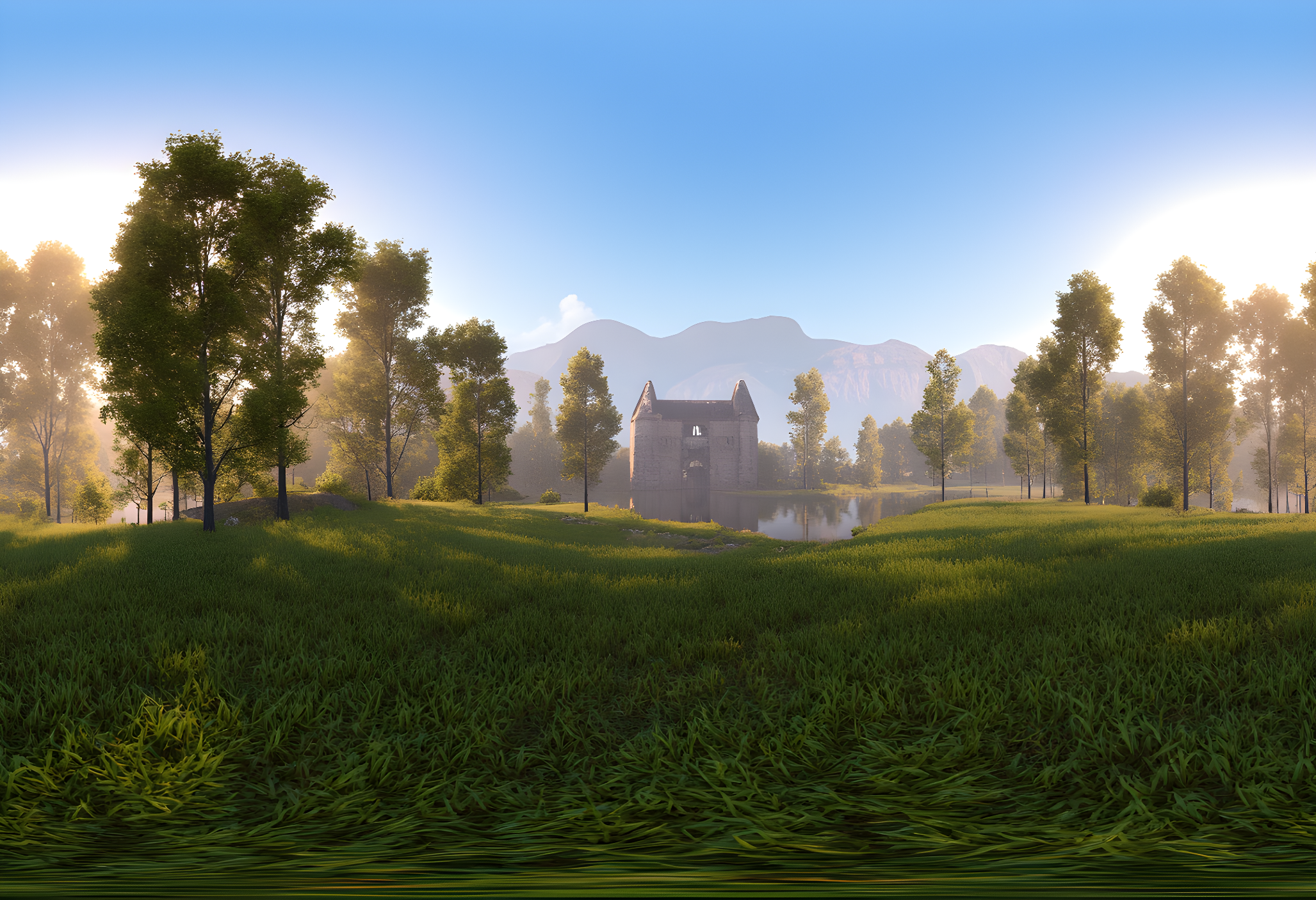

The flux-360 model generates 360-degree panoramic images. It is a fine-tune by the maintainer igorriti built on the FLUX.1 text-to-image model; its Model input selects between the FLUX.1 "dev" and "schnell" variants. Related models include sdxl-panorama and sdxl-panoramic, which build on SDXL for panoramic generation, and flux_img2img, a FLUX image-to-image workflow. flux-360 combines a FLUX base with a specific focus on 360-degree panoramas.

Model inputs and outputs

The flux-360 model takes a variety of inputs, including an image, a prompt, and various settings to control the generation process. The model can generate 360-degree panoramic images, with the ability to specify the aspect ratio, resolution, and number of output images.
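A full 360-degree panorama in equirectangular projection covers 360 degrees horizontally and 180 degrees vertically, so it has a 2:1 width-to-height ratio. The description does not state which projection flux-360 outputs, so treat 2:1 as an assumption. The hypothetical helper below picks custom Width and Height values for a 2:1 panorama from a pixel budget, also assuming dimensions must be multiples of 16.

```python
import math


def panorama_size(megapixels: float, multiple: int = 16) -> tuple[int, int]:
    """Return (width, height) for a 2:1 panorama near the given pixel budget.

    Assumptions: the output is equirectangular (360 x 180 degrees, hence 2:1)
    and both dimensions must be multiples of `multiple`.
    """
    if megapixels <= 0:
        raise ValueError("megapixels must be positive")
    # For a 2:1 image, width * height = 2 * height**2 = megapixels * 1e6.
    ideal_height = math.sqrt(megapixels * 1_000_000 / 2)
    # Round the height down to the required multiple, then derive the width.
    height = max(multiple, int(ideal_height // multiple) * multiple)
    return 2 * height, height


print(panorama_size(1.0))  # (1408, 704)
```

Rounding down keeps the result at or under the requested budget while preserving the exact 2:1 ratio.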

Inputs

- Prompt: A text description of the desired image content.

- Image: An input image that can be used for inpainting or image-to-image tasks.

- Mask: A mask image that specifies areas to be preserved or inpainted.

- Seed: A random seed value for reproducible generation.

- Model: The specific model to use for the generation, with options for a "dev" or "schnell" version.

- Width and Height: The desired dimensions of the generated image, if using a custom aspect ratio.

- Aspect Ratio: The aspect ratio for the generated image, with options like 1:1, 16:9, or custom.

- Num Outputs: The number of images to generate.

- Guidance Scale: The strength of the text-to-image guidance during the diffusion process.

- Prompt Strength: The strength of the prompt when using image-to-image or inpainting modes.

- Num Inference Steps: The number of steps to take during the diffusion process.
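The inputs above can be assembled into a request payload. This is a sketch under stated assumptions: the field names are assumed to be snake_case forms of the listed inputs (`prompt`, `model`, `aspect_ratio`, `width`, `height`, `num_outputs`, `seed`, `guidance_scale`, `num_inference_steps`, `prompt_strength`, `image`, `mask`), the helper names are hypothetical, and the exact model reference (often `owner/name:version`) should be copied from the model's API page. Only `replicate.run(ref, input=...)` comes from the official Python client.

```python
from typing import Any, Optional

# Assumption: replace with the exact reference from the API page; community
# models usually need ":<version-id>" appended.
MODEL_REF = "igorriti/flux-360"
VALID_MODELS = ("dev", "schnell")


def build_flux360_input(
    prompt: str,
    *,
    model: str = "dev",
    aspect_ratio: Optional[str] = None,
    width: Optional[int] = None,
    height: Optional[int] = None,
    num_outputs: int = 1,
    seed: Optional[int] = None,
    **extra: Any,
) -> dict:
    """Assemble an input dict using assumed snake_case field names."""
    if model not in VALID_MODELS:
        raise ValueError(f"model must be one of {VALID_MODELS}, got {model!r}")
    if (width is None) != (height is None):
        raise ValueError("width and height must be given together")
    if width is not None and aspect_ratio not in (None, "custom"):
        raise ValueError("width/height only apply when aspect_ratio is 'custom'")
    if num_outputs < 1:
        raise ValueError("num_outputs must be at least 1")

    payload: dict = {"prompt": prompt, "model": model, "num_outputs": num_outputs}
    if width is not None:
        # Explicit dimensions go with the custom aspect ratio.
        payload.update(aspect_ratio="custom", width=width, height=height)
    elif aspect_ratio is not None:
        payload["aspect_ratio"] = aspect_ratio
    if seed is not None:
        payload["seed"] = seed
    # Pass-through for guidance_scale, num_inference_steps, prompt_strength, image, mask.
    payload.update(extra)
    return payload


def run_flux360(payload: dict):
    """Send the request with the replicate client (needs REPLICATE_API_TOKEN)."""
    import replicate  # imported lazily so the builder works without the package

    return replicate.run(MODEL_REF, input=payload)
```

For example, `build_flux360_input("a misty mountain lake at dawn, 360 panorama", width=1408, height=704, seed=42, guidance_scale=3.5, num_inference_steps=28)` yields a payload for `run_flux360`; the guidance and step values are illustrative, not documented defaults. Fixing `seed` makes a run repeatable.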

Outputs

- Generated Images: The 360-degree panoramic images produced by the model, in the specified format (e.g., WEBP).
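A 360-degree viewer displays a panorama by mapping each pixel to a viewing direction. Assuming the generated images are equirectangular (not confirmed above), column position maps linearly to longitude and row position to latitude. The hypothetical function below shows that mapping, sampling at pixel centers and returning a unit direction vector with y pointing up.

```python
import math


def pixel_to_direction(x: float, y: float, width: int, height: int):
    """Map an equirectangular pixel (x, y) to (longitude, latitude, unit vector).

    Longitude spans -pi..pi left to right; latitude spans pi/2 (top) to
    -pi/2 (bottom). The +0.5 offsets sample at pixel centers.
    """
    lon = ((x + 0.5) / width) * 2 * math.pi - math.pi
    lat = math.pi / 2 - ((y + 0.5) / height) * math.pi
    # Spherical to Cartesian: y is up, z points forward at longitude 0.
    dx = math.cos(lat) * math.sin(lon)
    dy = math.sin(lat)
    dz = math.cos(lat) * math.cos(lon)
    return lon, lat, (dx, dy, dz)
```

Because the left and right edges both map to longitude plus or minus pi, they meet on the sphere; this is why seams at the image border matter for panoramas.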

Capabilities

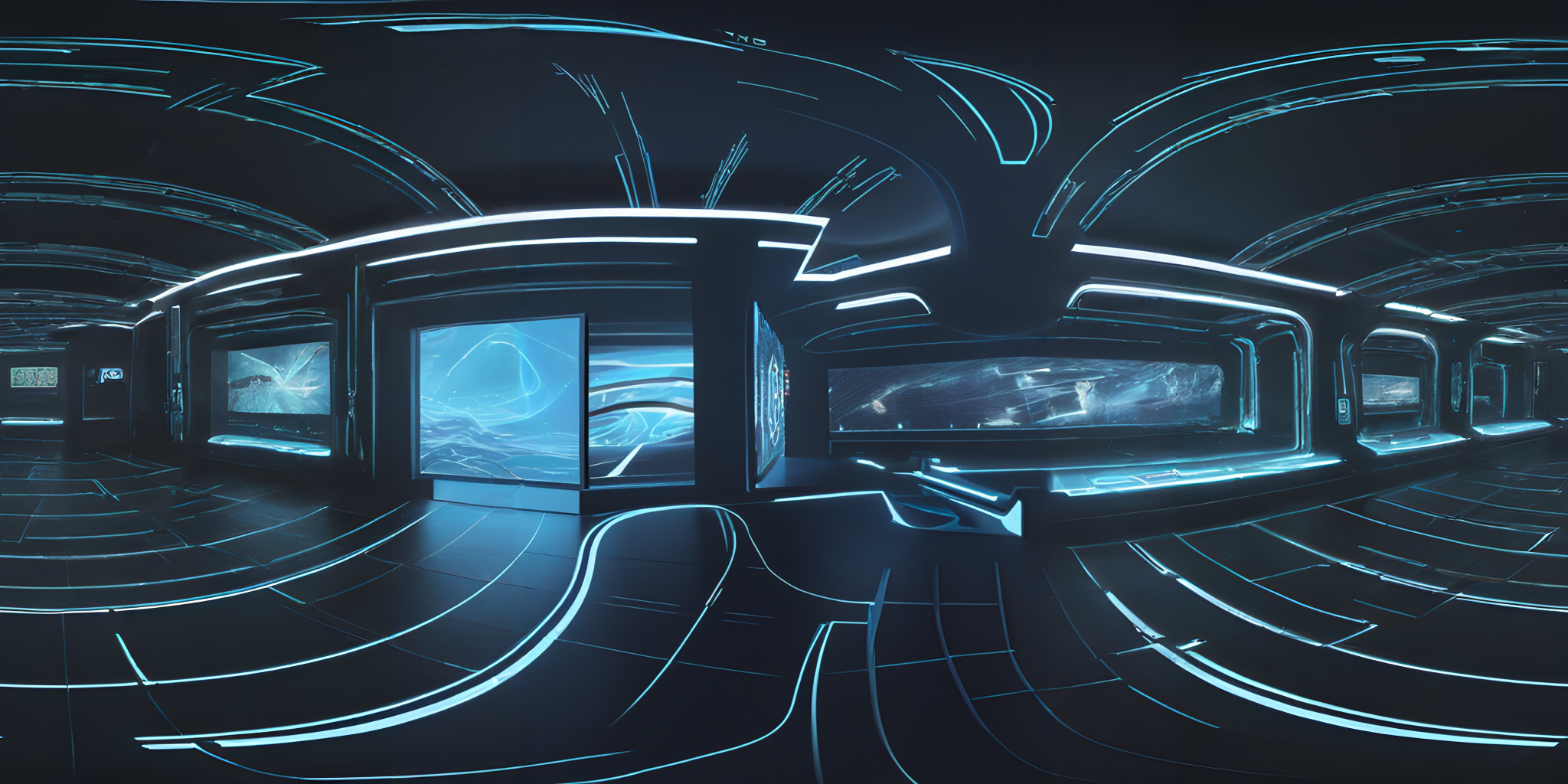

The flux-360 model generates high-quality 360-degree panoramic images from text prompts, producing immersive, realistic-looking panoramas across a wide range of scenes and environments. It can also be combined with additional LoRA weights, such as those from the flux-cinestill model, to layer other styles onto the panoramic output.
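Combining LoRAs is usually described with the standard LoRA formulation: each adapter contributes a low-rank update B·A to a base weight matrix W, scaled by a factor, so W' = W + s1·B1·A1 + s2·B2·A2. The "LoRA Scale" and "Extra LoRA Scale" inputs listed for flux-cinestill are knobs of this kind; whether these Replicate models apply the scales exactly this way is an assumption. The pure-Python sketch below (hypothetical helper names) shows the arithmetic on tiny matrices.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def merge_loras(base, loras):
    """Return base + sum(scale * B @ A) over (scale, B, A) triples.

    base is d_out x d_in; each B is d_out x r and each A is r x d_in, so
    B @ A is a low-rank update with the same shape as base.
    """
    merged = [list(row) for row in base]
    for scale, b, a in loras:
        delta = matmul(b, a)
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += scale * value
    return merged


identity = [[1, 0], [0, 1]]
style_a = (0.5, [[1], [0]], [[0, 1]])  # rank-1 update touching entry (0, 1)
style_b = (2.0, [[0], [1]], [[1, 0]])  # rank-1 update touching entry (1, 0)
print(merge_loras(identity, [style_a, style_b]))  # [[1.0, 0.5], [2.0, 1.0]]
```

Lowering a scale toward 0 fades that adapter's influence without touching the base weights.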

What can I use it for?

The flux-360 model is useful for applications such as virtual tourism, real estate, and video production. Its 360-degree panoramas can serve as immersive experiences, virtual tours, or background assets for creative projects. Control over aspect ratio and resolution also lets it cover use cases ranging from social media posts to high-resolution virtual experiences.

Things to try

Experiment with different prompts and settings to explore the range of the flux-360 model. Try combining it with other FLUX-compatible LoRAs, such as flux-cinestill, to achieve distinctive visual styles, or switch the Model input to the "schnell" variant for faster generation. You can also use the image-to-image and inpainting modes to refine or rework existing images into 360-degree panoramas.
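One inpainting use specific to panoramas is repairing the wrap-around seam. Because a 360-degree image wraps horizontally, you can circularly shift it by half its width so the left and right edges meet in the middle, mask a strip over that seam, inpaint, and shift back. This is a sketch: it assumes flux-360 follows the mask convention sdxl-panorama documents (white areas inpainted, black areas preserved), which the flux-360 inputs do not confirm, and images are plain lists of pixel rows.

```python
def roll_horizontal(image, shift):
    """Circularly shift every row right by `shift` pixels.

    Panoramas wrap horizontally, so this moves the seam without losing pixels.
    """
    rolled = []
    for row in image:
        k = shift % len(row)
        rolled.append(row[-k:] + row[:-k] if k else list(row))
    return rolled


def seam_mask(width, height, strip):
    """Mask with a white (255) vertical strip centred on the image.

    Assumed convention: 255 = regenerate, 0 = keep.
    """
    left = width // 2 - strip // 2
    right = left + strip
    return [[255 if left <= x < right else 0 for x in range(width)] for _ in range(height)]


# Workflow sketch (pano is a list of rows, w is its width):
# centred = roll_horizontal(pano, w // 2)        # bring the seam to the middle
# mask = seam_mask(w, len(pano), strip=64)       # repaint only around the seam
# ...send centred and mask as the Image and Mask inputs...
# restored = roll_horizontal(result, -(w // 2))  # undo the shift
```

After shifting right by `w // 2`, the original first column lands at index `w // 2` and the original last column at `w // 2 - 1`, so the centred strip straddles the seam.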

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

flux-cinestill

flux-cinestill is a FLUX-based LoRA model created by adirik that is designed to produce images with a cinematic, film-like aesthetic. It belongs to a family of FLUX-based models that also includes flux-schnell-lora, flux-dev-multi-lora, and flux-dev-lora.

Model inputs and outputs

The flux-cinestill model takes a text prompt as input and generates one or more images as output. The user can specify parameters such as the seed, aspect ratio, guidance scale, and number of inference steps to control the output.

Inputs

- Prompt: A text prompt describing the desired image.

- Seed: A random seed to ensure reproducible generation.

- Model: The specific model to use for inference (e.g., "dev" or "schnell").

- Width/Height: The desired dimensions of the output image.

- Aspect Ratio: The aspect ratio of the output image.

- Num Outputs: The number of images to generate.

- Guidance Scale: The strength of the text guidance during the diffusion process.

- Num Inference Steps: The number of steps to perform during the diffusion process.

- Extra LoRA: An additional LoRA model to combine with the main model.

- LoRA Scale: The scaling factor for the main LoRA model.

- Extra LoRA Scale: The scaling factor for the additional LoRA model.

- Replicate Weights: Custom weights to use for the Replicate model.

Outputs

- Output Images: One or more images generated based on the input prompt and parameters.

Capabilities

The flux-cinestill model generates high-quality images with a cinematic, film-like aesthetic. It can produce a wide variety of scenes and subjects, from realistic landscapes to surreal, dreamlike compositions. Its ability to blend different LoRA models allows further customization of the output.

What can I use it for?

The flux-cinestill model can be used for creative projects such as concept art, illustrations, or movie posters. Its cinematic style could be particularly useful for filmmakers, photographers, or artists looking to create a specific mood or atmosphere in their work. Its flexibility also makes it suitable for personal projects or experiments in visual arts and design.

Things to try

Try combining different LoRA models, adjusting the guidance scale and number of inference steps to achieve different styles, and generating a series of images with a cohesive cinematic aesthetic. Exploring a wide range of prompts can also lead to unexpected and intriguing results.

sdxl-panorama

The sdxl-panorama model is a version of the Stable Diffusion XL (SDXL) model that has been fine-tuned for panoramic image generation. It belongs to a family of SDXL-based models, such as sdxl-recur, sdxl-controlnet-lora, sdxl-outpainting-lora, sdxl-black-light, and sdxl-deep-down, each of which focuses on a specific aspect of image generation.

Model inputs and outputs

The sdxl-panorama model takes a variety of inputs, including a prompt, an image, a seed, and various parameters to control the output. It generates panoramic images based on the provided input.

Inputs

- Prompt: The text prompt that describes the desired image.

- Image: An input image for img2img or inpaint mode.

- Mask: An input mask for inpaint mode, where black areas will be preserved and white areas will be inpainted.

- Seed: A random seed to control the output.

- Width and Height: The desired dimensions of the output image.

- Refine: The refine style to use.

- Scheduler: The scheduler to use for the diffusion process.

- LoRA Scale: The LoRA additive scale, which is only applicable on trained models.

- Num Outputs: The number of images to output.

- Refine Steps: The number of steps to refine, which defaults to num_inference_steps.

- Guidance Scale: The scale for classifier-free guidance.

- Apply Watermark: A boolean that determines whether to apply a watermark to the output image.

- High Noise Frac: The fraction of noise to use for the expert_ensemble_refiner.

- Negative Prompt: An optional negative prompt to guide the image generation.

- Prompt Strength: The prompt strength when using img2img or inpaint mode.

- Num Inference Steps: The number of denoising steps to perform.

Outputs

- Output Images: The generated panoramic images.

Capabilities

The sdxl-panorama model generates high-quality panoramic images based on the provided inputs. It can produce detailed, visually striking landscapes, cityscapes, and other panoramic scenes. The model can also be used for image inpainting and manipulation, allowing users to refine and enhance existing images.

What can I use it for?

The sdxl-panorama model is useful for applications such as panoramic images for virtual tours, film and video production, architectural visualization, and landscape photography. Its ability to generate and manipulate panoramic images can be particularly valuable for businesses and creators who want to showcase products, services, or artistic visions in an immersive way.

Things to try

One interesting aspect of the sdxl-panorama model is its ability to generate seamless, coherent panoramic images from a variety of prompts and input images. Try different types of scenes, architectural styles, or natural landscapes to see how the model handles the challenges of panoramic generation. You can also explore its inpainting capabilities by providing partial images or masked areas and observing how it fills in the missing details.
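The High Noise Frac input splits denoising between the SDXL base model and the refiner. Assuming sdxl-panorama follows the diffusers "ensemble of experts" pattern, where the base model handles the first `high_noise_frac` of the schedule and the refiner finishes the rest, the split is roughly as computed below. The helper name is hypothetical and the split is approximate, since the actual cutoff is applied to scheduler timesteps.

```python
def split_steps(num_inference_steps: int, high_noise_frac: float) -> tuple[int, int]:
    """Approximate (base_steps, refiner_steps) for an ensemble-of-experts run.

    Assumption: the base model denoises the first `high_noise_frac` of the
    schedule (the high-noise part) and the refiner handles the remainder.
    """
    if not 0.0 <= high_noise_frac <= 1.0:
        raise ValueError("high_noise_frac must be between 0 and 1")
    base_steps = round(num_inference_steps * high_noise_frac)
    return base_steps, num_inference_steps - base_steps


print(split_steps(40, 0.8))  # (32, 8)
```

A higher fraction leaves fewer steps for the refiner, so it mainly polishes fine detail.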

flux_img2img

flux_img2img is a ready-to-use image-to-image workflow powered by the Flux AI model. It takes an input image and generates a new image based on a provided prompt. It is comparable to other image generation models such as sdxl-lightning-4step, flux-pro, flux-dev, realvisxl-v2-img2img, and ssd-1b-img2img, all of which generate high-quality images from text or image inputs.

Model inputs and outputs

flux_img2img takes an input image, a text prompt, and some optional parameters to control the generation process. It outputs a new image that reflects the input image modified according to the prompt.

Inputs

- Image: The input image to be modified.

- Seed: The seed for the random number generator; 0 means random.

- Steps: The number of steps to take during the image generation process.

- Denoising: The denoising value to use.

- Scheduler: The scheduler to use for the image generation.

- Sampler Name: The sampler to use for the image generation.

- Positive Prompt: The text prompt to guide the image generation.

Outputs

- Output: The generated image, returned as a URI.

Capabilities

flux_img2img can modify an input image in significant ways based on a text prompt. For example, you could start with a landscape photo and use a prompt like "an anime style fantasy castle in the foreground" to generate a new image with a castle added. The model can make large-scale changes to the image while maintaining high visual quality.

What can I use it for?

flux_img2img could be used for a variety of creative and practical applications, such as new product designs, concept art for games or movies, or personalized art pieces. Its ability to blend an input image with a text prompt makes it a powerful tool for creating unique visual content.

Things to try

Start with a simple input image, like a photograph of a person, and use different prompts to see how the model transforms it. For example, try prompts like "a cyberpunk version of this person" or "this person as a fantasy wizard" to see the range of possibilities.
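Because a Seed of 0 means "random", a run with seed 0 cannot be reproduced unless you know which seed was actually used. A simple client-side pattern is to resolve the seed yourself, log it, and send the concrete value. The helper below is hypothetical, and the 32-bit upper bound is an assumption rather than a documented limit.

```python
import random


def resolve_seed(seed: int, max_seed: int = 2**32 - 1) -> int:
    """Return a concrete seed, treating 0 as 'pick one at random'.

    Sending the returned value instead of 0 lets you log it and rerun the
    exact same generation later. max_seed is an assumed upper bound.
    """
    if seed < 0:
        raise ValueError("seed must be non-negative")
    if seed == 0:
        return random.SystemRandom().randint(1, max_seed)
    return seed


chosen = resolve_seed(0)
print(f"using seed {chosen}")  # record this to reproduce the result
```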

sdxl-panoramic

sdxl-panoramic is a custom AI model developed by Replicate that generates seamless 360-degree panoramic images. It builds upon Replicate's previous work on the sdxl, sdxl-inpainting, and sdxl-niji-se models, incorporating techniques like GFPGAN upscaling and image inpainting to create high-quality panoramic output.

Model inputs and outputs

The sdxl-panoramic model takes a text prompt as its input and generates a 360-degree panoramic image as output. The prompt describes the desired scene or content, and the model generates an image that matches it.

Inputs

- Prompt: A text description of the desired panoramic image.

- Seed: An optional integer value to control the random number generator and produce consistent outputs.

Outputs

- Image: A seamless 360-degree panoramic image generated from the input prompt.

Capabilities

The sdxl-panoramic model can generate a wide variety of panoramic scenes, from futuristic cityscapes to fantastical landscapes. It can handle complex prompts and produce highly detailed, immersive images. Its ability to seamlessly stitch together the panoramic output is a key feature that sets it apart from other text-to-image models.

What can I use it for?

The sdxl-panoramic model could be used to create backgrounds or environments for applications such as virtual reality experiences, video game environments, or architectural visualizations. Its panoramic output could also be used in marketing, advertising, or social media content to convey a sense of scale and immersion.

Things to try

Try prompts that describe expansive, panoramic scenes, such as "a sprawling cyberpunk city at night" or "a lush, alien world with towering mountains and flowing rivers." The model's ability to handle complex, detailed prompts and produce cohesive 360-degree images is a key strength to explore.
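A quick way to check whether a generated panorama is actually seamless is to compare its first and last pixel columns, which are neighbours once the image wraps around the sphere. The sketch below works on grayscale pixel rows (for example, after converting an image with Pillow's `convert("L")`) and compares the seam difference against the average difference between interior neighbouring columns. The helper names and the comparison heuristic are assumptions for illustration, not a metric any of these models report.

```python
def seam_error(image):
    """Mean absolute difference between the first and last column of each row."""
    diffs = [abs(row[0] - row[-1]) for row in image]
    return sum(diffs) / len(diffs)


def interior_error(image):
    """Mean absolute difference between horizontally adjacent interior pixels."""
    diffs = [abs(row[x] - row[x + 1]) for row in image for x in range(len(row) - 1)]
    return sum(diffs) / len(diffs)


pano = [[10, 20, 30, 12], [12, 22, 28, 13]]
print(seam_error(pano))      # 1.5
print(interior_error(pano))
```

If the seam error is much larger than the interior error, the wrap-around edge is likely visible in a 360-degree viewer.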